Any time you need to procedurally generate content or assets in a game, you're going to want an algorithm that creates something with both form and variation. The naive approach is to use purely random numbers. This certainly meets the variation requirement, but it has no form, since it is just static noise. What we want is a technique for generating controllable randomness in which each value isn't too different from its neighbors (i.e., not static). Pretty much any time you have this requirement, the go-to solution is almost always "Use Perlin noise, end of discussion". Easier said than done. If you've tried to build your own version based on Perlin's reference code or Hugo Elias's follow-up article on it, you've probably ended up lost and confused. Let's break the problem down into something simple and quick to implement.

Terminology

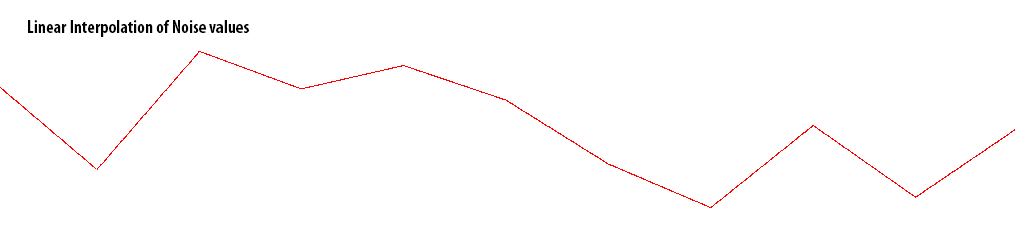

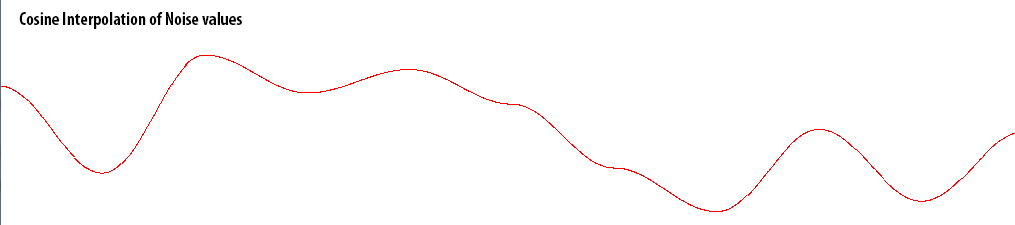

Noise is a set of random values over some fixed interval. Example: let X be the position in the interval and Y the noise value:

Noise = {(0,6), (1,3), (2,4), (3,8), (4,2), (5,1), (6,5), (7,6), (8,4), (9,2), (10,5)}

Static noise is a type of noise where there is no continuity between values. In the example above, Y does not vary smoothly from one X value to the next.

Smoothed noise is a type of noise where there is continuity between values. This continuity is achieved by interpolating between values. The most common interpolation function is linear interpolation. Cosine interpolation gives slightly smoother results at a slight cost in CPU.
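The two interpolation functions mentioned above can be sketched in a few lines of C#. This is a minimal sketch rather than code from the implementation later in this article; the class and method names are my own, and `t` is assumed to be the fractional distance (0 to 1) between two neighboring noise values `a` and `b`.

```csharp
using System;

// Hypothetical helper class: blends between two neighboring noise values a and b.
// t is the fractional position between them (0 = at a, 1 = at b).
public static class Interp
{
    // Linear interpolation: a straight line from a to b.
    public static float Lerp(float a, float b, float t)
    {
        return a + (b - a) * t;
    }

    // Cosine interpolation: remaps t onto half a cosine wave so the curve
    // flattens out near each sample point, then blends with the remapped weight.
    public static float Cosine(float a, float b, float t)
    {
        float f = (1f - (float)Math.Cos(t * Math.PI)) * 0.5f;
        return a + (b - a) * f;
    }
}
```

As a quick comparison, `Interp.Cosine(0f, 1f, 0.25f)` returns about 0.146 where `Interp.Lerp(0f, 1f, 0.25f)` returns 0.25: the cosine curve eases in and out of each sample point, which removes the sharp corners that linear interpolation leaves at every sample. The extra `Math.Cos` call is where the slight CPU cost comes from.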

Amplitude is the maximum amount of vertical variation in your noise values (think of sine waves). Frequency is how often a new noise value occurs along your scale; it is the inverse of the period, the spacing between consecutive noise values. In the example above, the frequency is 1.0 because a new noise value is generated at every integer X. If we changed the period to 0.5, our frequency would double to 2.0.
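To make amplitude and frequency concrete, here is a minimal sketch of a single layer of 1D smoothed noise. The names are hypothetical, linear interpolation is assumed, and the caller is assumed to keep `x * frequency` inside the lattice.

```csharp
using System;

// Hypothetical helper: one layer of 1D smoothed (value) noise.
public static class NoiseLayer1D
{
    // Returns `count` seeded random lattice values between 0 and amplitude.
    public static float[] MakeLattice(int count, float amplitude, int seed)
    {
        var rng = new Random(seed);
        var values = new float[count];
        for (int i = 0; i < count; i++)
            values[i] = (float)(rng.NextDouble() * amplitude);
        return values;
    }

    // Samples the layer at position x. With frequency f, a new lattice value is
    // reached every 1/f units along X (the period), so doubling f halves the period.
    // Assumes 0 <= x * frequency < lattice.Length - 1.
    public static float Sample(float[] lattice, float x, float frequency)
    {
        float pos = x * frequency;             // position measured in lattice steps
        int i = (int)Math.Floor(pos);          // lattice point at or left of pos
        float t = pos - i;                     // fractional distance to the next point
        float a = lattice[i];
        float b = lattice[i + 1];
        return a + (b - a) * t;                // linear interpolation between neighbors
    }
}
```

Using the first four values from the example above, `NoiseLayer1D.Sample(new[] { 6f, 3f, 4f, 8f }, 0.5f, 1f)` falls halfway between the first two values and returns 4.5, while the same call with a frequency of 2 lands exactly on the second value and returns 3, because the period has halved.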

Concept: how it works

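There is a distinction between Perlin's own noise function and the common implementation described here. Perlin's noise function does not interpolate between random values; it interpolates random gradients (slopes) assigned to each lattice point. The common implementation interpolates random values and adds layers of them together. The end result looks pretty much the same.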
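For comparison, here is a minimal 1D sketch of gradient noise. It is not Perlin's reference code: the names and the `gradients` array are assumptions, and it uses the 6t^5 - 15t^4 + 10t^3 fade curve from Perlin's later "improved noise" (his original used 3t^2 - 2t^3).

```csharp
using System;

// Hypothetical minimal 1D gradient-noise sketch.
public static class GradientNoise1D
{
    // Quintic fade curve 6t^5 - 15t^4 + 10t^3 (smooth at t = 0 and t = 1).
    static float Fade(float t) => t * t * t * (t * (t * 6f - 15f) + 10f);

    // gradients[i] is the random slope assigned to lattice point i.
    // Assumes 0 <= x < gradients.Length - 1.
    public static float Sample(float[] gradients, float x)
    {
        int i = (int)Math.Floor(x);
        float t = x - i;
        // Each lattice point contributes its gradient times the signed distance from it.
        float left = gradients[i] * t;
        float right = gradients[i + 1] * (t - 1f);
        // Blend the two contributions with the fade curve instead of plain t.
        float u = Fade(t);
        return left + (right - left) * u;
    }
}
```

Notice that gradient noise is always zero at the lattice points; the randomness lives in the slopes. The value noise used in the rest of this article instead passes through a random value at every lattice point.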

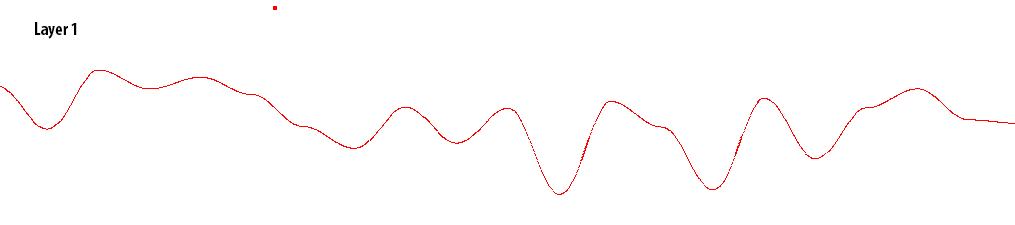

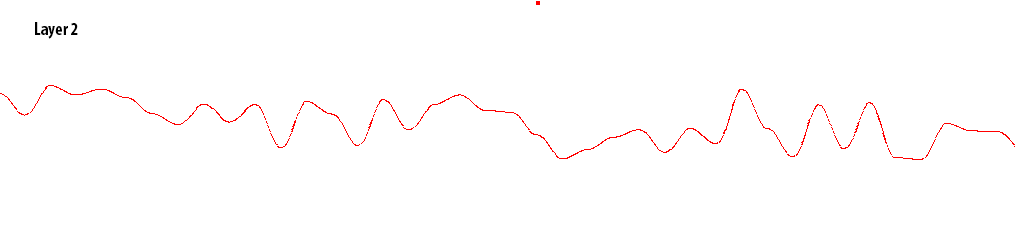

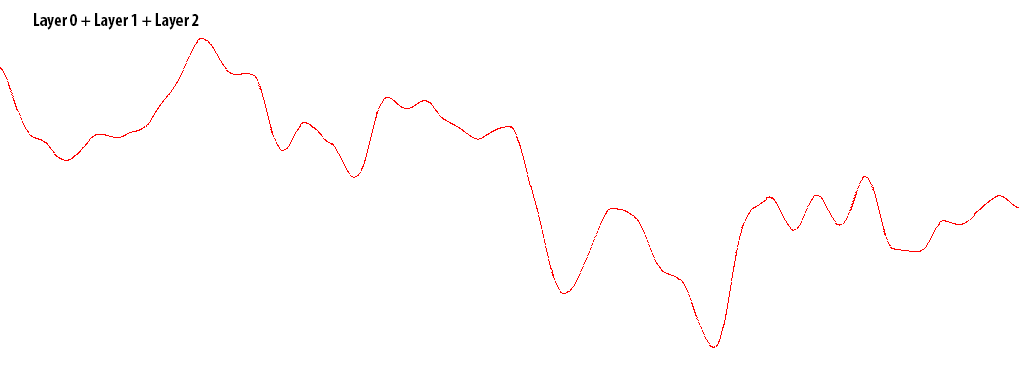

The core idea behind the layered summation approach to noise generation is that we first generate a very rough layer by generating noise with low frequency and high amplitude. Let's call this "Layer 0". Then, we create another layer with half the amplitude and double the frequency. Let's call this "Layer 1". We keep creating additional layers, each with half the amplitude and double the frequency of the previous one. When all of our layers have been created, we add them together to get the final result. Layer 0 tends to give the final output noise its general contours (think of mountains and valleys). Each successive layer adds a bit of variation to those contours (think of the roughness of the mountains and valleys, all the way down to pebbles). Here is an example of 3 additive noise layers in 1 dimension:

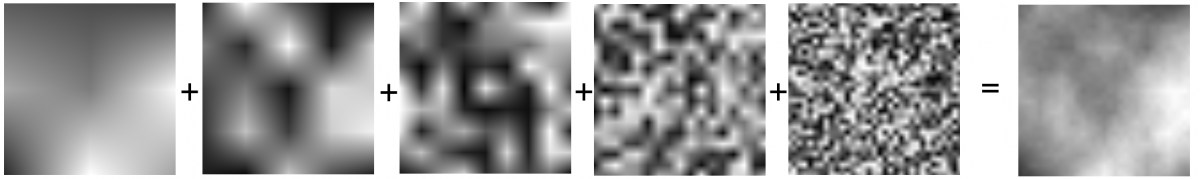
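The layering just described can be sketched in 1D in a few lines. This is a simplified sketch rather than the article's 2D code: it assumes linear interpolation, a lattice of 2^(k+1) cells for layer k (mirroring the grid sizes used in `GenerateLayers` later on), and hypothetical names.

```csharp
using System;

// Hypothetical helper: sums several layers of 1D value noise.
public static class LayeredNoise1D
{
    // Returns `length` samples. Layer k spans the output with 2^(k+1) lattice cells
    // and has amplitude startAmplitude / 2^k: each layer halves the amplitude and
    // doubles the frequency of the previous one.
    public static float[] Sum(int length, int layerCount, float startAmplitude, int seed)
    {
        var rng = new Random(seed);
        var result = new float[length];
        float amplitude = startAmplitude;

        for (int layer = 0; layer < layerCount; layer++)
        {
            int cells = 1 << (layer + 1);              // 2, 4, 8, ... cells across the output
            var lattice = new float[cells + 1];        // one more point than cells
            for (int p = 0; p <= cells; p++)
                lattice[p] = (float)(rng.NextDouble() * amplitude);

            for (int x = 0; x < length; x++)
            {
                float pos = (float)x / length * cells; // position in lattice cells, in [0, cells)
                int cell = (int)pos;
                float t = pos - cell;
                // Linearly interpolate this layer and add it to the running sum.
                result[x] += lattice[cell] + (lattice[cell + 1] - lattice[cell]) * t;
            }

            amplitude /= 2f;                            // halve the amplitude for the next layer
        }
        return result;
    }
}
```

Because each layer's amplitude is half the previous one, the summed values always stay below twice `startAmplitude`; layer 0 dominates the overall shape and each extra layer only adds finer detail.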

When it comes to 2D, the underlying principle is the same:


Noise generation

There are four distinct parts to generating the final noise texture:

1) Generate a set of random numbers for each layer, with the range being a function of amplitude and the quantity being a function of the layer resolution.
2) Determine which interpolation method you want to use for smoothing (linear, cubic, etc.).
3) For each point in the output, create interpolated noise values for all X, Y values which fall between noise intervals.
4) Sum all interpolated noise layers together to get the final product.

In the code below, I've heavily commented and explained each step of the process to generate 2D noise with as much simplicity as possible.

```csharp
using System;
using System.Linq;                      // Max()
using Microsoft.Xna.Framework;          // Color
using Microsoft.Xna.Framework.Graphics; // Texture2D

// Illustrative container class. BaseSettings.Graphics is the GraphicsDevice from my own project;
// substitute your own.
public class NoiseGenerator
{
    // The finished noise texture, ready for drawing.
    public Texture2D TextureResult;

    /// <summary>
    /// Entry point: Call this to create a 2D noise texture.
    /// </summary>
    public void Noise2D()
    {
        // Set the size of our noise texture.
        int size = 256;

        // This will contain the sum of all noise layers added together.
        float[] NoiseSummation = new float[size * size];

        // Let's create our layers of noise (amplitude 8, 8 layers). Take careful note that the noise layers
        // do not care about the dimensions of our output texture. (With size = 256, more than 8 layers
        // would make the grid step in Smooth_and_Merge drop to zero.)
        float[][,] noiseLayers = GenerateLayers(8, 8);

        // Now, we have to merge our layers of noise into a summed product.
        // This is when the size of our result becomes important.
        Smooth_and_Merge(noiseLayers, ref NoiseSummation, size);

        // Now, we have a summation of noise values. We need to normalize them so that they are between
        // 0.0 -> 1.0. This is necessary for generating our RGB values correctly and working in a known
        // space of ranged values. All values are non-negative, so dividing by the maximum is enough.
        float max = NoiseSummation.Max();
        for (int a = 0; a < NoiseSummation.Length; a++)
            NoiseSummation[a] /= max;

        // At this point, we're done. Everything else is just for using/displaying the generated noise product.
        // I've added the following for illustration purposes:

        // Create a block of color data to be used for building our texture.
        Color[] vals = new Color[size * size];

        // Convert the noise data into color information. Note that I'm generating a grayscale image by putting
        // the same noise data in each color channel. If you wanted, you could create three noise values and put
        // them in separate color channels. The red channel could store terrain height map information. The green
        // channel could contain vegetation maps. The blue channel could store anything else.
        for (int a = 0; a < NoiseSummation.Length; a++)
            vals[a] = new Color(NoiseSummation[a], NoiseSummation[a], NoiseSummation[a]);

        // Create the output texture and copy the color data into it. The texture is ready for drawing on screen.
        TextureResult = new Texture2D(BaseSettings.Graphics, size, size);
        TextureResult.SetData(vals);
    }

    /// <summary>
    /// Takes the layers of noise data and generates a block of noise data at the given resolution.
    /// </summary>
    /// <param name="noiseData">Layers of noise data to merge into the final result</param>
    /// <param name="finalResult">The resulting output of merging layers of noise</param>
    /// <param name="resolution">The size resolution of the output texture</param>
    private void Smooth_and_Merge(float[][,] noiseData, ref float[] finalResult, int resolution)
    {
        // This takes all of the layers of noise and creates a region of interpolated values
        // using bilinear interpolation.
        // http://en.wikipedia.org/wiki/Bilinear_interpolation
        int totalLayers = noiseData.Length;
        for (int layer = 0; layer < totalLayers; layer++)
        {
            // Each layer is a square grid, so either dimension gives its side length (e.g., 5 for a 5x5 grid).
            int squareSize = noiseData[layer].GetLength(0);

            // This is our step size between noise data points for this layer. As we go into higher
            // resolution layers, this value gets smaller. It divides evenly for power-of-two resolutions.
            int gridWidth = resolution / (squareSize - 1);

            // Go through every X/Y coordinate in the resolution.
            for (int y = 0; y < resolution; y++)
            {
                for (int x = 0; x < resolution; x++)
                {
                    // Map each X/Y coordinate to the grid cell (lower-left noise point) that contains it.
                    int gridY = y / gridWidth;
                    int gridX = x / gridWidth;

                    // Define the four corners of the grid cell.
                    float x1 = gridX * gridWidth;
                    float x2 = x1 + gridWidth;
                    float y1 = gridY * gridWidth;
                    float y2 = y1 + gridWidth;

                    // BILINEAR INTERPOLATION (see Wikipedia article):
                    // perform our linear interpolations on the X-axis...
                    float R1 = ((x2 - x) / gridWidth) * noiseData[layer][gridX, gridY]
                             + ((x - x1) / gridWidth) * noiseData[layer][gridX + 1, gridY];
                    float R2 = ((x2 - x) / gridWidth) * noiseData[layer][gridX, gridY + 1]
                             + ((x - x1) / gridWidth) * noiseData[layer][gridX + 1, gridY + 1];

                    // ...then finish by interpolating on the Y-axis to get our final value.
                    float final = ((y2 - y) / gridWidth) * R1 + ((y - y1) / gridWidth) * R2;

                    // Summation step: add the interpolated result to our existing noise data.
                    finalResult[y * resolution + x] += final;
                }
            }
        }
    }

    /// <summary>
    /// Creates a series of layers with STATIC noise data in each layer.
    /// </summary>
    /// <param name="amplitude">The maximum variation in noise</param>
    /// <param name="layerCount">The number of layers you want to generate. Each successive layer has roughly four times as many noise points!</param>
    /// <returns>A jagged array of floats which contain the noise data points per each layer</returns>
    private float[][,] GenerateLayers(float amplitude, int layerCount)
    {
        // Note that we do not care about period or frequency here. We're still resolution independent.
        float[][,] ret = new float[layerCount][,];

        // A seeded pseudo random number generator. Use different fixed seeds to generate different noise maps.
        Random r = new Random(5);

        // We want to generate noise points for each layer.
        for (int layer = 0; layer < layerCount; layer++)
        {
            // The number of noise points we need per layer is a function of the layer resolution (implied by the layer ID).
            // At each successive layer, we halve our amplitude and double our frequency. This becomes important.
            // At the lowest resolution, 0, we need at least a 3x3 grid of points. (2+1)
            // At resolution 1, we need at least a 5x5 grid of noise points. (4+1)
            // At resolution 2, we need at least a 9x9 grid of noise points, etc. (8+1)
            // We can generalize this to F(x) = 2^(x+1) + 1, where x is the layer resolution.
            int arraySize = (int)Math.Pow(2, layer + 1) + 1;
            ret[layer] = new float[arraySize, arraySize];

            // For each X/Y point in the noise grid for our current layer, generate a random number
            // between 0 and our current amplitude.
            for (int y = 0; y < arraySize; y++)
                for (int x = 0; x < arraySize; x++)
                    ret[layer][x, y] = (float)(r.NextDouble() * amplitude);

            // Now, we halve our amplitude and repeat for the next layer.
            amplitude /= 2.0f;
        }
        return ret;
    }
}
```

Usage and Examples

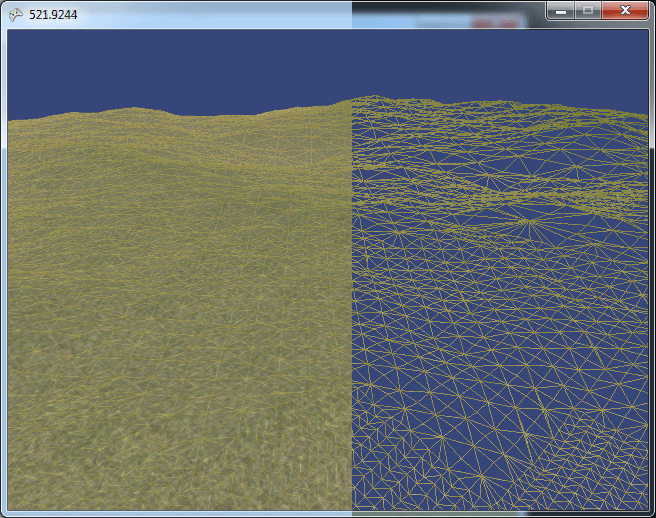

Terrain: I am using this noise technique to procedurally generate the height maps for terrain. My terrain uses GeoMipMapping for calculating Level of Detail (LOD). Although I haven't tried to implement it yet, I could use the individual noise layers as a natural LOD for terrain: as the camera gets closer to the terrain, merging more layers into the final noise product would increase the terrain detail automatically.
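Since this hasn't been implemented yet, the following is only a sketch of the idea under stated assumptions: each layer has already been interpolated to the terrain's resolution and kept in its own array (rather than summed in place as the 2D code above does), and the caller picks `detailLevel`, the number of layers to merge, from the camera distance. All names are hypothetical.

```csharp
using System;

// Hypothetical LOD helper: merges only the first `detailLevel` layers.
public static class NoiseLod
{
    // layers[k] holds layer k, already interpolated to the output resolution.
    // Layers are ordered coarse to fine, so fewer layers means less detail.
    public static float[] Merge(float[][] layers, int detailLevel)
    {
        int count = Math.Min(detailLevel, layers.Length);
        var result = new float[layers[0].Length];
        for (int k = 0; k < count; k++)
            for (int i = 0; i < result.Length; i++)
                result[i] += layers[k][i];
        return result;
    }
}
```

Merging two layers gives a coarse heightmap for distant terrain; merging all of them gives the full detail. Since the coarse layers always come first, every level of detail shares the same general contours.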

Clouds: Clouds can be created very easily with 2D noise. Option 1: If you switch to 3D noise and let the Z axis represent time, you can create the illusion of clouds changing shape over time. Option 2: If you pre-generate a bunch of cloud textures with low opacity, you can layer them on top of each other and let the layers create the illusion of the clouds changing shape.

Distribution patterns: You can also use noise to procedurally work out distribution patterns for things such as trees, grass, shrubs, etc. Since your noise is continuous and ranges from 0.0 -> 1.0, you can arbitrarily decide that "all values between 0.25 and 0.35 indicate where on the terrain shrubs shall be positioned".

Density maps: You can also use noise maps to determine the density of "stuff", whatever that happens to be. Height maps are actually just a type of density map: they describe the density of dirt above sea level.

Texturing: You can tweak various values of the final noise map to procedurally generate some very interesting textures (marble, wood, fire, etc.).
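The distribution-pattern idea above can be sketched as a simple band test over the normalized noise values. This is a minimal sketch with hypothetical names; it assumes the same row-major layout (`y * size + x`) that the 2D code uses for `NoiseSummation`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: finds grid cells whose normalized noise value lies in [min, max).
public static class Distribution
{
    public static List<(int X, int Y)> CellsInBand(float[] noise, int size, float min, float max)
    {
        var cells = new List<(int X, int Y)>();
        for (int y = 0; y < size; y++)
        {
            for (int x = 0; x < size; x++)
            {
                float v = noise[y * size + x];   // row-major, like NoiseSummation
                if (v >= min && v < max)
                    cells.Add((x, y));
            }
        }
        return cells;
    }
}
```

Calling `Distribution.CellsInBand(noise, 256, 0.25f, 0.35f)` on a normalized 256x256 noise map would return the cells where shrubs go; because the noise is continuous, those cells tend to form clustered patches rather than scattered single points.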

See also

While we've talked about Perlin noise being a very good algorithm for creating noise with continuity, other alternatives exist:

1) Fractals - Fractals are a viable alternative to noise algorithms for solving particular problems. The challenge is finding a suitable fractal and tweaking its values to generate the desired results.
2) Simplex noise - It looks so complicated, I'm not even going to touch it. It's worth mentioning since it's supposedly better in terms of CPU and memory performance.

References

1) Ken Perlin's noise (1988)
2) Hugo Elias's variation on Perlin noise
3) Riemer's version (HLSL)
4) DigitalErr0r's GPU version
5) Bilinear interpolation
