(I wish I could bump an old thread, since someone there had the same issue, but I can't, so I'm starting a new one.)

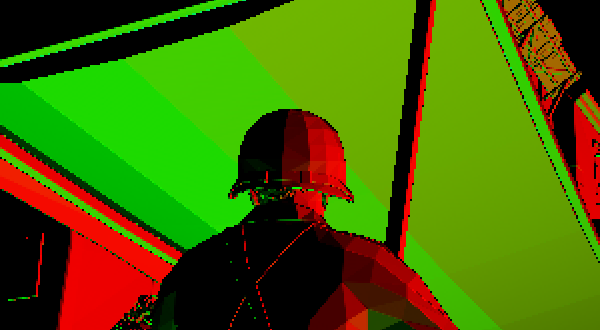

I adapted and modified some SSAO code to my engine (well it's an injector that adds effects to existing game engines actually). It works well but I noticed some strange edges artifacts. So I decided to have a closer look at it and output the normals only :

This is a portion of a 1680x1050 (my native res) screenshot. Notice how aliased the edges are ? Somehow it looks as if my depth buffer was output at half the resolution and then upscaled to my native res. (the backbuffer is fine and aliasing free)

I think this is the same issue shown here. Yet I use the method described by MJP in that very same topic to generate normals directly from the hardware depth buffer, since I'm able to reconstruct view-space position from my depth. Could the ddx/ddy operators (used in ssao_Main()) be generating these artifacts? (I'm an AMD user.)

    extern float FOV = 75;

    static float2 rcpres = PIXEL_SIZE; // (1.0/Width, 1.0/Height)
    static float aspect = rcpres.y/rcpres.x;
    static const float nearZ = 1;
    static const float farZ = 1000;
    static const float2 g_InvFocalLen = { tan(0.5f*radians(FOV)) / rcpres.y * rcpres.x, tan(0.5f*radians(FOV)) };
    static const float depthRange = nearZ-farZ;

    // Textures/Samplers definitions omitted for clarity

    struct VSOUT
    {
        float4 vertPos : POSITION0;
        float2 UVCoord : TEXCOORD0;
    };

    struct VSIN
    {
        float4 vertPos : POSITION0;
        float2 UVCoord : TEXCOORD0;
    };

    VSOUT FrameVS(VSIN IN)
    {
        VSOUT OUT;
        float4 pos=float4(IN.vertPos.x, IN.vertPos.y, IN.vertPos.z, 1.0f);
        OUT.vertPos=pos;
        float2 coord=float2(IN.UVCoord.x, IN.UVCoord.y);
        OUT.UVCoord=coord;
        return OUT;
    }

    float readDepth(in float2 coord : TEXCOORD0)
    {
        float posZ = tex2D(depthSampler, coord).r; // Depth is stored in the red component (INTZ)
        return (2.0f * nearZ) / (nearZ + farZ - posZ * (farZ - nearZ)); // Get eye_z
    }

    float3 getPosition(in float2 uv : TEXCOORD0, in float eye_z : POSITION0) {
        uv = (uv * float2(2.0, -2.0) - float2(1.0, -1.0));
        float3 pos = float3(uv * g_InvFocalLen * eye_z, eye_z );
        return pos;
    }

    float4 ssao_Main(VSOUT IN) : COLOR0
    {
        float depth = readDepth(IN.UVCoord);
        float3 pos = getPosition(IN.UVCoord, depth);
        float3 norm = cross(normalize(ddx(pos)), normalize(ddy(pos)));
        return float4(norm, 1.0); // Output normals
    }

    technique t0
    {
        pass p0
        {
            VertexShader = compile vs_3_0 FrameVS();
            PixelShader = compile ps_3_0 ssao_Main();
        }
    }

The depthSampler has its MinFilter set to POINT. I tried changing it to LINEAR out of curiosity, but it makes no difference.

While searching around the site, I found a post explaining that "after converting a z-buffer depth into a world position, then you will run into continuity problems along edges, simply because of the severe change in Z." Could that be the cause? Is there any way to work around it?
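
One possible workaround is sketched below. It is untested and not a confirmed fix. Pixel shader derivatives are evaluated across 2x2 pixel quads, so neighbouring pixels may share the same ddx/ddy result, which would be consistent with the half-resolution look. The sketch replaces ddx/ddy with explicit neighbour samples and, on each axis, keeps the one-sided difference with the smaller depth change, so the derivative is less likely to straddle a depth edge. `getNormalFromNeighbors` is a hypothetical helper name. It assumes `readDepth()`, `getPosition()` and `rcpres` exactly as defined in the shader above.

```hlsl
// Sketch only: relies on readDepth(), getPosition() and rcpres from the shader above.
// getNormalFromNeighbors is a hypothetical name, not part of the original code.
float3 getNormalFromNeighbors(float2 uv)
{
    // One-pixel offsets in texture space
    float2 dx = float2(rcpres.x, 0.0);
    float2 dy = float2(0.0, rcpres.y);

    // View-space positions of the centre pixel and its four direct neighbours
    float3 c = getPosition(uv,      readDepth(uv));
    float3 l = getPosition(uv - dx, readDepth(uv - dx));
    float3 r = getPosition(uv + dx, readDepth(uv + dx));
    float3 u = getPosition(uv - dy, readDepth(uv - dy));
    float3 d = getPosition(uv + dy, readDepth(uv + dy));

    // Per axis, keep the one-sided difference whose depth change is smaller,
    // i.e. the side less likely to cross a depth discontinuity
    float3 ddxPos = (abs(r.z - c.z) < abs(c.z - l.z)) ? (r - c) : (c - l);
    float3 ddyPos = (abs(d.z - c.z) < abs(c.z - u.z)) ? (d - c) : (c - u);

    // Same operand order as cross(ddx(pos), ddy(pos)) in ssao_Main()
    return normalize(cross(ddxPos, ddyPos));
}
```

In `ssao_Main()`, this would stand in for the `cross(normalize(ddx(pos)), normalize(ddy(pos)))` line, for example `float3 norm = getNormalFromNeighbors(IN.UVCoord);`. The cost is four extra depth fetches per pixel.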