[context]

A Phenomenological Scattering Model for Order-Independent Transparency

Translucent objects such as fog, smoke, glass, ice, and liquids are pervasive in cinematic environments because they frame scenes in depth and create visually compelling shots. Unfortunately, they are hard to simulate in real time and have therefore been rendered poorly in games compared to opaque surfaces.

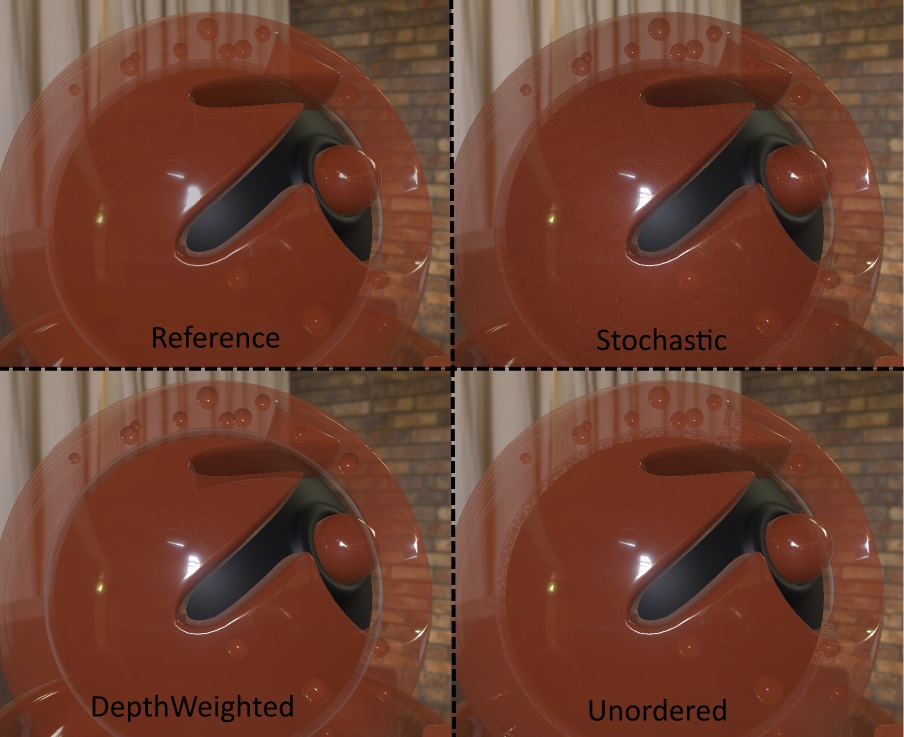

This paper introduces the first model for a real-time rasterization algorithm that can simultaneously approximate the following transparency phenomena: wavelength-varying ("colored") transmission, translucent colored shadows, caustics, partial coverage, diffusion, and refraction. All render efficiently on modern GPUs by using order-independent draw calls and low bandwidth. We include source code for the transparency and resolve shaders.
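One way to see why colored transmission fits with order-independent draw calls: the fraction of background light that survives a stack of surfaces is a per-channel product, and multiplication commutes. The sketch below is a CPU-side model of one pixel, assuming each surface has a scalar coverage and an RGB transmission coefficient (hypothetical inputs, not necessarily the paper's exact parameterization), and mimicking a multiplicative blend of the form dst = dst * (1 - src) with src = coverage * (1 - t).

```python
from itertools import permutations

def transmitted(surfaces, background=(1.0, 1.0, 1.0)):
    """Fraction of background light (per RGB channel) that survives a stack of surfaces.

    Each surface is (coverage, (tr, tg, tb)) with all values in [0, 1].
    Models a multiplicative blend dst = dst * (1 - src), src = coverage * (1 - t).
    """
    result = list(background)
    for coverage, transmission in surfaces:
        for c in range(3):
            result[c] *= 1.0 - coverage * (1.0 - transmission[c])
    return tuple(result)

# Hypothetical layers: fully covering red-tinted glass and a half-coverage blue film.
layers = [
    (1.0, (0.9, 0.2, 0.2)),
    (0.5, (0.2, 0.2, 0.9)),
]

# Every draw order yields the same per-channel transmission (up to float rounding).
results = [transmitted(list(order)) for order in permutations(layers)]
print(results[0])
```

With these made-up layers roughly 54% of red, 12% of green and 19% of blue background light gets through, whichever layer is drawn first.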

[Split off from another thread]

This is completely off topic, but as for sorting and intersections, I can't wait to try out McGuire's phenomenological scattering model, which was also published recently. It seems like a complete hack that should never work, but the presented results do look very robust... TL;DR: you don't sort at all. You draw with a magic fixed-function blend mode into a special set of accumulation render targets (MRT), then at the end take a weighted average of the results, which somehow looks good, and composite that over the opaques.
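The "blend without sorting, then average" step can be illustrated with a per-pixel CPU sketch of weighted blended accumulation, the weighted-blended OIT idea that the PSM extends. This is not the paper's shader. The depth weight `weight()` is a hypothetical placeholder, diffusion and refraction are left out, and only two targets are modeled: an additive accumulator of weighted premultiplied color plus weight (like a ONE, ONE blend) and a per-channel multiplicative modulation term (like a ZERO, ONE_MINUS_SRC_COLOR blend).

```python
def weight(depth):
    # Hypothetical weight: nearer fragments count more. Any positive function
    # that decreases with depth illustrates the idea.
    return 1.0 / (1e-3 + depth * depth)

def accumulate(fragments):
    """Order-independent pass. Each fragment is (rgb, coverage, transmission, depth),
    where rgb is straight (non-premultiplied) color and transmission is per channel."""
    accum = [0.0, 0.0, 0.0, 0.0]  # sum of w*coverage*rgb, then sum of w*coverage
    modulate = [1.0, 1.0, 1.0]    # product of (1 - coverage * (1 - t)) per channel
    for rgb, coverage, transmission, depth in fragments:
        w = weight(depth) * coverage
        for c in range(3):
            accum[c] += w * rgb[c]
            modulate[c] *= 1.0 - coverage * (1.0 - transmission[c])
        accum[3] += w
    return accum, modulate

def resolve(accum, modulate, background):
    """Weighted-average the accumulated color, then composite over the opaque background."""
    if accum[3] <= 0.0:
        return tuple(background)  # no translucent fragments covered this pixel
    avg = [accum[c] / accum[3] for c in range(3)]
    return tuple(background[c] * modulate[c] + avg[c] * (1.0 - modulate[c])
                 for c in range(3))

# Usage: two half-coverage, non-transmissive fragments over a blue background.
background = (0.2, 0.4, 0.8)
frags = [((1.0, 0.0, 0.0), 0.5, (0.0, 0.0, 0.0), 2.0),   # near red
         ((0.0, 1.0, 0.0), 0.5, (0.0, 0.0, 0.0), 5.0)]   # far green
a1, m1 = accumulate(frags)
a2, m2 = accumulate(list(reversed(frags)))
pixel = resolve(a1, m1, background)
print(pixel)
```

Because both the sums and the products commute, reversing the fragment order gives the same pixel. The catch is that the result only approximates true back-to-front compositing, and the weight function decides how strongly nearer surfaces dominate (here the nearer red fragment wins over the farther green one).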

[edit]

So far in my game, I've been using an improved version of stippled deferred translucency, which supports the same phenomena (except the shadow-map-based parts of the above)... but the analysis/pattern-selection and compositing passes are kinda costly, it relies on deferred shading (and I'd like to move to Forward+), it only works with 4 layers, and getting it to work with anti-aliasing is really painful...

On the other hand, "a PSM for OIT" is really simple (simpler than should be possible!) and works fine with Forward+, so I'm intrigued. I remember seeing earlier versions of this work in previous years, but the results weren't that convincing, so I dismissed it out of hand... This year's publication, though, shows fairly artifact-free results.