Hey Guys and Gals!

I just purchased a 4k UHD TV and it is awesome! How long do you think it will take until game developers are utilizing the amazing picture quality?

Just a guess: when they start selling 16k TV sets, some people have 8k, and 95% of people own a 4k set. Just like people still keep watching SD TV on their Full HD TVs.

You can play most PC games at 4K resolutions today, provided you have a beast of a graphics card.

Consoles certainly won't support 4K until the next generation, which, based on history, is likely the better part of 5 years away. Even then, whether or not they adopt 4K depends heavily on how widely 4K TVs have been adopted, and how widely other content providers have bought into the technology.

The jump from SD to HD is massive and is immediately noticeable at most screen sizes and viewing distances.

The jump from HD to 4k is much less noticeable unless you're either very close or your TV is massive.

Basically, I don't think you'll see a comparable level of uptake on 4k as we did with hd.

As swiftcoder said, you can game at 4k right now, but you'll need a seriously kitted out PC.

Given that the current generation of consoles isn't even outputting 1080p for most games, they'll need a significant increase in graphical horsepower to do 4k. OTOH, that's exactly the kind of selling point that could shift a new generation.

Assuming your new tv can stream videos, you can get a preview of 4k gaming with this Homeworld trailer

And this is why we don't game at 4K. So many glitches in those particle-ribbon trails. So many.

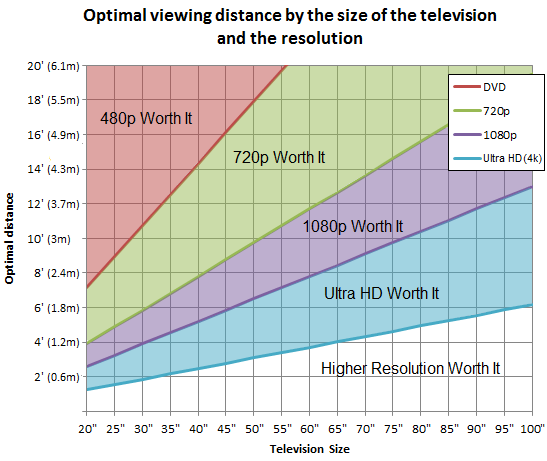

This chart is... how do I put this... bullshit (in my opinion). I am going to borrow a comment I made elsewhere on the internet, specifically the last paragraph.

Okay, let's start with normal viewing distances. It's true that there are "optimum viewing distance" recommendations; however, this is not how real people's houses work. What normal people do, I've found, is arrange their furniture based on what makes sense physically in their house, then buy a TV that is as large as possible given other constraints (physical and financial). What that distance works out to be depends on your house, but I'd say maybe 8 feet on the low end, 16 on the high end. My comment is primarily with respect to the lower end of that. Call it 8-12 feet. I have no contention with these numbers, though I suspect that most people's screens are not "optimum". Most people are not trying to build home cinemas.

Now there's also a recommendation of what resolution is needed for a certain size screen and viewing distance. Here is a summary of the typical recommendation: http://www.engadget.com/2006/12/09/1080p-charted-viewing-distance-to-screen-size/

It's these numbers that I disagree with, and it's my contention that they are derived from fundamental misunderstandings of how human vision works. The numbers come from determining that humans can resolve X lines of resolution per arc-minute of our visual field, then multiplying that by how many arc-minutes the screen occupies to get the resolution you supposedly need. This is not at all how human vision works, and furthermore I'll argue that people will consistently prefer a (true) 4K image at 10 feet on a 60"+ screen even if they don't know why. (Note that the chart claims this isn't even close enough to see full 1080p. BS!) The reasoning comes down to metrics of quality that are affected by resolution but don't reduce to a simple X lines per arc-minute. Edge aliasing, contrast, fine pattern rendering, gradation, image noise, etc. are all visible very differently in 4K even though they don't follow a simple "resolution of the human eye" formula.
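For anyone who wants to see where numbers like the chart's come from, here is a rough sketch of the simple acuity calculation being criticized. It assumes the usual rule of thumb that the eye resolves about one arc-minute per pixel, and a flat 16:9 screen viewed head-on; the function name and the one-arc-minute default are my own assumptions for illustration, not necessarily the exact formula behind the linked chart.

```python
import math

# Assumed acuity limit: roughly 1 arc-minute per pixel (the common 20/20 rule of thumb).
ARCMIN_PER_PIXEL = 1.0


def max_useful_distance_in(diagonal_in, horiz_px, vert_px,
                           arcmin_per_pixel=ARCMIN_PER_PIXEL):
    """Distance in inches beyond which a single pixel subtends less than
    the assumed acuity limit, according to the simple model."""
    aspect = horiz_px / vert_px
    # Screen width from the diagonal and the aspect ratio.
    width_in = diagonal_in * aspect / math.hypot(aspect, 1.0)
    pixel_pitch_in = width_in / horiz_px
    angle_rad = math.radians(arcmin_per_pixel / 60.0)
    return pixel_pitch_in / math.tan(angle_rad)


# A 60" screen at 1080p and at 4K UHD (3840x2160), converted to feet.
d_1080p_ft = max_useful_distance_in(60, 1920, 1080) / 12
d_uhd_ft = max_useful_distance_in(60, 3840, 2160) / 12
print(f'60" 1080p: {d_1080p_ft:.1f} ft, 60" 4K: {d_uhd_ft:.1f} ft')
```

Under these assumptions the model says a 60" 1080p screen stops being fully resolvable a bit under 8 feet away, which is why the chart puts a 10 foot couch out of range. That only tells you what the simple formula predicts; it says nothing about the other quality factors listed above.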

That said, there is a further complication. The comment I wrote was discussing 4K video footage recorded by a camera, not rendered graphics. Renders carry significantly more effective detail than camera recordings at the same nominal resolution. Indeed this is actually a real hassle for 'filmic' outputs, whether real time or offline renders. Consider this comment from a Pixar employee, taken from this article:

"We do what is essentially MSAA. Then we do a lens distortion that makes the image incredibly soft (amongst other blooms/blurs/etc). Softness/noise/grain is part of film and something we often embrace. Jaggies we avoid like the plague and thus we anti-alias the crap out of our images," added Pixar's Chris Horne, adding an interesting CG movie perspective to the discussion - computer generated animation is probably the closest equivalent gaming has in Hollywood.

"In the end it's still the same conclusion: games oversample vs film. I've always thought that film res was more than enough res. I don't know how you will get gamers to embrace a film aesthetic, but it shouldn't be impossible."

Actually that whole article is worth reading. It's a tricky problem. Personally, reading swiftcoder's comment, I am reminded of seeing Firefly in 1080p for the first time. I'm sorry, but that wonderful series looks awful when you can see that well. Things look blatantly like props, a criticism that has been leveled more recently against the Hobbit series of films.

I'm actually more skeptical about how much people will notice 4k ultra HD and how much it'll catch on. First off, how many people would be able to afford a 4k 60" TV? Those things aren't very cheap (even the cheapest is close to $1600, and that one isn't really high quality), and then there's the fact that most people can't fit a 60" TV into their homes. I don't think most people would notice the difference.

Moreover, going from SD to HD was massive. I don't think that going from HD to UHD will be as massive even if you notice the difference. But maybe that's just me and I'm wrong. Regardless, my first point stands.

People have been using HD, or near-HD, computer monitors for a while now, even before the 1080p TV craze. I think computer gamers will be interested in 4K monitors once the prices become reasonable (significant price drops on them are starting to happen this year, though they're still too expensive for me).

For myself, I'm more interested in the screen real-estate of a 4K monitor for development purposes, though I hear that some applications and OS interfaces have difficulty at that resolution. Better OS support for window snapping would be nice too.

Many of us have at one time or another run multi-monitor setups at or above 4K. 3x monitors in 2560x1440 portrait is slightly above 4K, and I've seen quite a few enthusiasts game on setups like that.
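To put rough numbers on that comparison, here is a quick pixel-count sketch. It assumes "4K" means consumer UHD at 3840x2160; the helper name is just for illustration.

```python
def pixel_count(width_px, height_px, screens=1):
    """Total pixels across one or more identical screens."""
    return width_px * height_px * screens


full_hd = pixel_count(1920, 1080)
uhd = pixel_count(3840, 2160)            # assuming "4K" means UHD
triple_1440p = pixel_count(2560, 1440, screens=3)

print(f"1080p:         {full_hd:,} pixels")
print(f"4K UHD:        {uhd:,} pixels ({uhd / full_hd:.1f}x 1080p)")
print(f"3x 2560x1440:  {triple_1440p:,} pixels ({triple_1440p / uhd:.2f}x 4K UHD)")
```

By this count the triple 1440p setup pushes about a third more pixels than a single UHD panel, so anyone already gaming on one has a fair idea of the GPU load 4K brings.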

My major argument against a single 4K monitor is that it will be impossible to use that resolution optimally. Either you make a relatively small monitor, and waste a lot of detail you can't see, or you make a large monitor, and have to sit so far back you can't see the detail anyway... Perhaps curved monitors will alleviate the issue, but I have my doubts.