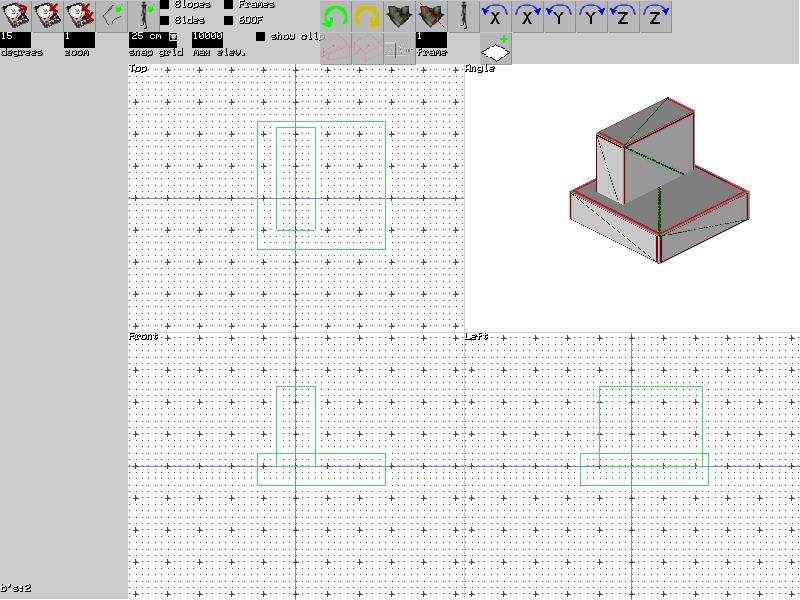

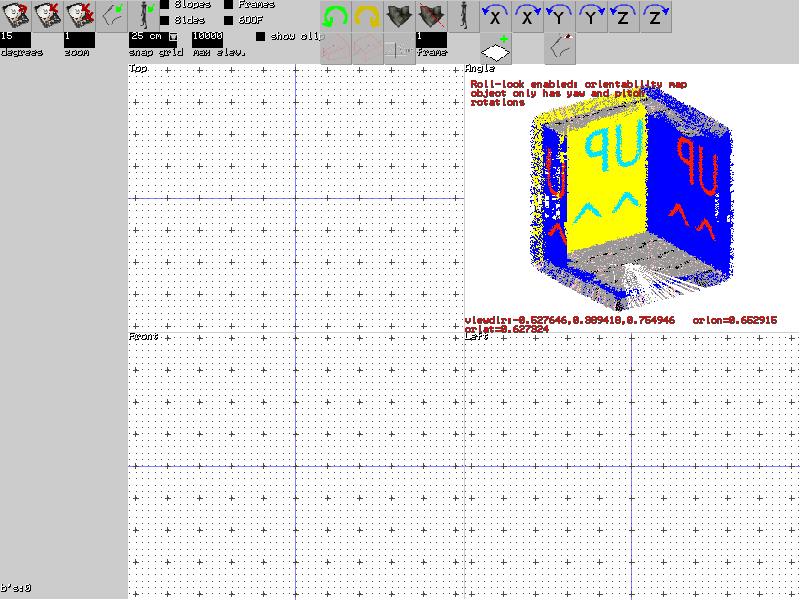

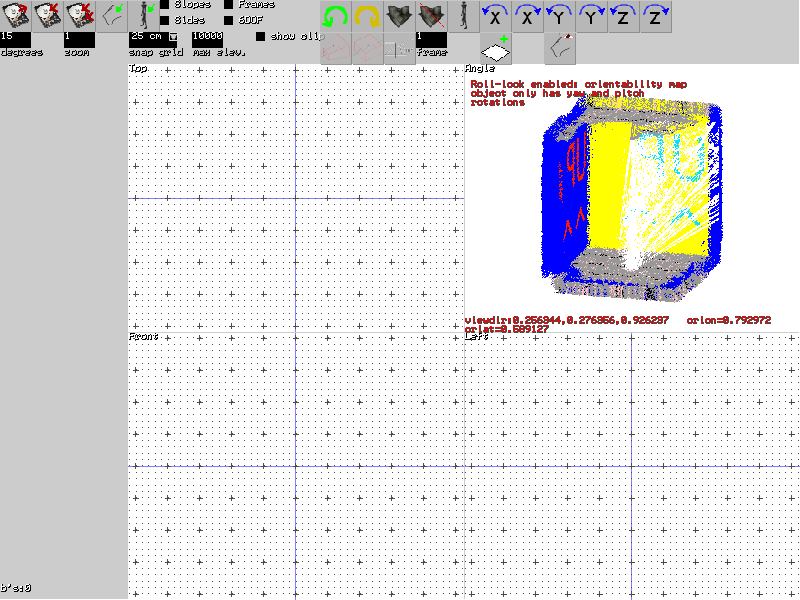

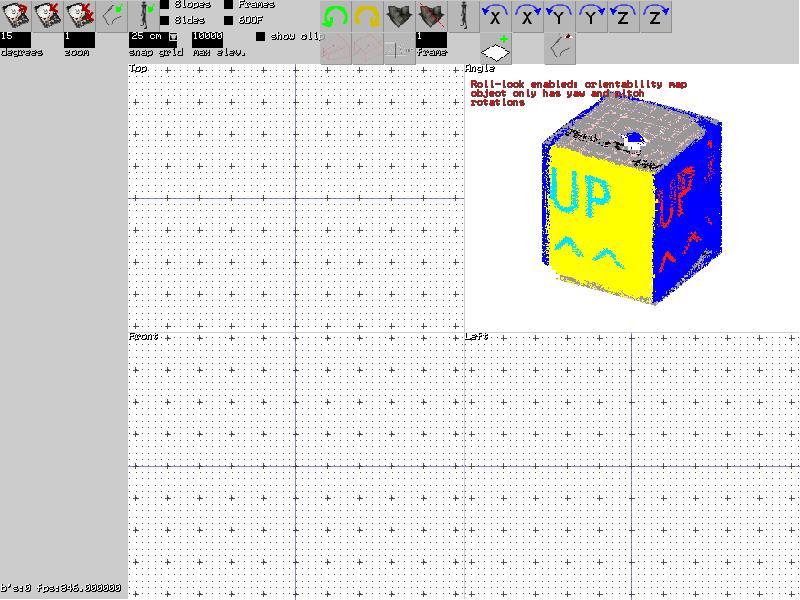

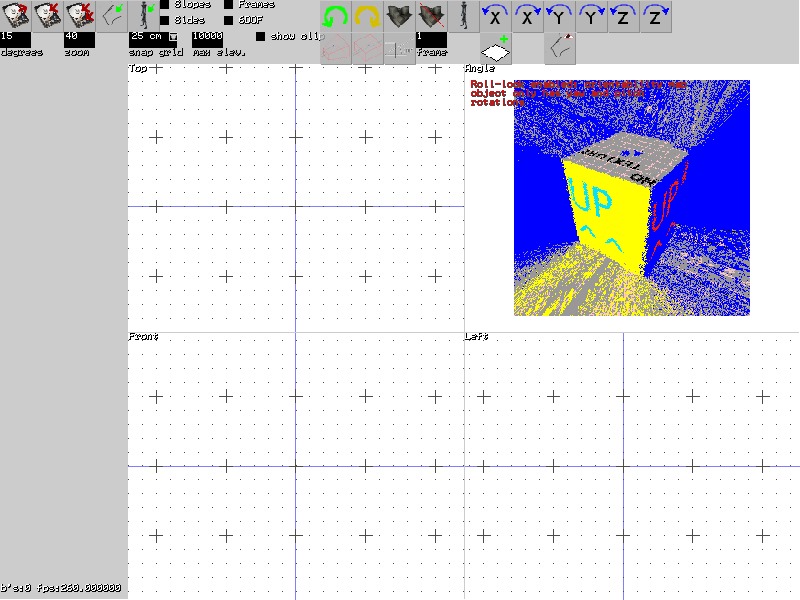

I will also briefly describe how the orientability maps are generated and how they are used.

http://store.steampowered.com/app/468900/3D_Sprite_Renderer/

http://steamcommunity.com/games/468900/announcements/detail/246974483987671222

http://steamcommunity.com/games/468900/announcements/detail/246972580417829715

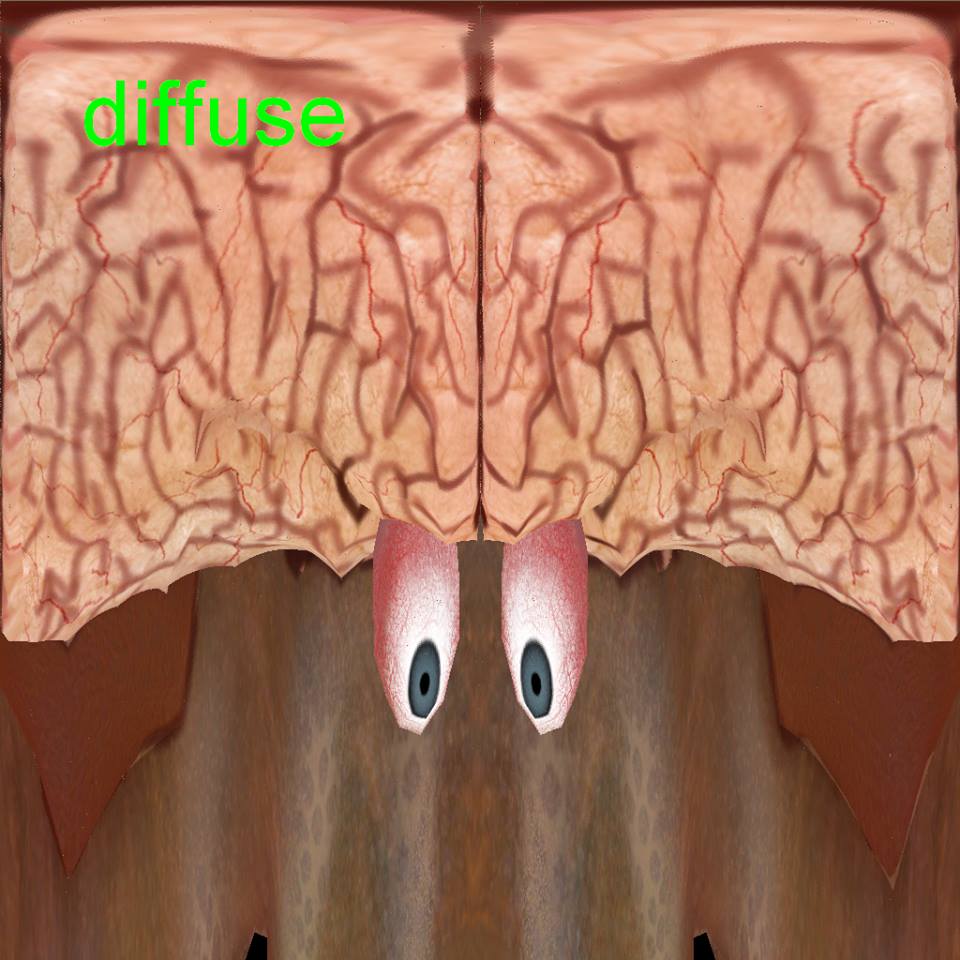

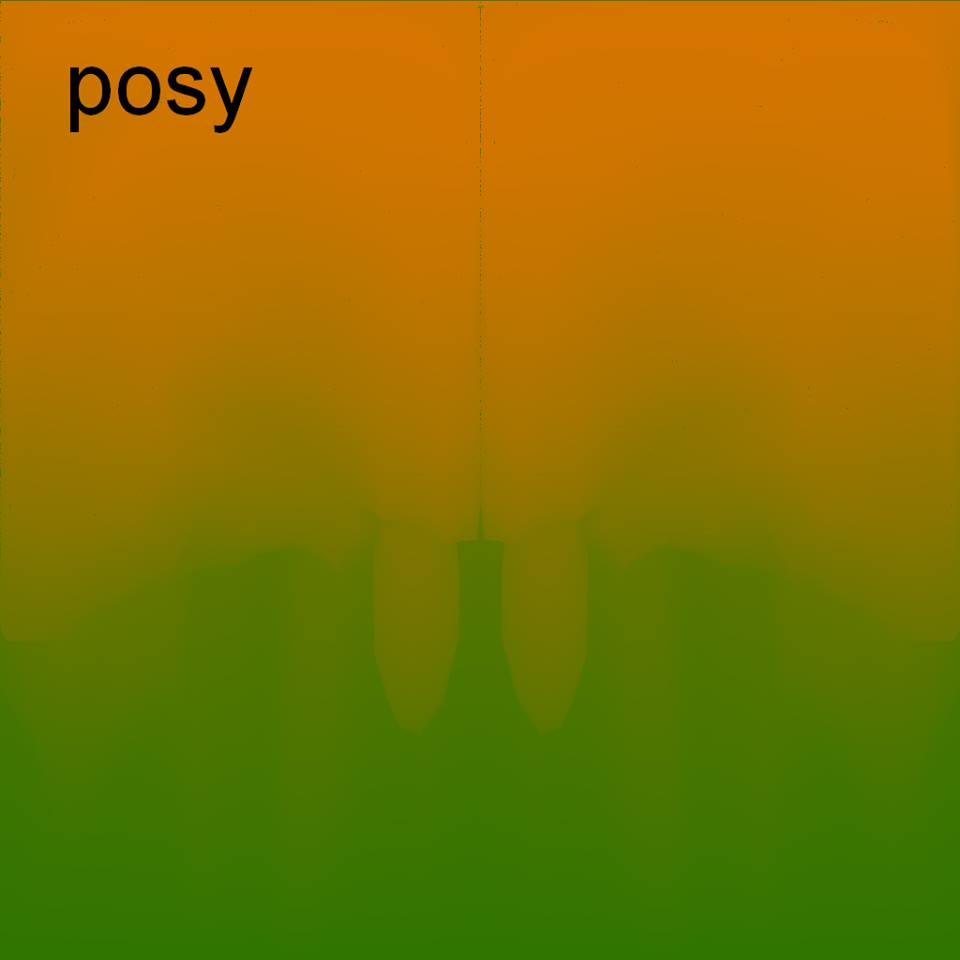

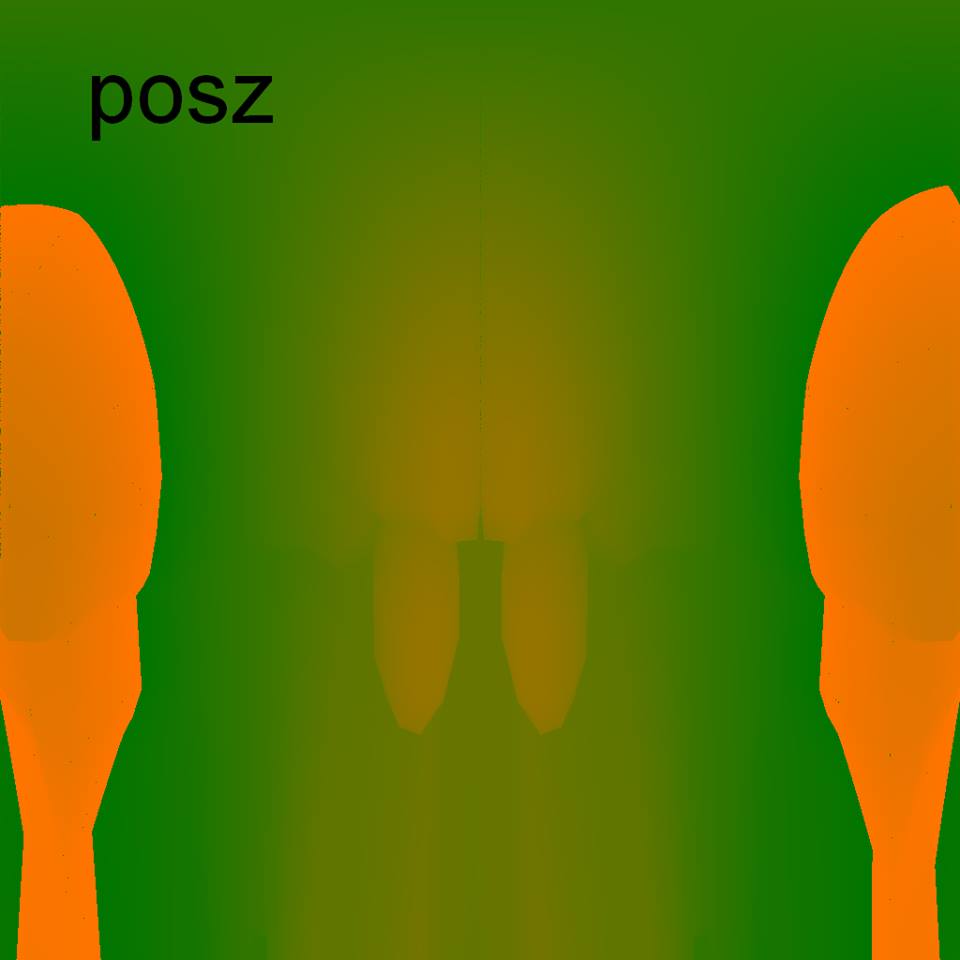

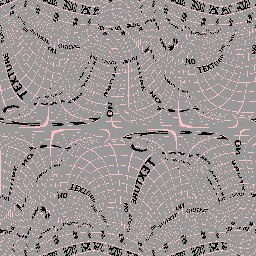

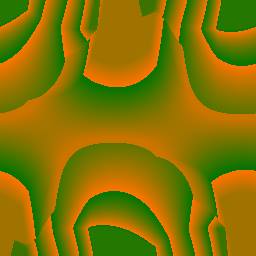

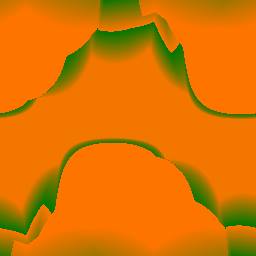

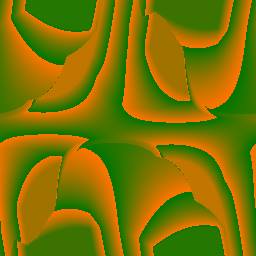

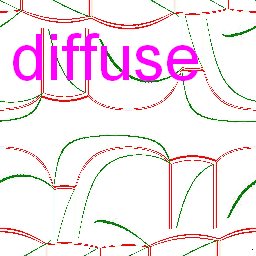

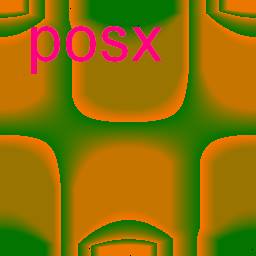

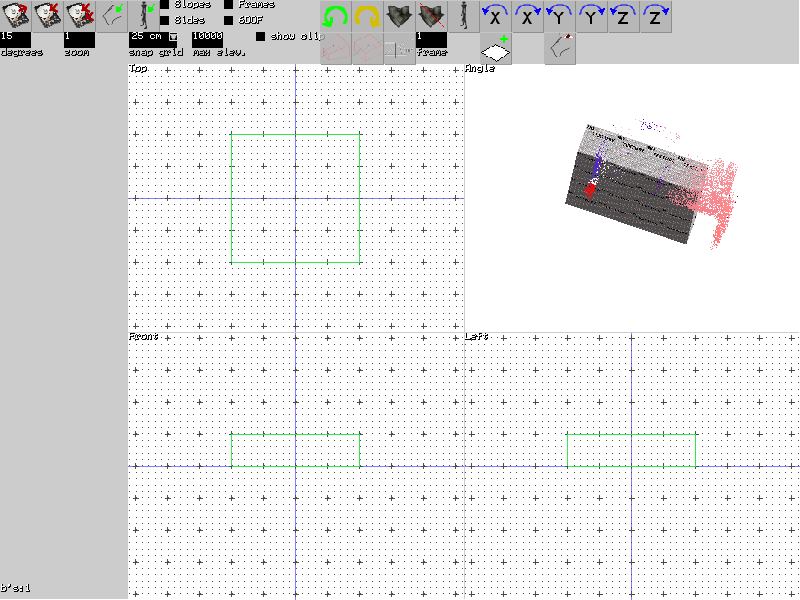

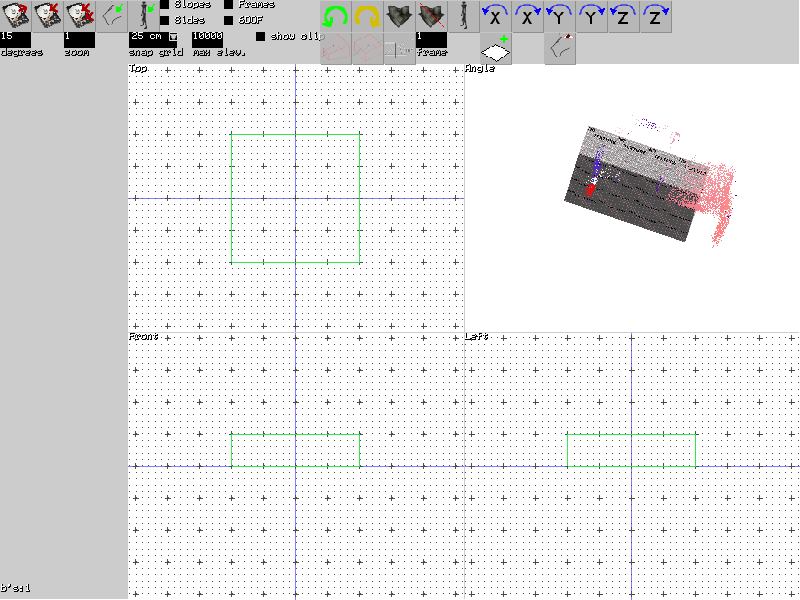

To generate an orientability map, the model is first unwrapped into a sphere. The model is not supposed to have any holes or inner chasms containing a detached inner part (although such parts are supposed to be clipped and removed during generation). Then it is scanned as if by a laser scanner from every angle, with the scan angles laid out in polar coordinates across a texture. One texture stores the colors, and the x, y, z coordinates of the original model at those points are stored using RGB encoding (three textures for the coordinates).
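The sketch below illustrates the two mappings described above under stated assumptions. The polar layout (columns as azimuth in [0, 2π), rows as elevation in [-π/2, π/2]) and the reading of "RGB encoding" as one texture per axis, with each coordinate normalized to a known bounding range and packed into 24 bits across the R, G and B channels, are assumptions rather than the tool's confirmed format. `scanDirection`, `encodeCoord` and `decodeCoord` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct Dir { float x, y, z; };
struct RGB8 { std::uint8_t r, g, b; };

// Assumed polar layout: for texel (px, py) of a w x h map, the column picks the
// azimuth in [0, 2*pi) and the row picks the elevation in [-pi/2, pi/2].
// Returns the unit direction the model is "scanned" from for that texel.
Dir scanDirection(int px, int py, int w, int h) {
  const double pi = 3.14159265358979323846;
  double yaw = (px + 0.5) / w * 2.0 * pi;
  double pitch = ((py + 0.5) / h - 0.5) * pi;
  return {static_cast<float>(std::cos(pitch) * std::sin(yaw)),
          static_cast<float>(std::sin(pitch)),
          static_cast<float>(std::cos(pitch) * std::cos(yaw))};
}

// Packs one coordinate from the range [minv, maxv] into 24 bits across R, G, B
// (one such texture per axis, so three textures for x, y and z).
RGB8 encodeCoord(float value, float minv, float maxv) {
  assert(maxv > minv);
  double t = std::clamp((double(value) - minv) / (double(maxv) - minv), 0.0, 1.0);
  auto q = static_cast<std::uint32_t>(std::lround(t * 16777215.0));  // 2^24 - 1 levels
  return {static_cast<std::uint8_t>(q >> 16), static_cast<std::uint8_t>(q >> 8),
          static_cast<std::uint8_t>(q)};
}

// Inverse of encodeCoord, i.e. what the shader would do after sampling a texel.
float decodeCoord(RGB8 c, float minv, float maxv) {
  std::uint32_t q = (std::uint32_t(c.r) << 16) | (std::uint32_t(c.g) << 8) | c.b;
  return static_cast<float>(minv + (double(maxv) - minv) * (q / 16777215.0));
}
```

Splitting one coordinate over three 8-bit channels gives roughly 24 bits of precision per axis, which an ordinary 8-bit-per-channel image could not hold in a single channel.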

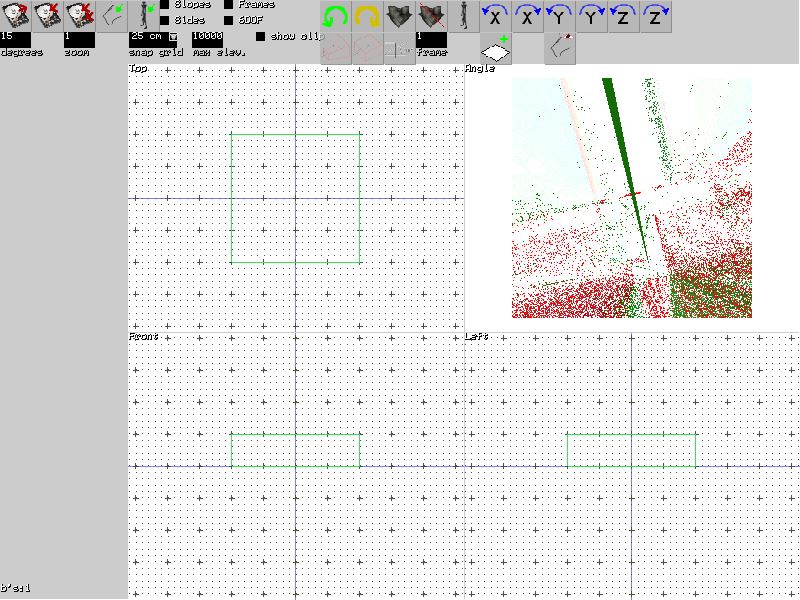

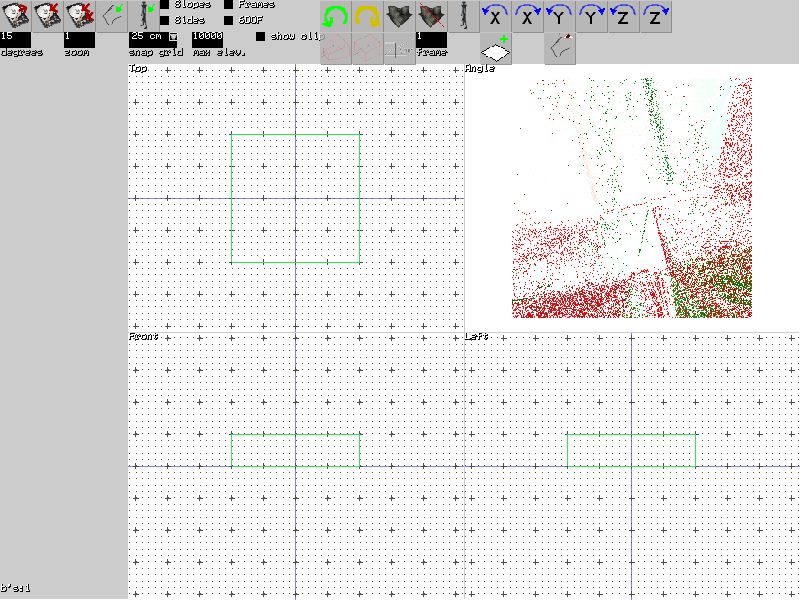

I tried several unwrapping methods; none of them worked until the third.

The method that worked is as follows. Take a point of a triangle that is marked as hidden, meaning that a ray from the center through that point intersects some other point farther out along the same line. Take the normal of the triangle (or line) that was hit, oriented to point in the same direction as the hidden triangle's normal, and move the hidden point a tiny distance along it. This gives the point room, so that when the hidden point or points are moved outward spherically to a radius similar to that of the non-hidden point, they do not end up behind it: `tet->neib[v]->wrappos = tet->neib[v]->wrappos + updir * 0.01f + outdir;`
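A minimal sketch of that nudge follows. Only the final expression `wrappos + updir * 0.01f + outdir` comes from the source; computing `outdir` as the radial displacement that carries the hidden point out to the radius of the farther-out point on the same ray is an assumption about what that term holds. `Vec3`, `nudgeHiddenPoint` and `occluderRadius` are hypothetical names, and positions are taken relative to the sphere's center.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float length(Vec3 a) { return std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z); }

// hidden:         wrap position of the hidden point, relative to the sphere center.
// occluderRadius: distance from the center of the farther-out point on the same ray.
// updir:          unit normal of the hit triangle, oriented like the hidden triangle's normal.
Vec3 nudgeHiddenPoint(Vec3 hidden, float occluderRadius, Vec3 updir) {
  float r = length(hidden);
  assert(r > 0.0f && "a point at the center has no outward direction");
  // Assumed meaning of outdir: move along the center ray out to the occluder's radius.
  Vec3 outdir = hidden * ((occluderRadius - r) / r);
  // Same form as the line in rendertopo.cpp: a small sideways step plus the outward move.
  return hidden + updir * 0.01f + outdir;
}
```

Without the `updir * 0.01f` term, the hidden point would land exactly on the occluding point, which is the "ending up behind it" case the method avoids.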

(Line 12334 in https://github.com/dmdware/sped/blob/master/source/tool/rendertopo.cpp#L12334)

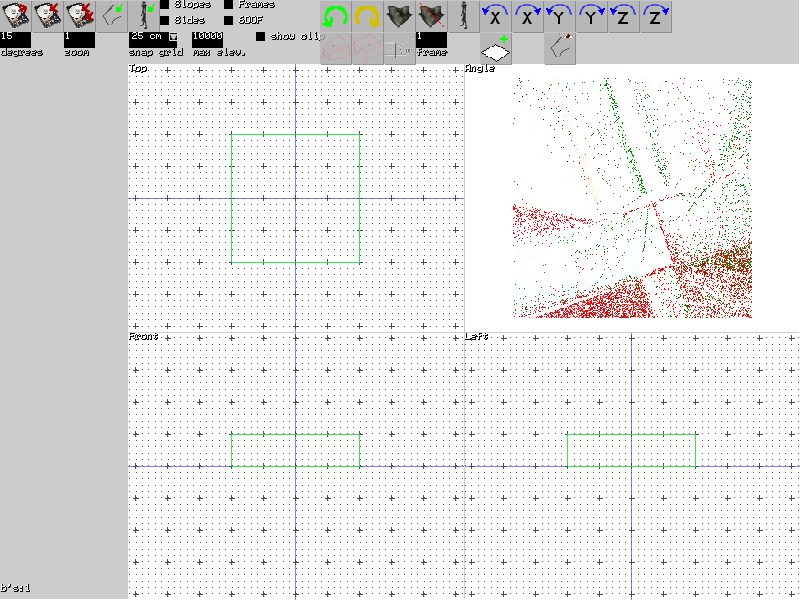

The remaining images show the work leading up to the correct rendering methods:

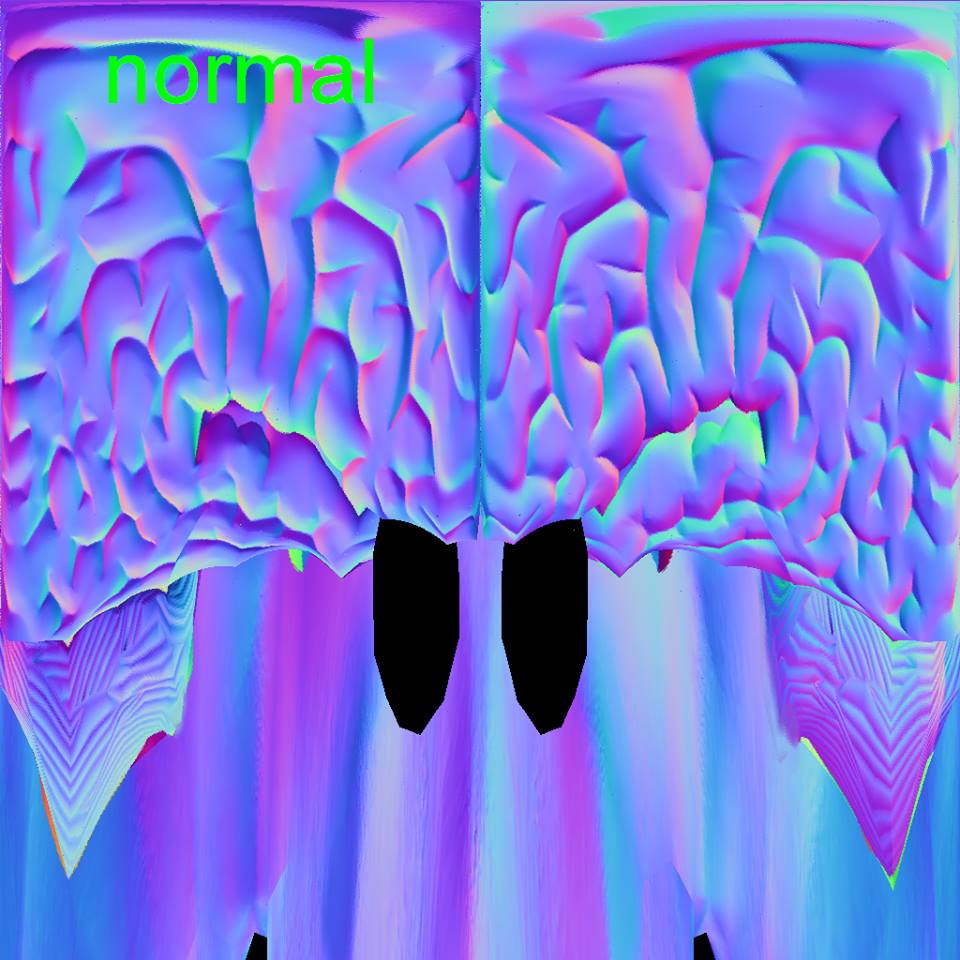

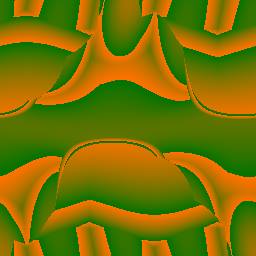

The shaders contain a lot of left-over code, e.g. from shadow mapping and normal mapping, that can be removed, which will probably make them faster.

At each step of the search for the right pixel data, the fragment shader does the following:

1. Updates the next texture jump size in pixels, which decreases from step to step.
2. Calculates the "upnavc" and "sidenavc" coordinates after the jump, starting from the previous "jumpc", for the coordinate and color textures.
3. Obtains the 3-d coordinates of those pixels and transforms them by the model's orientation and translation and by the camera's orientation and translation. This gives the "upnavoff" and "sidenavoff" 3-d coordinates for the "offset" (not yet correct) positions, as opposed to the position that would be obtained if the exact match for the pixel were found in the model.
4. Turns these into actual offsets from the previous 3-d position "offpos", and normalizes them.
5. Calculates "offvec" by subtracting "offpos", the 3-d coordinate of the current search position, from "outpos2". "outpos2" is obtained by casting a ray from the camera's near plane (or, for perspective, from the eye's point origin) to the plane of the quad being rendered.
6. Decomposes "offvec" along the camera's right and up vectors, keeping only these camera-aligned x and y components and ignoring depth. Combined with the "sidenavoff" and "upnavoff" offsets, which correspond to one jump right and one jump up along the textures, this gives the next texture jump "texjump" that moves closer to "outpos2".
7. Obtains the new x, y, z coordinates and calculates their offset length from the required "outpos2". If this length is still too high at the end of the search, the fragment is discarded, since it probably lies at a place on the screen where there should be no part of the model.
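Below is a CPU-side sketch of that search loop, written to show the idea rather than to reproduce the shader. `lookup` is a hypothetical stand-in for sampling the coordinate textures and applying the model and camera transforms. One deliberate substitution: the shader normalizes the offsets, whereas this sketch keeps the raw one-jump offsets and solves a 2x2 linear system (Cramer's rule) in the camera plane for the jump, so the step size falls out directly. Halving the jump with a one-pixel floor is also an assumption.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical stand-in for sampling the coordinate textures at texel (u, v)
// and transforming the result by the model and camera transforms.
using Lookup = Vec3 (*)(float u, float v);

struct SearchResult { float u, v, error; };

// right/up: unit camera basis vectors; outpos2: target point on the quad's plane.
SearchResult searchPixel(Lookup lookup, Vec3 outpos2, Vec3 right, Vec3 up,
                         float u, float v, float jump, int steps) {
  assert(jump > 0.0f && steps >= 0);
  for (int i = 0; i < steps; ++i) {
    Vec3 offpos = lookup(u, v);
    // 3-d offsets produced by one jump right ("side") and one jump up in the texture.
    Vec3 sidenavoff = sub(lookup(u + jump, v), offpos);
    Vec3 upnavoff = sub(lookup(u, v + jump), offpos);
    Vec3 offvec = sub(outpos2, offpos);
    // Keep only the camera-aligned right/up components; depth is ignored.
    float ox = dot(offvec, right), oy = dot(offvec, up);
    float sx = dot(sidenavoff, right), sy = dot(sidenavoff, up);
    float ux = dot(upnavoff, right), uy = dot(upnavoff, up);
    // Solve a * side + b * up = off for (a, b), measured in jump units.
    float det = sx * uy - ux * sy;
    if (std::fabs(det) < 1e-12f) break;  // degenerate neighborhood: stop searching
    float a = (ox * uy - ux * oy) / det;
    float b = (sx * oy - sy * ox) / det;
    u += a * jump;  // apply the texture jump ("texjump")
    v += b * jump;
    jump = std::fmax(jump * 0.5f, 1.0f);  // shrink the jump, but not below one pixel
  }
  // Remaining camera-plane distance to outpos2; the caller discards if it is too large.
  Vec3 d = sub(outpos2, lookup(u, v));
  float ex = dot(d, right), ey = dot(d, up);
  return {u, v, std::sqrt(ex * ex + ey * ey)};
}
```

In a fragment shader the equivalent of `error` would be compared against a threshold after the last step, with `discard` used when no close enough texel was found.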

Perspective projection of orientability maps works the same way, except that the "viewdir" must be calculated individually for each screen fragment for use in the search. Also, instead of using "outpos2" on the screen to get the camera-aligned up- and right-vector coordinates, a ray is cast from the camera origin through the fragment, and the search looks for the point in 3-d space closest to that ray.
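The two per-fragment pieces of the perspective case can be sketched as follows: building the view direction through a fragment, and measuring how far a candidate 3-d point is from that ray. The vertical field-of-view convention, normalized device coordinates in [-1, 1], and the names `viewDirForFragment` and `offsetFromRay` are assumptions for illustration; the returned offset plays the role that `outpos2 - offpos` plays in the orthographic search.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
static Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 normalize(Vec3 a) {
  float len = std::sqrt(dot(a, a));
  assert(len > 0.0f);
  return scale(a, 1.0f / len);
}

// Per-fragment view direction. ndcX/ndcY in [-1, 1], fovY in radians,
// aspect = width / height; right/up/forward form the camera basis.
Vec3 viewDirForFragment(float ndcX, float ndcY, float fovY, float aspect,
                        Vec3 right, Vec3 up, Vec3 forward) {
  float t = std::tan(fovY * 0.5f);
  return normalize(add(forward, add(scale(right, ndcX * t * aspect), scale(up, ndcY * t))));
}

// Vector from p to the closest point on the ray eye + s * dir (s >= 0, dir unit length).
// The search tries to drive this toward zero.
Vec3 offsetFromRay(Vec3 p, Vec3 eye, Vec3 dir) {
  float s = std::fmax(dot(sub(p, eye), dir), 0.0f);
  Vec3 closest = add(eye, scale(dir, s));
  return sub(closest, p);
}
```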

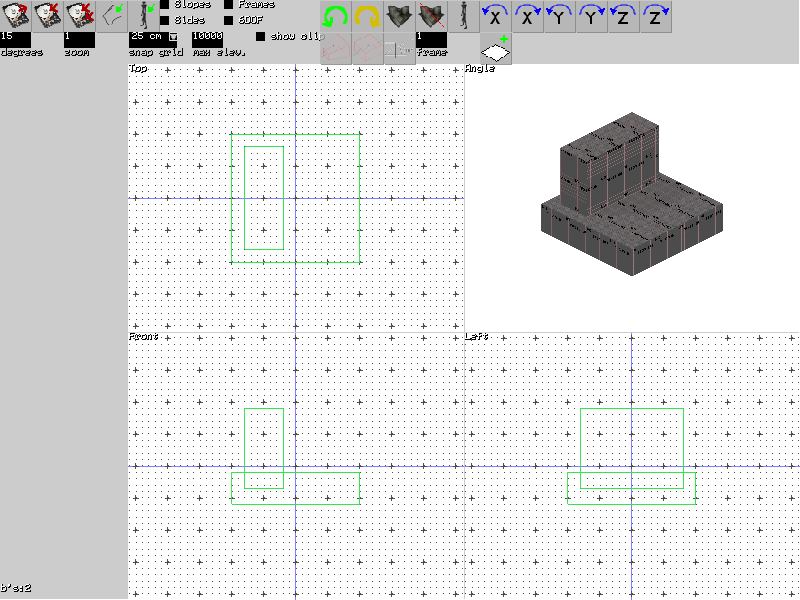

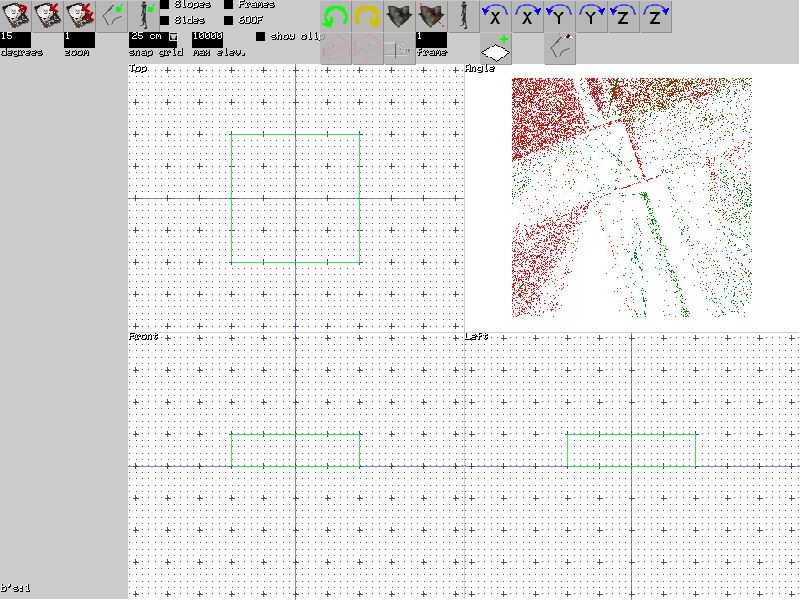

The "islands" are pre-generated by the editor from all combinations of angles and offsets along the up and right vectors (i.e. the pixels there). The rotation functions in the shaders and in the tool could also be simplified so that rendering doesn't need so much padding and modification of the angles, e.g. adding or subtracting half or full pi and multiplying or dividing by two.

One possible improvement would avoid the edge cases where part of the back side is rendered: check whether the normal at the current point is facing backwards, and if it is, jump back to the previous position, while still decreasing the next jump size and incrementing the step counter.
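That proposed back-face check could look roughly like this. It assumes `viewdir` points from the camera into the scene (so a front-facing normal has a negative dot product with it) and that a normal can be sampled at any texel; `facesAway`, `SearchState`, `applyJump` and `normalAt` are hypothetical names, and halving the jump is an assumed schedule.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// viewdir points from the camera into the scene, so a back-facing normal
// has a positive dot product with it.
bool facesAway(Vec3 normal, Vec3 viewdir) { return dot(normal, viewdir) > 0.0f; }

struct SearchState { float u, v, jump; int step; };

// Applies one proposed jump (du, dv in jump units). If the normal at the proposed
// texel faces away from the camera, the search stays at the previous position;
// either way the jump size shrinks and the step counter advances.
template <typename NormalAt>
void applyJump(SearchState& s, float du, float dv, Vec3 viewdir, NormalAt normalAt) {
  assert(s.jump > 0.0f);
  float nu = s.u + du * s.jump;
  float nv = s.v + dv * s.jump;
  if (!facesAway(normalAt(nu, nv), viewdir)) {
    s.u = nu;
    s.v = nv;
  }
  s.jump = std::fmax(s.jump * 0.5f, 1.0f);
  s.step += 1;
}
```

Because the jump still shrinks after a rejected step, the next attempt from the same position is smaller and is less likely to overshoot onto the back side again.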