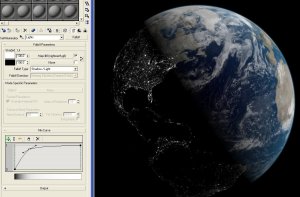

Notice the lights on the dark side. These are actually two separate textures: one for the dark side, the other for the lit side. How can I do this?

Thanks


1. Sample the first ("daylight") texture.
2. Sample the second ("night") texture.
3. Compute the diffuse lighting factor (n-dot-l, clamped to [0, 1]).
4. Perform a linear interpolation between the first and second colors using that factor.
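The four steps can be checked on the CPU with a small C++ mirror of the shader math. This is a sketch, not shader code: `float3`, `dot`, `normalize`, `saturate` and `lerp` are hypothetical stand-ins for the HLSL types and intrinsics, and the two colour arguments take the place of the texture samples. It assumes `toLight` points from the surface towards the light.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical CPU-side stand-ins for HLSL's float3 and intrinsics.
struct float3 { float x, y, z; };

float dot(const float3& a, const float3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

float3 normalize(const float3& v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);  // a zero-length vector has no direction
    return {v.x / len, v.y / len, v.z / len};
}

// HLSL saturate(): clamp to [0, 1].
float saturate(float v) { return std::max(0.0f, std::min(1.0f, v)); }

// HLSL lerp(a, b, t) = a + t * (b - a).
float3 lerp(const float3& a, const float3& b, float t) {
    return {a.x + t * (b.x - a.x), a.y + t * (b.y - a.y), a.z + t * (b.z - a.z)};
}

// dayColor/nightColor stand in for the two texture samples (steps 1 and 2).
// toLight points from the surface towards the light.
float3 shadeDayNight(const float3& dayColor, const float3& nightColor,
                     const float3& normal, const float3& toLight) {
    // Step 3: diffuse factor, 1 facing the light, 0 facing away.
    float nDotL = saturate(dot(normalize(normal), normalize(toLight)));
    // Step 4: factor 0 gives the night colour, 1 gives the day colour.
    return lerp(nightColor, dayColor, nDotL);
}
```

Note the argument order of `lerp`: with a factor that is 1 on the lit side, the night colour must come first.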

Assuming the night texture is mostly dark, you might not even need to bother applying lighting to it. This might not give the most desirable results at the "seams" along the day/night boundary, so you may have to play with the way you combine or select the colors, and with the make-up of the actual texture data.
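One possible way to "play with the way you combine" the colours (a swapped-in technique, not something proposed in this thread) is to shade only the day texture with the clamped N·L and add the night texture unlit, fading it with a `smoothstep` band around the terminator. The C++ below mirrors that math on the CPU; `Color`, `combineDayNight` and the `band` width are hypothetical, and `smoothstep` follows the HLSL/GLSL definition.

```cpp
#include <algorithm>
#include <cassert>

struct Color { float r, g, b; };

// Clamp to [0, 1], like HLSL saturate().
float saturate(float v) { return std::max(0.0f, std::min(1.0f, v)); }

// Same definition as HLSL/GLSL smoothstep: a smooth ramp from 0 at edge0 to 1 at edge1.
float smoothstep(float edge0, float edge1, float x) {
    assert(edge1 > edge0);
    float t = saturate((x - edge0) / (edge1 - edge0));
    return t * t * (3.0f - 2.0f * t);
}

// nDotL is the *unclamped* dot(N, L) with L pointing towards the light.
// band (hypothetical tuning value) sets how far either side of the terminator
// the night lights fade in and out.
Color combineDayNight(const Color& day, const Color& night, float nDotL, float band) {
    float diffuse = saturate(nDotL);                            // Lambert shading on the lit side
    float nightWeight = 1.0f - smoothstep(-band, band, nDotL);  // night lights, unlit
    return {day.r * diffuse + night.r * nightWeight,
            day.g * diffuse + night.g * nightWeight,
            day.b * diffuse + night.b * nightWeight};
}
```

A narrow band keeps the city lights confined to the dark side; a wider one spreads the transition. Inside the band both terms contribute, so clamp the result if that overshoots.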


The obvious way would be to lerp between two textures (one daytime, one night-time) based on your N.L result in your pixel shader, i.e. something like:

<source>
// Assumes lightDirec is the direction the light travels (light -> surface),
// so the dot product is <= 0 on the lit side and > 0 on the dark side.
float lightVal = saturate(dot(normalize(normal), lightDirec));
return lerp(daytimeColor, nighttimeColor, lightVal);
</source>

BTW, what app is that? It's not from SGI by any chance, is it?

Understood. Thanks for the answers!

One more thing: do you see any fixed-function (non-shader) way to do this?

It's Max... I got the shot from here.


The fixed-function pipeline (FFP) is quite capable of multitexturing (called texture blending in the DirectX documentation).

Back in the day, this would be done by rendering the geometry twice, changing texture in between, using appropriate alpha-blending factors for each pass. However, hardware has long been capable of doing it in a single pass.
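To see why "appropriate alpha-blending factors" reproduce the single-pass lerp, here is a small CPU simulation in C++ (an illustrative sketch). Pass 1 draws the night texture opaquely; pass 2 draws the day texture with its alpha set to the lighting factor, using source-alpha / inverse-source-alpha blending (`D3DBLEND_SRCALPHA` and `D3DBLEND_INVSRCALPHA` in Direct3D 9). `RGBA`, `blendSrcAlpha` and `twoPassDayNight` are hypothetical names, and where the lighting factor comes from is left as an assumption.

```cpp
#include <cassert>

struct RGBA { float r, g, b, a; };

// Framebuffer blend with factors SRCALPHA / INVSRCALPHA:
// dest' = src * src.a + dest * (1 - src.a)
RGBA blendSrcAlpha(const RGBA& src, const RGBA& dest) {
    float s = src.a, d = 1.0f - src.a;
    return {src.r * s + dest.r * d, src.g * s + dest.g * d,
            src.b * s + dest.b * d, src.a * s + dest.a * d};
}

// Pass 1: night texel written with blending disabled.
// Pass 2: day texel, alpha = lighting factor, blended over pass 1.
RGBA twoPassDayNight(const RGBA& nightTexel, RGBA dayTexel, float lightFactor) {
    assert(lightFactor >= 0.0f && lightFactor <= 1.0f);
    RGBA framebuffer = nightTexel;                // pass 1 (opaque)
    dayTexel.a = lightFactor;                     // hypothetical: factor from vertex lighting
    return blendSrcAlpha(dayTexel, framebuffer);  // pass 2
}
```

The result is `day * f + night * (1 - f)`, which is exactly `lerp(night, day, f)`, only spread over two draw calls.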

You may have noticed that when you 'set' a texture, you have to pass the value '0'. This tells the API to assign the texture to the first (index 0) sampler. Depending on your graphics card, you can use up to 16 samplers simultaneously with pixel shaders (the fixed-function pipeline exposes fewer texture stages; the device caps report the exact limits), each with its own texture and state.

To use several samplers, you'll need to change your vertex declaration to accept more parameters. Often, supplying an extra set of texture coordinates is all you need in order to blend two textures, but the possibilities are diverse.
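For a single-pass fixed-function version, one option (a sketch, not something stated in the posts above) is to compute the day factor per vertex on the CPU and store it in the alpha of the vertex colour. A second texture stage can then use `D3DTOP_BLENDDIFFUSEALPHA` (Direct3D 9), which computes `Arg1 * alpha + Arg2 * (1 - alpha)` from the interpolated diffuse alpha: stage 0 samples the night texture, and stage 1 uses `ColorArg1 = D3DTA_TEXTURE` (the day texture) and `ColorArg2 = D3DTA_CURRENT`. The `Vertex` layout and `writeDayFactor` below are hypothetical; this assumes fixed-function lighting is not overwriting the vertex alpha, and the factors must be recomputed whenever the planet or the light moves.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical vertex layout: position, normal, packed ARGB diffuse colour and
// two texture-coordinate sets (day map, night map), roughly an
// XYZ | NORMAL | DIFFUSE | TEX2 flexible vertex format.
struct Vertex {
    float px, py, pz;
    float nx, ny, nz;
    std::uint32_t diffuse;  // 0xAARRGGBB
    float u0, v0;           // day texture coordinates
    float u1, v1;           // night texture coordinates
};

// Store saturate(dot(N, toLight)) in the diffuse alpha of each vertex.
// (lx, ly, lz) must be a unit vector pointing towards the light;
// vertex normals are assumed to be unit length.
void writeDayFactor(Vertex* verts, int count, float lx, float ly, float lz) {
    assert(verts != nullptr || count == 0);
    for (int i = 0; i < count; ++i) {
        Vertex& v = verts[i];
        float nDotL = v.nx * lx + v.ny * ly + v.nz * lz;
        float f = std::max(0.0f, std::min(1.0f, nDotL));
        auto alpha = static_cast<std::uint32_t>(f * 255.0f + 0.5f);  // round to 0..255
        v.diffuse = (alpha << 24) | (v.diffuse & 0x00FFFFFFu);       // keep RGB, replace A
    }
}
```

Because the factor is interpolated across each triangle, the terminator is only as smooth as the mesh is dense; the pixel-shader version does not have that limitation.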

Take a look at Toymaker, NeHe or whichever site you like for a tutorial.

Regards

Admiral


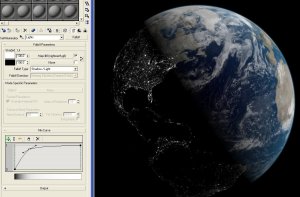

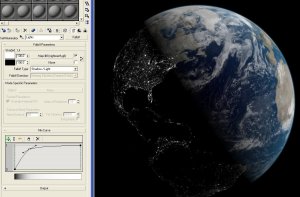

OK, I think I got it somewhat right:

However, notice the lighting isn't very good on the lit side. How can I integrate some decent lighting with the shaders I have for this two-texture thing? Right now, my output color is:

lerp(daytimecolor, nighttimecolor, lightval)

How do I add some decent diffuse, or even specular, lighting to this?

Thanks again


First, generate vertex normals for the mesh, then use them in the usual Lambertian lighting model for a diffuse effect (specular lighting would look awful without some very subtle and finely-tuned bump-mapping).
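A common way to generate smooth vertex normals for an indexed triangle list is to add each face's cross-product normal (unnormalised, so larger faces weigh more) to its three vertices and normalise at the end. The C++ sketch below does that; `Vec3` and `computeVertexNormals` are hypothetical names. The sign of each normal depends on your triangle winding and coordinate-system handedness, so if the normals come out pointing inwards, swap the operands of `cross`. For a sphere like this planet, the normal at each vertex is simply the normalised vector from the centre to the vertex.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Smooth (area-weighted) vertex normals for an indexed triangle list.
std::vector<Vec3> computeVertexNormals(const std::vector<Vec3>& positions,
                                       const std::vector<unsigned>& indices) {
    assert(indices.size() % 3 == 0);
    std::vector<Vec3> normals(positions.size(), Vec3{0.0f, 0.0f, 0.0f});
    for (std::size_t i = 0; i < indices.size(); i += 3) {
        unsigned a = indices[i], b = indices[i + 1], c = indices[i + 2];
        // Unnormalised face normal: its length is twice the triangle's area.
        Vec3 faceN = cross(sub(positions[b], positions[a]), sub(positions[c], positions[a]));
        for (unsigned v : {a, b, c}) {
            normals[v].x += faceN.x;
            normals[v].y += faceN.y;
            normals[v].z += faceN.z;
        }
    }
    for (Vec3& n : normals) {
        float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
        if (len > 0.0f) {  // vertices used by no triangle stay zero
            n.x /= len;
            n.y /= len;
            n.z /= len;
        }
    }
    return normals;
}
```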

The basic idea is to take the dot product of the normalised light vector with the normalised normal. After clamping the result to [0, 1] you have the diffuse lightness factor. You'll find tutorials all over the web.

Toymaker, for Direct3D.

Lighthouse3D, for OpenGL.
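The Lambertian factor described above, plus one way of applying it to the day texel, can be sketched in C++ as follows. `lambert` is the clamped dot product of the normalised normal and the normalised surface-to-light vector; `shadeDiffuse` multiplies the texel by `ambient + lightColour * diffuse` per channel. The names, the ambient term and the light colour are hypothetical tuning inputs, not part of the posts above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalized(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

// Lambertian diffuse factor: dot of the normalised light vector (surface -> light)
// with the normalised normal, clamped to [0, 1].
float lambert(Vec3 normal, Vec3 toLight) {
    float d = dot(normalized(normal), normalized(toLight));
    return std::max(0.0f, std::min(1.0f, d));
}

// Lit day texel: texel * (ambient + lightColour * diffuse), per channel.
Vec3 shadeDiffuse(Vec3 texel, Vec3 lightColour, Vec3 ambient, float diffuse) {
    return {texel.x * (ambient.x + lightColour.x * diffuse),
            texel.y * (ambient.y + lightColour.y * diffuse),
            texel.z * (ambient.z + lightColour.z * diffuse)};
}
```

The shaded day colour can then be blended with the night texture exactly as before; only the day term changes.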

Regards

Admiral


OK, but if I'm passing a color to each vertex in the vertex shader, won't it be overwritten by the fragment color in the fragment shader?

Quote:if I'm passing a color to each vertex in the vertex shader, won't it be overwritten by the fragment color in the fragment shader?

Not if your fragment shader uses its colour input.

It is indeed possible to write a pixel/fragment shader that outputs a colour regardless of what the vertex data say, but the default FFP-equivalent pixel shader modulates (multiplies component-wise) its sampled colour with the vertex-interpolated colour. This is how textured geometry can be lit per-vertex. Just tell the pixel shader to accept a COLOR input and multiply the sampled float4 by it.
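The modulation described above is a plain component-wise product. The C++ below mirrors it on the CPU; `float4` and `modulate` are hypothetical stand-ins, and the HLSL in the comment is only a rough ps_2_0-style sketch with a hypothetical sampler name.

```cpp
#include <cassert>

struct float4 { float r, g, b, a; };

// Component-wise product, the CPU equivalent of HLSL's float4 * float4.
// In a pixel shader this would be roughly:
//   float4 main(float2 uv : TEXCOORD0, float4 col : COLOR0) : COLOR
//   { return tex2D(dayMap, uv) * col; }   // dayMap: hypothetical sampler
float4 modulate(const float4& texel, const float4& vertexColour) {
    return {texel.r * vertexColour.r, texel.g * vertexColour.g,
            texel.b * vertexColour.b, texel.a * vertexColour.a};
}
```

A white vertex colour leaves the texel unchanged, while a darker one (e.g. the per-vertex diffuse result) dims it, which is how per-vertex lighting survives into the fragment stage.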

Regards

Admiral
