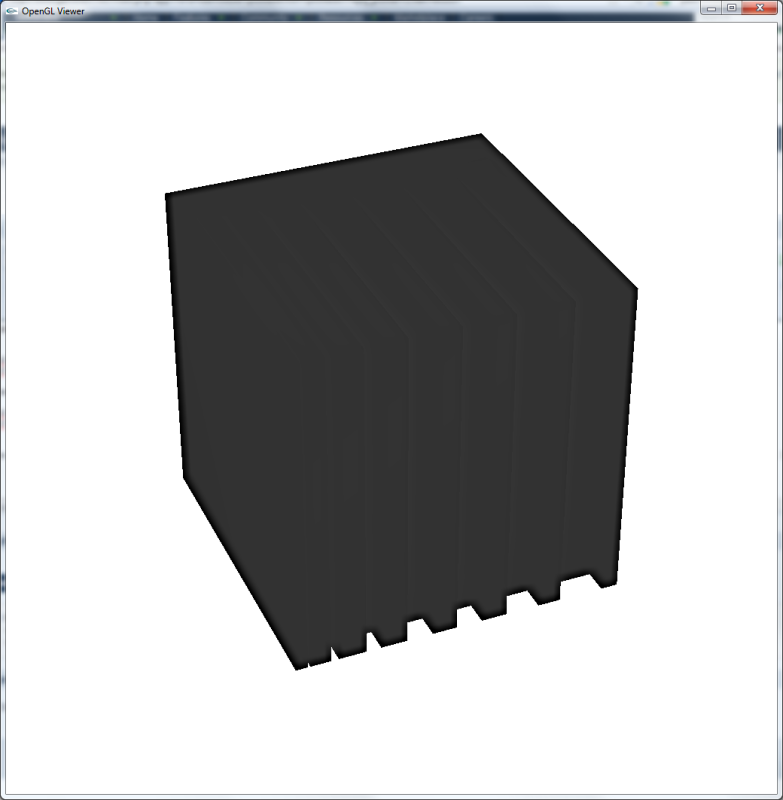

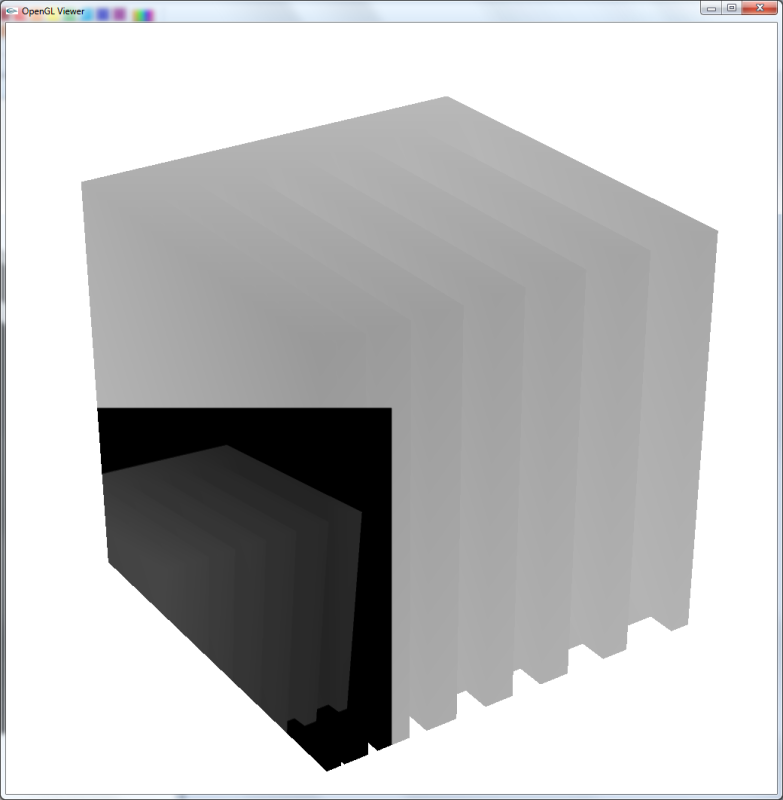

That all seems to be working well, so I moved on to unsharp masking the depth texture (the next step). I wrote a shader to blur the depth texture and display the blurred version, but I'm getting a weird artifact that I can't track down. The render only appears in the lower left quadrant:

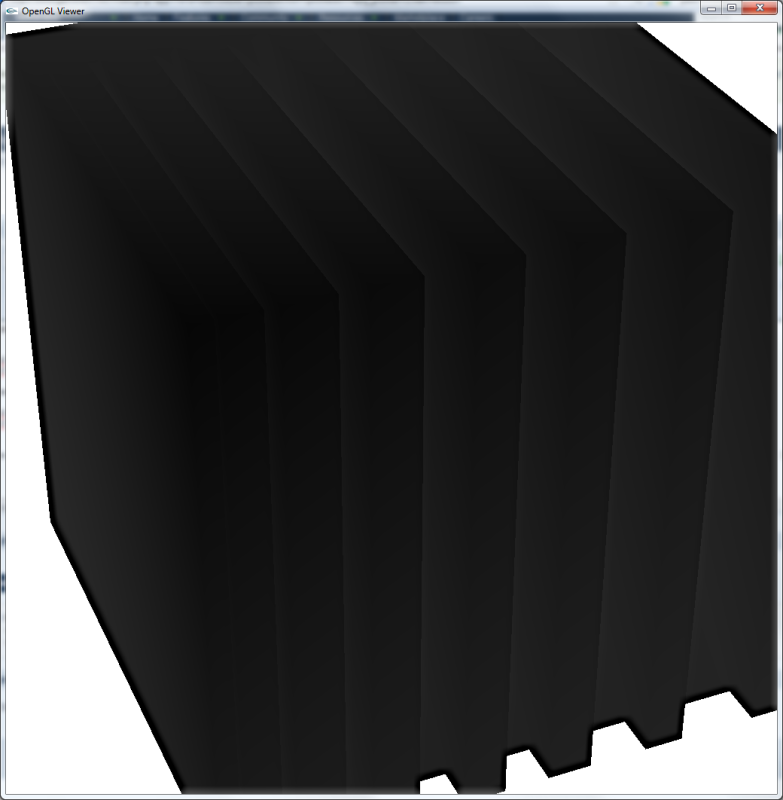

Here's the fragment shader code for the first depth image (the second image):

    uniform sampler2D depthValues;

    void main()
    {
        gl_FragColor = texture2D(depthValues, gl_FragCoord.xy/1024.0);
    }

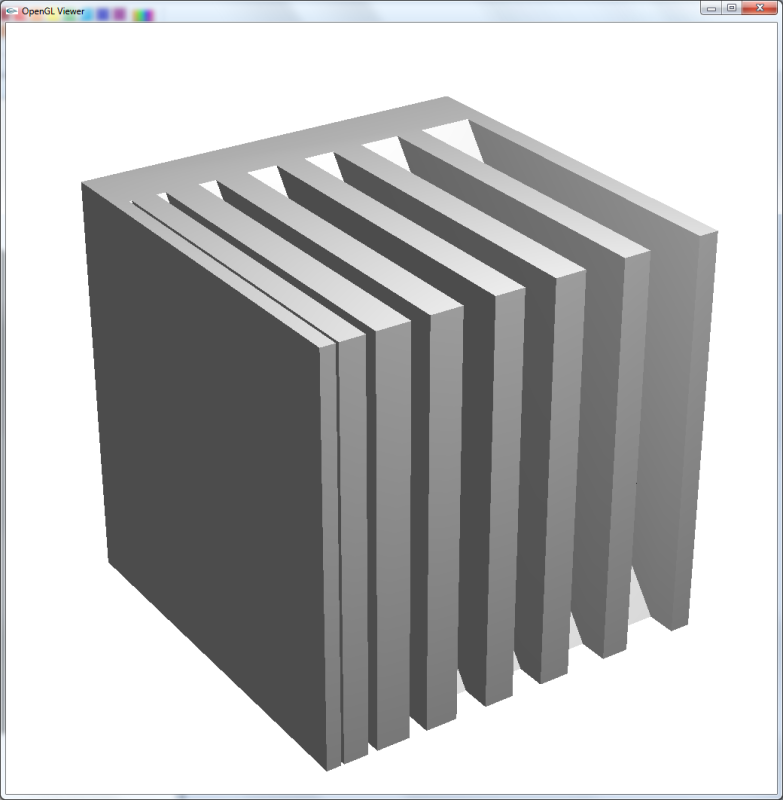

And here's the fragment shader code for the shader that's misbehaving (third image):

    uniform sampler2D depthValues;

    float[25] gauss = float[25] (0.0030, 0.0133, 0.0219, 0.0133, 0.0030,
                                 0.0133, 0.0596, 0.0983, 0.0596, 0.0133,
                                 0.0219, 0.0983, 0.1621, 0.0983, 0.0219,
                                 0.0133, 0.0596, 0.0983, 0.0596, 0.0133,
                                 0.0030, 0.0133, 0.0219, 0.0133, 0.0030);

    const float kernelDimension = 5.0;
    const float screenDimension = 1024.0;

    void main()
    {
        vec4 sum = vec4(0,0,0,0);
        int iter = 0;
        int i = int(gl_FragCoord.x);
        int j = int(gl_FragCoord.y);
        int maxX = i + int(floor(kernelDimension/2.0));
        int maxY = j + int(floor(kernelDimension/2.0));
        float sampX;
        float sampY;

        for (int x = i - int(floor(kernelDimension/2.0)); x < maxX; x++)
        {
            for (int y = j - int(floor(kernelDimension/2.0)); y < maxY; y++, iter++)
            {
                sampX = (gl_FragCoord.x + float(x)) / screenDimension;
                sampY = (gl_FragCoord.y + float(y)) / screenDimension;

                if (sampX >= 0.0 && sampX <= 1.0 && sampY >= 0.0 && sampY <= 1.0)
                {
                    sum += texture2D(depthValues, vec2(sampX, sampY)) * gauss[iter];
                }
            }
        }

        gl_FragColor = texture2D(depthValues, gl_FragCoord.xy / screenDimension) - sum;
    }

The second shader is meant to blur the depth texture with a 5x5 Gaussian kernel (`gauss`) and subtract the blurred value from the original sample. Does anyone know why the output is confined to the lower left quadrant, and how I can fix it?
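One way to check the loop's arithmetic without a GPU is to trace the texture coordinates it generates for a single fragment. The Python sketch below mirrors the second shader's loops and bounds check line for line. The function name `sample_coords` and the test fragment positions are made up for illustration, and it assumes a 1024x1024 render target, as in the shader.

```python
import math

KERNEL_DIMENSION = 5.0    # same value as kernelDimension in the shader
SCREEN_DIMENSION = 1024.0 # same value as screenDimension in the shader


def sample_coords(frag_x, frag_y):
    """Return (iter, sampX, sampY) for every tap that passes the bounds check.

    Hypothetical helper: it reproduces the shader's loop bounds and
    coordinate arithmetic exactly as written, without sampling a texture.
    """
    half = int(math.floor(KERNEL_DIMENSION / 2.0))
    i, j = int(frag_x), int(frag_y)
    max_x, max_y = i + half, j + half

    taps = []
    it = 0  # plays the role of `iter`, the index into gauss[]
    for x in range(i - half, max_x):        # x < maxX, as in the shader
        for y in range(j - half, max_y):    # y < maxY, as in the shader
            samp_x = (frag_x + float(x)) / SCREEN_DIMENSION
            samp_y = (frag_y + float(y)) / SCREEN_DIMENSION
            if 0.0 <= samp_x <= 1.0 and 0.0 <= samp_y <= 1.0:
                taps.append((it, samp_x, samp_y))
            it += 1  # incremented every inner iteration, like `y++, iter++`
    return taps


taps = sample_coords(100.5, 200.5)
print(len(taps))                      # 16
print(taps[0])                        # first tap: index and coordinates
print(sample_coords(600.5, 600.5))    # []
```

For a fragment at (100.5, 200.5) this yields 16 taps rather than 25, because the `<` bounds stop one short of `maxX` and `maxY`. The coordinates are also roughly double the fragment's own, since `x` and `y` already start from `i` and `j` before `gl_FragCoord` is added again. Past about pixel 512 on either axis every tap fails the bounds check, so `sum` stays zero there. That may be related to the quadrant artifact, though this trace only checks the arithmetic, not the actual render.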