I'm currently trying to localize my IBL probes, but I've run into some issues that I couldn't find answers to in any paper or elsewhere.

1. How do I localize my diffuse IBL (irradiance map)? The same method I use for specular doesn't really seem to work.

Localizing the lookup doesn't really seem to work for the light bleed.

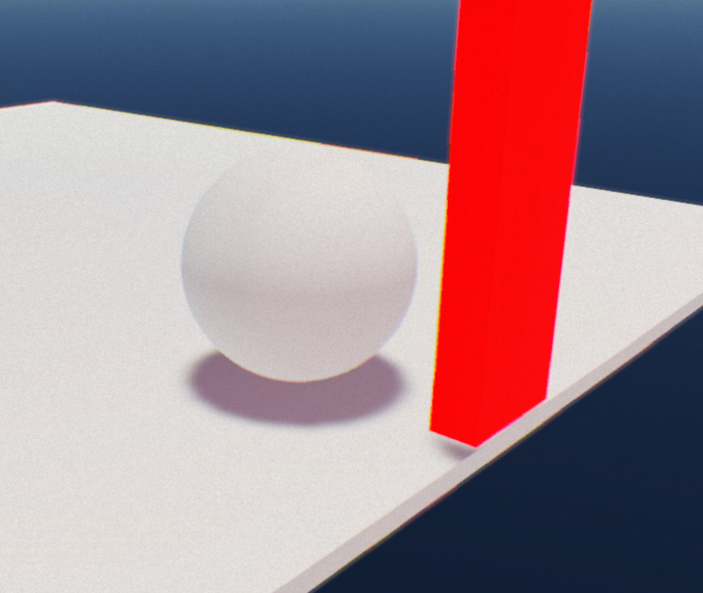

As you can see, the light bleed is spread over a large portion of the plane even though the rectangular object is very thin.

Also, the reddish tint doesn't get stronger as the sphere is moved closer to the red object.

If I move the sphere further to the side of the thin red object, the red reflection is still visible, so there's no real locality to it.
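For reference, an irradiance map stores, for every normal direction $N$, the cosine-weighted integral of the radiance $L$ captured at the probe position over the hemisphere around $N$:

$$
E(N) = \int_{\Omega} L(\omega)\,\max(0,\ N \cdot \omega)\,d\omega
$$

Each texel therefore already blends light from a whole hemisphere rather than from a single direction. That may be one reason the red bleed looks wide and weak: correcting the lookup direction changes which texel is read, but every texel is still a hemisphere average seen from the probe centre.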

2. How do I handle objects that aren't rectangular, or objects that don't sit exactly on the faces of the AABB I intersect against? (Or am I just missing a line or two to do that?)

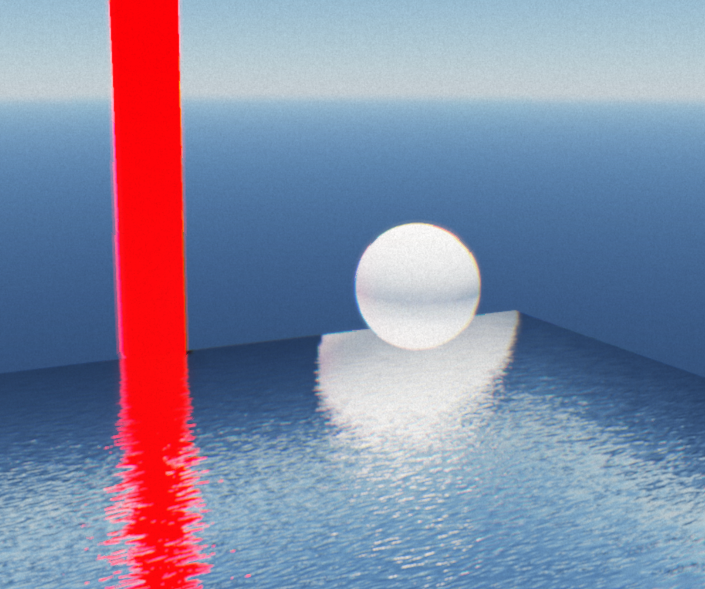

As you can see, the reflection of the rectangular red object works perfectly (but only because it sits exactly on the edge of the AABB).

If the object is a sphere, or is moved closer to the probe (so it is no longer at the edge), the reflection is still completely flat and projected onto the side of the AABB.
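The flat look matches what the box projection in this post computes. Writing the shading point as $P$, the reflection vector as $R$, the box corners as $B_{\min}$ and $B_{\max}$, and the probe position as $C$, the localized lookup direction $R'$ is:

$$
t = \min_{i \in \{x,y,z\}} \max\!\left(\frac{B_{\max,i} - P_i}{R_i},\ \frac{B_{\min,i} - P_i}{R_i}\right),
\qquad
R' = P + t\,R - C
$$

The only scene geometry in these equations is the box $[B_{\min}, B_{\max}]$, so every reflected point is assumed to lie on one of its six faces. Content that is not on a face, such as a sphere or an object closer to the probe, is reprojected as if it were painted onto the face the ray exits through.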

Here's the code snippet I use to localize my probe reflection vector:

```hlsl
float3 LocalizeReflectionVector(in float3 R, in float3 PosWS,
                                in float3 AABB_Max, in float3 AABB_Min,
                                in float3 ProbePosition)
{
    // Find the ray intersection with each pair of box planes
    float3 FirstPlaneIntersect = (AABB_Max - PosWS) / R;
    float3 SecondPlaneIntersect = (AABB_Min - PosWS) / R;

    // Get the furthest of these intersections along the ray (the exit plane per axis)
    float3 FurthestPlane = max(FirstPlaneIntersect, SecondPlaneIntersect);

    // Find the closest far intersection (renamed from "distance" to avoid
    // clashing with the HLSL intrinsic of the same name)
    float DistanceToBox = min(min(FurthestPlane.x, FurthestPlane.y), FurthestPlane.z);

    // Get the intersection position
    float3 IntersectPositionWS = PosWS + DistanceToBox * R;

    // Direction from the probe to the hit point, used for the cubemap lookup
    return IntersectPositionWS - ProbePosition;
}
```
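HLSL can't be run outside a shader, so here is a minimal C++ mirror of `LocalizeReflectionVector` that can be checked on the CPU. The `float3` struct, its operators and the `max3` helper are hand-written stand-ins for the HLSL built-ins (assumptions of this sketch, not a real library). The `assert` that the shading point lies inside the box is an extra debug check added here, because the slab math only yields the exit point when the ray starts inside the AABB.

```cpp
#include <algorithm>
#include <cassert>

// Hand-written stand-in for HLSL's float3 (assumption of this sketch).
struct float3 {
    float x, y, z;
};

float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator-(float3 a, float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float3 operator*(float s, float3 v) { return {s * v.x, s * v.y, s * v.z}; }
// Component-wise division, as HLSL does for float3 / float3.
float3 operator/(float3 a, float3 b) { return {a.x / b.x, a.y / b.y, a.z / b.z}; }

// Hypothetical helper standing in for HLSL's component-wise max().
float3 max3(float3 a, float3 b) {
    return {std::max(a.x, b.x), std::max(a.y, b.y), std::max(a.z, b.z)};
}

float3 LocalizeReflectionVector(float3 R, float3 PosWS,
                                float3 AABB_Max, float3 AABB_Min,
                                float3 ProbePosition) {
    // Debug check added for this sketch: the slab math below finds the exit
    // point only when the ray starts inside the box.
    assert(PosWS.x >= AABB_Min.x && PosWS.x <= AABB_Max.x &&
           PosWS.y >= AABB_Min.y && PosWS.y <= AABB_Max.y &&
           PosWS.z >= AABB_Min.z && PosWS.z <= AABB_Max.z);

    // Ray parameter t at which the ray reaches each of the six box planes.
    float3 FirstPlaneIntersect = (AABB_Max - PosWS) / R;
    float3 SecondPlaneIntersect = (AABB_Min - PosWS) / R;

    // Per axis, the larger t is the plane the ray exits through.
    float3 FurthestPlane = max3(FirstPlaneIntersect, SecondPlaneIntersect);

    // The ray leaves the box through the nearest of the three exit planes.
    float DistanceToBox =
        std::min(std::min(FurthestPlane.x, FurthestPlane.y), FurthestPlane.z);

    // Hit point on the box, then the probe-relative direction for the lookup.
    float3 IntersectPositionWS = PosWS + DistanceToBox * R;
    return IntersectPositionWS - ProbePosition;
}
```

For example, with the box spanning `[-1, 1]` on every axis, the probe at the origin, `PosWS = (0.5, 0, 0)` and `R = (1, 0.5, 0.25)`, the ray leaves through the +X face at `t = 0.5` and the function returns `(1, 0.25, 0.125)`. The same `R` traced from the probe centre returns `(1, 0.5, 0.25)`; that difference is the parallax shift the method is meant to produce. The example inputs avoid zero components in `R`, because in C++ the result of dividing by zero depends on the platform's IEEE-754 behaviour.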