I'm facing a problem in my framework right now that has to do with storing and reading assets in my own format, in order to build a data-driven asset pipeline that won't feel bad to work with. Loading models by file name has far overstayed its welcome. Every time, I go through the whole preprocessing setup and set materials and parameters in code, wasting CPU time and disk I/O whenever I change anything, recompile and restart… you get the point. I got pretty far building a lot of UI for the editor, a custom file format to define my project and all that, but now I'm stuck.

I'm trying to serialize my assets to a binary format, and to have an editor that lazy-loads them when I open one for editing. Think of something similar to the UE4 Content Browser, but at the scope of a single (confused) developer. Some work on serialization is shown below (no versioning or endianness code yet; this is still an early prototype):

```cpp
#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <memory>
#include <utility>
#include <vector>

using UINT = unsigned int; // normally from <windows.h>; aliased so the snippet stands alone

struct MemChunk
{
    std::unique_ptr<char[]> _ptr;
    UINT _size;

    MemChunk() : _size(0) {}
    MemChunk(std::unique_ptr<char[]> ptr, UINT size) : _ptr(std::move(ptr)), _size(size) {}
    // make_unique<char[]> value-initializes, so every byte starts as zero
    explicit MemChunk(UINT size) : _ptr(std::make_unique<char[]>(size)), _size(size) {}

    // Copies one trivially copyable value and advances offset
    template <typename Datum>
    void add(const Datum* datum, UINT& offset)
    {
        write(datum, sizeof(Datum), offset);
    }

    // Copies the contents of a vector of trivially copyable elements
    template <typename VecData>
    void add(const std::vector<VecData>& data, UINT& offset)
    {
        write(data.data(), static_cast<UINT>(sizeof(VecData) * data.size()), offset);
    }

    void write(const void* data, UINT cpySize, UINT& offset)
    {
        UINT newSize = offset + cpySize;
        if (newSize > _size)
        {
            // Report the overflow before bailing out
            std::fprintf(stderr,
                "Serializing overflow. Available: %u\n"
                "Attempted to add: %u\n"
                "For a total of: %u\n",
                _size, cpySize, newSize);
            std::exit(7645); // Let's pretend I actually have an error code table
        }
        std::memcpy(_ptr.get() + offset, data, cpySize);
        offset = newSize;
    }

    bool isFull(UINT offset) const
    {
        return offset == _size;
    }
};

class SerializableAsset
{
public:
    virtual ~SerializableAsset() = default; // safe deletion through a base pointer
    virtual MemChunk Serialize() = 0;
};
```

MemChunk is a small stack allocator of sorts: a byte array wrapped in a unique_ptr. Every serializable asset class (currently model, mesh, texture, animation, skeleton and material) overrides the Serialize method of SerializableAsset. Inheritance isn't strictly necessary here, but I think it's a nice interface to have, since it expresses the intent that the class can be serialized. You might be wondering “where's your read method?”, and you'd be right: I don't have one yet. Now, here's the code above applied to a concrete type, SkeletalModel:

```cpp
// Member of SkeletalModel, which derives from SerializableAsset
MemChunk Serialize() override
{
    constexpr UINT kUint = sizeof(UINT);

    UINT numMeshes = static_cast<UINT>(_meshes.size());
    UINT numAnims = static_cast<UINT>(_anims.size());
    UINT skelIndex = 0u;
    UINT meshIndex = 0u; // placeholder index, written as 0 for every mesh for now
    UINT animIndex = 0u; // placeholder index, written as 0 for every animation for now

    // 3 UINTs, plus 64 extra bytes that nothing writes yet (they stay zeroed,
    // so isFull(offset) is false after this function)
    UINT headerSize = 3 * kUint + 64;
    UINT dataSize = (numMeshes + numAnims) * kUint;
    UINT totalSize = headerSize + dataSize;
    UINT offset = 0u;

    MemChunk byterinos(totalSize);
    byterinos.add(&numMeshes, offset);
    byterinos.add(&numAnims, offset);
    byterinos.add(&skelIndex, offset);

    for (UINT i = 0; i < numMeshes; ++i)
        byterinos.add(&meshIndex, offset);
    for (UINT i = 0; i < numAnims; ++i)
        byterinos.add(&animIndex, offset);

    return byterinos;
}
```

How do people handle this? I've read some threads here, but mostly it seems like there's some sort of array for every object type that you can index into. I can't wrap my head around this. In a game, allocating each type in its own array could make sense, and I guess it's good for preventing fragmentation and all that (I'm aware of, and have used, memory pools, so I could get behind this), however…

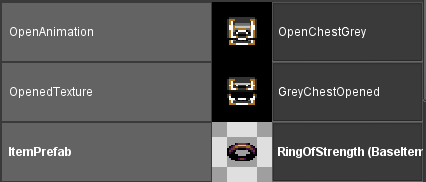

If we are in an editor environment, objects aren't even serialized yet; they might not be available at all, since we are just importing the whole bunch. Serializing components first seems like a solution, so that's OK. But how do you refer to them: by asset name, or by some ID (hash, incremental…)? I feel names might be the best way during editing.
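
One alternative to names, sketched here under my own assumptions: give each asset an opaque 64-bit ID once, at import time, store it inside the asset file, and treat the name as editable metadata. Unity, for example, keeps a GUID per asset in its `.meta` files. `AssetId`, `makeAssetId` and `AssetHeader` below are hypothetical names, and a random value is used instead of a name hash so that renaming never changes an asset's identity.

```cpp
#include <cassert>
#include <cstdint>
#include <random>
#include <string>
#include <utility>

// Hypothetical stable asset identifier; 0 is reserved to mean "no asset".
using AssetId = std::uint64_t;

// Generates a random, non-zero 64-bit ID. Meant to be called once at import;
// the result is saved with the asset and never recomputed.
AssetId makeAssetId()
{
    static std::mt19937_64 rng{std::random_device{}()};
    AssetId id = 0;
    while (id == 0)
        id = rng();
    return id;
}

// Hypothetical per-asset header: the ID is the identity, the name is a label.
struct AssetHeader
{
    AssetId id = 0;
    std::string name;

    void rename(std::string newName)
    {
        assert(!newName.empty() && "asset names must not be empty");
        name = std::move(newName); // id is untouched, so references stay valid
    }
};
```

Other assets would then store `AssetId` values rather than names, so names only matter for display and search in the editor.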

I actually started doing this with higher-level static functions that could order all assets and move things around, but the per-class approach seemed cleaner (after all, serializing private members is more natural that way). Now I think I still need something higher-level to serialize such “aggregate” assets, which depend on other assets, and to fix up these indices after the fact.
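
For the “aggregate” case, one way to avoid a fix-up pass, assuming every asset already has a stable 64-bit ID: the aggregate stores the IDs of its dependencies instead of the positional `meshIndex`/`animIndex` placeholders, so it can be written before or after them. `ByteWriter`, `ByteReader` and `SkeletalModelDesc` are hypothetical stand-ins (a growable buffer rather than the fixed-size `MemChunk`), and the reader shows what a matching read side could look like. Like the original, it copies raw bytes, so it assumes the same endianness and type sizes on write and read.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <type_traits>
#include <vector>

using AssetId = std::uint64_t; // hypothetical stable asset ID

// Growable output buffer; appends the raw bytes of trivially copyable values.
struct ByteWriter
{
    std::vector<char> bytes;

    template <typename T>
    void add(const T& value)
    {
        static_assert(std::is_trivially_copyable<T>::value, "raw copy needs trivially copyable types");
        const char* p = reinterpret_cast<const char*>(&value);
        bytes.insert(bytes.end(), p, p + sizeof(T));
    }
};

// Read side: values must be read back in the same order they were added.
struct ByteReader
{
    const std::vector<char>& bytes;
    std::size_t offset = 0;

    template <typename T>
    T get()
    {
        static_assert(std::is_trivially_copyable<T>::value, "raw copy needs trivially copyable types");
        if (offset + sizeof(T) > bytes.size())
            throw std::out_of_range("asset data is truncated");
        T value;
        std::memcpy(&value, bytes.data() + offset, sizeof(T));
        offset += sizeof(T);
        return value;
    }
};

// Hypothetical aggregate asset that refers to its dependencies by ID.
struct SkeletalModelDesc
{
    AssetId skeleton = 0;
    std::vector<AssetId> meshes;
    std::vector<AssetId> anims;

    std::vector<char> serialize() const
    {
        ByteWriter w;
        w.add(static_cast<std::uint32_t>(meshes.size()));
        w.add(static_cast<std::uint32_t>(anims.size()));
        w.add(skeleton);
        for (AssetId id : meshes) w.add(id);
        for (AssetId id : anims) w.add(id);
        return w.bytes;
    }

    static SkeletalModelDesc deserialize(const std::vector<char>& data)
    {
        ByteReader r{data};
        SkeletalModelDesc model;
        const auto numMeshes = r.get<std::uint32_t>();
        const auto numAnims = r.get<std::uint32_t>();
        model.skeleton = r.get<AssetId>();
        for (std::uint32_t i = 0; i < numMeshes; ++i) model.meshes.push_back(r.get<AssetId>());
        for (std::uint32_t i = 0; i < numAnims; ++i) model.anims.push_back(r.get<AssetId>());
        assert(r.offset == data.size() && "unexpected trailing bytes");
        return model;
    }
};
```

Resolving an ID to an actual mesh or animation then becomes a lookup at load time rather than an index patched in after serialization.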

My main problem is that I'm not sure how to build the system that holds all these assets in a lazy-load limbo, where the engine is aware of them but doesn't necessarily have them in memory. A database of project assets looks like a great fit for this, but do people actually use one? If I knew what kind of system editors generally use to access other assets, I feel this would be a lot easier to understand. Do I give assets names that don't depend on the file path (I really would like references not to break when files move, even if moves have to go through the editor), and keep a table of asset name → path pairs? And do you think my serialization function is too bare and will need more parameters? It feels a little too simple the way it is, but I don't have enough experience to judge yet.
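
To make the table idea concrete, here is a minimal sketch of an in-memory ID → path registry with lazy loading, assuming stable 64-bit IDs as keys rather than names. `AssetRegistry` and its methods are hypothetical, and the `Loader` callback stands in for whatever actually reads and deserializes a file, which keeps the sketch independent of the file system. On disk, the table could be a plain file saved with the project or a small database; the lookup logic would be the same.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <memory>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

using AssetId = std::uint64_t;   // hypothetical stable ID, 0 = "no asset"
using Bytes = std::vector<char>; // raw asset data as read from disk

class AssetRegistry
{
public:
    using Loader = std::function<Bytes(const std::string& path)>;

    explicit AssetRegistry(Loader loader) : _loader(std::move(loader)) {}

    // The editor now knows the asset exists, but nothing is read yet.
    void registerAsset(AssetId id, std::string name, std::string path)
    {
        assert(id != 0 && "0 is reserved for 'no asset'");
        Entry& e = _entries[id];
        e.name = std::move(name);
        e.path = std::move(path);
        e.data.reset();
    }

    // Moving a file only updates the table; IDs held elsewhere stay valid.
    void relocate(AssetId id, std::string newPath) { _entries.at(id).path = std::move(newPath); }

    bool isLoaded(AssetId id) const { return _entries.at(id).data != nullptr; }

    // Lazy load: call the loader on first access, then serve the cached copy.
    const Bytes& get(AssetId id)
    {
        Entry& e = _entries.at(id);
        if (!e.data)
            e.data = std::make_unique<Bytes>(_loader(e.path));
        return *e.data;
    }

    // Drops the cached data; the entry itself stays registered.
    void unload(AssetId id) { _entries.at(id).data.reset(); }

private:
    struct Entry
    {
        std::string name;
        std::string path;
        std::unique_ptr<Bytes> data; // null until first requested
    };

    Loader _loader;
    std::unordered_map<AssetId, Entry> _entries;
};
```

Note that `unload` invalidates any reference previously returned by `get`; a real editor would probably hand out reference-counted handles instead.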