Anybody working on metaverse stuff? Defined, minimally, as a shared 3D world with substantial user-created content.

Anybody doing real metaverse stuff?

@undefined That's my eventual goal, although I've mostly been working on the scale issues, and the final game is very far off. I plan to support many planets that are mostly procedurally generated but allow for "overrides". The whole thing is based on smooth voxels, mainly marching cubes plus an extended version that allows for sharp corners. The current code is in C++ and DirectX 11, but I am now debugging a DX12 version with a much improved graphics interface.

Voxel systems seem to scale well. Dual Universe is a good example of what you're talking about.

@undefined My impression is that their planets are actually quite small. That's likely a byproduct of wanting to allow a lot of editing, which means you have to store full terrain data. This isn't a criticism, it's just a design decision.

I'm trying to support Earth-sized planets, and many of them, which means you simply can't store all the data on disk. In my case an unmodified planet takes near zero disk space even while you are standing on it; instead it is generated and LOD-refined as you move around. My last demo is in my blog, but it's pretty crude. My next demo will have some physics. This uses what I call JIT (Just In Time) terrain. The physics geometry is completely separate from the graphics geometry and is generated right around the player and other objects that need collision.
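To make the "near zero disk space plus overrides" idea concrete, here is a minimal sketch. It is not the poster's code: `VoxelKey`, `JitTerrain`, and `proceduralDensity` are hypothetical names, the density function is a trivial stand-in, and the world is flat for brevity. The only thing that would need saving is the sparse override map.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <unordered_map>

// Hypothetical integer voxel coordinate.
struct VoxelKey {
    std::int32_t x, y, z;
};

// Pack three non-negative 21-bit coordinates into one 64-bit map key.
inline std::uint64_t pack(VoxelKey k) {
    assert(k.x >= 0 && k.y >= 0 && k.z >= 0);
    assert(k.x < (1 << 21) && k.y < (1 << 21) && k.z < (1 << 21));
    return (std::uint64_t(k.x) << 42) | (std::uint64_t(k.y) << 21) | std::uint64_t(k.z);
}

// Stand-in procedural density: positive inside terrain, negative in air.
// A real generator would evaluate layered noise here.
inline float proceduralDensity(VoxelKey k) {
    float groundHeight = 64.0f + 8.0f * std::sin(0.05f * k.x) * std::cos(0.05f * k.z);
    return groundHeight - float(k.y);
}

class JitTerrain {
public:
    // Player edits are the only data that has to be persisted.
    void setOverride(VoxelKey k, float density) { overrides_[pack(k)] = density; }

    // Everything else is regenerated whenever it is needed.
    float density(VoxelKey k) const {
        auto it = overrides_.find(pack(k));
        return it != overrides_.end() ? it->second : proceduralDensity(k);
    }

    std::size_t storedVoxels() const { return overrides_.size(); }

private:
    std::unordered_map<std::uint64_t, float> overrides_;
};
```

An untouched `JitTerrain` reports `storedVoxels() == 0` no matter how much of it has been queried; storage grows only with edits.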

If you want big planets, that's a way to do it.

You might look at Nanite in UE5. Nanite represents meshes in such a way that a mesh can contain internal instances of the same submesh, repeated many times. So it's not the size of the area covered by the mesh that matters, it's the total amount of unique information. And level of detail is local; you can have a huge mesh but only the near parts are at high detail. If you need planet-sized terrain, this might be useful.

Nanite has a file format, and in time there will probably be non-Epic tools for editing it and displaying it. Modders will need them.

@undefined One problem is that UE5 doesn't yet support 64-bit coordinates. It would also need camera-centric rendering to go with it on the GPU. That's probably why Dual Universe used Unigine.

Right, a planet sized Nanite mesh is not going to work. Even more than a few hundred meters will have 32-bit float problems, since you're allowed to zoom way in on a Nanite mesh.
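The size of the 32-bit float problem can be measured directly. This small sketch (the helper name `floatSpacingAt` is made up for illustration) returns the gap between a coordinate and the next representable float, which is the smallest position step you can express at that distance from the origin.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Distance from x to the next representable 32-bit float above it.
inline float floatSpacingAt(float x) {
    assert(std::isfinite(x) && x >= 0.0f);
    return std::nextafter(x, std::numeric_limits<float>::infinity()) - x;
}
```

With coordinates in metres, `floatSpacingAt(500.0f)` is 2^-15 m (about 0.03 mm), while `floatSpacingAt(6371000.0f)`, roughly one Earth radius, is 0.5 m. Whether the former is already a problem depends on how far in you zoom; the latter clearly is.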

Nagle said:

Right, a planet sized Nanite mesh is not going to work.

Well, you could subdivide your mesh into smaller chunks, where each chunk has enough precision to represent its detail. Then only the transforms would need higher precision, not the meshes.
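A rough sketch of that split, combined with the camera-centric rendering mentioned earlier: chunk origins are kept in double precision, vertices are small floats local to their chunk, and the subtraction against the camera happens in double before narrowing to float. All type and function names here are hypothetical, and a real renderer would fold this into its transform matrices.

```cpp
#include <cassert>
#include <cmath>

struct Vec3d { double x, y, z; };
struct Vec3f { float x, y, z; };

// Hypothetical chunk: a double-precision origin plus a bound on local vertex offsets.
struct Chunk {
    Vec3d origin;      // world-space centre in metres
    float halfExtent;  // vertices stay within +/- halfExtent of the origin
};

// Subtract in double first, then narrow to float. The result is small near the
// camera, so float rounding error is small exactly where it would be visible.
inline Vec3f chunkOriginRelativeToCamera(const Chunk& c, const Vec3d& camera) {
    return Vec3f{static_cast<float>(c.origin.x - camera.x),
                 static_cast<float>(c.origin.y - camera.y),
                 static_cast<float>(c.origin.z - camera.z)};
}

// Camera-relative position of one chunk-local vertex.
inline Vec3f vertexRelativeToCamera(const Chunk& c, const Vec3f& local, const Vec3d& camera) {
    assert(std::fabs(local.x) <= c.halfExtent);
    assert(std::fabs(local.y) <= c.halfExtent);
    assert(std::fabs(local.z) <= c.halfExtent);
    const Vec3f offset = chunkOriginRelativeToCamera(c, camera);
    return Vec3f{offset.x + local.x, offset.y + local.y, offset.z + local.z};
}
```

The mesh data itself never sees a planet-scale number, so the same float vertex buffer works equally well anywhere on the planet.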

But the problem with creating large planets or landscapes is more likely the compromised visuals that come from composing your scene from a limited set of meshes.

For their demos they used scans, but can you assemble those few scans to blend different biomes, whole mountain ranges, landscapes, planets? The repetition of instances becomes obvious, and the patchwork also fails to capture natural, seamless flows.

I'm not saying it doesn't work; pretty much every current game works by kitbashing instances. But the increased high-frequency detail shown in the UE5 demos may make the tricks and limitations more obvious than with low-poly models. Their demos only showed very uniform landscapes. Detailed, but the same stuff everywhere.

The other option - generating worlds procedurally on the client can solve this. No storage problem, so we can create any detail on the fly, without constraints to deal with repetition and seams.

But there is no way to generate pleasing content this way. The simulation times are too long to allow this. So we use noise functions instead of erosion, etc. And what we get looks like No Man's Sky, but not really good or interesting.

I think the solution would be to generate the worlds offline, so simulation costs are acceptable.

But how to solve the storage problem? It really seems storage is the hardest limit we're facing on our crusade to make worlds bigger and more detailed.

Maybe we can still use instancing in the form of material samples, similar to textures but somehow volumetric.

Then, some background task on the client generates high detail from those samples and a coarse definition of simulated data.

I don't know yet how to do this exactly. Related examples are texture synthesis and machine learning to generate landscapes from samples.

There must be a way to do procedural generation right…

(Has nothing to do with metaverse ofc.)

JoeJ said:

The other option - generating worlds procedurally on the client can solve this. No storage problem, so we can create any detail on the fly, without constraints to deal with repetition and seams.

But there is no way to generate pleasing content this way. The simulation times are too long to allow this. So we use noise functions instead of erosion, etc. And what we get looks like No Man's Sky, but not really good or interesting.

The devil is in the details. You can do a LOT with noise and other functions if you combine them in the right ways. You don't have to use the same function everywhere on a planet. You can blend one into the next for various regions.
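As an illustration of blending one function into the next, here is a small sketch using plain value noise and fBm. Every function, constant, and frequency below is an arbitrary assumption chosen for the example, not anything from an actual engine: a very low frequency mask picks the region, and a smoothstep over the mask avoids a hard seam between the two height functions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Integer hash mapped to [0, 1). Any decent hash works; this is a stand-in.
inline float hash01(std::int32_t x, std::int32_t y) {
    std::uint32_t h = std::uint32_t(x) * 374761393u + std::uint32_t(y) * 668265263u;
    h = (h ^ (h >> 13)) * 1274126177u;
    h ^= h >> 16;
    return float(h >> 8) * (1.0f / 16777216.0f);
}

inline float smooth(float t) { return t * t * (3.0f - 2.0f * t); }

// Smoothly interpolated value noise, roughly in [0, 1].
inline float valueNoise(float x, float y) {
    const float fx = std::floor(x), fy = std::floor(y);
    const auto ix = std::int32_t(fx), iy = std::int32_t(fy);
    const float tx = smooth(x - fx), ty = smooth(y - fy);
    const float a = hash01(ix, iy), b = hash01(ix + 1, iy);
    const float c = hash01(ix, iy + 1), d = hash01(ix + 1, iy + 1);
    const float top = a + (b - a) * tx, bottom = c + (d - c) * tx;
    return top + (bottom - top) * ty;
}

// Fractal Brownian motion: octaves at doubling frequency and halving amplitude.
inline float fbm(float x, float y, int octaves) {
    assert(octaves > 0);
    float sum = 0.0f, amp = 0.5f, norm = 0.0f;
    for (int i = 0; i < octaves; ++i) {
        sum += amp * valueNoise(x, y);
        norm += amp;
        x *= 2.0f; y *= 2.0f; amp *= 0.5f;
    }
    return sum / norm;
}

// Two hypothetical regional height functions, in metres.
inline float plainsHeight(float x, float y) { return 20.0f * fbm(x * 0.01f, y * 0.01f, 3); }
inline float mountainHeight(float x, float y) {
    const float ridged = 1.0f - std::fabs(2.0f * fbm(x * 0.004f, y * 0.004f, 6) - 1.0f);
    return 1500.0f * ridged * ridged;
}

// Blend the two regions with a low-frequency mask passed through smoothstep.
inline float terrainHeight(float x, float y) {
    const float mask = fbm(x * 0.0005f + 100.0f, y * 0.0005f + 100.0f, 2);
    const float t = std::min(std::max((mask - 0.4f) / 0.2f, 0.0f), 1.0f);
    const float w = smooth(t);
    return (1.0f - w) * plainsHeight(x, y) + w * mountainHeight(x, y);
}
```

The same pattern extends to more than two regions by normalising several mask weights, and the mask itself could be driven by latitude or altitude instead of noise.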

One problem with standard voxels is that, IMO, they aren't particularly good for planets. This is for a couple of reasons. The obvious one is that your voxels will be oriented in different directions depending on what part of the planet you are on. This can affect how your geometry looks, and it will certainly affect your meshing. This isn't a killer, however. A lot of people might not even notice it unless they turned on wireframe, which would make it obvious.

The bigger problem relates to how planets are constructed. For the most part they are height-mapped spheres. Beyond some distance you don't see caves, overhangs and things like that. Therefore you can generate much of a planet with height-mapping functions, and only worry about caves and stuff when you are zoomed in. On a flat world this is great, since your voxels will always be oriented in the same direction. On a spherical planet, however, you can't assume that, which forces you to do a lot more calculations even for the height-mapped part of the function, since very few voxel edges will be perpendicular to the surface.

This is the reason why for planets I chose prism voxels that all face out from the core. That way I can still cache a lot of values for the height mapped part and avoid doing that calculation on every voxel corner. I can basically do it for an entire column of voxel corners. I have my data structure set up to do this. This could also be made to work with cube topology voxels. However the actual voxels would be way more disordered in places since you are extruding a cube instead of an icosahedron. But it might still work OK.
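One way to read the column caching described above, as a hedged sketch rather than the actual data structure: every corner in a radial column shares the same direction from the core, so a height function that depends only on direction can be evaluated once and reused. `Dir`, `heightAbove`, and `columnDensities` are invented names, and the height function is a cheap stand-in for something expensive.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Dir { double x, y, z; };  // unit vector pointing out from the planet core

// Stand-in for an expensive height function of direction only (metres above baseRadius).
inline double heightAbove(const Dir& d) {
    return 1000.0 * std::sin(8.0 * d.x) * std::cos(8.0 * d.y) + 500.0 * d.z;
}

// Fill the densities for one radial column of voxel corners.
// The height is evaluated once and reused for every corner in the column.
inline std::vector<float> columnDensities(const Dir& d, double baseRadius,
                                          double firstRadius, double step,
                                          std::size_t count) {
    assert(step > 0.0);
    const double surfaceRadius = baseRadius + heightAbove(d);  // cached per column
    std::vector<float> densities(count);
    for (std::size_t i = 0; i < count; ++i) {
        const double r = firstRadius + step * double(i);
        densities[i] = float(surfaceRadius - r);  // > 0 below the surface, < 0 above
    }
    return densities;
}
```

With cube-aligned voxels on a sphere, each corner generally has its own direction, so the height function would have to be re-evaluated per corner. Caves and overhangs would add a true 3D term on top of this cached value when zoomed in.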

Gnollrunner said:

You can do a LOT with noise and other functions if you combine them in the right ways. You don't have to use the same function everywhere on a planet. You can blend one into the next for various regions.

You have infinite options by combining noise, fbm, etc., but because those functions do not interact and lack cause and effect, the outcome cannot resemble nature. To get there, we either have to simulate to imitate nature, or merge natural samples.

Inigo Quilez's latest work on procedural terrain, made from noise-driven SDF primitives:

It's detailed, has structure on multiple scales, but it's not natural. It's still a form of uniform noise and not very interesting.

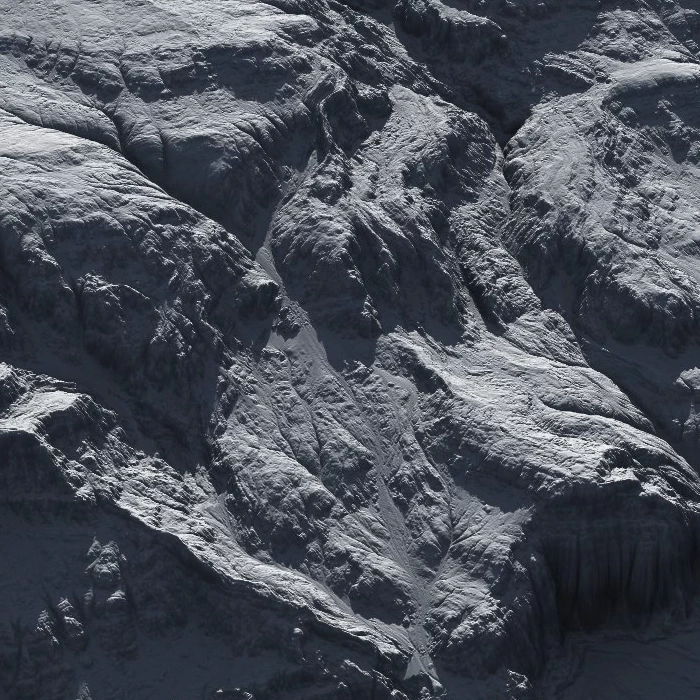

In contrast, an image from the Gaea height map simulator:

Even lacking texture, the sediment flows are natural and interesting to follow.

That's what we want, but the problem is compression. Even a 64k^2 heightmap still gives no close-up detail, so we use tiled textures and triplanar mapping to add it. We may also place instances of rocks on the surface.

But the result is still far from what we saw in the UE5 demos, which show awesome close-up detail but lack interesting structure at larger scales because of the compromises of instancing.
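Some back-of-the-envelope numbers for that heightmap, under assumptions that are mine and not from the thread: 16-bit height samples, no compression, and the map stretched once around an Earth-sized equator of about 40,075 km.

```cpp
#include <cassert>
#include <cstdint>

// Assumed figures, for illustration only.
constexpr std::uint64_t kSide = 65536;          // "64k" samples per side
constexpr std::uint64_t kBytesPerSample = 2;    // 16-bit heights
constexpr double kEquatorMetres = 40075000.0;   // approximate Earth equator

constexpr std::uint64_t heightmapBytes() { return kSide * kSide * kBytesPerSample; }
constexpr double metresPerSample() { return kEquatorMetres / double(kSide); }

// 65536 * 65536 * 2 bytes is exactly 8 GiB before compression.
static_assert(heightmapBytes() == 8ull * 1024 * 1024 * 1024, "8 GiB uncompressed");
```

Under those assumptions, 8 GiB buys roughly one height sample every 611 m along the equator. Covering a smaller region improves the spacing proportionally, but centimetre-scale detail stays many orders of magnitude away.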

So what? Procedural enhancement of such heightmaps? Maybe, but combining noise functions won't cut it, no matter how creative we are at it. Nature shows such causes and effects at all scales.

Ideally we have samples of those natural effects, e.g. a crack in rock, and then we can blend those samples over the surface so the crack becomes a seamless network of many cracks.