Gtadam said:

In my new implementation, all my shapes have a list of vertices, e.g. {v1, v2, v3} for a triangle. I'm a bit confused at the moment. Should the entities still have an X and Y coordinate?

I would rephrase the question as ‘Should (or can) we divide our world into a set of objects?’

Usually the answer is yes, but the larger our models become, the more it turns out that the answer is actually no.

To show the problem, let's consider two functions we might want per object: collision detection and level of detail.

And let's say our model is a static but complex terrain with caves and cliffs.

Suppose we divide our terrain into many convex cells, like Quake did for all the static geometry making up a level.

The problems we might encounter are:

A dynamic object might slide between two convex cells and get stuck, although there is no empty space between them. In the real world this could not happen, because connected matter is not artificially segmented into a set of cells. It is just solid matter, and a dynamic object cannot get inside.

To maintain a high frame rate, we may display distant cells of our terrain at a lower geometric resolution (in a 3D game, of course).

We will then face cracks between neighboring cells that do not have the same level of detail, because their geometry does not match along the shared boundary.

To solve this, we can ensure every cell has a closed geometric boundary around the whole object. So instead of seeing cracks and holes, we now see internal cell boundaries, which is better.

But this also means we can now partially see the inside of objects, and we waste loads of triangles just to show interior segmentation boundaries that should not be visible at all. We might also have problems lighting those interior surfaces, which in the real world would be totally occluded and not lit at all.

So there are some potential technical problems and limitations with the idea of creating entire worlds from ‘small objects’.

The limitations also affect content creation. For example, I can scan some rocks and display them at insane detail in UE5. It's awesome if I look at them close up. But if I look from some distance, at a larger scope, I realize I cannot model global processes such as eroded valleys, landslides, or natural mountain peaks by copy-pasting a set of rocks, however realistic those rocks appear up close. And I realize with a frown: I'm still limited to faking facades and working with smoke and mirrors.

This dilemma applies to 2D games too. And it's likely this dilemma that gives you some subconscious doubts about the idea of creating worlds from ‘objects’.

So it's good to keep that in mind and be aware of it. It is how almost all games work, but we are not totally happy about it.

Gtadam said:

Should I take the center point of the mesh or triangle (centroid) as the X and Y?

It's really up to you. Some options:

Use the center of the object's bounding rectangle. (Or just the top-left corner of the rectangle, whatever you prefer.)

That's probably a good choice, especially if you quantize objects to snap to a grid. E.g. a rock model may occupy exactly 4 x 3 grid cells, but not 1.7 x 3.3 cells. The easy alignment and placement can speed up level creation, and makes some other things easier too.
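As a rough illustration of the bounding-rectangle option, here is a minimal Python sketch. It assumes vertices are plain (x, y) tuples and uses a hypothetical grid size `CELL_SIZE`; all function names are made up for this example. It computes the rectangle, its center, the footprint in whole grid cells, and snaps a position to the nearest grid point.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]

CELL_SIZE = 16.0  # hypothetical grid cell size in world units


def bounding_rect(vertices: List[Vec2]) -> Tuple[Vec2, Vec2]:
    """Return the (min, max) corners of the axis-aligned bounding rectangle."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (min(xs), min(ys)), (max(xs), max(ys))


def bounding_rect_center(vertices: List[Vec2]) -> Vec2:
    """Center of the bounding rectangle, usable as the entity's X and Y."""
    (x0, y0), (x1, y1) = bounding_rect(vertices)
    return ((x0 + x1) / 2.0, (y0 + y1) / 2.0)


def footprint_in_cells(vertices: List[Vec2], cell: float = CELL_SIZE) -> Tuple[int, int]:
    """How many whole grid cells the bounding rectangle covers (rounded up)."""
    (x0, y0), (x1, y1) = bounding_rect(vertices)
    return math.ceil((x1 - x0) / cell), math.ceil((y1 - y0) / cell)


def snap_to_grid(point: Vec2, cell: float = CELL_SIZE) -> Vec2:
    """Move a position to the nearest grid point (halfway cases round up)."""
    return (math.floor(point[0] / cell + 0.5) * cell,
            math.floor(point[1] / cell + 0.5) * cell)


rock = [(0.0, 0.0), (64.0, 0.0), (0.0, 48.0)]
print(bounding_rect_center(rock))   # (32.0, 24.0)
print(footprint_in_cells(rock))     # (4, 3)
print(snap_to_grid((37.0, 21.0)))   # (32.0, 16.0)
```

Snapping the min corner instead of the center works the same way; which one you store is purely a convention.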

Use the area centroid of the object, which is the 2D analogue of the center of mass we would use for 3D rigid bodies.

That may be the better choice if you don't use quantization. E.g. you can draw a spline, and your tools then place rock / soil / grass models along it. This way of modeling became more popular once the tile-based limitations of early hardware were no longer an issue.
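For the area-centroid option, a common way to compute it for a simple (non-self-intersecting) polygon is the shoelace formula. The sketch below is a minimal Python version, again assuming (x, y) tuple vertices; `area_centroid` is a hypothetical name. The L-shaped example shows that the area centroid can differ noticeably from the bounding-rectangle center, which for that shape would be (2.0, 2.0).

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]


def area_centroid(vertices: List[Vec2]) -> Vec2:
    """Area centroid of a simple polygon via the shoelace formula.

    C = 1 / (6A) * sum((p_i + p_{i+1}) * (x_i * y_{i+1} - x_{i+1} * y_i)),
    where A is the signed area. The sign cancels, so either winding works.
    """
    n = len(vertices)
    if n < 3:
        raise ValueError("need at least 3 vertices")
    twice_area = 0.0  # 2A (signed)
    cx = cy = 0.0
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]  # wrap around to close the polygon
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if twice_area == 0.0:
        raise ValueError("degenerate polygon with zero area")
    # 6A == 3 * (2A)
    return (cx / (3.0 * twice_area), cy / (3.0 * twice_area))


print(area_centroid([(0.0, 0.0), (6.0, 0.0), (0.0, 3.0)]))  # (2.0, 1.0)

l_shape = [(0.0, 0.0), (4.0, 0.0), (4.0, 1.0), (1.0, 1.0), (1.0, 4.0), (0.0, 4.0)]
print(tuple(round(c, 3) for c in area_centroid(l_shape)))   # (1.357, 1.357)
```

For a triangle the area centroid equals the average of its three vertices; for general polygons it does not, which is why the formula weights each edge by the signed area it contributes.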

It should not matter much; just pick an option that works for your current goal.

Gtadam said:

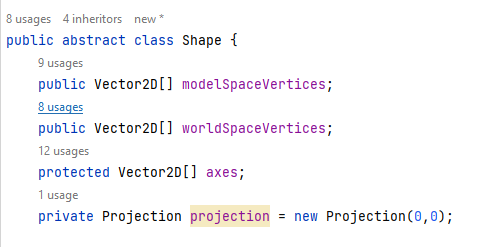

Or should I ignore it and simply manipulate the vertices with a transformation matrix when I set x and y?

A transform per object is likely a good idea. You can then rotate and scale objects freely, and possibly even shear them.
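One way to get both is to store the vertices in local space, relative to the entity's reference point, and keep X and Y as part of a per-object transform. The following is a minimal sketch in plain Python with a row-major 3x3 homogeneous matrix; the `Entity` class, its fields, and the scale, shear, rotate, translate order are assumptions for illustration, not the only valid convention.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec2 = Tuple[float, float]
Mat3 = Tuple[Tuple[float, ...], ...]  # row-major 3x3 homogeneous matrix


def mat_mul(a: Mat3, b: Mat3) -> Mat3:
    """Matrix product a @ b."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3))
        for i in range(3)
    )


def translation(tx: float, ty: float) -> Mat3:
    return ((1.0, 0.0, tx), (0.0, 1.0, ty), (0.0, 0.0, 1.0))


def rotation(angle: float) -> Mat3:
    """Counter-clockwise rotation by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def scaling(sx: float, sy: float) -> Mat3:
    return ((sx, 0.0, 0.0), (0.0, sy, 0.0), (0.0, 0.0, 1.0))


def shear_x(k: float) -> Mat3:
    """Maps (x, y) to (x + k*y, y)."""
    return ((1.0, k, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


@dataclass
class Entity:
    local_vertices: List[Vec2]  # relative to the entity's reference point
    x: float = 0.0              # world position of the reference point
    y: float = 0.0
    angle: float = 0.0          # radians, counter-clockwise
    sx: float = 1.0
    sy: float = 1.0
    shear: float = 0.0

    def model_matrix(self) -> Mat3:
        # T * R * Sh * S: applied to a vertex right to left, so the vertex is
        # scaled, sheared, rotated, and finally moved to (x, y).
        m = translation(self.x, self.y)
        m = mat_mul(m, rotation(self.angle))
        m = mat_mul(m, shear_x(self.shear))
        return mat_mul(m, scaling(self.sx, self.sy))

    def world_vertices(self) -> List[Vec2]:
        m = self.model_matrix()
        return [
            (m[0][0] * vx + m[0][1] * vy + m[0][2],
             m[1][0] * vx + m[1][1] * vy + m[1][2])
            for vx, vy in self.local_vertices
        ]


tri = Entity([(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)], x=10.0, y=5.0, angle=math.pi / 2)
print([(round(wx, 6), round(wy, 6)) for wx, wy in tri.world_vertices()])
# [(10.0, 6.0), (9.0, 5.0), (10.0, 4.0)]
```

With this layout, moving an object only changes `x` and `y`; the local vertex list is never rewritten, so repeated moves do not accumulate floating-point drift in the shape itself.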

You can also go beyond that and allow bending objects along a spline, for example.
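To bend an object along a spline, one simple approach is to treat the local X axis as "position along the curve" and the local Y axis as "offset from the curve". The sketch below uses a quadratic Bézier curve only because it is the shortest spline to write down; the function names and the linear mapping from X to the curve parameter are assumptions. A real tool would more likely use a longer spline (e.g. Catmull-Rom) and an arc-length parameterization so the object does not stretch unevenly.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def bezier_point(p0: Vec2, p1: Vec2, p2: Vec2, t: float) -> Vec2:
    """Point on a quadratic Bezier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    return (u * u * p0[0] + 2.0 * u * t * p1[0] + t * t * p2[0],
            u * u * p0[1] + 2.0 * u * t * p1[1] + t * t * p2[1])


def bezier_normal(p0: Vec2, p1: Vec2, p2: Vec2, t: float) -> Vec2:
    """Unit normal: the curve's tangent rotated 90 degrees counter-clockwise."""
    dx = 2.0 * (1.0 - t) * (p1[0] - p0[0]) + 2.0 * t * (p2[0] - p1[0])
    dy = 2.0 * (1.0 - t) * (p1[1] - p0[1]) + 2.0 * t * (p2[1] - p1[1])
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("degenerate curve: zero-length tangent")
    return (-dy / length, dx / length)


def bend_along_curve(local_vertices: List[Vec2], p0: Vec2, p1: Vec2, p2: Vec2) -> List[Vec2]:
    """Map local x onto the curve parameter and local y onto the normal offset."""
    xs = [x for x, _ in local_vertices]
    x_min, x_max = min(xs), max(xs)
    span = x_max - x_min
    if span == 0.0:
        raise ValueError("object has zero width along x")
    bent = []
    for x, y in local_vertices:
        t = (x - x_min) / span  # not arc length, so spacing may stretch
        px, py = bezier_point(p0, p1, p2, t)
        nx, ny = bezier_normal(p0, p1, p2, t)
        bent.append((px + y * nx, py + y * ny))
    return bent


plank = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
print(bend_along_curve(plank, (0.0, 0.0), (5.0, 5.0), (10.0, 0.0)))
# [(0.0, 0.0), (5.0, 3.5), (10.0, 0.0)]
```

The plank's end vertices land on the curve's endpoints, and the middle vertex sits one unit above the curve's apex at (5.0, 2.5), along the local normal.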

But you always need some center and reference orientation for your objects anyway, independent of such additional flexibility.