Fixed one more thing: the reason TEXCOORD1 was being seen as a float was that my offsets were slightly wrong (short by the size of one float):

It was fixed by adding an additional offset equal to the size of a float:

Offset = OriginalVertexElements(OriginalVertexElements.Length - 1).Offset + SizeOfFloat
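For anyone following along, here is a minimal sketch of the same offset arithmetic in Python. It is not the code from my project: the `Element` class, the `FORMAT_SIZES` table, and the example layout (position, normal, one texture coordinate) are all assumptions made up for illustration. The general rule it shows is that the next free offset is the last element's offset plus the byte size of that last element's format, which equals the size of one float only when the last element is a single float.

```python
from dataclasses import dataclass

# Byte sizes of a few common vertex element formats (4 bytes per float).
# This table is an illustrative assumption, not a complete list.
FORMAT_SIZES = {"Single": 4, "Vector2": 8, "Vector3": 12, "Vector4": 16}


@dataclass
class Element:
    offset: int       # byte offset of the element inside one vertex
    fmt: str          # key into FORMAT_SIZES
    usage: str        # e.g. "Position", "TextureCoordinate"
    usage_index: int  # e.g. 0 for TEXCOORD0


def next_free_offset(elements):
    """Return the first byte offset after the last element in the layout."""
    last = max(elements, key=lambda e: e.offset)
    return last.offset + FORMAT_SIZES[last.fmt]


# Hypothetical original layout: POSITION, NORMAL, TEXCOORD0.
original = [
    Element(0, "Vector3", "Position", 0),
    Element(12, "Vector3", "Normal", 0),
    Element(24, "Vector2", "TextureCoordinate", 0),
]

print(next_free_offset(original))
```

If the computed offset is too small, the new element overlaps the tail of the previous one, which matches the kind of misread data described above.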

However, the second texture coordinate was evaluating to (0,0) for all instances, so I chose to replace it instead (starting my world transform matrix at TEXCOORD1 instead of TEXCOORD2).
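As a rough illustration of what "starting the world transform matrix at TEXCOORD1" means: a 4x4 matrix is usually passed to the vertex shader as four consecutive four-float rows, each bound to its own TEXCOORD index. The sketch below lays those four elements out in Python. The function name, the tuple layout, and the base offset of 32 are assumptions for illustration; whether the base offset starts at 0 or continues from the mesh's own elements depends on how your vertex streams are set up.

```python
FLOAT_SIZE = 4                 # bytes in one 32-bit float
VECTOR4_SIZE = 4 * FLOAT_SIZE  # one matrix row = four floats = 16 bytes


def world_matrix_elements(base_offset, first_usage_index=1):
    """Describe the four rows of a 4x4 matrix as (offset, format, usage, index).

    Row 0 goes to TEXCOORD<first_usage_index>, row 1 to the next index, etc.
    """
    return [
        (base_offset + row * VECTOR4_SIZE,
         "Vector4",
         "TextureCoordinate",
         first_usage_index + row)
        for row in range(4)
    ]


# Hypothetical base offset of 32 bytes, matrix occupying TEXCOORD1..TEXCOORD4.
for element in world_matrix_elements(32):
    print(element)
```

Starting at TEXCOORD1 rather than TEXCOORD2 simply shifts all four usage indices down by one, so the matrix ends at TEXCOORD4 instead of TEXCOORD5.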

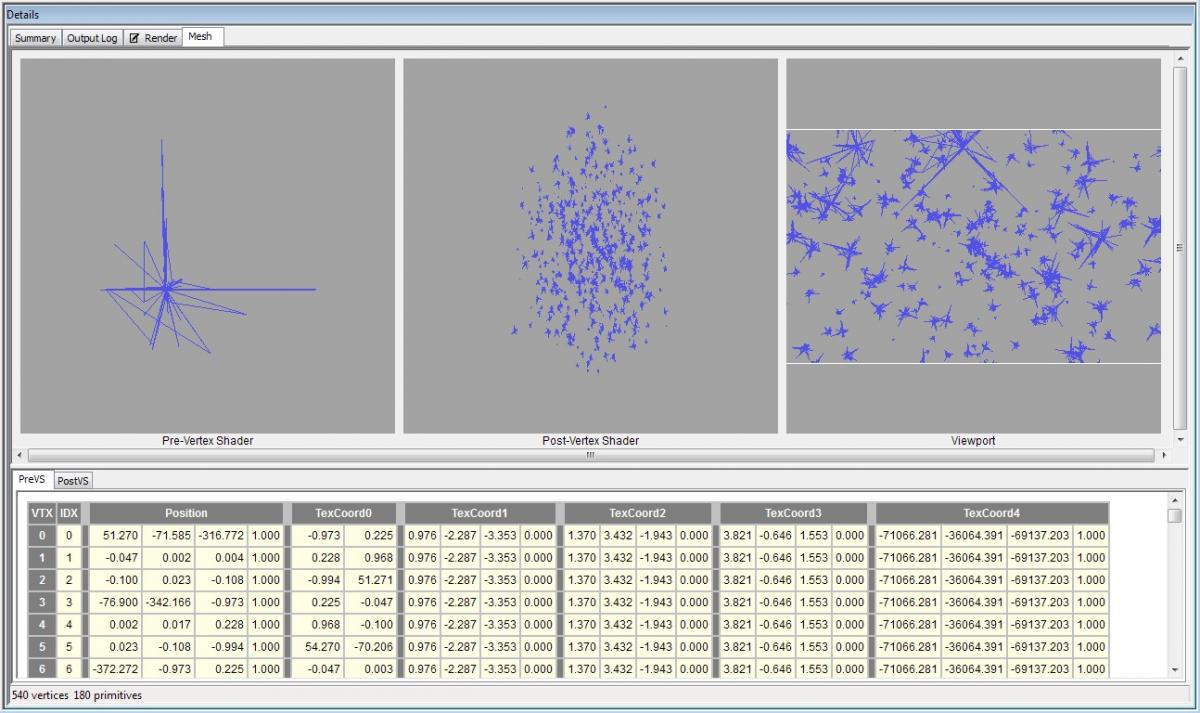

I can now also verify my world transforms are working, as each instance (there are 1001 positions in this screenshot) is positioned correctly (random scale and random orientation applied to each instance).

Your help here has moved me forward considerably on this issue so far. Thanks m8.

All that remains now is figuring out why my geometry is broken. I now have a clear understanding of what was wrong with everything else except my broken geometry problem. =(

I'm hoping someone knows of an article that correctly explains how I should have initialized my geometry, so the rat's nests I'm rendering now can be fixed. It seems that simply copying the vertices and indices doesn't work the way I expected it to.

Game Render:

[attachment=17190:1.jpg]

PIX (which doesn't show the skybox because my skybox uses a separate render target):

[attachment=17195:2.jpg]

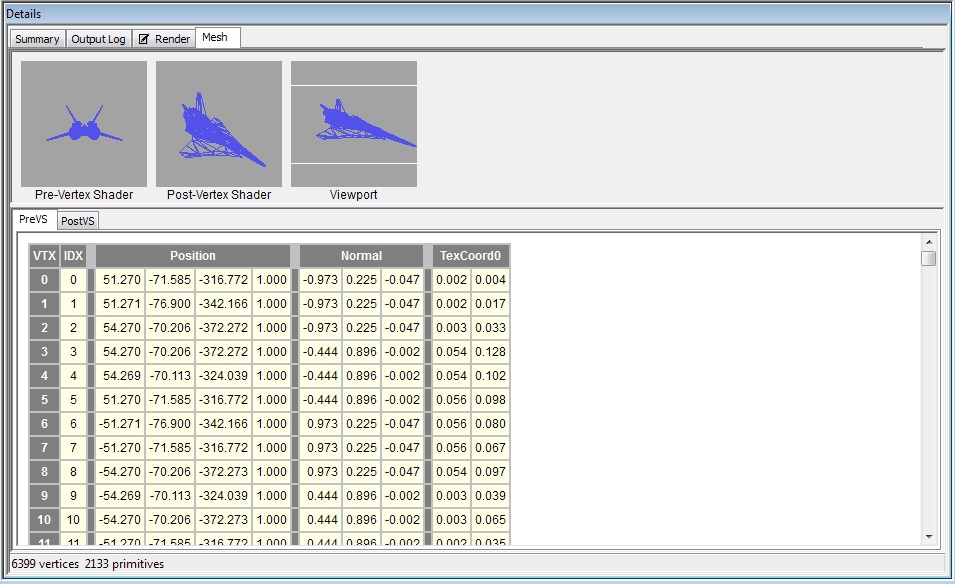

What the mesh looks like in PIX:

[attachment=17191:3.jpg]

Wireframe:

[attachment=17193:4.jpg]

Edit: Corrected screenshots