Siggraph is scheduled every year at the start of August and I don't know whose brilliant idea it was to meet in New Orleans in August. I spoke with several locals who thought we were crazy for visiting the city at this time of year and the heat and humidity didn't disappoint. Between the sweltering heat and humidity and the icy air conditioning indoors, it felt like we were continually jumping between the lava and snow worlds in a game.

Tuesday morning, I got to meet with the conference chair, Ronen Barzel, who described some of the initiatives for this year's conference. The goal was to move the field forward. Ronen highlighted three areas that were new this year: music and audio, games, and information aesthetics. Presentations and performances around each of these areas were planned during the conference.

In further describing the games aspect of the conference, Ronen highlighted how games have become pervasive in society and how film and games have grown up separately, each with its own unique view. He also mentioned that over the last several years, the games industry has started its own conference, called the Sandbox Symposium, as an academic venue for presenting research in the field of games. This conference was first scheduled in parallel with Siggraph four years ago and has gradually melded into it; this year, it officially became part of the Siggraph conference.

To address this inclusion of games within Siggraph, the conference included presentations of several game papers along with published proceedings. The conference chairs also asked Will Wright to be a keynote speaker because he is a noted designer in the games industry who could inspire and entertain the attendees. Another key focus for gamers was the inclusion of real-time rendering in the animation festival.

When asked why New Orleans was chosen as the host city, Ronen commented that the decision was made 4-5 years ago. New Orleans is a good backdrop with a festive flavor, which aligns with Siggraph's "festival of knowledge." He also mentioned that this was one of the first major conferences to return to New Orleans after the devastating damage from Hurricane Katrina.

The Siggraph conference committee also offered several ways to give back to the city through its various outreach programs. These included several charitable organizations that attendees could donate to, including the Louis Armstrong Summer Camp program; a mentoring program for local multimedia students; and a multimedia laboratory for local students, established with software and hardware donations from several companies involved in Siggraph.

Sessions and Interviews

Interview with Autodesk

Getting a Job in 3D and Games

Fun with Maya

Will Wright Keynote Address

True 3D Gaming Panel

Building Story in Games: No Cut Scenes Required

Making a Feature-Length Animated Movie with a Game Engine

Game Papers

Interview with Autodesk

Autodesk had some great announcements during the show, with education being one of the key pushes. They also announced several initiatives designed to help the industry weather the current recession.

The first major announcement was for Maya 2010 and Softimage 2010. Maya 2010 has condensed its offerings to a single package. This gives all Maya Complete and Maya Unlimited users a single package for a single price of $3495. This includes all the features found in Maya Unlimited along with a full-featured compositor and Match Mover. Maya 2010 also includes five additional mental ray licenses and Backburner for batch network rendering. Softimage 2010 includes Face Robot, a new Giga III architecture for better performance and an expanded ICE, with the ability to export to Maya. Both of these packages are great values and offer cross-upgrade paths.

Another key value announcement was the new Autodesk Suites, which enable users to save as much as 35% over the standard pricing. The Autodesk Entertainment Creation Suite includes Maya or 3ds Max bundled with MotionBuilder and Mudbox. Another suite is the Autodesk Real-Time Animation Suite, which includes Maya or 3ds Max and MotionBuilder.

Along with new versions of Maya and Softimage, Autodesk also announced MotionBuilder 2010 with expanded physics and Mudbox 2010 with better Photoshop interoperability, an SDK and FBX support.

An updated version of 3ds Max 2010 was announced earlier this year at GDC, but subscribers can now download the 3ds Max Connection Extension, which enables better import and export to other Autodesk products along with support for SKP and SAT formats and better exporting to the OpenEXR format.

Included in the education initiatives is a new Education Suite 2010, which includes key Autodesk products at competitive educational pricing. There is also a new Animation Academy package aimed at 11- to 17-year-old students.

Autodesk has also made versions of 3ds Max and Maya available free to users for 90 days through their new assistance program. This program is designed to allow 3D users to keep their skills current while out of work.

More on all Autodesk Siggraph announcements can be found on the Autodesk Area web site.

Getting a Job in 3D and Games

One of the early panels focused on "Getting a Job in 3D and Games." The panel was well attended and included key hiring managers from several visual effects and game studios. The panelists included Ken Murayama from Sony Pictures Imageworks; Hannah Acock from Double Negative in London; Karen Sickles from Digital Domain; Jason from Microsoft Game Studios; Rob from LucasArts; Lori from Industrial Light and Magic; Emma McGonigle from Moving Pictures in London and others. The panel moderator was Rob Pieke of Moving Pictures.

Finding a job in the CG and games industry involves completing an internship, having passion and experience, and creating an effective demo reel and portfolio. Another good point is to know the company that you are applying to. Several panelists mentioned an internship as an important step.

It is helpful to match your portfolio to the type of job that you are applying for. So, if a company is looking for an animator, then include your best animation pieces and not so many modeling projects. When making a demo reel, resist the temptation to pack too much into it. The manager will have about 15 seconds to evaluate your reel, not 3 to 4 minutes.

The content of your reel is also important. Try to keep your content grounded in reality. One panelist responded that seeing a character walk is often better than seeing some wild, unique science fiction image. When asked what content makes a poor impression, the panelists' responses included anything with teapots, lens flares, or chess pieces. Also avoid anything that has incorrect lighting, even if built by a team. Make your reel show that you have an understanding of the basic fundamentals.

If your reel includes content developed by a team, be sure to include a project breakdown that describes exactly which part of the project you did.

If you post your resume on a web site, make the site easy to navigate and fast. Hiring managers don't want to wait to see your reel. If you post your resume online, make it downloadable as a PDF or Word document. If you make the resume available in any other format, it will need to be cut and pasted to be distributed, and it won't look good when viewed by others in the company.

For resumes, be sure to include a three-sentence mission statement that describes just what you want to do. Be sure to include in your resume the keywords that the company can search on. This is especially important for technical programming positions where the toolset matters more than a portfolio. Try to keep your resume to a single page.

When asked about hiring generalists versus specialists, the panelists said that broad skills are important, but it is skill in a specific area that will typically get you hired, while general skills provide the versatility that will keep you working.

Another key point is to be willing to collaborate with a team. Most CG positions work with a team and it is important to be a team player.

It is often helpful to network with others within the industry. So often it is "who you know" that will help you get a job. There are plenty of ways to network, including emailing other students who are working where you want to work; contacting others through user groups and web sites such as LinkedIn, Twitter, and Facebook; and attending conferences such as Siggraph. After making contacts, it is critical that you follow up. Be sure to respond to all the people that you meet and collect business cards from at the conference.

In response to the poor economy, several of the panelists said that it could be tough to get into these companies at the current time, but the two panelists from London said that they are actually busier than ever.

If you can't get hired directly into a company, another route is to get hired for an internship or in the test department. Within most of the CG and game companies, there is a high demand for Creature TDs, Riggers and Technical Artists.

Fun with Maya

Duncan Brinsmead is one of the Maya specialists at Autodesk and he usually presents at the Autodesk User Group meeting at Siggraph, but since there wasn't a user group meeting this year, Duncan presented his latest work at an Exhibitor session located in the back of the exhibition hall. The tiny makeshift room was packed with a crowd standing around to catch a glimpse.

Duncan showed off a number of interesting techniques that mimicked real-world phenomena such as getting arrows to stick to the objects they hit, creating a snake from cloth, making a worm move, simulating a lathe and creating confetti that spins.

Many of these techniques use features that are new to Maya, but some use older features in new ways. The plasma ball showed an easy way to get electric arcs to be attracted to a hand touching the ball's surface. Other dynamics-based examples included a water wheel, a bag of microwave popcorn, a tornado, a lava lamp, dripping wax, and a dividing cell.

All of Duncan's examples are showcased on the Autodesk Area site in a section called Duncan's Corner.

Will Wright Keynote Address

Will Wright presented the keynote address entitled, "Playing with Perception." The entire presentation was typical Will Wright with his motor-mouthed descriptions and his brilliant connections.

Will started his talk by talking about his cat, Argon. He posted a picture of his cat on kittenwar.com, a site that compares the cuteness of cats based on voting, and noticed a pattern among winning and losing cats: the winning cats are smaller and fit within a shoe. From this example, Will extrapolated that design fields are divided into several different areas including Environmental, Aesthetic, Functional, Storytelling, Psychology, etc. There are also several different entertainment silos, including TV, music, and books, that are all tending to bleed together into a single field that could be called the Interdisciplinary Field Theory, which is similar to the Unified Field Theory.

When looking at the entertainment landscape, we often don't know why we want it, but since games are just pixels that respond to the eyes, then game designers are like drug dealers. Within the continuous entertainment landscape, sports feed into the Internet, and dance feeds into hobbies and toys. This leads to three interconnected circles for Play, Story and Voyeurism. The question for each discipline is where does it flow to?

Another way to look at this is to look at the platform landscape. Will compared his iPhone screen to the IMAX screen, which is 100,000 times larger. Does this mean that IMAX is a 100,000 times better experience? Actually, consumers don't care. It is just like the equation that says 3D divided by 2D equals 1.5 times better, which isn't true.

Perception also deals with our senses. Is a 3D concert a better experience than a 3D film? If this is the case, then what should we put on the screen? The ear can actually distinguish between earthquake tremors and nuclear blasts better than can be done using a visual display or graph. Each of our senses has a different bandwidth of data that can be processed, with 10M for eyes, 1M for touch, 100K for sound and smell and 100 for taste.

The bandwidth limitations for some senses can lead to perceptual blindness. A good example of working around this is placing two headlights on a motorbike so that other drivers notice it, which makes motorcycles safer. A negative example is that using a cell phone while driving is often worse than driving drunk.

You can also look at the Information Absorption rate as a function of age, so if a person can process 3600 bits of data per second, then a 10-year-old could process 360 bytes per second. For the average age of the attendees, this would equate to about 4 slides per minute or 240 slides per hour.

When looking at a random image of dots, it becomes difficult to pick out any patterns. A similar effect happens when looking at captured versus synthetic images. When mapping real and imagined images versus captured and synthetic images, the middle point of the graph is where some interesting blending occurs. A good example of imagined images that are synthetically created is the imagery from the project Life After People, which could also be called the "watch the world slowly rot" project. These images show popular landscapes after hundreds of years of neglect.

The camera is training wheels for the eyes and helps us see uncommon perceptions. One way to look at this is to measure the amount of data generated within a lifetime. For our ancestors, a simple list of names accounting for about 1 KB was common; maybe a journal was kept for 100 KB; then letters became common for about 1 MB. With digital cameras, 10 GB of data is common, and our children will easily generate 1 TB of data during their lifetime. Another interesting measure is the half-life of this data.

Perception is based on what we see. Imagine walking through a jungle and seeing a tiger, or imagine being in the same jungle and seeing a picture of a tiger. How will we react to these images? We can quickly classify the results to determine what we do. It is interesting how a 5-year-old child can immediately distinguish between a cat and a dog, but an advanced computer cannot.

We can learn schema from others via a story or from experience with a toy via play. For kids, playing video games is using the scientific method. They try something and adjust their play based on whether they succeed or fail at a task. Through continual playing they form a play model of the game in their head. Another example is the creation of miniature sets to show a similar mapping. The same effect can be created by using tilt-shift photography to make real-world scenes appear like miniature sets.

One tool that games use to control perception mapping is a force multiplier.

Another aspect of games is the social side, which means determining how to entertain the hive mind, or a collection of connected brains. The hive mind has an increased speed of processing enhanced by instant communication. One way to entertain the hive mind is to grow the community. A good example of this is the fans of the TV show Lost. Lost fans have created the Lostpedia, whose contributors have gone to such lengths as to translate the hieroglyphs in the background of the show's scenes. In fact, one minor character on the show has more Wikipedia pages than President Obama. Casual viewers can look into this depth of information to enhance their experience.

In games, a similar pattern emerged for The Sims, where over time a large volume of content was created for the game. Most of the content created for The Sims was of lower quality, and the best pieces made up a much smaller share. The idea with Spore was to increase the amount of quality content by means of tools.

For Spore, the team set a goal to achieve 100K worth of posted content via the Creature Creator game in time for the launch. This goal was actually met within 22 hours of its availability. Today there are over 100M in content posted. The Sims reached 100K worth of posted content after 2 years and with Spore's Make and Share tools, there's 100 times the Sims content available. The result is that the additional data makes for more player experiences.
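As a rough check on how different those two adoption rates are, the short calculation below compares the time each game took to reach the 100K milestone. It assumes that "2 years" means 2 x 365 days; the variable names are purely illustrative.

```python
# Rough comparison of how long each game took to reach 100K worth of posted content.
# Assumption: "2 years" is treated as 2 * 365 days; both figures come from the keynote.
HOURS_PER_DAY = 24

sims_hours = 2 * 365 * HOURS_PER_DAY  # The Sims: about 2 years
spore_hours = 22                      # Spore Creature Creator: 22 hours

speedup = sims_hours / spore_hours

print(f"The Sims: {sims_hours} hours to reach 100K")
print(f"Spore:    {spore_hours} hours to reach 100K")
print(f"Spore reached the milestone roughly {speedup:.0f} times faster")
```

Under that assumption, Spore reached the same milestone several hundred times faster than The Sims did.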

Spore has also developed an API that others can use to create their own games using the Spore data. There are also Maya import/export features to drive even more content. The key to this entertainment extension is the data.

True 3D Gaming Panel

One of the key trends in this year's conference was the success and acceptance of stereoscopic 3D, which has been used in numerous films this year including Coraline, Pixar's Up and DreamWorks' Monsters vs. Aliens. This same technology has also started to find its way into the gaming world.

On Thursday, a panel discussion entitled, "The Masters Speak: Game Developers Weigh in on True 3D Gaming," was presented. This panel showcased three studios that have successfully used stereoscopic 3D in their game development. The panelists included Andrew Oliver from Blitz Games, Habib Zargarpour from EA, and Nicholas Schultz from Crytek.

Andrew presented first and gave an overview of the stereo 3D technologies. Current systems are divided into three groups: Active displays such as the DLP systems that use expensive glasses to modulate the left and right channels; Passive displays such as the Real3D system that uses polarized glasses; and Auto-Stereoscopic systems that don't require any glasses, but are limited in that you need to stand directly in front of the display.

One of the biggest challenges for Blitz Games was to build the game so that it worked on all the available 3D televisions. Andrew also mentioned that one of the main challenges is caused by the confusion over the various hardware and input formats. A standard would make adoption much easier.

He then showed off Invincible Dragon, a side-scrolling martial arts fighting game in 3D to be released on Xbox Live. One of the motivations behind this game was to prove that it could be done. The side-scroller format was chosen on purpose because the slow left and right pans would allow the player's eyes to adjust to the 3D. Also, the player maintains a focus on the main character, which allows the eyes to rest. The game is displayed at 1080p and 60 frames per second for each channel. During development, the team found that they actually had to model more background items to fill the space. Overall, Andrew felt that adding stereo 3D to the game required about 10 to 15% additional work.
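To make the idea of rendering one image per channel more concrete, here is a minimal sketch of a parallel stereo camera pair, along with the pixel throughput implied by 1080p at 60 frames per second for each eye. This is not Blitz Games' code; the `Vec3` type, the `stereo_eye_positions` helper and the 0.065 eye separation are all assumptions made for illustration.

```python
# Minimal sketch of a parallel stereo camera rig (illustrative only, not the game's code).
# Each frame is rendered twice, once per eye, from two horizontally offset cameras.
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other):
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scale(self, s):
        return Vec3(self.x * s, self.y * s, self.z * s)


def stereo_eye_positions(center, right, eye_separation):
    """Return (left_eye, right_eye), offset along the camera's unit right vector."""
    half = eye_separation / 2.0
    return center + right.scale(-half), center + right.scale(half)


# Hypothetical values: camera 1.7 units above the origin, eyes 0.065 units apart.
left_eye, right_eye = stereo_eye_positions(Vec3(0.0, 1.7, 0.0), Vec3(1.0, 0.0, 0.0), 0.065)

# Pixels that must be shaded per second for 1080p (1920 x 1080) at 60 fps on two channels.
pixels_per_second = 1920 * 1080 * 60 * 2

print(left_eye, right_eye)
print(f"{pixels_per_second:,} pixels per second")
```

A production renderer would typically also adjust the projection for each eye so that the two views converge at a chosen depth; that step is omitted here to keep the sketch short.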

Nicholas was the second panelist to present and showed off a version of Crysis running in stereoscopic 3D. The effect was amazing. At one point, Nicholas moved his player into a river and shot a rocket into the opposite cliff, causing pieces of rock to fly in all directions, including a large chunk that flew right over the player's shoulder and directly into the player's face.

Nicholas commented that because of the way the Crytek engine was built, it was relatively easy to add this new display mode and that it took less than 2 days to implement. His system was displayed using a dual output to a DLP projector.

Nicholas, like Andrew, also commented that the lack of a standard was a serious problem. He also sees stereo 3D as a big help for serious game applications and architectural visualizations.

Habib was the last presenter and he showed off a version of Need for Speed: Shift that also used stereo 3D. With cars moving in and out of the scene in 3D, the results were very realistic and immersive.

Habib stated that in building the game for 3D, many of the common 2D cheats, such as camera-facing planar trees and bump maps, wouldn't work and had to be redesigned. Another challenge was to keep the motion subtle and not to be tempted to rush objects at the camera. Finally, when EA releases a game, it simultaneously releases 65 different versions to cover all the different consoles and 12 different languages. If varying stereoscopic 3D standards require another 5 different options, then this creates a huge problem.
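To get a feel for the scale of that problem, the quick calculation below counts the builds that would be needed if every existing version had to ship in every stereo format. That multiplication is my assumption about what Habib meant; the variable names are illustrative.

```python
# Back-of-the-envelope build count if every existing version needed every stereo format.
# Assumption: the 5 stereo options multiply the 65 versions rather than add to them.
existing_versions = 65
stereo_formats = 5

total_builds = existing_versions * stereo_formats
extra_builds = total_builds - existing_versions

print(f"{total_builds} builds in total, {extra_builds} more than today")
```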

Building Story in Games: No Cut Scenes Required

In this presentation, Bob Nicoll of Electronic Arts and Danny Bilson of THQ talked about the aspects of story writing for games. Bob presented first and his presentation was titled, "Story in Real-Time Structure and Framework."

Bob started by describing the typical 3-act structure used by storytellers. The first act tells the back story. It is also used to set up the character. The second act includes the confrontation and the climax. It is a roller coaster of emotions where the tension rises and falls. The third act has the resolution. It is used to tie up all the loose ends. During the third act, the tension should dissipate and the goal is to finish the story and get out.

At the end of acts 1 and 2 are plot points that are major events that turn the actor. Plot point 1 should drive the character to take on the problem. Good stories start at plot point 1. Plot point 2 is the climax for the story.

Story structure can be further simplified into two parts: an inciting incident that creates the problem and the principal action that resolves the problem.

Within games, storytelling is unique because the player is the hero and you don't always have control over what the player is going to do. For games, the story is all act 2. Games are often based on an internal story where the player describes the story as, "I beat the dragon." This is different from external stories, such as Moby Dick, where the story is known.

Considering design and emotion, it is a common principle that "form follows function," but for games it doesn't work this way. For example, in industrial design, the function that makes the item work is surrounded by the emotional aspects that make the person want the item. However, for entertainment, the emotional aspect is the core and it is surrounded by the functional design.

Danny's presentation was titled, "Screenwriting in Gamespace." He started by describing environmental storytelling where the game environment does a lot to tell the story. He then showed an example of a game, Homefront, currently in development at THQ. Through the game's various environments, you could quickly detect the back story for the game without using intrusive cut scenes.

For game players, the story is immersive and subversive. "It is the player's story," commented Danny, and it is one where actions speak louder than words. Danny then gave some examples. While playing EverQuest, a low-level player fell down a well to a level with higher-level monsters. Although probably not intended by the game designers, this created a compelling story for the player, who discussed it with other players. Eventually, the player was escorted out by some higher-level players, but the story was unique and memorable.

Another example, from World of Warcraft, was that a player stumbled upon a wrecked ship with many different kinds of lifeforms. The story was that an alien ark crash-landed on the island, and it was established without any dialogue. Other good examples are Knights of the Old Republic and Fallout 3, where the player can choose a faction to defend.

Danny then asked which is more important, gameplay or story. The answer is that gameplay is far more important. He then presented a quote that "I'd like to watch a movie while playing a video game about as much as I'd like to play a video game while watching a movie." He then stated that cut scenes are the lowest form of storytelling.

Since game playing is an active medium, you need to ask yourself, "What are the verbs?" and "Why do I care?" The key is to tell a story through objectives and rewards. You need to write the story into the non-player characters (NPCs) and not into the player. A good example of this was an NPC that convinced Danny to follow him into a cave to get a treasure, but Danny found an ambush instead. This created an emotional response to this NPC and the next time he was encountered in the game, Danny took action.

Making a Feature-Length Animated Movie with a Game Engine

This presentation showed how a team at Delacave used a game engine to create a feature-length animated film. It was presented by Alexis Casas and Pierre Augeard. The film is The True Story of Puss in Boots, an 85-minute animated feature consisting of 900 shots.

The entire feature was animated using keyframe animation with MotionBuilder and rendered using RenderBox, a game engine. The game engine with its real-time abilities enabled the team to work side by side with the director. The shots were rendered in several passes including render passes for the diffuse, occlusion and normal channels.

Another key benefit of using the RenderBox game engine was that the feature had no lighting department. The ability to place lights within the engine eliminated the common duel between the compositing and lighting departments since a single artist did both. They were also able to bake lighting into all static scenes, and typical render updates took about 10 seconds.

The pipeline involved rendering work for the day during the night so that the results could be viewed the next morning. The feature didn't require an expensive render farm. All content was input into the engine using FBX format and output as EXR files. The RenderBox engine was adapted to be able to render in layers with passes, but otherwise the off-the-shelf engine was used.

Through the experience, the team learned that the game engine was not a magic potion and they still had problems including anti-aliasing, transparency and poly flickering. There were also memory issues and some of the heavier scenes needed to be split for rendering. The game engine in some ways couldn't compete with a software renderer and imposed limitations such as no GI, true reflections or subsurface scattering. The director was aware of these limitations and tailored the story so that it would not be constrained by them.

The project marked the long sought-after convergence of video games and film. The game engine allowed a flexibility that could handle last-minute changes to the film. In fact, some changes were made only two days before the film was released. This gave a lot of control to the director. Overall, the decision to use a game engine saved millions of dollars on the project by allowing the project to be rendered on typical workstations without requiring a render farm and by eliminating a separate lighting department.

Game Papers

With the inclusion of the Sandbox Symposium into Siggraph, there were several sessions of game papers where game research was presented. I was able to attend many of these sessions and to review the paper proceedings. The various papers were diverse and covered a broad range of topics. Some of the research was valuable and accessible.

The game papers were divided into several discrete categories covering social play, visuals, game mechanics and kinesthetic movements.

One of the more interesting papers was presented by Scott Kircher and Alan Lawrance of Volition. It showed a technique called inferred lighting that speeds up dynamic scene lighting for real-time rendering. The process works by using a discontinuity-sensitive filtering (DSF) algorithm to compute lights and shadows based on a lower-resolution approximation of the scene.

Another interesting game paper was presented by Kaveh Kardan of the University of Hawaii. It showed a system for automatically editing cinematics based on a defined shot list. The system also includes the ability to add stylistic edits. The team compared some movie clips with those created by the system and, although the results were interesting, the system still deviated from the clips in several ways.

In another paper, Michiel Roza showed a visual profiler called GamePro that he has developed. This profiler allows developers to investigate frame drops in their game by means of a visual interface that makes it easy to quickly see the methods where the frame rate and memory leak problems occur. The profiler is available from Kalydo and more information is online at www.kalydo.com.

Karthik Raveendran presented work by two teams on an augmented reality game called Art of Defense. This game is a board game with physical pieces that the players place on a table. Then by looking through handheld PSP systems, they can see where enemies are attacking the towers of defense that they've placed on the table using tiles. This game concept is unique because it combines traditional tabletop board games with a virtual component. The game was also designed to be collaborative so players work together to beat their virtual foes.

Another new and interesting game concept was presented by Floyd Mueller. Floyd defined a category of games called exertion games that are designed to require physical effort to play such as DDR and several Wii sports games. Floyd and his team worked to create a game called "Remote Impact." The game consisted of a wall-sized framework consisting of layers of plastic and foam onto which the outline shadow of another player could be projected. The shadows were captured using a camera and projected onto the opponent's screen. Two networked screens allow players to shadow box with one another. The edges of the framework detected stresses applied to the screen so that the position and intensity of the player's hit was used to determine the strength of the blow. The benefits of this game included exercise for fitness and social interaction between players.

Rene Weller of Clausthal University showed a new algorithm for collision detection that would enable correct feedback for haptic devices. Correct haptic feedback requires rates of 1000 frames per second, and BVHs and voxels are too computationally expensive to be used at that rate. The new algorithm, based on inner sphere trees, computes the collision surface based on non-overlapping bounding volumes that are recursive and fast.
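To give a feel for the kind of query involved, the sketch below fills each object with non-overlapping spheres and adds up the pairwise sphere overlap volumes as a simple measure of how deeply two objects intersect. This is a brute-force illustration of my own, not the algorithm from the paper: the tree hierarchy that makes the real method fast is left out, and the function names and sample data are hypothetical.

```python
# Brute-force sketch: measure how much two sphere-filled objects overlap.
# Not the paper's algorithm; a real inner sphere tree prunes most pairs hierarchically.
import math


def sphere_overlap_volume(c1, r1, c2, r2):
    """Volume of the intersection of two spheres given centers and radii."""
    d = math.dist(c1, c2)
    if d >= r1 + r2:
        return 0.0  # spheres are separate or just touching
    if d <= abs(r1 - r2):
        return 4.0 / 3.0 * math.pi * min(r1, r2) ** 3  # smaller sphere is fully inside
    # Standard lens-volume formula for two partially overlapping spheres.
    return (math.pi * (r1 + r2 - d) ** 2
            * (d * d + 2 * d * (r1 + r2) - 3 * (r1 - r2) ** 2) / (12 * d))


def penetration_volume(spheres_a, spheres_b):
    """Sum the overlap volume over every pair of inner spheres."""
    return sum(sphere_overlap_volume(ca, ra, cb, rb)
               for ca, ra in spheres_a
               for cb, rb in spheres_b)


# Hypothetical objects: A is two touching unit spheres, B is one unit sphere between them.
object_a = [((0.0, 0.0, 0.0), 1.0), ((2.0, 0.0, 0.0), 1.0)]
object_b = [((1.0, 0.0, 0.0), 1.0)]

# A haptic loop running at 1000 updates per second leaves 1 millisecond per query.
budget_ms = 1000.0 / 1000

print(f"penetration volume: {penetration_volume(object_a, object_b):.4f}")
print(f"time budget per update: {budget_ms} ms")
```

Because the spheres inside each object never overlap one another, no volume is counted twice, which is what makes a simple sum over pairs meaningful here.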

These are just a sampling of the available game papers. The full set of game papers can be found in the Sandbox 2009 proceedings.

Exhibition

The exhibition floor was considerably scaled back from previous years. This was due to two main factors: the rough economy and the conference's location outside of California. Several key companies were missing in action including HP, Adobe, Disney, etc. Other companies were present, but had scaled-back booths.

Happily, Pixar was still strong at Siggraph 2009 with a booth that resembled the house in their latest film, Up. Throughout the week attendees lined up to get Up posters and collectible wind-up teapots.

Autodesk also had a strong presence at the show including a booth that demoed their software throughout the week.

The exhibition floor also showcased several new and interesting technologies, including a video graphics board that could display hundreds of video streams simultaneously.

The Fraunhofer group showed a virtual mirror that could project an image onto a shirt. The image bent and moved with the cloth. You could also change the design and color of the shirt and the image in the mirror was updated to show the changes.

3DVia was also present, showing off a broad array of technologies including this cool application that let a digital character move and dance along the edge of a box being held. As the box was tilted, the character slid to the edge of the box, and if the box was moved quickly, the character jumped to regain its footing.

Several digital schools also had booths, and one school was showing off its DJ Hero interface and game, which is similar to Guitar Hero except that it uses a proprietary turntable to scratch discs. You can learn more about this interesting interface at www.sultansofscratch.com.

Emerging Technologies

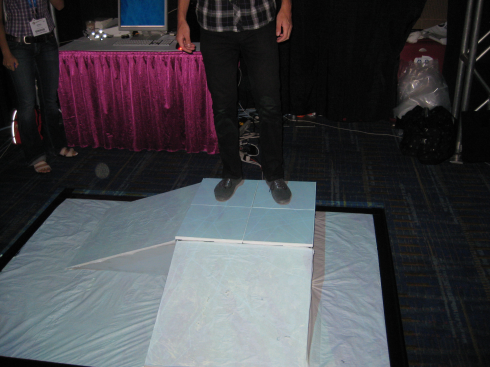

The Emerging Technologies venue is a great place to look into future technologies and the next great game interface. This year's exhibition showed several unique and diverse technologies, ranging from haptics, robots and alternative interfaces to crazy displays and several technologies that were just plain cool.

The platform shown below was able to detect the pressure of people walking on its surface. For the demonstration, participants could walk across an icy surface and see virtual cracks appear on the platform and hear the cracking sound of ice as pressure was applied.

Another exhibition used programmed robots to fold laundry. With a simple interface, you could define exactly how you wanted the clothes folded and the robot would figure out how to do the folds.

This display was a simple sled simulator that gives you the ride while saving a trip to the emergency room. It also included a force feedback system for bumps and turns.

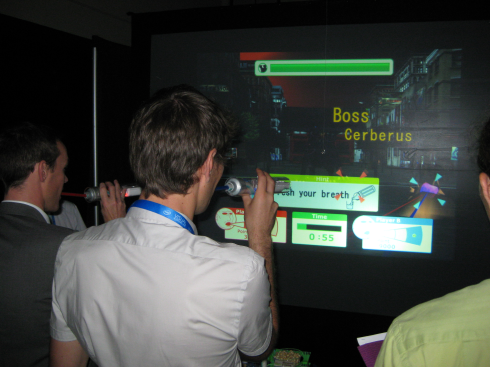

One of the most unique game interfaces came from this team, who used a blowing device to shoot at monsters within the game. A Wii remote was used for directing the shots and a breath analyzer could detect the type of breath odor you were emitting. By trying different flavored snacks, you could change the effectiveness of the shots, so garlic could really be used to kill a vampire. One suggested use was to create a game to encourage children to eat the healthy foods they dislike.

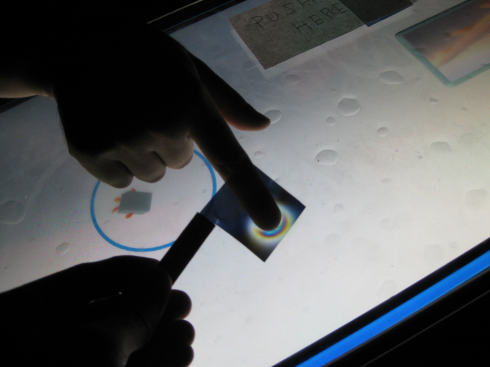

Another unique input device was a thick sheet of plastic that could detect pressure. This pressure sensing device could be used in gameplay or could be used to detect the surface of an object.

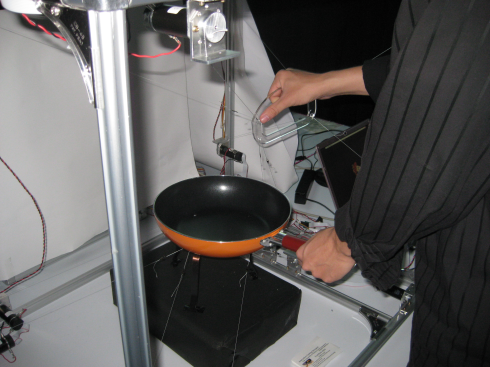

Another display showed a cooking interface for games that didn't require any heat. It used a typical pan and spatula interface hooked up with wires to provide feedback to the player.

This interface used an umbrella, called the Funbrella, and simulated the effect of objects of different weights falling on the top of the umbrella.

GameJam! and Conference Games

A popular activity at Siggraph is the FJORG animation challenge. This activity lets teams of animators compete for a single 24-hour period to produce the best animated short in the given time. Prizes are awarded by a jury.

This year also featured Game Jam, where teams of game developers competed to create games in a 24-hour period. There were two different categories: 2D games built using Flash and 3D games built using Panda 3D. Prizes for the competition were donated by sponsoring companies and included passes for next year's conference, software from Autodesk and books. Each winner was also given a medal, which was a set of beads around a classic NES game cartridge. Winners were also invited to be part of the Reception parade held later that night.

The 2D Game Crowd Favorite was awarded to the creators of Invasion of Zaltor.

The 2D Game Best of Show was awarded to the team that created the game Falling for Siggraph.

Dumpster Dave was recognized as the Most Creative 3D Character.

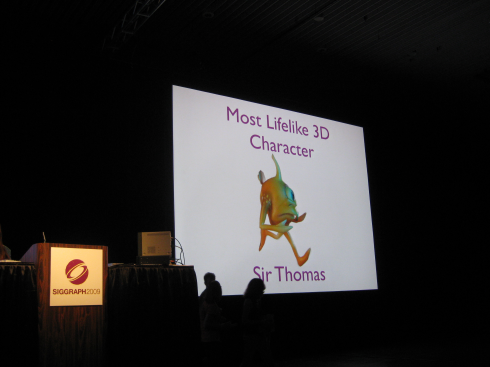

The Most Lifelike 3D Character was Sir Thomas.

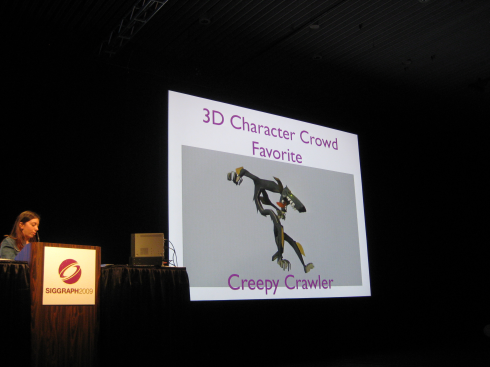

Creepy Crawler was the 3D Character Crowd Favorite.

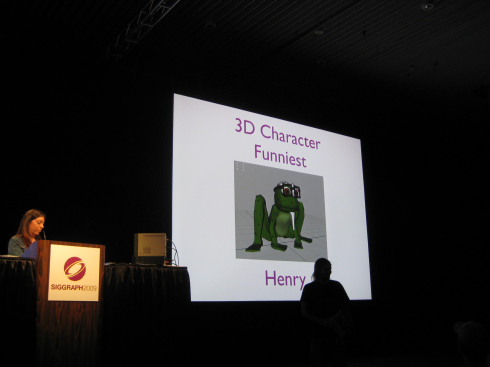

The Henry character was the 3D Funniest Character.

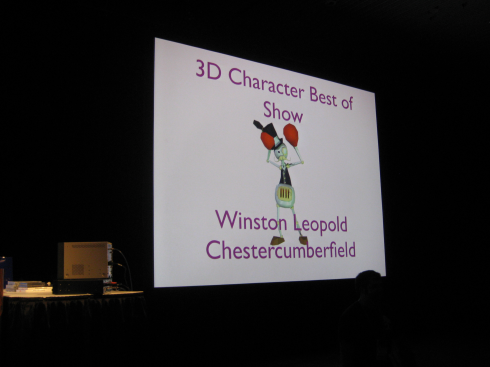

Finally, the 3D Character Best of Show was awarded to the team that created Winston Leopold Chesterchumberfield.

The conference also held two conference-wide games. The first, called Encounter, was a scavenger hunt game where teams of participants were given clues and questions via a mobile device or a custom iPhone app. The event had over 300 teams with over 1200 simultaneous players.

Another conference-wide game involved collecting business cards to create a virtual company.

Animation Theater

The Animation Theater showcases the best animated pieces submitted over the last year, including game cinematics, commercials, film clips and animated shorts. This year's festival also included a select group of pieces that were rendered in real-time. Four real-time pieces were shown as part of the evening theater. These pieces were also available for playing in the Sandbox area.

The real-time pieces that were highlighted included Flower for the PS3. This game was created by ThatGameCompany and was demonstrated in real-time as a player played through several levels of the game, highlighting its detailed, beautiful graphics.

The second piece was an application developed in Japan at Soka University called DT4 Identity SA. This application used a camera to capture a picture of the person in front of the camera and then used a number of different rendering styles to display the camera's image.

The Froblins demo was created by a team at AMD. It showcased the graphics capabilities of the latest ATI graphics card. The Froblins are small frog-like creatures that wander about a scene seeking gold, food or rest. The entire world is rendered in real-time and features several thousand autonomous high-resolution characters. Despite the heavy amount of content, the resulting animation is smooth and detailed.

The final real-time demonstration showed a Fight Night 4 fight between Mike Tyson and Will Wright. After a nail-biting couple of rounds, Will Wright's character was able to knock out Mike Tyson. The real-time graphics used advanced physics to create a sense of realism.

Sandbox Game Showcase

Within the Emerging Technologies venue was an area showcasing games. Some of these were commercially available games such as Flower and Fight Night 4, but others were indie-developed.

One such game let players draw their own terrain level with a whiteboard marker. The drawing was scanned and then used as the game map.