Abstract

This article acquaints application developers with the tasks they now face as 64-bit multi-core processors are introduced on a mass scale. These processors represent a revolutionary increase in the computing power available to the average user. The article also touches upon the problems of using hardware resources effectively to solve everyday applied tasks under the Windows x64 operating system.

Information for readers

Unless stated otherwise, "operating system" in this article means Windows, "64-bit systems" means the x86-64 (AMD64) architecture, and the development environment is Visual Studio 2005/2008. You may download the demo sample discussed in the article from this address: http://www.Viva64.com/articles/testspeedexp.zip.

Introduction

Parallel computing and large amounts of RAM are now available not only to large hardware and software complexes intended for large-scale scientific computing. They are also used to solve everyday tasks related to work, study, entertainment and computer games.

Parallelism and large amounts of RAM make developing resource-intensive applications easier. On the other hand, they demand more skill and more knowledge of parallel programming from a programmer. Unfortunately, many developers lack such skill and knowledge. This is not because they are bad developers but because they simply haven't come across such tasks. It is no surprise, since until recently parallel information-processing systems were created mostly in scientific institutions for modeling and forecasting tasks. Enterprises, banks and others also used parallel computer complexes with large memory sizes to solve applied tasks. Until recently, however, such complexes were rather expensive, and very few developers had the chance to get acquainted with the peculiarities of developing software for them.

The authors of this article have taken part in developing resource-intensive software products for visualizing and modeling physical processes. From that work they learned first-hand the specifics of developing, testing and debugging such systems. By resource-intensive software we mean program code that efficiently uses the capabilities of multiprocessor systems and large memory sizes (2 GB and more). That's why we would like to share some knowledge that developers may find useful as they master modern parallel 64-bit systems in the near future.

It is fair to mention that problems related to parallel programming were studied in detail long ago and are described in many books, articles and courses. That's why this article devotes most of its attention to the organizational and practical issues of developing high-performance applications and to the use of 64-bit technologies.

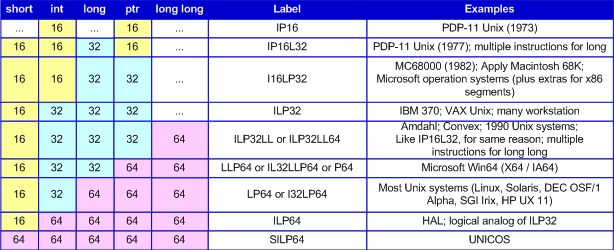

When discussing 64-bit systems, we will assume that they use the LLP64 data model (see table 1). This is the data model used in 64-bit versions of the Windows operating system. However, the information given here may also be useful when working with systems that use a data model other than LLP64.

Table 1. Data models and their use in different operating systems.
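The quickest way to see which data model a particular compiler uses is to compare the sizes of the base types. The sketch below is our own illustration, not code from the article's demo sample. The function names DataModelName and PrintDataModel are hypothetical. The function recognizes only LLP64, LP64, ILP64 and the common 32-bit model ILP32.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <string>

// Hypothetical helper: classifies the compiler's data model by the sizes
// of int, long and pointers (sizes in bytes).
std::string DataModelName() {
  const std::size_t i = sizeof(int), l = sizeof(long), p = sizeof(void *);
  if (i == 4 && l == 4 && p == 4) return "ILP32";
  if (i == 4 && l == 4 && p == 8) return "LLP64";  // 64-bit Windows
  if (i == 4 && l == 8 && p == 8) return "LP64";   // most 64-bit Unix systems
  if (i == 8 && l == 8 && p == 8) return "ILP64";
  return "unknown";
}

// Prints the sizes that distinguish the models, plus size_t and ptrdiff_t.
void PrintDataModel() {
  std::printf("int=%u long=%u long long=%u size_t=%u ptrdiff_t=%u void*=%u: %s\n",
              static_cast<unsigned>(sizeof(int)),
              static_cast<unsigned>(sizeof(long)),
              static_cast<unsigned>(sizeof(long long)),
              static_cast<unsigned>(sizeof(std::size_t)),
              static_cast<unsigned>(sizeof(std::ptrdiff_t)),
              static_cast<unsigned>(sizeof(void *)),
              DataModelName().c_str());
}
```

Compiled as 64-bit code with Visual C++, this should report LLP64. With GCC on x86-64 Linux, it reports LP64.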

Don't be afraid to use parallelism and 64-bit technology

We understand the conservatism that prevails in the development of large software systems. Still, we would advise you to use the capabilities provided by multi-core 64-bit processors. They may give you a major competitive advantage over similar systems and also provide good news for advertising campaigns.

It is pointless to postpone adopting 64-bit technology and parallelism, since mastering them is inevitable. You may ignore the general enthusiasm for a new programming language or for optimizing a program for MMX technology. But you cannot avoid the growth in the size of processed data or the slowdown in clock frequency growth. Let's look at this statement in detail.

Parallelism is becoming the basis of performance growth because the clock frequency of modern microprocessors is growing more slowly. The number of transistors on a die keeps increasing, but clock frequency growth slowed down sharply after 2005 (see picture 1). There is a rather interesting article on this topic: "The Free Lunch Is Over. A Fundamental Turn Toward Concurrency in Software" [1] (http://www.gotw.ca/publications/concurrency-ddj.htm).

Picture 1. Growth of clock frequency and of the number of transistors on a die. Over the last 30 years, performance was determined by clock frequency, optimization of instruction execution and larger caches. In the coming years it will be determined by the number of cores. The development of parallel programming tools will become the main direction in the evolution of programming technologies.

Parallel programming will do more than solve the problem of slowing clock frequency growth. More generally, it will lead to scalable software that fully exploits the growing number of computational nodes in the processor. That is, software will gain performance not only from a higher microprocessor clock frequency but also from a larger number of cores. Such systems are the future of software. Those who master the new technologies first will be able to shift the software market in their favor.

Although 64-bit technology looks less impressive than parallelism, it also provides many new capabilities. Firstly, it gives a free 5-15% performance gain. Secondly, the large address space solves the problem of memory fragmentation when working with large data sizes. Searching for a solution to this problem has caused a lot of trouble for many developers whose programs crash from memory shortage after several hours of work. Thirdly, it makes it easy to work with data arrays of several GB. Sometimes this yields an amazing performance gain by eliminating hard-disk access operations.

If all of the above doesn't convince you of the advantages of 64-bit systems, look more closely at what your colleagues or you yourself are working on. Is somebody optimizing code to speed up a function by 10%, although the same 10% could be gained by simply recompiling the program for the 64-bit architecture? Is somebody writing his own class for working with arrays loaded from files because there is not enough room for these arrays in memory? Are you developing your own memory allocation manager to avoid fragmenting memory? If you answer "yes" to at least one of these questions, you should stop and think for a while. Perhaps you are fighting in vain. And perhaps it would be more profitable to spend your time porting your application to a 64-bit system, where all these questions disappear at once. After all, sooner or later you will have to spend time on this anyway.

Let's sum up. It is pointless to waste time trying to squeeze the last gains out of the 32-bit architecture. Save your time. Use parallelism and the 64-bit address space to increase performance. Give the market news and take the lead over your rivals in the field of high-performance applications.

Provide yourself with good hardware

So, you have decided to use parallelism and 64-bit technologies in your development. Perfect. Let's first touch upon some organizational questions.

You have to develop complex programs that process large amounts of data. Even so, company management often doesn't understand why developers need the most powerful computers. Your applications may be intended for high-performance workstations, but you are likely to test and debug them on your own machine. Of course, all programmers need powerful computers, even if their programs don't process many GB of data, because everybody has to compile code, run heavy auxiliary tools and so on. But only a developer of resource-intensive software fully feels all the troubles caused by a lack of RAM or a slow disk subsystem.

Show this part of the article to your manager. We will now try to explain why it pays to invest money in your tools, that is, in computers.

This may sound trivial, but a fast processor and a fast disk subsystem can greatly speed up compilation! It may seem that there is no difference between compiling some part of the code in two minutes and in one minute. In fact, the difference is enormous! Minutes add up to hours, days and months. Ask your programmers to estimate how much time they spend waiting for code to compile. Divide this time by at least 1.5, and you can calculate how quickly an investment in new machines will pay off. We assure you, you will be surprised.
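The payback estimate suggested above is simple arithmetic, and here is a sketch of it. The function name PaybackWorkingDays and the figures in the usage note are hypothetical. They were chosen for illustration and are not measurements.

```cpp
#include <cassert>

// Hypothetical estimate of how many working days faster hardware needs to
// pay for itself. waitHoursPerDay is the measured daily waiting time;
// speedup is the factor by which it shrinks (the text suggests at least 1.5).
double PaybackWorkingDays(double hardwareCost, double costPerHour,
                          double waitHoursPerDay, double speedup) {
  assert(speedup > 1.0 && waitHoursPerDay > 0.0 && costPerHour > 0.0);
  const double savedHoursPerDay = waitHoursPerDay - waitHoursPerDay / speedup;
  return hardwareCost / (savedHoursPerDay * costPerHour);
}
```

Take a hypothetical $3000 machine, a $50 hourly cost and 2 hours of waiting per day. A 1.5x speedup saves about 0.67 hours a day, so PaybackWorkingDays(3000, 50, 2, 1.5) gives 90 working days.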

Keep in mind one more effect that will help you save working time. If some action takes 5 minutes, a person will wait. If it takes 10 minutes, he will go to make coffee, read forums or play ping-pong, which will take more than 10 minutes! This is not because he is an idler or badly wants coffee. He is simply bored. Don't let the action be interrupted: push the button, get the result. Make the processes that used to take 10 minutes take less than 5.

We want to stress once again that the aim is not to occupy a programmer with a useful task while he waits. The aim is to speed up all the processes in general. Installing a second computer (or a dual-processor system) so that the programmer can switch to other tasks while waiting is entirely wrong. A programmer's work is not that of a street cleaner, who can clear snow from a bench during a break from breaking ice. A programmer's work requires concentrating on the task and keeping many of its elements in mind. Don't try to switch a programmer to another task, because such attempts are useless. Instead, let him get back to the task he is working on as soon as possible. According to the article "Stresses of multitask work: how to fight them" [2], a person needs 25 minutes to get deeply into another task or back into an interrupted one. If you don't ensure continuity of work, half of the time will be wasted on this switching. It doesn't matter what the distraction is: playing ping-pong or searching for an error in another program.

Don't spare money on a few more GB of memory. This purchase will pay for itself after a few debugging sessions of a program that allocates a large amount of memory. Keep in mind that a lack of RAM causes swapping and can stretch debugging from minutes to hours.

Don't spare money on a RAID subsystem for the machine. To back this up, here is an example from our own experience (table 2).

| Configuration (pay attention to RAID) | Build time of an average project using a large number of external libraries |
|---|---|
| AMD Athlon(TM) 64 X2 Dual Core Processor 3800+, 2 GB of RAM, 2 x 250 GB SATA HDD in RAID 0 | 95 minutes |
| AMD Athlon(TM) 64 X2 Dual Core Processor 4000+, 4 GB of RAM, 500 GB SATA HDD (no RAID) | 140 minutes |

Table 2. An example of how RAID influences the build speed of an application.

Dear managers, believe us: whatever you save on hardware is lost to delays in programmers' work. Companies such as Microsoft provide their developers with the latest hardware not out of generosity or wastefulness. They do count their money, and their example shouldn't be ignored.

This is the end of the part of the article addressed to managers, and we would like to turn again to the creators of software solutions. Do ask for the equipment you consider necessary. Don't be shy. Your manager most likely just doesn't understand that it is profitable for everybody, and you should enlighten him. Moreover, if the plan isn't fulfilled, it is you who will look guilty. It is easier to get new machines than to explain what you waste your time on. Imagine how your excuse for spending the whole day fixing a single error would sound: "It's a very large project. I launched the debugger and had to wait a long time. And I have only one GB of memory. I can't work on anything else at the same time. Windows started swapping. I found the error and fixed it, but I have to launch and check it again...". Perhaps your manager won't say anything, but he will consider you an idler. Don't let this happen.

Your primary task when developing a resource-intensive application is neither designing a new system nor even studying theory. It is getting all the necessary hardware and software bought in good time. Only then can you develop resource-intensive software solutions efficiently. It is impossible to write and test parallel programs without multi-core processors. And it is impossible to write a system for processing large data sizes without enough RAM.

Before we move on to the next topic, we would like to share some ideas that will help make your work more comfortable.

You may try to solve the problem of slow project builds with special tools for parallel (distributed) building, for example IncrediBuild by Xoreax Software (http://www.xoreax.com). Of course, there are other similar systems that can be found on the Web.

Testing applications with large data arrays (running test suites) is a problem when ordinary machines are not powerful enough. It may be solved with several dedicated high-performance machines that developers use through remote access, for example via Remote Desktop or X-Win. Usually only a few developers run tests at the same time, so for a group of 5-7 developers two dedicated high-performance machines are quite enough. It won't be the most convenient solution, but it will be much cheaper than providing every developer with such a workstation.

Use a logging system instead of a debugger

The next obstacle in developing systems that process large data sizes is that you will likely have to reconsider how you work with the debugger. You may even have to stop using it altogether.

Some specialists advocate abandoning debugging for ideological reasons. Their main argument is that using a debugger is a trial-and-error method. A person notices incorrect behavior of an algorithm at some step of its execution and corrects it. He does this without examining why the error occurred and without thinking the correction through. If he doesn't guess the right correction, he will notice it during the next run and make new corrections. This results in lower code quality, and the author of such code is not always sure he understands how it works. Opponents of debugging propose replacing it with a stricter discipline of algorithm development. They recommend using functions as small as possible so that their workings are clear. Besides, they propose paying more attention to unit testing and using logging systems to analyze whether the program works correctly.

There are some rational arguments in this criticism of debuggers. However, as in many cases, one should weigh everything and not go to extremes. Using a debugger is often convenient and may save a lot of effort and time.

Why the debugger is not so attractive

Unfortunately, the debugger is poorly suited to systems that process large data sizes, and the reasons are practical rather than ideological. We would like to acquaint readers with these difficulties. This will save them time fighting the debugger where it is of little use and prompt them to look for alternative solutions.

Let's consider some reasons why alternative tools should be used instead of a traditional debugger (for example, the one integrated into the Visual C++ environment).

1) Slow program execution

Running a program that processes millions or billions of elements under a debugger may become practically impossible because of the huge time costs. Firstly, you have to use the debug build with optimization turned off, which already slows the algorithm down. Secondly, the debug build allocates extra memory to detect array overruns, fills memory on allocation and deallocation, and so on. This slows execution down even more.

One may rightly object that a program does not have to be debugged on large working data sizes and that small test tasks will do. Unfortunately, this is not so. The unpleasant surprise is that when developing 64-bit systems you cannot be sure that algorithms work correctly if you test them only on small data sizes instead of the working sizes of many GB.

Here is another simple example demonstrating why testing at large data sizes is necessary.

```cpp
#include <iostream>
#include <fstream>
#include <vector>
#include <boost/filesystem.hpp>

int main(int argc, char* argv[])
{
  if (argc < 2)
    return 1;
  std::ifstream file;
  file.open(argv[1], std::ifstream::binary);
  if (!file)
    return 1;
  boost::filesystem::path fullPath(argv[1]);
  boost::uintmax_t fileSize = boost::filesystem::file_size(fullPath);
  std::vector<char> buffer;
  // The int counter is the error discussed below: on a 64-bit system
  // it cannot count up to the size of a file larger than 2 GB.
  for (int i = 0; i != fileSize; ++i)
  {
    unsigned char c = 0;
    file >> c;
    if (c >= 'A' && c <= 'Z')
      buffer.push_back(c);
  }
  std::cout << "Array size=" << buffer.size() << std::endl;
  return 0;
}
```

This program reads the file and stores in the array all the characters that are capital English letters. Suppose every character in the input file is a capital English letter. Then on a 32-bit system we cannot put more than 2*1024*1024*1024 characters into the array, so we cannot process a file larger than 2 GB. Let's imagine that this program was used correctly on the 32-bit system, with this limit taken into account, and no errors occurred. On the 64-bit system we would like to process larger files, since the array size is no longer limited to 2 GB. Unfortunately, the program is written incorrectly from the point of view of the LLP64 data model (see table 1) used in 64-bit Windows. The loop counter is of int type, whose size is still 32 bits. If the file is 6 GB in size, the counter can never reach 6 billion. After INT_MAX it overflows, which is formally undefined behavior and in practice wraps to negative values. So the condition "i != fileSize" never becomes false, and an infinite loop occurs.
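A corrected version of the loop can be sketched as follows. This is our illustration, not the article's code, and it makes three changes. It reads from any std::istream instead of a file opened via Boost. Its counter is a std::uintmax_t, the same width as the file size. It reads with get() instead of operator>>, so whitespace bytes are counted too. This matters because operator>> skips whitespace, which the original loop does not account for. The function name ExtractCapitals is hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <istream>
#include <sstream>
#include <vector>

// Sketch: collects capital English letters from the first `size` bytes of
// a stream. The 64-bit counter can reach values far above INT_MAX, and the
// loop also stops if the stream ends early.
std::vector<char> ExtractCapitals(std::istream &in, std::uintmax_t size) {
  std::vector<char> buffer;
  char c;
  for (std::uintmax_t i = 0; i != size && in.get(c); ++i) {
    if (c >= 'A' && c <= 'Z')
      buffer.push_back(c);
  }
  return buffer;
}
```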

This code shows how hard it is to use a debugger to find errors that occur only with large amounts of memory. Suppose you get an infinite loop while processing the file on the 64-bit system. You may take a 50-byte file and watch how the function works under the debugger. But the error won't occur at such a data size, and watching the processing of 6 billion elements under the debugger is impossible.

Of course, this is only an example: it can easily be debugged and the cause of the infinite loop found. Unfortunately, in complex systems this often becomes practically impossible because processing large amounts of data is so slow.

To learn more about such unpleasant examples, see the articles "Forgotten problems of 64-bit program development" [3] (http://www.viva64.com/articles/Forgotten_problems.html) and "20 issues of porting C++ code on the 64-bit platform" [4].

2) Multi-threading

Cluster systems and high-performance servers have long used several simultaneously executing instruction threads to speed up the processing of large data sizes, and rather successfully. But only since multi-core processors appeared on the market has parallel data processing been widely used in application software. And the importance of developing parallel systems will only grow in the future.

Unfortunately, it is not easy to explain what makes debugging parallel programs difficult. Only when you face the task of finding and fixing errors in parallel systems do you feel how useless a tool like the debugger can be. In general, though, all the problems come down to two things. Many errors cannot be reproduced, and the debugging process itself changes the order in which parallel algorithms execute.

To learn more about the problems of debugging parallel systems you may read the following articles: "Program debugging technology for machines with mass parallelism" [5], "Multi-threaded Debugging Techniques" [6], "Detecting Potential Deadlocks" [7].

The difficulties described are solved by specialized methods and tools. Errors in 64-bit code can be found with static analyzers, which work with the program's source code and do not require running it. One example is the static code analyzer Viva64 [8].

To debug parallel systems, you should pay attention to tools such as TotalView Debugger (TVD) [9]. TotalView is a debugger for C, C++ and Fortran that runs on Unix-compatible operating systems and Mac OS X. It lets you control execution threads, show the data of one thread or of all threads, and synchronize threads through breakpoints. It also supports parallel programs that use MPI and OpenMP.

Another interesting tool for analyzing multi-threaded programs is Intel(R) Threading Analysis Tools [10].

Use of a logging system

All these tools, both those mentioned and those left undiscussed, are certainly useful and may help a lot when developing high-performance applications. But one shouldn't forget such a time-proven methodology as logging. Debugging by logging has not lost its relevance over several decades and remains a good tool, which we will discuss in detail. The only thing that has changed is the growing demands placed on logging systems. Let's try to list the properties a modern logging system for high-performance systems should have:

- The code that logs data in the debug version must be absent from the release version of the software product. Firstly, this improves performance and reduces the product's size. Secondly, it prevents debugging information from being used for cracking the application or for other illegal actions.

- The logging system's interfaces should be compact so as not to clutter the main program code.

- Data should be written as fast as possible so that the timing of parallel algorithms changes as little as possible.

- The output log should be understandable and easy to analyze. It should be possible to separate information received from different threads and to vary the level of detail.

We won't give a ready-made logging system in this article. It is hard to make such a system universal, since it depends heavily on the development environment, the project's specifics, the developer's preferences and so on. Instead, we will touch upon some technical solutions that will help you create a convenient and effective logging system if you need one.

The simplest way to log is to use a function similar to printf, as shown in this example:

```cpp
int x = 5, y = 10;
...
printf("Coordinate = (%d, %d)\n", x, y);
```

Its natural drawback is that the information is output both in debug mode and in the release product. That's why we have to change the code as follows:

```cpp
#ifdef DEBUG_MODE
  #define WriteLog printf
#else
  #define WriteLog(a)
#endif
WriteLog("Coordinate = (%d, %d)\n", x, y);
```

This is better. Note that we use our own macro DEBUG_MODE instead of the standard macro _DEBUG to choose how WriteLog is implemented. This lets us include debugging information in Release builds, which is important when debugging on large data sizes. Unfortunately, when compiling the non-debug version in Visual C++ we get the warning "warning C4002: too many actual parameters for macro 'WriteLog'". We could turn this warning off, but that is bad style. We can rewrite the code as follows:

```cpp
#ifdef DEBUG_MODE
  #define WriteLog(a) printf a
#else
  #define WriteLog(a)
#endif
WriteLog(("Coordinate = (%d, %d)\n", x, y));
```

This code is not elegant, because we have to use double parentheses, which are easy to forget. So let's improve it:

```cpp
#ifdef DEBUG_MODE
  #define WriteLog printf
#else
  inline int StubElepsisFunctionForLog(...) { return 0; }
  static class StubClassForLog {
  public:
    inline void operator =(size_t) {}
  private:
    inline StubClassForLog &operator =(const StubClassForLog &)
      { return *this; }
  } StubForLogObject;

  #define WriteLog \
    StubForLogObject = sizeof StubElepsisFunctionForLog
#endif
WriteLog("Coordinate = (%d, %d)\n", x, y);
```

This code looks complicated, but it lets you write single parentheses. When DEBUG_MODE is turned off, the operand of sizeof is never evaluated, so this code does nothing at all and you can safely use it in critical code sections.
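If your compiler supports variadic macros (standardized in C99 and C++11; Visual C++ supports them starting with version 2005), the same goal can be reached more simply. This is an alternative to the stub-class trick above, not the article's technique. There is one difference. The release variant below discards the arguments entirely, so variables used only in log calls may trigger "unused variable" warnings, which the sizeof-based stub avoids.

```cpp
#include <cassert>
#include <cstdio>

// Alternative WriteLog based on variadic macros (__VA_ARGS__).
#ifdef DEBUG_MODE
  #define WriteLog(...) std::printf(__VA_ARGS__)
#else
  // Expands to a no-op: the arguments are dropped by the preprocessor,
  // so they are neither compiled into the release build nor evaluated.
  #define WriteLog(...) ((void)0)
#endif
```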

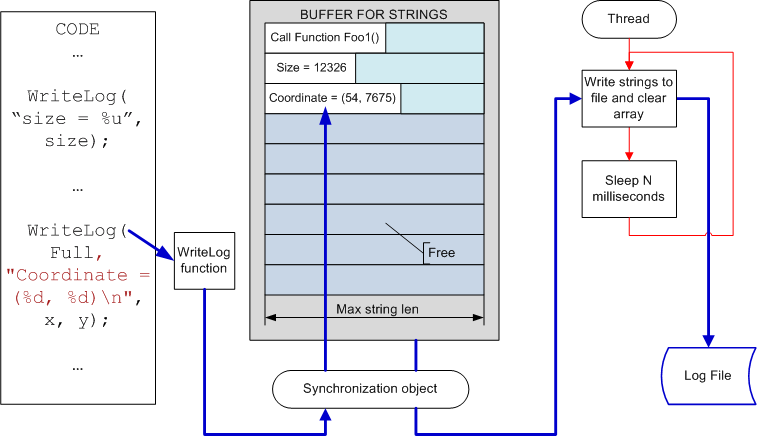

Another improvement is to give each message a level of detail:

```cpp
enum E_LogVerbose {
  Main,
  Full
};
#ifdef DEBUG_MODE
  void WriteLog(E_LogVerbose, const char *strFormat, ...)
  {
    ...
  }
#else
  ...
#endif
WriteLog(Full, "Coordinate = (%d, %d)\n", x, y);
```

This is convenient because, after the program finishes, you can use a special utility to decide whether to filter out unimportant messages. The drawback of this method is that all information is written out, both important and unimportant, which may hurt performance. That's why you may create several functions such as WriteLogMain, WriteLogFull and so on, whose implementation depends on the build mode.

We mentioned that writing debugging information must not slow the algorithm down too much. We can achieve this with a system that collects messages and writes them out in a separate, concurrently running thread. An outline of this mechanism is shown in picture 2.
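For the variant with verbosity levels described above, a minimal sketch could look like this. The names WriteLogTo and FilterLog, the one-letter tags and the std::string sink are our assumptions, made to keep the example self-contained; a real system would write to a file. FilterLog plays the role of the post-run utility that drops unimportant messages.

```cpp
#include <cassert>
#include <cstdarg>
#include <cstdio>
#include <sstream>
#include <string>

enum E_LogVerbose { Main, Full };

// Debug-mode logging with a level tag ("M " or "F "). Assumptions of this
// sketch: each message is one line ending in '\n' and shorter than 1024
// chars (longer ones are truncated); the log is kept in a string.
void WriteLogTo(std::string &logText, E_LogVerbose level,
                const char *strFormat, ...) {
  char text[1024];
  va_list args;
  va_start(args, strFormat);
  std::vsnprintf(text, sizeof text, strFormat, args);
  va_end(args);
  logText += (level == Main) ? "M " : "F ";
  logText += text;
}

// Post-run utility: keeps messages up to maxLevel and strips the tags.
std::string FilterLog(const std::string &logText, E_LogVerbose maxLevel) {
  std::istringstream in(logText);
  std::string line, result;
  while (std::getline(in, line)) {
    if (line.size() < 2)
      continue;  // not a tagged message
    if (line[0] == 'M' || maxLevel == Full)
      result += line.substr(2) + '\n';
  }
  return result;
}
```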

Picture 2. Logging system with lazy data write.

As you can see in the picture, each new portion of data is written into an intermediate array of fixed-length strings. The fixed size of the array and of its strings avoids expensive memory allocation operations. This doesn't limit the system at all: you can simply choose the string length and the array size with a margin. For example, 5000 strings of 4000 characters will be enough to debug nearly any system. And I think you'll agree that the 20 MB of memory this requires is not critical for modern systems. If the array overflows anyway, it is easy to add a mechanism that writes the information to the file ahead of time.

The described mechanism makes WriteLog execute almost instantly. If the system has idle processor cores, writing to the file will be virtually invisible to the main program code.
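The scheme in picture 2 can be sketched with C++11 threads as follows. This is our illustration under stated assumptions, not the authors' implementation. The class name LazyLog is hypothetical, and the defaults mirror the 5000 strings of 4000 characters mentioned above. When all slots are busy, the caller simply waits for the writer thread instead of flushing early.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdarg>
#include <cstddef>
#include <cstdio>
#include <mutex>
#include <ostream>
#include <sstream>
#include <string>
#include <thread>
#include <vector>

// Sketch of a logger with lazy writing: WriteLog formats into one of
// slotCount preallocated slots of slotLength chars (both must be non-zero);
// a background thread copies filled slots to the output stream.
class LazyLog {
public:
  explicit LazyLog(std::ostream &out, std::size_t slotCount = 5000,
                   std::size_t slotLength = 4000)
      : out_(out), slotCount_(slotCount), slotLength_(slotLength),
        slots_(slotCount * slotLength), head_(0), tail_(0), stop_(false),
        writer_(&LazyLog::WriterLoop, this) {}

  ~LazyLog() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      stop_ = true;
    }
    notEmpty_.notify_one();
    writer_.join();  // the writer drains all pending slots before exiting
    out_.flush();
  }

  // printf-style; messages longer than slotLength - 1 chars are truncated.
  void WriteLog(const char *format, ...) {
    std::unique_lock<std::mutex> lock(mutex_);
    // All slots busy: wait until the writer thread frees one.
    notFull_.wait(lock, [this] { return tail_ - head_ < slotCount_; });
    char *slot = &slots_[(tail_ % slotCount_) * slotLength_];
    va_list args;
    va_start(args, format);
    std::vsnprintf(slot, slotLength_, format, args);
    va_end(args);
    ++tail_;
    lock.unlock();
    notEmpty_.notify_one();
  }

private:
  void WriterLoop() {
    std::unique_lock<std::mutex> lock(mutex_);
    for (;;) {
      notEmpty_.wait(lock, [this] { return head_ != tail_ || stop_; });
      while (head_ != tail_) {
        // Copy the slot and release it, then do the slow output unlocked.
        std::string line(&slots_[(head_ % slotCount_) * slotLength_]);
        ++head_;
        lock.unlock();
        notFull_.notify_one();
        out_ << line;
        lock.lock();
      }
      if (stop_)
        return;
    }
  }

  std::ostream &out_;
  std::size_t slotCount_, slotLength_;
  std::vector<char> slots_;
  std::size_t head_, tail_;  // counters of consumed / produced messages
  bool stop_;
  std::mutex mutex_;
  std::condition_variable notEmpty_, notFull_;
  std::thread writer_;  // declared last: starts after the other members exist
};
```

In practice you would pass an open std::ofstream and call, for example, `log.WriteLog("i=%u\n", i);` from the algorithm. For a quick check, an std::ostringstream works too. The destructor waits until every pending message has been written. Formatting still happens in the calling thread; only the slow file output is moved to the background.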

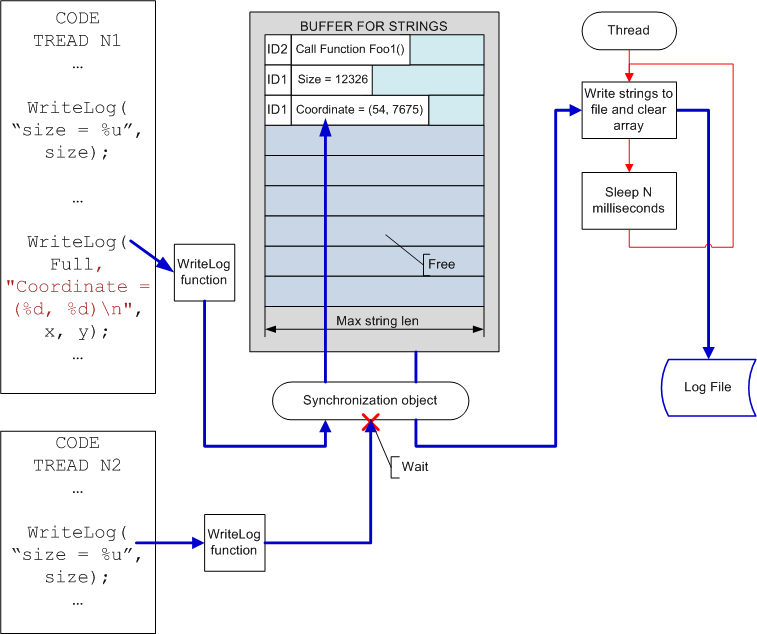

The advantage of the described system is that it works practically unchanged when debugging a parallel program in which several threads write to the log simultaneously. You just need to add a thread identifier so that you can later tell which thread each message came from (see picture 3).
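Adding a thread identifier can be as simple as prefixing each message. In the sketch below, SmallThreadIndex and TagWithThread are hypothetical names. std::thread::id values are opaque and often long. The helper therefore maps each thread to a small number in order of first use, which keeps the log readable.

```cpp
#include <cassert>
#include <cstdio>
#include <map>
#include <mutex>
#include <string>
#include <thread>

// Hypothetical helper: returns a small, stable number for the calling
// thread, assigned in the order in which threads first log something.
unsigned SmallThreadIndex() {
  static std::mutex guard;
  static std::map<std::thread::id, unsigned> indexes;
  std::lock_guard<std::mutex> lock(guard);
  const std::thread::id id = std::this_thread::get_id();
  auto it = indexes.find(id);
  if (it == indexes.end())  // size() is read before the new entry is added
    it = indexes.emplace(id, static_cast<unsigned>(indexes.size())).first;
  return it->second;
}

// Prefixes a message with "[T<n>] " so that lines from different threads
// can be told apart (and filtered) later.
std::string TagWithThread(const std::string &message) {
  char prefix[24];
  std::snprintf(prefix, sizeof prefix, "[T%u] ", SmallThreadIndex());
  return prefix + message;
}
```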

Picture 3. Logging system while debugging multithreaded applications.

The last improvement we'd like to suggest is to show the nesting level of messages when a function is called or a logical block begins. This is easy to do with a special class that writes a block-begin marker to the log in its constructor and a block-end marker in its destructor. Let's show this with an example.

Program code:

```cpp
class NewLevel {
public:
  NewLevel() { WriteLog("__BEGIN_LEVEL__\n"); }
  ~NewLevel() { WriteLog("__END_LEVEL__\n"); }
};
#define NEW_LEVEL NewLevel tempLevelObject;

void MyFoo() {
  WriteLog("Begin MyFoo()\n");
  NEW_LEVEL;
  int x = 5, y = 10;
  printf("Coordinate = (%d, %d)\n", x, y);
  WriteLog("Begin Loop:\n");
  for (unsigned i = 0; i != 3; ++i)
  {
    NEW_LEVEL;
    WriteLog("i=%u\n", i);
  }
  printf("Coordinate = (%d, %d)\n", x, y);
}
```

Log's content:

```
Begin MyFoo()
__BEGIN_LEVEL__
Coordinate = (5, 10)
Begin Loop:
__BEGIN_LEVEL__
i=0
__END_LEVEL__
__BEGIN_LEVEL__
i=1
__END_LEVEL__
__BEGIN_LEVEL__
i=2
__END_LEVEL__
Coordinate = (5, 10)
__END_LEVEL__
```

Log after transformation:

```
Begin MyFoo()
  Coordinate = (5, 10)
  Begin Loop:
    i=0
    i=1
    i=2
  Coordinate = (5, 10)
```

That's all for this topic. Finally, we would like to mention that the article "Logging In C++" [11] (http://www.ddj.com/cpp/201804215) may also be useful to you. We wish you successful debugging.
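The transformation shown above can be done by a small post-processing utility. Here is one possible sketch; the name IndentLog and the two-space indent are our choices. It assumes a log from a single thread. With several threads, markers would first have to be separated by thread identifier.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>

// Hypothetical post-processing utility: turns the __BEGIN_LEVEL__ /
// __END_LEVEL__ markers written by NewLevel into indentation.
std::string IndentLog(const std::string &rawLog, std::size_t indentWidth = 2) {
  std::istringstream in(rawLog);
  std::string line, result;
  std::size_t depth = 0;
  while (std::getline(in, line)) {
    if (line == "__BEGIN_LEVEL__") {
      ++depth;
    } else if (line == "__END_LEVEL__") {
      if (depth > 0)
        --depth;  // tolerate unbalanced markers, e.g. in a truncated log
    } else {
      result.append(depth * indentWidth, ' ');
      result += line;
      result += '\n';
    }
  }
  return result;
}
```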

The use of the right data types from the viewpoint of 64-bit technologies

Using base data types that match the hardware platform is an important part of creating high-quality, high-performance software in C/C++. With the arrival of 64-bit systems, new data models came into use: LLP64, LP64 and ILP64 (see table 1). They change the rules and recommendations for using base data types such as int, unsigned, long, unsigned long, ptrdiff_t, size_t and pointers. Unfortunately, there is practically no popular literature or articles on choosing types. And the sources that do cover it, for example "Software Optimization Guide for AMD64 Processors" [12], are seldom read by application programmers.

Choosing the right base types for processing data matters for two important reasons: the correctness of the code and its efficiency.

For historical reasons, the base and most frequently used integer type in C and C++ is int or unsigned int. int is traditionally considered the optimal type, since its size coincides with the length of the processor's machine word.

A machine word is a group of memory bits that the processor accesses in one operation (or processes as a single unit). It usually contains 16, 32 or 64 bits.

Until recently, the tradition of making int the size of the machine word was seldom broken. On 16-bit processors int consisted of 16 bits, and on 32-bit processors it is 32 bits. Of course, other relations between the size of int and the size of the machine word existed, but they were rare and are of no interest to us now.

What interests us is that int remained 32 bits in most systems even after 64-bit processors appeared. int is 32-bit in the LLP64 and LP64 data models, which are used, respectively, in 64-bit Windows and in most Unix systems (Linux, Solaris, SGI Irix, HP UX 11).

Leaving int 32 bits wide is a poor decision for many reasons, but it really is a reasonable choice of the lesser of two evils. The main reason is backward compatibility. To learn more about the reasons for this choice, you may read the blog post "Why did the Win64 team choose the LLP64 model?" [13] (http://blogs.msdn.com/oldnewthing/archive/2005/01/31/363790.aspx) and the article "64-Bit Programming Models: Why LP64?" [14].

For developers of 64-bit applications, all of the above is a reason to follow two new recommendations when developing software.

Recommendation 1. Use ptrdiff_t and size_t instead of int and unsigned for loop counters and for counters in address arithmetic.

Recommendation 2. Use ptrdiff_t and size_t instead of int and unsigned for indexing arrays.

In other words, whenever possible you should use data types that are 64 bits wide on a 64-bit system. Consequently, you shouldn't use constructions like:

```cpp
for (int i = 0; i != n; i++)
  array[i] = 0.0;
```

Yes, this is a canonical code example. Yes, it appears in many programs. Yes, learning C and C++ begins with it. But we recommend not using it anymore. Use either an iterator or the ptrdiff_t and size_t types, as shown in the improved example:

```cpp
for (size_t i = 0; i != n; i++)
  array[i] = 0.0;
```

Developers of Unix applications may note that using long for counters and array indexes became common practice long ago. long is 64-bit on 64-bit Unix systems, and it looks neater than ptrdiff_t or size_t. That's true, but we should keep in mind two important points.

1) long remained 32-bit in 64-bit Windows (see table 1). Consequently, it cannot be used instead of ptrdiff_t and size_t.

2) The use of long and unsigned long types causes a lot of troubles for developers of cross-platform applications for Windows and Linux systems. Long type has different sizes in these systems and only complicates the situation. It's better to stick to types which have the same size in 32-bit and 64-bit Windows and Linux systems.
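A quick way to see which data model a particular compiler uses is to print the sizes of the types in question. The following is a minimal sketch in standard C++; the function names PrintTypeSizes and DataModelName are our own and are not part of the article's demo program. On 64-bit Windows (LLP64) one would expect int and long to be 4 bytes and size_t, ptrdiff_t and pointers to be 8 bytes; on 64-bit Linux (LP64) long is 8 bytes as well.

```cpp
#include <cstddef>
#include <cstdio>

// Illustrative helper (our own name): classifies the target only by the
// sizes of int, long and pointers.
const char* DataModelName() {
    if (sizeof(void*) == 4 && sizeof(int) == 4 && sizeof(long) == 4) return "ILP32";
    if (sizeof(void*) == 8 && sizeof(int) == 4 && sizeof(long) == 4) return "LLP64";
    if (sizeof(void*) == 8 && sizeof(int) == 4 && sizeof(long) == 8) return "LP64";
    return "other";
}

// Illustrative helper (our own name): prints the sizes, in bytes, of the
// types discussed in the text together with the detected data model.
void PrintTypeSizes() {
    std::printf("int=%zu long=%zu long long=%zu size_t=%zu ptrdiff_t=%zu void*=%zu (%s)\n",
                sizeof(int), sizeof(long), sizeof(long long),
                sizeof(std::size_t), sizeof(std::ptrdiff_t), sizeof(void*),
                DataModelName());
}

// On the x86 and x86-64 platforms discussed here, size_t and ptrdiff_t are
// pointer-sized; this is what makes them suitable for indexes and counters.
static_assert(sizeof(std::size_t) == sizeof(void*), "size_t is not pointer-sized");
static_assert(sizeof(std::ptrdiff_t) == sizeof(void*), "ptrdiff_t is not pointer-sized");
```

Calling PrintTypeSizes() once at startup of a test build is an easy way to confirm which model a given compiler and platform combination actually uses.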

Now let us explain with examples why we insist so strongly on using ptrdiff_t/size_t instead of the usual int/unsigned types.

We will begin with an example illustrating a typical error: using the unsigned type for a loop counter in 64-bit code. We have already described a similar example, but let us look at it once again, since this error is widespread:

    size_t Count = BigValue;
    for (unsigned Index = 0; Index != Count; ++Index)
    { ... }

Variants of this code can be found in many programs. It works correctly on 32-bit systems, where the value of Count cannot exceed SIZE_MAX (which equals UINT_MAX on a 32-bit system). On a 64-bit system the range of possible values of Count is larger, and when Count > UINT_MAX the loop becomes infinite: Index wraps around to 0 before it can ever reach Count. The proper correction is to use the size_t type instead of unsigned. The next example shows the error of using the int type to index large arrays:

    double *BigArray;
    int Index = 0;
    while (...)
      BigArray[Index++] = 3.14f;

This code does not look suspicious to a developer accustomed to using int or unsigned variables as array indexes. Unfortunately, it will not work on a 64-bit system once the processed array BigArray grows beyond INT_MAX (about two billion) items; with an unsigned index the limit is UINT_MAX (about four billion). In that case the Index variable overflows (for a signed int this is formally undefined behavior) and the program produces an incorrect result: in particular, the array will not be filled completely. Again, the correction is to use ptrdiff_t or size_t for indexes. As the last example, we would like to demonstrate the potential danger of mixing 32-bit and 64-bit types, which you should avoid whenever possible. Unfortunately, few developers think about the consequences of careless mixed arithmetic, and the next example comes as a complete surprise to many (the results were obtained with Microsoft Visual C++ 2005 in 64-bit compilation mode):

    int x = 100000;
    int y = 100000;
    int z = 100000;
    intptr_t size = 1;
    // Result:
    intptr_t v1 = x * y * z;                 // -1530494976
    intptr_t v2 = intptr_t(x) * y * z;       // 1000000000000000
    intptr_t v3 = x * y * intptr_t(z);       // 141006540800000
    intptr_t v4 = size * x * y * z;          // 1000000000000000
    intptr_t v5 = x * y * z * size;          // -1530494976
    intptr_t v6 = size * (x * y * z);        // -1530494976
    intptr_t v7 = size * (x * y) * z;        // 141006540800000
    intptr_t v8 = ((size * x) * y) * z;      // 1000000000000000
    intptr_t v9 = size * (x * (y * z));      // -1530494976

Note that an expression such as "intptr_t v2 = intptr_t(x) * y * z;" does not in general guarantee a correct result. It only guarantees that the expression "intptr_t(x) * y * z" has the intptr_t type. As v3, v7 and v9 show, any multiplication whose two operands are both int is still performed in 32 bits and may overflow, so the result depends on where the 64-bit operand appears in the order of evaluation. The article "20 issues of porting C++ code on the 64-bit platform" [4] will help you learn more about this problem.

Now let us look at an example demonstrating the performance advantages of ptrdiff_t and size_t. For the demonstration we will take a simple algorithm that calculates the length of the shortest path in a labyrinth. You can find the complete code of the program at: http://www.Viva64.com/articles/testspeedexp.zip.

In this article we give only the text of the FindMinPath32 and FindMinPath64 functions. Both functions calculate the length of the shortest path between two points in a labyrinth. The rest of the code is of no interest to us now.

    typedef char FieldCell;
    #define FREE_CELL 1
    #define BARRIER_CELL 2
    #define TRAVERSED_PATH_CELL 3

    // ArrayWidth_32, ArrayHeight_32, FinishX_32, FinishY_32, their _64
    // counterparts and min() are defined elsewhere in the demo program.
    unsigned FindMinPath32(FieldCell (*field)[ArrayHeight_32],
                           unsigned x, unsigned y,
                           unsigned bestPathLen, unsigned currentPathLen)
    {
      ++currentPathLen;
      if (currentPathLen >= bestPathLen)
        return UINT_MAX;
      if (x == FinishX_32 && y == FinishY_32)
        return currentPathLen;
      FieldCell oldState = field[x][y];
      field[x][y] = TRAVERSED_PATH_CELL;
      unsigned len = UINT_MAX;
      if (x > 0 && field[x - 1][y] == FREE_CELL) {
        unsigned reslen = FindMinPath32(field, x - 1, y, bestPathLen, currentPathLen);
        len = min(reslen, len);
      }
      if (x < ArrayWidth_32 - 1 && field[x + 1][y] == FREE_CELL) {
        unsigned reslen = FindMinPath32(field, x + 1, y, bestPathLen, currentPathLen);
        len = min(reslen, len);
      }
      if (y > 0 && field[x][y - 1] == FREE_CELL) {
        unsigned reslen = FindMinPath32(field, x, y - 1, bestPathLen, currentPathLen);
        len = min(reslen, len);
      }
      if (y < ArrayHeight_32 - 1 && field[x][y + 1] == FREE_CELL) {
        unsigned reslen = FindMinPath32(field, x, y + 1, bestPathLen, currentPathLen);
        len = min(reslen, len);
      }
      field[x][y] = oldState;
      if (len >= bestPathLen)
        return UINT_MAX;
      return len;
    }

    size_t FindMinPath64(FieldCell (*field)[ArrayHeight_64],
                         size_t x, size_t y,
                         size_t bestPathLen, size_t currentPathLen)
    {
      ++currentPathLen;
      if (currentPathLen >= bestPathLen)
        return SIZE_MAX;
      if (x == FinishX_64 && y == FinishY_64)
        return currentPathLen;
      FieldCell oldState = field[x][y];
      field[x][y] = TRAVERSED_PATH_CELL;
      size_t len = SIZE_MAX;
      if (x > 0 && field[x - 1][y] == FREE_CELL) {
        size_t reslen = FindMinPath64(field, x - 1, y, bestPathLen, currentPathLen);
        len = min(reslen, len);
      }
      if (x < ArrayWidth_64 - 1 && field[x + 1][y] == FREE_CELL) {
        size_t reslen = FindMinPath64(field, x + 1, y, bestPathLen, currentPathLen);
        len = min(reslen, len);
      }
      if (y > 0 && field[x][y - 1] == FREE_CELL) {
        size_t reslen = FindMinPath64(field, x, y - 1, bestPathLen, currentPathLen);
        len = min(reslen, len);
      }
      if (y < ArrayHeight_64 - 1 && field[x][y + 1] == FREE_CELL) {
        size_t reslen = FindMinPath64(field, x, y + 1, bestPathLen, currentPathLen);
        len = min(reslen, len);
      }
      field[x][y] = oldState;
      if (len >= bestPathLen)
        return SIZE_MAX;
      return len;
    }

The FindMinPath32 function is written in the classic 32-bit style, using unsigned types. The FindMinPath64 function differs from it only in that all unsigned types are replaced with size_t (and, accordingly, UINT_MAX with SIZE_MAX and the _32 constants with their _64 counterparts). There are no other differences! We think you will agree that this is an easy modification of the program. Now let us compare the execution speeds of these two functions (see table 2).

    #  Mode and function                                  Relative execution time
    1  32-bit compilation mode, function FindMinPath32    1
    2  32-bit compilation mode, function FindMinPath64    1.002
    3  64-bit compilation mode, function FindMinPath32    0.93
    4  64-bit compilation mode, function FindMinPath64    0.85

Table 2. Execution time of the FindMinPath32 and FindMinPath64 functions.

For clarity, Table 2 gives execution times relative to the execution time of FindMinPath32 in a 32-bit system.

In the first line, the execution time of FindMinPath32 in a 32-bit system is 1, because we take it as the unit of measure.

In the second line we see that the execution time of FindMinPath64 in a 32-bit system is also about 1. This is not surprising: in a 32-bit system the unsigned type coincides with size_t, so there is no difference between FindMinPath32 and FindMinPath64. The small deviation (1.002) is just measurement error.

In the third line we see a 7% performance increase. This is the expected result of recompiling the code for a 64-bit system.

The fourth line is the most interesting. Here the performance increase is 15%. This means that simply using size_t instead of unsigned lets the compiler generate more efficient code, which saves another 8% of the baseline execution time (0.85 versus 0.93)!

This is a simple and clear example of how using data whose size differs from the machine word size reduces the performance of algorithms. Simply replacing int and unsigned with ptrdiff_t and size_t can give a noticeable performance increase. This applies first of all to using these types for array indexing, address arithmetic and loop organization.
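The same issue shows up in address arithmetic. Here is a minimal sketch, assuming a hypothetical two-dimensional field stored row by row in one flat array (the function names are ours, not from the demo program). With 32-bit unsigned arithmetic the intermediate product silently wraps modulo 2^32 once the field is large enough; with size_t operands on a 64-bit system it does not. As in the mixed-arithmetic example above, the conversion to the 64-bit type has to happen before the multiplication, not after it.

```cpp
#include <cstddef>

// Hypothetical helper: offset of cell (x, y) in a row-major field with
// 'width' cells per row, computed the classic 32-bit way. The product
// y * width wraps modulo 2^32 when it exceeds UINT_MAX.
unsigned CellOffset32(unsigned x, unsigned y, unsigned width) {
    return y * width + x;
}

// Hypothetical helper: the same computation with size_t operands. On a
// 64-bit system all arithmetic is done in 64 bits.
std::size_t CellOffset64(std::size_t x, std::size_t y, std::size_t width) {
    return y * width + x;
}

// Hypothetical helper showing a typical mistake: converting the result
// instead of the operands. The arithmetic is still 32-bit, so the cast
// comes too late to help.
std::size_t CellOffsetLateCast(unsigned x, unsigned y, unsigned width) {
    return static_cast<std::size_t>(y * width + x);
}
```

For x = y = width = 100000 the correct offset is 10000100000, which CellOffset64 returns on a 64-bit system, while the two 32-bit variants both return the wrapped value 1410165408.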

We hope that, having read all of the above, you will think twice before writing:

    for (int i = 0; i != n; i++)
      array[i] = 0.0;

To automate the search for errors in 64-bit code, developers of Windows applications may consider the Viva64 static code analyzer [8]. First, it will help find many of these errors. Second, when you develop programs under its control, you will use 32-bit variables less often and avoid mixed arithmetic with 32-bit and 64-bit types, which will also help increase the performance of your code. For developers of Unix systems, static analyzers such as Gimpel Software PC-Lint [15] and Parasoft C++test [16] may be of interest. They can diagnose some 64-bit errors in code for the LP64 data model used in most Unix systems. To learn more about developing high-quality and efficient 64-bit code, you may read the following articles: "Problems of testing 64-bit applications" [17], "24 Considerations for Moving Your Application to a 64-bit Platform" [18], "Porting and Optimizing Multimedia Codecs for AMD64 architecture on Microsoft Windows" [19], "Porting and Optimizing Applications on 64-bit Windows for AMD64 Architecture" [20].

Additional ways of increasing the performance of software systems

In the last part of this article we would like to touch upon a few more technologies that may be useful when developing resource-intensive software.

Intrinsic functions

Intrinsic functions are special platform-dependent functions that perform actions which cannot be expressed at the level of C/C++ code, or that perform them much more efficiently. In effect, they make it possible to avoid inline assembler, whose use is often impossible or undesirable.

Programs may use intrinsic functions to create faster code, because there is no overhead of an ordinary function call. The code size, of course, will be a bit larger in that case. MSDN gives a list of functions that can be replaced with their intrinsic versions, for example memcpy, strcmp and others.

The Microsoft Visual C++ compiler has a special option, /Oi, which automatically replaces calls to some functions with their intrinsic counterparts.

Besides this automatic replacement, you can use intrinsic functions explicitly in your code. There are several reasons for doing so:

- Inline assembler is not supported by the Visual C++ compiler in 64-bit mode, while intrinsic functions are.

- Intrinsic functions are simpler to use, as they do not require knowledge of registers and other similar low-level constructs.

- Intrinsic functions are updated along with the compiler, while assembler code has to be updated manually.

- The compiler's optimizer does not work with assembler code, and such code has to be placed in an external module and linked separately; intrinsic code needs neither.

- Intrinsic code is easier to port than assembler code.

- Using intrinsic functions in automatic mode (via the compiler switch) gives a few percent of performance increase for free, and using them "manually" can give even more. That is why using intrinsic functions is justified.

To learn more about using intrinsic functions, see the Visual C++ team blog [21].
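As an illustration of explicit intrinsic use, here is a minimal sketch that adds two arrays of doubles two elements at a time with the SSE2 intrinsics _mm_loadu_pd, _mm_add_pd and _mm_storeu_pd from <emmintrin.h>. These intrinsics are available both in Visual C++ (including x64, where inline assembler is not) and in GCC/Clang for x86-64. The function name AddArrays is our own, and whether this beats the compiler's own vectorization of a plain loop has to be measured in each case.

```cpp
#include <cstddef>
#include <emmintrin.h>  // SSE2 intrinsics: __m128d, _mm_loadu_pd, _mm_add_pd, _mm_storeu_pd

// Hypothetical helper: dst[i] = a[i] + b[i] for i in [0, n).
// Two doubles are processed per iteration with SSE2; a scalar loop handles
// an odd trailing element. Unaligned loads/stores are used, so the arrays
// need no particular alignment.
void AddArrays(double* dst, const double* a, const double* b, std::size_t n) {
    std::size_t i = 0;
    for (; i + 2 <= n; i += 2) {
        __m128d va = _mm_loadu_pd(a + i);
        __m128d vb = _mm_loadu_pd(b + i);
        _mm_storeu_pd(dst + i, _mm_add_pd(va, vb));
    }
    for (; i < n; ++i)
        dst[i] = a[i] + b[i];
}
```

Note that the loop counter is size_t, in line with the recommendations above.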

Data blocking and alignment

Data alignment does not affect code performance as strongly as it did 10 years ago. Still, sometimes you can gain a little here as well, saving some memory and improving performance.

    struct foo_original { int a; void *b; int c; };

This structure takes 12 bytes in 32-bit mode but 24 bytes in 64-bit mode, because the 8-byte pointer must be 8-byte aligned and padding is inserted after each int. To make the structure take only 16 bytes in 64-bit mode, change the order of its fields:

    struct foo_new { void *b; int a; int c; };

In some cases it is useful to help the compiler explicitly by specifying alignment manually in order to increase performance. For example, data processed by SSE instructions should be aligned on a 16-byte boundary. You can do this as follows:

    // 16-byte aligned data
    __declspec(align(16)) double init_val[2] = { 3.14, 3.14 };
    // SSE2 movapd instruction
    __m128d vector_var = _mm_load_pd(init_val);

The sources "Porting and Optimizing Multimedia Codecs for AMD64 architecture on Microsoft Windows" [19] and "Porting and Optimizing Applications on 64-bit Windows for AMD64 Architecture" [20] offer a detailed review of these problems.
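The effect of field order and of explicit alignment can be checked directly. The sketch below repeats the two structures from the text and, as an assumption, uses the standard C++11 alignas(16) as a portable stand-in for the Visual C++ specific __declspec(align(16)). The sizes in the comments hold for x86-64 targets (both LLP64 Windows and LP64 Linux), where pointers are 8 bytes and 8-byte aligned; SumAlignedPair and IsAligned16 are our own illustrative names.

```cpp
#include <cstdint>
#include <emmintrin.h>  // SSE2: __m128d, _mm_load_pd, _mm_storeu_pd

struct foo_original { int a; void* b; int c; };  // 4 + 4 padding + 8 + 4 + 4 padding = 24 on x86-64
struct foo_new      { void* b; int a; int c; };  // 8 + 4 + 4 = 16 on x86-64

// alignas(16): standard C++ stand-in for __declspec(align(16)).
alignas(16) const double init_val[2] = {3.14, 3.14};

// Hypothetical helper: loads init_val with the aligned SSE2 load (movapd),
// which requires 16-byte alignment, and returns the sum of both lanes.
double SumAlignedPair() {
    __m128d v = _mm_load_pd(init_val);
    double out[2];
    _mm_storeu_pd(out, v);  // unaligned store into an ordinary local array
    return out[0] + out[1];
}

// Hypothetical helper: true if p lies on a 16-byte boundary.
bool IsAligned16(const void* p) {
    return reinterpret_cast<std::uintptr_t>(p) % 16 == 0;
}
```

Reordering fields from the largest to the smallest, as in foo_new, is a simple rule of thumb that usually minimizes padding without any compiler-specific keywords.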

Files mapped into memory

With the appearance of 64-bit systems, mapping files into memory has become more attractive, because the address space available for accessing data has grown. It can be a very good addition for some applications. Don't forget about it.

Memory mapping of files is becoming less useful on 32-bit architectures, especially since relatively cheap recordable DVD technology appeared. A 4 GB file is no longer uncommon, and such large files cannot easily be memory-mapped on 32-bit architectures. Only a region of the file can be mapped into the address space at a time, so to access such a file through memory mapping, regions have to be mapped into and out of the address space as needed. On 64-bit Windows the address space is much larger, so you can map the whole file at once.
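On Windows a whole file is mapped with CreateFileMapping and MapViewOfFile. So that the example can also run on Unix-like systems, the sketch below deliberately swaps in the POSIX equivalents open, fstat and mmap: it maps an entire file read-only in one call and sums its bytes. On a 64-bit system this works for files larger than 4 GB as well, since the whole mapping fits into the address space. SumFileBytes is our own illustrative name, and error handling is kept minimal.

```cpp
#include <cstddef>
#include <fcntl.h>     // open
#include <sys/mman.h>  // mmap, munmap
#include <sys/stat.h>  // fstat
#include <unistd.h>    // close

// Hypothetical helper (POSIX sketch, not the Windows API): maps the whole
// file read-only with a single mmap call and returns the sum of its bytes,
// or -1 on error.
long long SumFileBytes(const char* path) {
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    struct stat st;
    if (fstat(fd, &st) != 0) {
        close(fd);
        return -1;
    }
    std::size_t size = static_cast<std::size_t>(st.st_size);
    if (size == 0) {  // mmap fails for a zero length, so handle it separately
        close(fd);
        return 0;
    }
    void* p = mmap(nullptr, size, PROT_READ, MAP_PRIVATE, fd, 0);
    close(fd);  // the mapping remains valid after the descriptor is closed
    if (p == MAP_FAILED)
        return -1;
    const unsigned char* bytes = static_cast<const unsigned char*>(p);
    long long sum = 0;
    for (std::size_t i = 0; i != size; ++i)  // size_t index: no 4G limit
        sum += bytes[i];
    munmap(p, size);
    return sum;
}
```

Once the file is mapped, it is accessed like an ordinary array, so the recommendations about size_t indexes apply to it directly.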

Keyword __restrict

One of the most serious problems for a compiler is aliasing. When code reads and writes memory, it is often impossible to determine at compile time whether more than one pointer has access to the same memory area, i.e. whether several pointers can be "synonyms" for the same memory. That is why, inside a loop in which memory is both read and written, the compiler has to be very careful about keeping data in registers rather than in memory. Such underuse of registers can significantly affect performance.

The __restrict keyword makes this decision easier for the compiler: it "tells" the compiler that it may use registers more freely.

The __restrict keyword allows the compiler to assume that the marked pointers are not aliased, i.e. do not refer to the same memory area. In this case the compiler can optimize more efficiently. Let us look at an example:

    // a, b and c are assumed to point to valid, non-overlapping memory
    int * __restrict a;
    int *b, *c;
    for (int i = 0; i < 100; i++)
    {
      *a += *b++ - *c++;  // no aliases exist
    }

In this code the compiler can safely keep the sum in a register associated with the variable "a" and avoid writing it to memory on every iteration. MSDN is a good source of information about the __restrict keyword.
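Here is the same loop packaged as a function: a sketch assuming the compiler accepts __restrict in C++ (Visual C++, GCC and Clang do; in C99 the standard keyword is restrict). The qualifier is a promise made by the programmer: if a caller passes a sum pointer that overlaps b or c, the behavior is undefined, so it should be used only where non-aliasing is actually guaranteed. AccumulateDifferences is our own illustrative name.

```cpp
#include <cstddef>

// Hypothetical helper: adds b[i] - c[i] for i in [0, n) to *sum.
// Because 'sum' is declared __restrict, the compiler may keep *sum in a
// register for the whole loop and store it to memory once at the end,
// instead of reloading and storing it on every iteration.
void AccumulateDifferences(int* __restrict sum, const int* b, const int* c,
                           std::size_t n) {
    for (std::size_t i = 0; i != n; ++i)
        *sum += b[i] - c[i];
}
```

Comparing the assembly listings of this function with and without __restrict is a simple way to see whether a particular compiler takes advantage of the hint.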

SSE instructions

Applications running on 64-bit processors (regardless of the mode) will work more efficiently if they use SSE instructions instead of MMX/3DNow! instructions. This is related to the width of the processed data: SSE/SSE2 instructions operate on 128-bit data, while MMX/3DNow! instructions operate only on 64-bit data. That is why it is better to rewrite code that uses MMX/3DNow! with SSE in mind.

We will not dwell on SSE in this article; interested readers may refer to the documentation written by the developers of the processor architectures.

Some particular rules of using language constructions

The 64-bit architecture gives new opportunities for optimizing code at the level of individual statements. These are the (already traditional) techniques of "rewriting" pieces of a program so that the compiler can optimize them better. Of course, we cannot recommend these methods for mass use, but it may be useful to know about them.

First on the list of such optimizations is manual loop unrolling. The idea of this method is clear from the example:

    double a[100], sum, sum1, sum2, sum3, sum4;
    sum = sum1 = sum2 = sum3 = sum4 = 0.0;
    for (int i = 0; i < 100; i += 4)
    {
      sum1 += a[i];
      sum2 += a[i+1];
      sum3 += a[i+2];
      sum4 += a[i+3];
    }
    sum = sum1 + sum2 + sum3 + sum4;

- In many cases the compiler can unroll the loop into this form itself (with the /fp:fast switch in Visual C++), but not always.

- Another syntactic optimization is using array notation instead of pointer notation.
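The unrolled loop shown earlier assumes that the number of elements is a multiple of four. A more general sketch (the function names are our own) adds a remainder loop. Note that four partial sums change the order of floating-point additions, so for arbitrary data the result may differ from the plain loop in the last bits; this reordering is why the compiler applies such a transformation on its own only under a relaxed floating-point model such as /fp:fast.

```cpp
#include <cstddef>

// Hypothetical helper: straightforward summation, for comparison.
double SumSimple(const double* a, std::size_t n) {
    double sum = 0.0;
    for (std::size_t i = 0; i != n; ++i)
        sum += a[i];
    return sum;
}

// Hypothetical helper: manually unrolled by four. The four partial sums
// are independent, so their additions do not have to wait for each other.
double SumUnrolled4(const double* a, std::size_t n) {
    double sum1 = 0.0, sum2 = 0.0, sum3 = 0.0, sum4 = 0.0;
    std::size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        sum1 += a[i];
        sum2 += a[i + 1];
        sum3 += a[i + 2];
        sum4 += a[i + 3];
    }
    for (; i != n; ++i)  // the remaining 0-3 elements
        sum1 += a[i];
    return sum1 + sum2 + sum3 + sum4;
}
```

For data whose partial sums are exactly representable (small integers, for example) both functions give identical results; for general data only a measurement can tell whether the speedup is worth the slightly different rounding.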

Conclusion

Although you will face many difficulties when creating software that uses the hardware capabilities of modern computers efficiently, it is worth the effort. Parallel 64-bit systems open up new possibilities for developing truly scalable solutions. They make it possible to extend the capabilities of modern data processing software, be it games, CAD systems or pattern recognition. We wish you luck in mastering the new technologies!

References.

- Herb Sutter. The Free Lunch Is Over. A Fundamental Turn Toward Concurrency in Software. http://www.gotw.ca/publications/concurrency-ddj.htm

- Stresses of multitask work: how to fight them. http://www.euro-site.ru/webnews.php?id=4770

- Andrey Karpov. Forgotten problems of developing 64-bit programs. http://www.viva64.com/articles/Forgotten_problems.html

- Andrey Karpov, Evgeniy Ryzhkov. 20 issues of porting C++ code on the 64-bit platform. http://www.viva64.com/articles/20_issues_of_porting_C++_code_on_the_64-bit_platform.html

- V.V. Samofalov, A.V. Konovalov. Program debugging technology for machines with mass parallelism // "Issues of Atomic Science and Technology", series "Mathematical modeling of physical processes", 1996, issue 4, pp. 52-56.

- Shameem Akhter and Jason Roberts. Multi-threaded Debugging Techniques // Dr. Dobb's Journal, April 23, 2007, http://www.ddj.com/199200938

- Tomer Abramson. Detecting Potential Deadlocks // Dr. Dobb's Journal, January 01, 2006, http://www.ddj.com/architect/184406395

- Viva64 Tool Overview. http://www.viva64.com/viva64.php

- The TotalView Debugger (TVD). http://www.totalviewtech.com/TotalView/

- Intel Threading Analysis Tools. http://www.intel.com/cd/software/products/asmo-na/eng/219785.htm

- Petru Marginean. Logging In C++ // Dr. Dobb's Journal, September 05, 2007, http://www.ddj.com/cpp/201804215

- Software Optimization Guide for AMD64 Processors. http://www.amd.com/us-en/assets/content_type/white_papers_and_tech_docs/25112.PDF

- Blog "The Old New Thing": "Why did the Win64 team choose the LLP64 model?". http://blogs.msdn.com/oldnewthing/archive/2005/01/31/363790.aspx

- "64-Bit Programming Models: Why LP64?" http://www.unix.org/version2/whatsnew/lp64_wp.html

- Gimpel Software PC-Lint. http://www.gimpel.com

- Parasoft C++test. http://www.parasoft.com

- Andrey Karpov. Problems of testing 64-bit applications. http://www.viva64.com/articles/Problems_of_testing_64-bit_applications.html

- John Paul Mueller. 24 Considerations for Moving Your Application to a 64-bit Platform. http://developer.amd.com/articlex.jsp?id=38

- Harsha Jaquasia. Porting and Optimizing Multimedia Codecs for AMD64 architecture on Microsoft Windows. http://www.amd.com/us-en/assets/con