Part 1: Theory

[attachment=19473:Things-You'dWant-To-Know_Web.jpg]

Brain Cells Do Not Recover

Even though most of the information that follows is about making your assets more engine friendly, please don't forget that this is just an insight into how things work, a guideline for knowing which side to approach your work from. Saving yourself and your teammates time is also extremely important. An extra hundred tris won't make the FPS drop through the floor. Work that goes smoothly makes for a happier team that is able to produce a lot more, a lot faster. Don't turn work into a struggle for anyone, including yourself. Always consider the needs of the people working with the assets you produce.

Dimension 1: Vertex

Remember geometry for a second. When we have a dot we, well, we have a dot. A dot is a unit of 1-dimensional space. If we move up to a 2-dimensional space, we'd be able to operate with dots in it too. But if we take two of them, we'd be able to define a line. A line is a building block of 2-dimensional space. If you take a closer look, though, a line is simply an endless number of dots put alongside each other according to a certain rule (a linear function).

Now let's move up a level again. In 3-dimensional space we can operate with both dots (or vertices) and lines. But if we add one more dot to the previous two that defined a line, we'd be able to define a face. That face is a building block of 3-dimensional space: it forms shapes that we are able to look at from different angles.

I'm pretty sure most of you are used to receiving a triangle count as the main guideline for creating a 3D model. And I think the fact that the triangle is a building block of 3-dimensional space has something to do with it. :) But that's the human way of thinking. We humans also operate in a decimal numeral system, but hardware processors don't. It's just 0 and 1 - binary - the most basic number representation system. For a processor to execute anything at all, you have to break it into the smallest and simplest operations that it can solve consecutively. So in order to display 3D graphics you also have to get down to the basics.

Even though a triangle is a building block of 3-dimensional space, it is still composed of 3 lines, which in turn are defined by 3 vertices. So basically, it's not the tris that you are saving, but vertices. Though the lower the tri count, the fewer vertices there are, right? Totally. But unfortunately, the number of tris is not the only thing affecting your vert count. There are also some underlying processes that are less obvious.

A 3D model is stored in memory as a number of vertex-structure-based objects. "Structures", speaking (figuratively) in object-oriented programming terms, are predefined groups of different types of data and functions composed together to represent a single entity. There could be thousands of instances of such entities, which all share the same variable types and functions and differ only in the values stored in them. Such entities are called "objects". Here's a simplified example of how a vertex structure could look:

Vertex structure

{

Vertex Coordinates;

Vertex Color;

Vertex Normals;

UV1 Coordinates;

UV2 Coordinates;

...

};

If you think about it, it's really obvious that vertex structures should only contain necessary data. Anything redundant could become a great waste of memory when your scenes hit a couple dozen million tris.
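To put rough numbers on that, here is a minimal Python sketch of one possible vertex layout. The field sizes (32-bit floats for position, normal and UVs, four bytes for color) and the names `VERTEX_FORMAT`, `pack_vertex` and `vertex_buffer_bytes` are assumptions made up for illustration, not any particular engine's format.

```python
import struct

# Hypothetical packed vertex layout (an assumption, not a real engine format):
#   position 3 x float32, color 4 x uint8, normal 3 x float32,
#   UV1 2 x float32, UV2 2 x float32
VERTEX_FORMAT = "<3f4B3f2f2f"
BYTES_PER_VERTEX = struct.calcsize(VERTEX_FORMAT)

# The same layout without the second UV set.
VERTEX_FORMAT_NO_UV2 = "<3f4B3f2f"
BYTES_PER_VERTEX_NO_UV2 = struct.calcsize(VERTEX_FORMAT_NO_UV2)


def pack_vertex(position, color, normal, uv1, uv2):
    """Pack one vertex into raw bytes using the hypothetical layout above."""
    return struct.pack(VERTEX_FORMAT, *position, *color, *normal, *uv1, *uv2)


def vertex_buffer_bytes(vertex_count, bytes_per_vertex=BYTES_PER_VERTEX):
    """Memory needed to store vertex_count vertices back to back."""
    return vertex_count * bytes_per_vertex


if __name__ == "__main__":
    verts = 10_000_000
    print(BYTES_PER_VERTEX, "bytes per vertex with two UV sets")
    print(BYTES_PER_VERTEX_NO_UV2, "bytes per vertex with one UV set")
    saved = vertex_buffer_bytes(verts) - vertex_buffer_bytes(verts, BYTES_PER_VERTEX_NO_UV2)
    print(saved, "bytes saved across", verts, "vertices")
```

Under these assumed sizes, an unused second UV set costs 8 bytes on every single vertex, which is exactly the kind of redundancy that adds up at scale.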

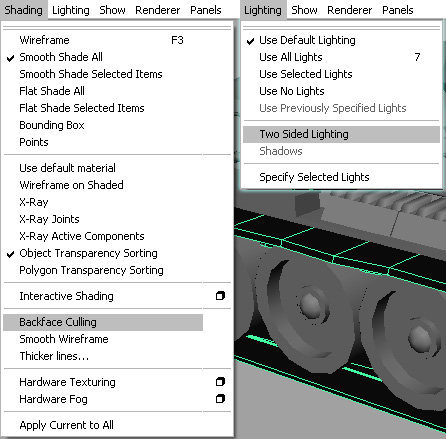

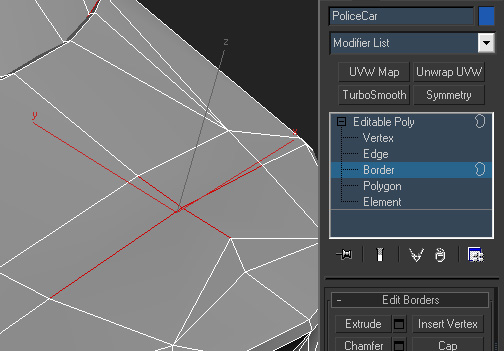

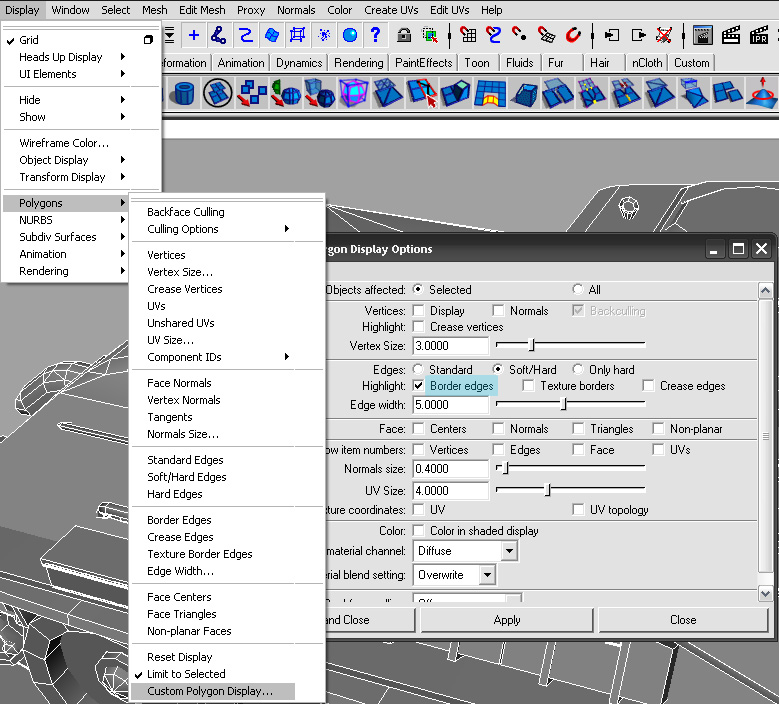

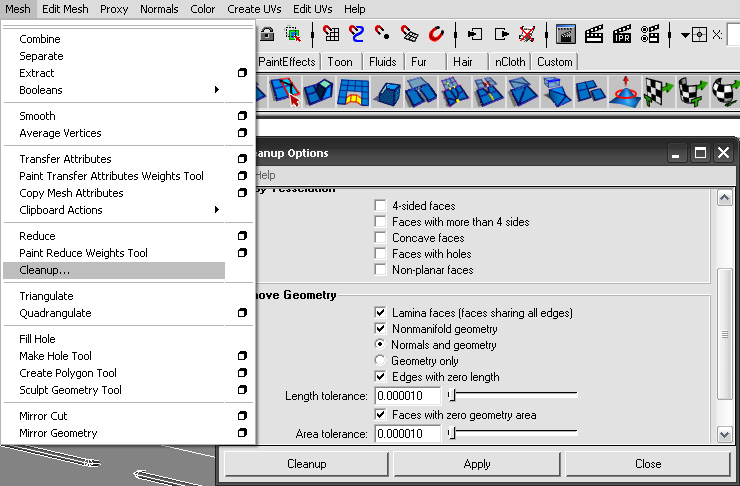

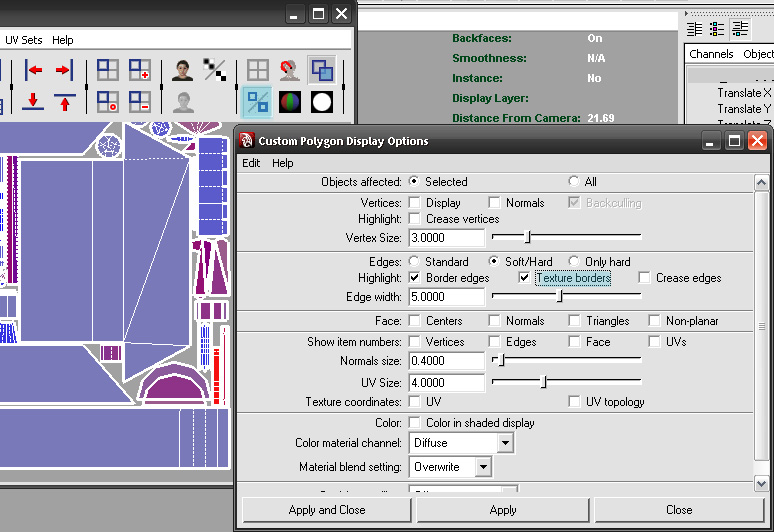

That's why a single vertex structure only holds one set of each type of data. What does that mean for artists? It means that a vertex can't have 2 different UV positions in the same UV set, or 2 normals, or 2 material IDs. But we've all seen how smoothing groups work, or applied multiple materials to objects, and that didn't seem to increase the vert count. That's only in your modeling package. Your engine would treat the vertex data differently:

The easiest and most rational way to add that extra attribute to a vertex is to simply create another vertex in the exact same position.

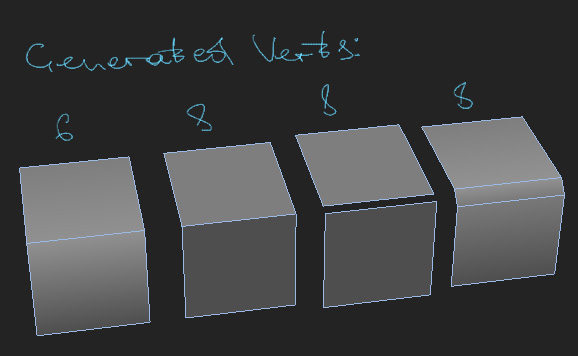

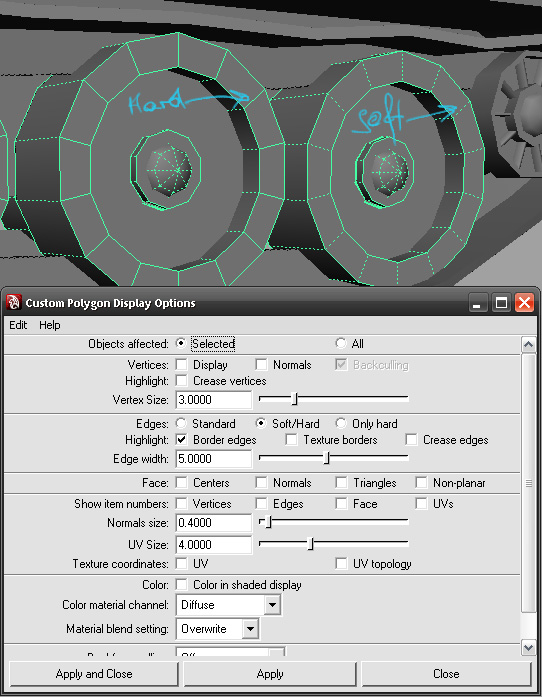

Simply put, every time you set another smoothing group for a selection of polys or make a hard edge in Maya, the number of border vertices doubles, invisibly to you. The same goes for every UV seam you create, and for every additional material you apply to your model.
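The splitting described above can be sketched in a few lines of Python. This is only an illustration, assuming the engine keeps one vertex per unique combination of position and normal; the cube data and the function names are made up for the example, and real importers also split on UVs and materials in the same way.

```python
from itertools import product

# The 8 corner positions of a cube centered at the origin.
POSITIONS = list(product((-1, 1), repeat=3))


def cube_faces():
    """Return the 6 cube faces as (face_normal, [4 corner indices])."""
    faces = []
    for axis in range(3):
        for sign in (-1, 1):
            normal = tuple(sign if i == axis else 0 for i in range(3))
            corners = [i for i, p in enumerate(POSITIONS) if p[axis] == sign]
            faces.append((normal, corners))
    return faces


def engine_vertex_count(hard_edges):
    """Count unique (position, normal) pairs, i.e. vertices after splitting."""
    unique = set()
    for face_normal, corners in cube_faces():
        for index in corners:
            # Smooth shading: all faces share one normal per corner (the
            # unnormalized corner direction stands in for the averaged normal).
            # Hard edges: every face brings its own normal to the corner.
            normal = face_normal if hard_edges else POSITIONS[index]
            unique.add((index, normal))
    return len(unique)


if __name__ == "__main__":
    print("smooth cube:", engine_vertex_count(hard_edges=False))
    print("hard-edged cube:", engine_vertex_count(hard_edges=True))
```

The modeling package shows 8 vertices in both cases. With every edge set to hard, each corner is needed once per adjoining face, so the split count is three times higher.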

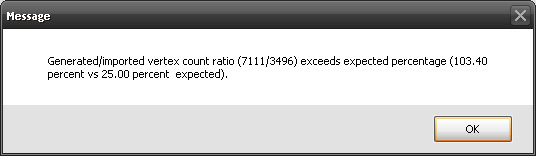

On asset import, UDK used to automatically compare the number of imported vertices with the number of generated ones and warn you if the two differed by more than 25 percent. Now be it 25, 50 or a gazillion percent - it doesn't really matter that much if your game runs at frame rate. But knowing this stuff might help you get there. Just don't be surprised if your actual vert count is 3 times what you thought it was if you set all the edges to hard and break/detach all your UV verts.

Connecting the dots

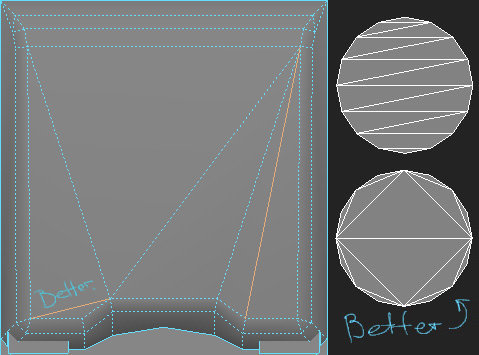

This small chapter concerns the stuff that keeps those vertices together - the edges. The way they form triangles matters to an artist who wants to produce efficient assets, and not only because edges define shape: they also affect how fast your triangles are rendered, in a pretty non-trivial way.

How would you render a pixel that sits right on the edge two triangles share? In practice, the pixels along that edge get processed for both triangles: GPUs typically shade pixels in small blocks (commonly 2x2), so a block straddling the edge is shaded once per triangle even though each triangle covers only part of it. That leads us to a pretty interesting concept: the tighter the edge density, the more re-rendered pixels you'll get, and that means longer render time.

This issue should hardly affect the way you model, but knowing about it could come in handy in some specific cases. Triangulation is a perfect example. It's a well-known issue that thin tris aren't all that good to render. But speaking of triangulation, if you've made one triangle thinner, you've made another one wider. Imagine we zoom out from a uniformly triangulated model: the smaller the object becomes on screen, the tighter the edge density and the bigger the chance of re-rendering the same pixels.

But if you neglect uniform triangulation and worry about making every triangle have the largest area possible (thus making it occupy more pixels), in the end you'd get triangles with consecutively decreasing areas. Then, once you zoom out again, the areas with higher edge density would be limited to a much smaller number of on-screen pixels. And the smaller the object becomes on screen, the fewer potentially redrawn pixels it'll have. You could also try to work this the other way around, and start by making the triangle edges as short as possible.

Although it's a minor point, it's an interesting way to inform some of your decisions while modeling. Make sure to check out some statistics on the subject - they're pretty fascinating.

Eating in portions is better for your health

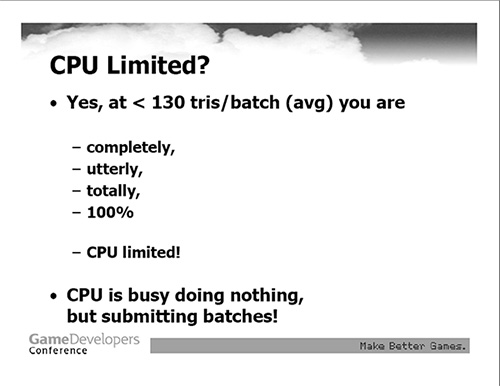

Just as your engine draws your object triangle by triangle, it draws the whole scene object by object. For your object to be rendered, a draw call must be issued. While the CPU gathers information and prepares batches to send to the GPU, the GPU renders. What's important for us here is that if the CPU is unable to supply the GPU with the next batch by the time the GPU has finished the current one, the GPU has nothing to do. This means that rendering an object with a small number of tris actually isn't all that efficient: you'll spend more time preparing for the render than on the render itself, and waste the precious milliseconds your graphics card could be working its magic.

A frame from NVidia's 2005(?) GDC presentation
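The CPU/GPU balance can be illustrated with a deliberately crude Python model. Everything in it is an assumption: the CPU and GPU are treated as running fully in parallel, and the per-draw-call and per-triangle costs are invented round numbers rather than measurements from any real hardware.

```python
def frame_time_ns(num_objects, tris_per_object,
                  cpu_ns_per_draw_call=10_000, gpu_ns_per_tri=10):
    """Toy model: CPU and GPU work in parallel, the slower one sets the pace.

    Returns (frame time, time the GPU spends waiting), both in nanoseconds.
    The default costs are hypothetical values chosen for illustration only.
    """
    cpu_ns = num_objects * cpu_ns_per_draw_call            # preparing draw calls
    gpu_ns = num_objects * tris_per_object * gpu_ns_per_tri  # rendering triangles
    frame_ns = max(cpu_ns, gpu_ns)
    gpu_idle_ns = frame_ns - gpu_ns
    return frame_ns, gpu_idle_ns


if __name__ == "__main__":
    # The same 1,000,000 triangles, split two different ways.
    many_small = frame_time_ns(num_objects=10_000, tris_per_object=100)
    few_large = frame_time_ns(num_objects=100, tris_per_object=10_000)
    print("10,000 objects x 100 tris:", many_small)
    print("100 objects x 10,000 tris:", few_large)
```

With these made-up costs, the scene built from many tiny objects is limited by draw call preparation and leaves the GPU idle for most of the frame, while the same triangle count in fewer, larger batches keeps it busy.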

Vertex VS Pixel

If you ask an artist what the main change in game art production has been over the last 10 years, I'm pretty sure the most common answer would be the introduction of per-texel shading and the use of multiple textures to simulate different optical qualities of a single surface. Sure, polycounts have grown, animation rigs now have more bones and procedurally generated physical movement is more widespread. But normal and spec maps are the ones contributing the most visual difference. And this difference comes at a price: in modern-day engines, most of the render time is spent processing and applying all those endless maps based on the direction of the incoming lights and the camera's position.

From the viewpoint of an artist who strives to produce effective art, this means the following things:

Optimizing your materials is much more fruitful than optimizing vertex counts. Adding an extra 10, 20 or even 500 tris isn't nearly as stressful for performance as applying another material to an object. Shaving hundreds of tris off your model would hardly ever bring a bigger bang than deciding that your object could do without an opacity map, or glow map, or bump offset, or even a specular map.

You can shave 2-3 million triangles off a level just to gain around 2-3 fps. It's not the raw tri count that affects performance the most, but rather the number of draw calls and the shader and lighting complexity. Then there are vertex transformation costs when you have some really complex rigs or a lot of physically controlled objects.

Shader blending and lighting modes also have a lot to do with performance. Alpha-blended materials cause a lot more stress than opaque ones. Vertex lighting is faster and cheaper, because you're going to have vertex colors set on your vertices anyway. And if you're deferred you don't even have to worry about that, 'cause your engine is going to calculate lighting on the final frame (which has a constant number of pixels) rather than for every object.
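The point about deferred lighting working on a constant number of pixels can be put into a rough Python cost model. It is a sketch under heavy assumptions: it counts only "lit pixels", ignores depth culling, the cost of writing the intermediate buffers and any per-light optimizations, and the function names are invented for the example.

```python
def forward_lit_pixels(pixels_per_object, num_lights):
    """Forward: every drawn pixel of every object is lit, overlaps included."""
    return sum(pixels_per_object) * num_lights


def deferred_lit_pixels(screen_width, screen_height, num_lights):
    """Deferred: lighting runs once per screen pixel, whatever the object count."""
    return screen_width * screen_height * num_lights


if __name__ == "__main__":
    width, height, lights = 1280, 720, 4
    # Assumed worst case: three objects that each cover the whole screen.
    layers = [width * height] * 3
    print("forward: ", forward_lit_pixels(layers, lights))
    print("deferred:", deferred_lit_pixels(width, height, lights))
```

In this simplified picture the forward cost grows with how much geometry overlaps on screen, while the deferred cost depends only on resolution and light count.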
And finally: post processing. Doing too many operations on your final rendered pixels could also slow your game down significantly.

Things Differ (Communication is King)

As with everything in life, there's no universal recipe - things differ. The best thing you can do is figure out what your specific case looks like. Get all the information you can from the people responsible. No one knows your engine better than the programmers. They know a lot of stuff that could be useful for artists, but sometimes, due to a lack of dialogue, this information stays with them. Miscommunication may lead to problems that could've been easily avoided, or be the reason you've done a lot of unnecessary work or wasted a truckload of time that could've been spent much more wisely. Speak: you're all making one game after all, and your success depends on how well you're able to cooperate. Asking has never hurt anyone and it's actually the best way to get an answer ;)

The Dalai Lama once said: "Learn your rules diligently, so you would know where to break them." And I can do nothing but agree with him. Obeying rules all the time is the best way to never do anything original. All rules or restrictions have some solid arguments to back them up, and fit some general conditions. But conditions vary. If you take a closer look, every other asset could be an exception to some extent. Ideally, having faced some tricky situation, artists should be able to make decisions on their own, and sometimes even break the rules if they know that the project will benefit from it and that breaking them wouldn't hurt anything. But if you don't know the facts behind the rules, I doubt you would ever go breaking them. So I seriously encourage you to take an interest in your work. You're making games and not just art.

Part 2: Practice

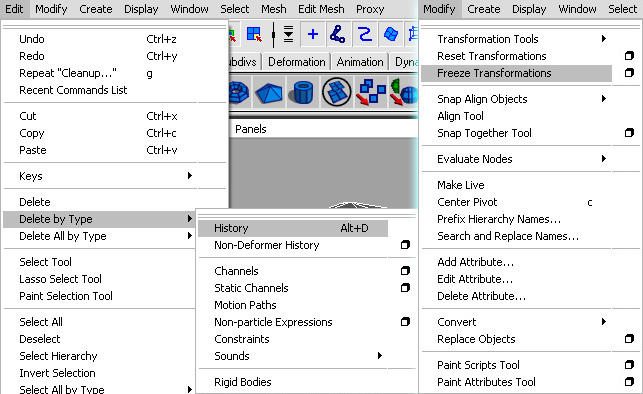

[attachment=19472:Things-You'dWant-To-Do_Web.jpg]

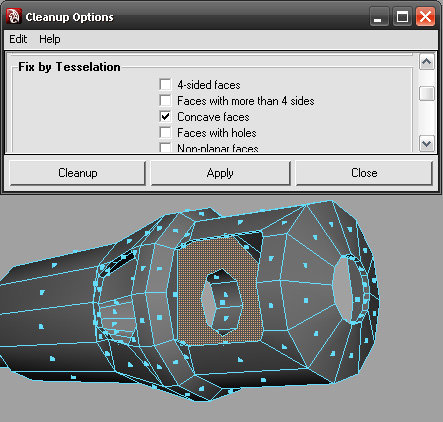

I hope the wall of text above made some sense to you guys. All this information on how stuff works is really nice to know, but it's not exactly what you would use on a day-to-day basis. As an artist, I'd love to have a place where all the "hows" are clearly stacked, without any other distracting information. The "whys" section would then serve as a reference you can turn to in case something becomes unclear. Now let's imagine you're finally done with an asset. You'd want to make sure things are clean and engine friendly. Here's the list of things to check, in order:

I couldn't have said it better myself. I have said this before, but many people aspire to be great 3D modelers and, when put to the test, fail to provide something of worth because of a real lack of understanding of engines and how assets must work with them. Each engine has different requirements, and those requirements very much define the parameters for your pipeline. It is hard to do, but I would urge all artists to spend some serious time learning the different engines and planning all their assets around them. It will save numerous reworks and thus hours on your end. Try to speak with your designers to make sure you know what will be required of you. At one point I had finished my main character model, only to realize I needed to add another 10 bones. That caused 2 days of rework and animation tweaking, all because I hadn't planned for those bones in the original project.

Great advice here as well. When you are done with your model, go over each and every line and see where you can reduce geo. I rethink most of my work over and over to ensure the best possible layout. This not only makes for a clean model, it also helps to reduce issues with seams and UV mapping.

This, this, this, and then again THIS! Seriously... 100% so true.

FINAL THOUGHTS:

You have summed up a lot of what I feel should be good for a beginning artist. Great points and great thoughts. I rather enjoyed reading this article and would love to see/read more.