The equation you posted has been around forever. This is the same thing I've been using for 10 years (except for the G part) unless I missed something big.

If you're not using the G part, then you're not using the Cook-Torrance model. Most PBR shaders used by games seem to be Cook-Torrance or other microfacet models.

Using traditional Blinn-Phong for the D term is not PBR -- I just used it for an example of choosing a BRDF. Traditional Blinn-Phong loses a huge amount of energy on smooth surfaces that it really should be preserving, so it doesn't behave like any real world material.

A PBR BRDF must be energy conserving (never output more than the inputs), must obey Helmholtz reciprocity, and should be able to (almost) reproduce some set of real-world sampled BRDFs that have been measured using a gonioreflectometer.

Traditional Blinn-Phong (plus Schlick Fresnel) doesn't fit the bill.

Cook-Torrance using Normalized Blinn-Phong (pow(dot(N,H),decode(roughness))*(decode(roughness)+1)/(2*pi)) as the D term (and other sensible functions for the F and G terms) is actually able to almost reproduce real-world BRDF measurements. If you replace that D term with a different one (Beckmann, GGX, etc), then you come closer to matching real-world materials. Also, different real-world materials obtain closer reproductions with different D functions.
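As a rough illustration of what "normalized" means here, the sketch below numerically integrates the Blinn-Phong lobe over the hemisphere. The function names and the `power` parameter (standing in for `decode(roughness)`) are made up for this example. With the `(power + 1) / (2*pi)` factor from the formula above, the lobe itself integrates to 1 over solid angle; many microfacet write-ups instead use `(power + 2) / (2*pi)`, which makes the lobe weighted by N·H (the projected area) integrate to 1.

```python
import math

def blinn_phong_d(n_dot_h, power, offset=1.0):
    """Normalized Blinn-Phong lobe: (power + offset) / (2*pi) * (N.H)^power."""
    return (power + offset) / (2.0 * math.pi) * max(n_dot_h, 0.0) ** power

def integrate_hemisphere(f, steps=20000):
    """Midpoint-rule integral of f(cos_theta) over the hemisphere.

    The integrand is assumed to be symmetric around the normal, so the
    azimuth integral contributes a constant factor of 2*pi.
    """
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        total += f(math.cos(theta)) * math.sin(theta) * d_theta
    return 2.0 * math.pi * total

power = 64.0  # hypothetical decoded roughness value

# Offset 1: the lobe itself integrates to ~1 over solid angle.
lobe_integral = integrate_hemisphere(lambda c: blinn_phong_d(c, power, 1.0))

# Offset 2: the lobe weighted by N.H (projected area) integrates to ~1.
projected_integral = integrate_hemisphere(lambda c: blinn_phong_d(c, power, 2.0) * c)

# No normalization factor: the integral is 2*pi / (power + 1),
# so it shrinks as the surface gets smoother.
raw_integral = integrate_hemisphere(lambda c: c ** power)
```

The last value is the energy loss that traditional Blinn-Phong suffers from: without the normalization factor, a tighter highlight is not compensated by a brighter peak.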

So PBR is basically: can I reproduce a real-world material with this function? Traditional Blinn-Phong gets a big "no, never". The models in use by current games get an "almost, sometimes". If you want to see if you can reproduce BRDF measurements and you don't own a gonioreflectometer, you can obtain other people's samples, such as the MERL database.

So I thought N*L was being replaced completely because there really isn't 1 normal unless we zoom into a surface pretty much close to the atomic level.

N·L is still extremely important. The physical meaning of the N·L term is the projection of an incoming light beam onto a 2D surface, to measure the surface area that the incoming energy has been spread over. When N·L approaches zero, the incoming light beam is being spread over an infinitely large area, so its energy density per area approaches zero.

It's a common misunderstanding that the Lambert diffuse BRDF is "N·L * DiffuseColor" -- when actually it's just "DiffuseColor".

N·L is actually an immutable part of the rendering equation itself, not a part of the BRDF.
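To make that split concrete, here is a minimal sketch of shading one analytic light, with the BRDF and the N·L term kept as separate factors. All function and variable names are hypothetical. The Lambert BRDF here includes the 1/pi factor of the strict energy-conserving form; many game engines fold that factor into the light's intensity instead.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def lambert_brdf(diffuse_color, n, l, v):
    """Lambert BRDF: a constant, independent of N, L and V."""
    return [c / math.pi for c in diffuse_color]

def shade_one_light(brdf, diffuse_color, light_color, n, l, v):
    """Outgoing light from one light source: BRDF * incoming light * N.L."""
    n_dot_l = max(dot(n, l), 0.0)  # projection term from the rendering equation
    f = brdf(diffuse_color, n, l, v)
    return [fc * lc * n_dot_l for fc, lc in zip(f, light_color)]

n = (0.0, 0.0, 1.0)
v = (0.0, 0.0, 1.0)
albedo = (0.8, 0.5, 0.2)
white = (1.0, 1.0, 1.0)

# Light straight along the normal: N.L = 1.
head_on = shade_one_light(lambert_brdf, albedo, white, n, (0.0, 0.0, 1.0), v)

# Light 60 degrees off the normal: N.L = 0.5, so half the energy per area.
angle = math.radians(60.0)
tilted = shade_one_light(lambert_brdf, albedo, white, n,
                         (math.sin(angle), 0.0, math.cos(angle)), v)
```

Swapping `lambert_brdf` for any other BRDF leaves the `n_dot_l` factor untouched, which is the point: it belongs to the light transport, not to the material.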

Also, you don't have to get to the atomic level for something to become "optically flat", you just have to be smaller than the EM wavelength that you're using. That's a few hundred nanometres for visible light. Optical engineers can actually build 100% "optically flat" objects that behave as a plane as far as visible light is concerned.

Because a bathroom mirror has no tint, it has no fresnel effect because it already reflects everything, and for a bathroom mirror your equation breaks down further into:

Output = cubeMap

A bathroom mirror is not a perfect mirror. It will have a small amount of roughness and its F0 value is maybe 97%.

Also, there's a layer of glass in the middle, so the most direct light rays have an air->glass, glass->silver, silver->glass, glass->air event history -- some outgoing rays will be unlucky and experience internal reflection during the glass->air step, and actually reflect back towards the silver rather than refracting into the air! Accounting for these extra reflection paths will also dim the mirror by a few percent, and create interesting behaviours at glancing angles, as the glass layer approaches 100% reflectivity in that case, greatly complicating things!

Yes, a theoretical perfect mirror has zero roughness and 100% F0, so F/G do nothing, and D is a Dirac delta function (a graph that is zero everywhere, except infinity at one peak). The perfect mirror BRDF isn't very useful though -- it can only represent one theoretical material. At the other extreme is the perfect diffusing material, which is described by the Lambert BRDF. All real materials exist somewhere in the middle.

But in real physical lighting, and as I understood PBR, there is no actual L vector.

If you're evaluating any individual light, there's still an L vector.

When rendering a Lambertian surface, if you're evaluating a spherical light which is above the horizon of your surface, then using an L vector at the centre of the sphere is equivalent to individually integrating photons that are coming from many different L vectors all over the sphere -- much like how in astrophysics we don't need to know about each grain, but just the centre of mass.

In any case, each photon still has its own L vector. In a cube-map (IBL), each pixel has its own L vector. The brute-force "ground truth" result is to treat every pixel as a small area light source. A correct IBL implementation will match that ground truth.

I've never seen an example of PBR without a cubemap and you are suggesting that is some other topic that isn't needed.

Yes, PBR is the practice of basing all of your equations on real physics, and using real-world measurements (and theoretical experiments) to validate your results.

Using cube-maps for lighting is IBL, which is an orthogonal topic. You can use PBR without IBL, and IBL without PBR. Correlation != equality...

However, PBR is viral - you can't just do it to one part of your renderer and be done - every feature must be PBRified.

IBL is very popular right now, so that means that PBR games have to spend the time PBRifying their IBL code.

IBL is a great way to do ambient lighting, so it's also extremely popular these days. However that doesn't mean you don't need analytic (point/spot/directional) lights... If you put the sun into your cubemap, you can't then later on get rid of that sunlight with a shadowmap (without also getting rid of the skylight / ambient bounce light / etc). Most games use IBL for sky and bounced ambient light, and analytic lights for the sun and man-made light sources.

One PBR trend, though, is giving physical volume to analytic lights - point lights become spheres or tubes, spot lights become discs or rectangles, etc... Unreal and Frostbite have published some info on their approaches to PBR area lights.

Also, the mipmapping/blurring approach itself is an approximation used to optimize IBL so that it's feasible to do in realtime. Film renderers won't do this pre-blurring trick as it introduces a lot of small errors.

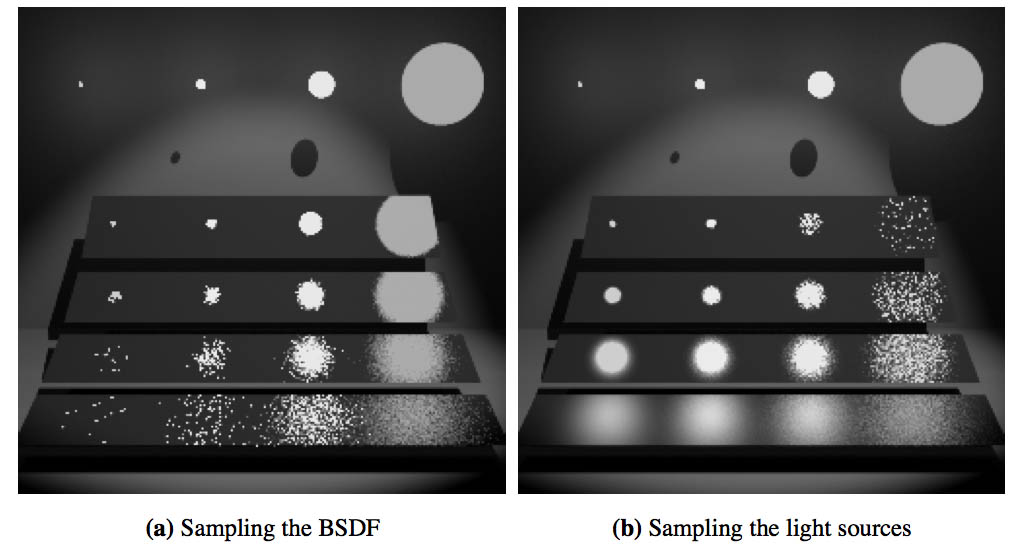

If you want to understand IBL, implement it using brute force Monte Carlo sampling -- generate thousands of random rays for each pixel, sample the cubemap for each ray to measure the incoming radiance, run that through your BRDF and then average all the results together weighted by the probability that the reflection ray would be produced by the microsurface.
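A minimal sketch of that brute-force loop is below, under several simplifying assumptions: the surface normal is +Z, everything is monochrome, the BRDF is Lambert (so the view direction is ignored), rays are picked uniformly over the hemisphere, and `environment` is a hypothetical stand-in for a cubemap lookup. In the usual estimator form, "weighting by probability" shows up as dividing each sample by the probability density of the ray that was picked, and the N·L term from the rendering equation is applied to every sample.

```python
import math
import random

def sample_uniform_hemisphere(rng):
    """Uniform random direction on the hemisphere around +Z (pdf = 1 / (2*pi))."""
    z = rng.random()                      # cos(theta) is uniform in [0, 1)
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)

def brute_force_ibl(brdf, environment, num_rays=20000, seed=1):
    """Monte Carlo estimate of outgoing radiance for a surface with normal +Z."""
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)           # density of each uniformly picked ray
    total = 0.0
    for _ in range(num_rays):
        l = sample_uniform_hemisphere(rng)
        n_dot_l = l[2]                    # N is +Z, so N.L is the z component
        total += brdf(l) * environment(l) * n_dot_l / pdf
    return total / num_rays

albedo = 0.8

def lambert(l):
    return albedo / math.pi               # constant BRDF

def white_sky(l):
    return 1.0                            # stand-in for a cubemap lookup

result = brute_force_ibl(lambert, white_sky)
```

With a uniformly white environment the estimate should settle near the albedo, which makes a handy sanity check before plugging in a real cubemap and a specular BRDF.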

For the perfect mirror, that's simple as only one reflection direction has 100% probability and every other direction has 0%.

For other materials, you need to convert the D term (the NDF) into a probability density function.
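One common way to do that conversion is sketched below, assuming D is normalized so that D times N·H integrates to 1 over the hemisphere. Under that assumption, the probability density of picking a half-vector H is D(H) * N·H, and changing variables from H to the light direction L divides by 4 * V·H. The function names are invented for this example, the normal is taken to be +Z, and the Blinn-Phong lobe uses the `(power + 2) / (2*pi)` normalization so that the assumption holds.

```python
import math
import random

def blinn_phong_d(n_dot_h, power):
    """Blinn-Phong NDF, normalized so that D * N.H integrates to 1."""
    return (power + 2.0) / (2.0 * math.pi) * max(n_dot_h, 0.0) ** power

def pdf_half_vector(n_dot_h, power):
    """Probability density (per solid angle) of sampling half-vector H."""
    return blinn_phong_d(n_dot_h, power) * n_dot_h

def pdf_light_direction(n_dot_h, v_dot_h, power):
    """Density of L = reflect(-V, H); 1 / (4 * V.H) is the change of variables."""
    return pdf_half_vector(n_dot_h, power) / (4.0 * v_dot_h)

def sample_half_vector(rng, power):
    """Draw H around +Z with density pdf_half_vector (inverse-CDF method)."""
    cos_theta = rng.random() ** (1.0 / (power + 2.0))
    sin_theta = math.sqrt(max(0.0, 1.0 - cos_theta * cos_theta))
    phi = 2.0 * math.pi * rng.random()
    return (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)
```

In a Monte Carlo estimator, each sample's BRDF * radiance * N·L contribution would then be divided by `pdf_light_direction`, so that directions the microsurface is likely to reflect towards are sampled more often without biasing the average.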
