Hey guys, I wanted to report on a technique I'm trying to implement.

I took my old (2002) engine off its dusty shelf and tried to incorporate ray tracing to render lighting on maps.

I wrote a little article about it here:

https://motsd1inge.wordpress.com/2015/07/13/light-rendering-on-maps/

First, I built an ad hoc parameterizer, a packer (with triangle pairing and re-orientation using hull detection), a conservative rasterizer, and a generator for a second UV set.

Finally, it uses Embree to cast rays as fast as possible.
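For readers who haven't met conservative rasterization in a lightmap context: the goal is to mark every texel a triangle touches, not only the texels whose centers it covers, so thin or tiny triangles still receive lightmap texels. Below is a minimal Python sketch, assuming UVs are already scaled to texel units and using a separating-axis overlap test between the triangle and each texel square. `conservative_texels` is a hypothetical name; this is not the engine's actual code.

```python
import math

# Sketch only: hypothetical helper names, not the engine's implementation.

def _project(points, axis):
    """Project 2D points onto an axis and return the (min, max) interval."""
    dots = [p[0] * axis[0] + p[1] * axis[1] for p in points]
    return min(dots), max(dots)

def _overlaps(tri, square):
    """Separating-axis test between a triangle and an axis-aligned square.

    Candidate axes: the two box axes plus the normal of each triangle edge.
    Touching (zero-area contact) counts as overlap, which keeps it conservative.
    """
    axes = [(1.0, 0.0), (0.0, 1.0)]
    for i in range(3):
        (x0, y0), (x1, y1) = tri[i], tri[(i + 1) % 3]
        axes.append((y0 - y1, x1 - x0))  # edge normal (not normalized)
    for axis in axes:
        tmin, tmax = _project(tri, axis)
        smin, smax = _project(square, axis)
        if tmax < smin or smax < tmin:
            return False  # found a separating axis
    return True

def conservative_texels(tri):
    """Return the set of (x, y) texels touched by a triangle given in texel space."""
    xs = [p[0] for p in tri]
    ys = [p[1] for p in tri]
    texels = set()
    # Only texels inside the triangle's bounding box can overlap it.
    for y in range(math.floor(min(ys)), math.floor(max(ys)) + 1):
        for x in range(math.floor(min(xs)), math.floor(max(xs)) + 1):
            square = [(x, y), (x + 1, y), (x + 1, y + 1), (x, y + 1)]
            if _overlaps(tri, square):
                texels.add((x, y))
    return texels
```

For example, `conservative_texels([(0.1, 0.1), (0.3, 0.1), (0.1, 0.3)])` returns `{(0, 0)}`, whereas a rasterizer that only samples the texel center (0.5, 0.5) would emit nothing for that triangle.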

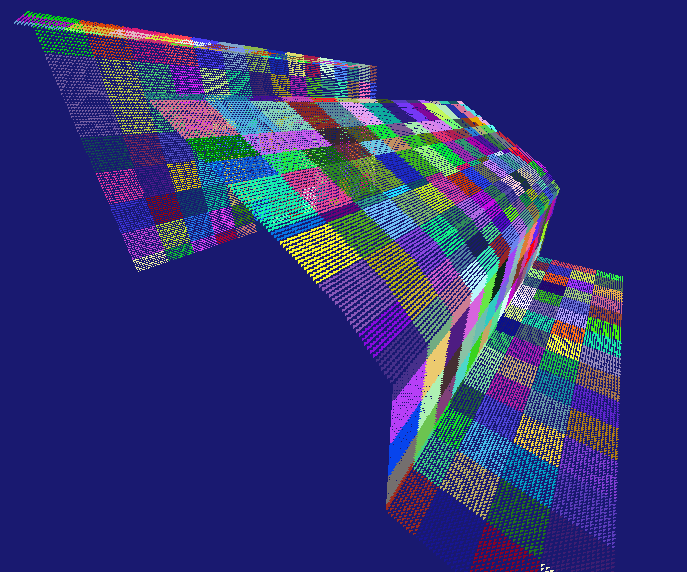

I have a nice ambient evaluation by now, but the second step is to get final gathering working (in the Henrik Wann Jensen style).

I am writing a report on the interim results here:

https://motsd1inge.wordpress.com/2016/03/29/light-rendering-2/

As you can see, it's not in working order yet.

If anyone here has already implemented this kind of thing: did you use a kd-tree to store the photons?

I'm using spatial hashing for the moment.
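For comparison with a kd-tree, here is a minimal Python sketch of spatial hashing for fixed-radius photon lookups, assuming the gather radius is known up front. Setting the cell size equal to the query radius means a query only has to visit the 3×3×3 block of cells around the query point. `PhotonHash` is a hypothetical name, not the engine's data structure.

```python
import math
from collections import defaultdict

class PhotonHash:
    """Uniform-grid spatial hash for fixed-radius photon queries (sketch only).

    Cell size equals the query radius, so any photon within `radius` of a
    point lies in that point's cell or one of its 26 neighbours.
    """

    def __init__(self, radius):
        self.radius = radius
        self.cells = defaultdict(list)

    def _key(self, p):
        # Integer cell coordinates of a 3D position.
        return tuple(math.floor(c / self.radius) for c in p)

    def insert(self, position, power):
        self.cells[self._key(position)].append((position, power))

    def query(self, center):
        """Return all (position, power) photons within `radius` of `center`."""
        cx, cy, cz = self._key(center)
        r2 = self.radius * self.radius
        found = []
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for dz in (-1, 0, 1):
                    # .get() avoids creating empty cells in the defaultdict.
                    for pos, power in self.cells.get((cx + dx, cy + dy, cz + dz), ()):
                        d2 = sum((a - b) ** 2 for a, b in zip(pos, center))
                        if d2 <= r2:
                            found.append((pos, power))
        return found
```

The trade-off: a grid is simple and fast when the radius is fixed and photon density is fairly even, while a kd-tree adapts to very uneven density and directly supports k-nearest-neighbour queries, which is what Jensen's original photon map uses.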

Also, I have a bunch of issues I'd like to discuss:

The first is light attenuation with distance. In the classic 1/r² formula, r depends on the units (world units) you choose. I find that very strange.
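One way to see why the choice of units is less troubling than it looks: for an isotropic point light, irradiance is E = I·cosθ / r², where the intensity I is power per steradian (no length unit involved) and E is power per unit area. Measuring r in centimeters instead of meters gives E in W/cm² instead of W/m², so the whole lightmap is scaled by one constant factor (10⁴ here) and relative brightness between texels is unchanged. In practice that constant gets folded into the light's intensity or the exposure. A small Python check with made-up numbers; the function names are hypothetical:

```python
import math

def point_light_irradiance(intensity, light_pos, point, normal):
    """Irradiance from an isotropic point light: E = I * cos(theta) / r^2.

    intensity: radiant intensity (power per steradian)
    normal: unit surface normal at `point`
    Returns power per unit area, in whatever length unit the positions use.
    """
    d = [l - p for l, p in zip(light_pos, point)]
    r2 = sum(c * c for c in d)
    r = math.sqrt(r2)
    cos_theta = max(0.0, sum(n * c for n, c in zip(normal, d)) / r)
    return intensity * cos_theta / r2

def scale(v, s):
    """Rescale a position, e.g. s = 100 converts meters to centimeters."""
    return tuple(c * s for c in v)

light, n = (0.0, 0.0, 2.0), (0.0, 0.0, 1.0)
a, b = (1.0, 0.0, 0.0), (3.0, 1.0, 0.0)
ea_m = point_light_irradiance(100.0, light, a, n)                          # W/m^2
ea_cm = point_light_irradiance(100.0, scale(light, 100), scale(a, 100), n)  # W/cm^2
eb_m = point_light_irradiance(100.0, light, b, n)
eb_cm = point_light_irradiance(100.0, scale(light, 100), scale(b, 100), n)
print(ea_m / ea_cm)               # constant unit-conversion factor, ~1e4
print(ea_m / eb_m, ea_cm / eb_cm)  # same relative brightness in both unit systems
```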

The second is about the factor you divide by to normalize the energy, given a certain number of gather rays.

My tool sets the number of gather rays through the command line (somewhat indirectly), but each gather ray is in fact only a starting point for a whole stream of actual rays, spawned from the photons found in the vicinity of the primary hit.

The result is that I get "cloud × sample" rays, but the cloud arity (the number of photons in each cloud) is very difficult to predict, because it depends on the radius used for the primary ray.
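For reference, the classic photon map radiance estimate (Jensen) sidesteps the unpredictable cloud size: it divides the summed photon power by the area of the gather disc, πr², not by the number of photons found. More photons in the disc simply means more flux arrived there. A minimal Python sketch, assuming a diffuse (Lambertian) surface with albedo ρ so the BRDF is ρ/π; `radiance_estimate` is a hypothetical name, and this is the textbook estimator, not the ray-spawning scheme described above.

```python
import math

def radiance_estimate(photon_powers, radius, albedo):
    """Jensen-style density estimate of reflected radiance at a diffuse point.

    photon_powers: flux carried by each photon found within `radius`
    albedo: diffuse reflectance rho, so the Lambertian BRDF is rho / pi
    The summed flux is normalized by the disc area pi * r^2,
    NOT by len(photon_powers).
    """
    brdf = albedo / math.pi
    flux = sum(photon_powers)
    return brdf * flux / (math.pi * radius * radius)

# Same flux density seen through two gather radii: 40 photons in a disc of
# radius 2, or 4x as many photons in a disc of radius 4, give the same estimate.
print(radiance_estimate([0.5] * 40, 2.0, 0.5))
print(radiance_estimate([0.5] * 160, 4.0, 0.5))
```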

I should draw a picture for this, but I must sleep now; I'll do it tomorrow for sure. For now, the question is this: it is fuzzy how many rays will actually be gathered, so I can't simply divide by sampleCount, nor can I divide by "cloud-arity * sampleCount", because the arity depends on occlusion trimming. (Always dividing by exactly the number of rays would make for a stupid evaluator that just says everything is grey.)
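On the normalization question, the usual Monte Carlo convention is to divide by the number of rays *drawn*, counting occluded or missed rays as zero-contribution samples rather than discarding them. If "number of rays" above means only the rays that survived trimming, dividing by that count averages the occlusion away, which would match the "everything is grey" symptom. A minimal Python sketch, assuming cosine-weighted hemisphere sampling around +Z, a uniform sky of radiance 1, and a hypothetical occluder that blocks every direction with x > 0 (half the hemisphere). How this carries over to the photon-spawned ray streams depends on details not given here.

```python
import math
import random

def cosine_sample_hemisphere(rng):
    """Cosine-weighted direction around +Z (Malley's method); pdf = cos(theta) / pi."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(max(0.0, 1.0 - u1)))

def occluded(direction):
    """Hypothetical occluder: blocks every direction with a positive x component."""
    return direction[0] > 0.0

def gather_irradiance(num_rays, seed=0):
    """Estimate irradiance under a uniform sky of radiance 1.

    With cosine-weighted sampling, L * cos(theta) / pdf = pi * L, so each
    unoccluded ray contributes pi and each occluded ray contributes 0.
    Returns (divided_by_all_rays, divided_by_unoccluded_rays_only).
    """
    rng = random.Random(seed)
    total, hits = 0.0, 0
    for _ in range(num_rays):
        d = cosine_sample_hemisphere(rng)
        if not occluded(d):
            total += math.pi * 1.0  # sky radiance L = 1
            hits += 1
    correct = total / num_rays           # converges to pi / 2 with this occluder
    biased = total / hits if hits else 0.0  # always pi: the occluder has no effect
    return correct, biased

print(gather_irradiance(20000))
```

The biased estimate returns π whether the occluder is there or not, so every texel under the same sky comes out the same shade; the unbiased one falls to roughly π/2 because half the cosine-weighted samples are blocked.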

...

I promise a drawing, lol ;) In the meantime, any thoughts are welcome.