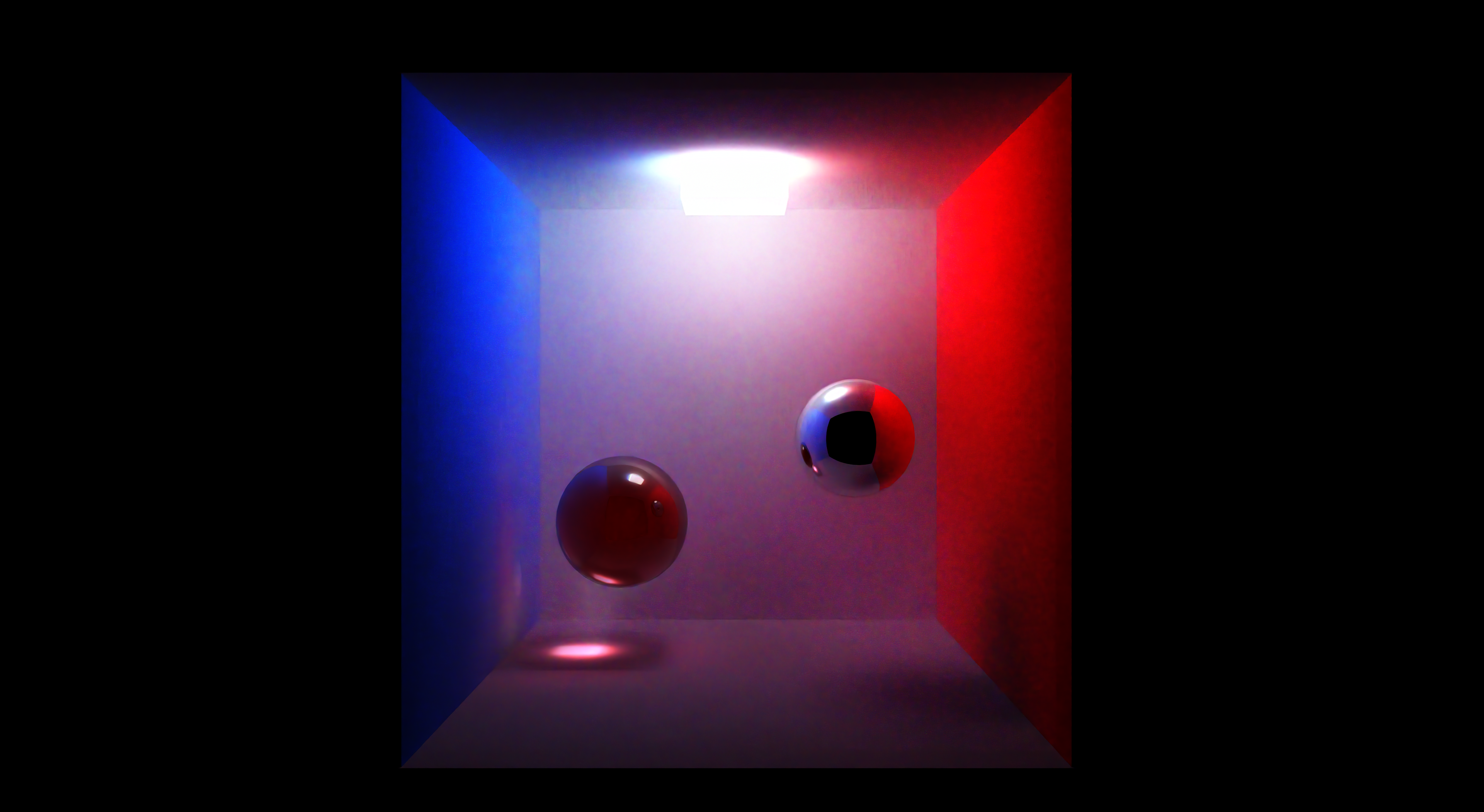

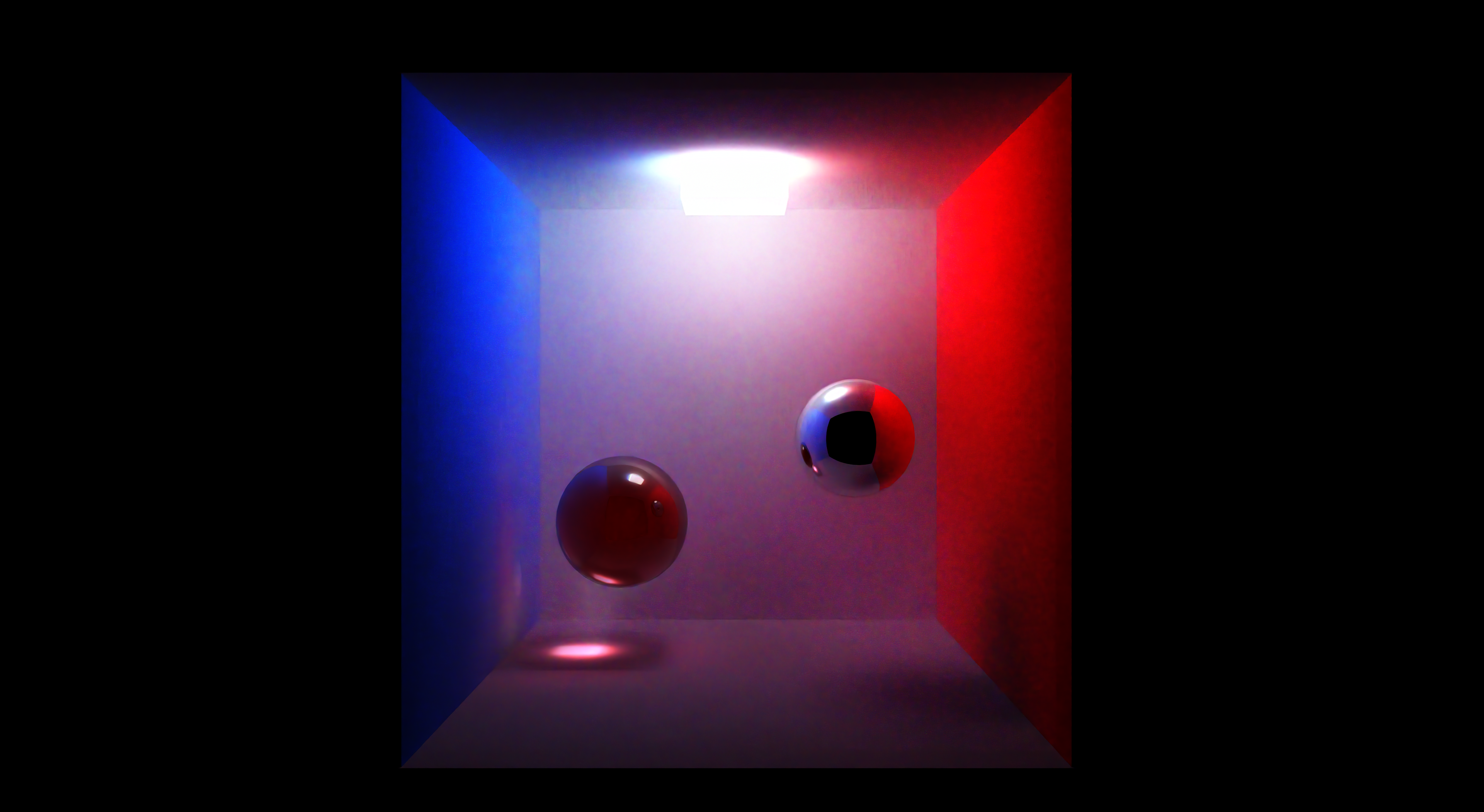

One question… should my tinted red transparent sphere reflect colours other than red?

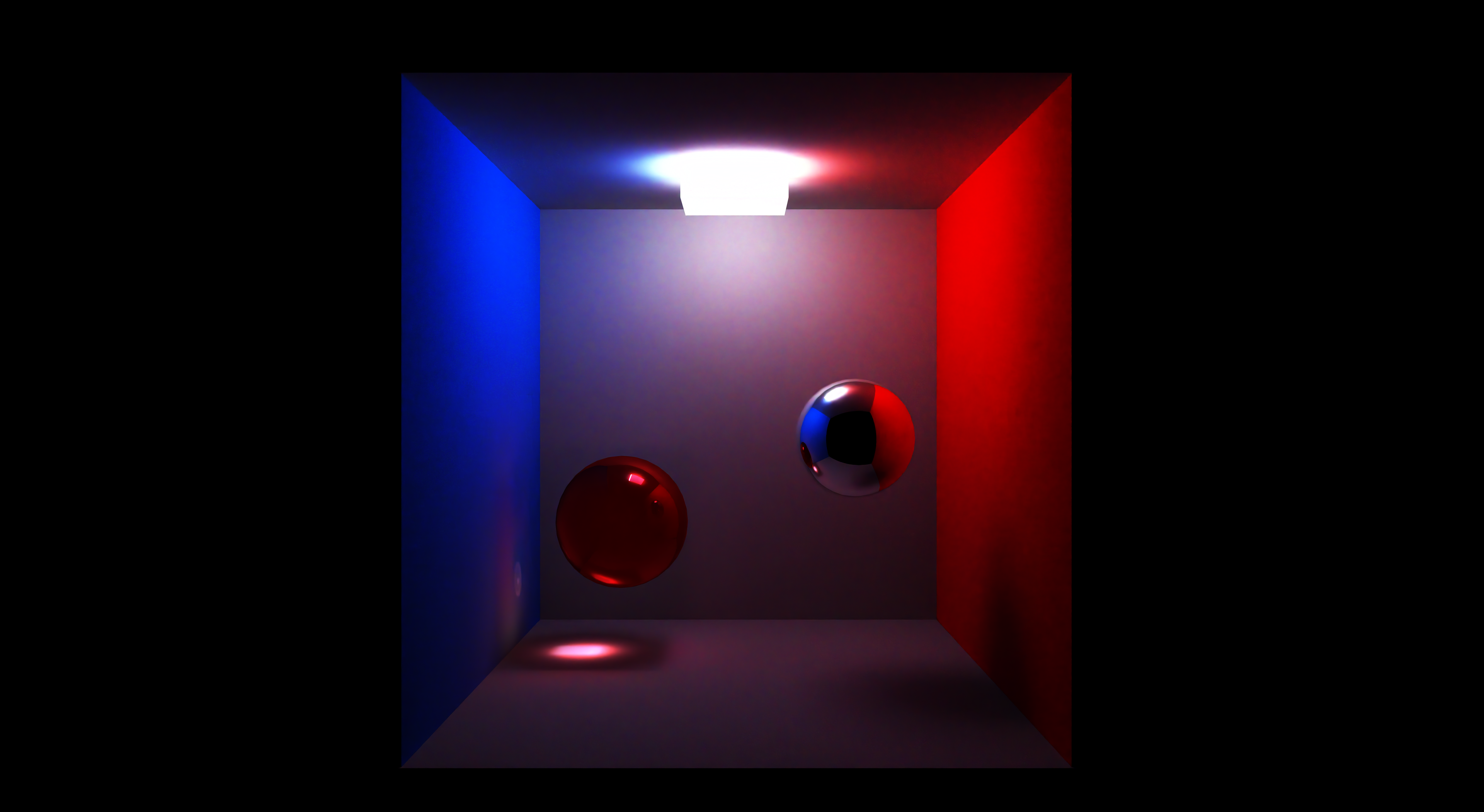

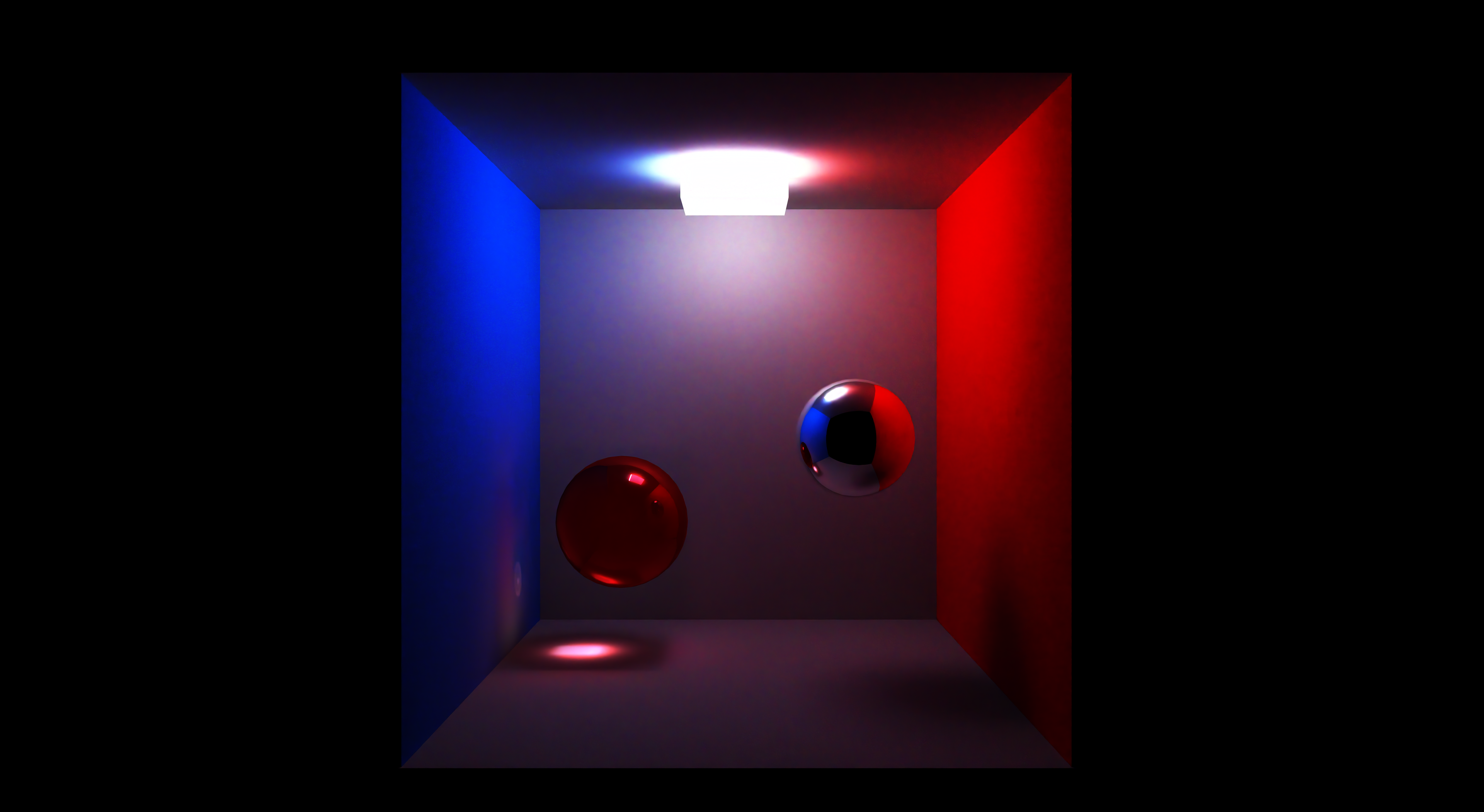

… or like this?

taby said:

One question… should my tinted red transparent sphere reflect colours other than red?

In theory you can design any material you want. As long as it conserves energy, it could exist in reality.

But observing the majority of real materials, people noticed that metals always tint their reflection (and maybe also that all of their reflection is specular), while non-metals ('dielectrics', iirc) tint only the diffuse reflection, not the specular reflection.

So your top material looks like glass, and your bottom material looks like transparent metal, which maybe can't exist but more likely is just very rare in reality, maybe existing only under certain conditions.
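As a rough illustration of the dielectric vs. metal distinction above, here is a minimal Python sketch using Schlick's Fresnel approximation. The 4% dielectric reflectance and the `metallic` blend are the usual conventions of the metallic workflow, not something taken from the shader in this thread, and the function names are made up for this example.

```python
def schlick_fresnel(f0, cos_theta):
    """Schlick's approximation of Fresnel reflectance, per RGB channel."""
    w = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * w for f in f0)


def specular_f0(base_color, metallic):
    """Reflectance at normal incidence (assumed metallic-workflow convention).

    metallic = 0: untinted ~4% reflectance, typical for dielectrics.
    metallic = 1: the specular reflection takes the base colour.
    """
    dielectric = 0.04
    return tuple(dielectric * (1.0 - metallic) + c * metallic for c in base_color)


red = (0.9, 0.1, 0.1)

# Looking straight at the surface (cos_theta = 1):
glass_like = schlick_fresnel(specular_f0(red, 0.0), 1.0)  # grey, untinted highlight
metal_like = schlick_fresnel(specular_f0(red, 1.0), 1.0)  # red-tinted highlight

# At grazing angles (cos_theta = 0) both approach white.
grazing = schlick_fresnel(specular_f0(red, 0.0), 0.0)
```

With these assumptions the glass-like sphere reflects all colours equally and only the transmitted light is tinted red, while the metal-like one tints the reflection itself.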

Maybe only aliens can create transparent metals in their labs for now. But nothing stops us from doing the same for games.

There were dozens of PBR blog posts and papers explaining related real world physics years ago. But this information only adds some context and is not really needed.

Btw, i never understood the actual difference between specular and diffuse reflection. Are those terms just made up for artistic reasons? Anyone knowing, enlighten me… ; )

(I notice the bug here too. Maybe the sphere reflects more light than it receives, and the error increases with more transparency.)
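One cheap way to look for this kind of bug is a per-channel energy check. The sketch below assumes the material splits incoming light into a reflected and a transmitted fraction per RGB channel; both the function and the example weights are hypothetical and not taken from the actual shader.

```python
def conserves_energy(reflect_rgb, transmit_rgb, eps=1e-6):
    """True if, per channel, reflected + transmitted light never exceeds the input."""
    return all(
        r >= 0.0 and t >= 0.0 and r + t <= 1.0 + eps
        for r, t in zip(reflect_rgb, transmit_rgb)
    )


# 4% untinted reflection plus a red tint on transmission: fine.
ok = conserves_energy((0.04, 0.04, 0.04), (0.9, 0.1, 0.1))

# 50% reflection added on top of 90% red transmission: 1.4 > 1 in the red channel.
too_bright = conserves_energy((0.5, 0.5, 0.5), (0.9, 0.1, 0.1))
```

If the second case describes what happens when transparency goes up, the sphere would indeed send out more light than it receives.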

Btw, this basic PBR standard does not cover a lot of things:

Transparent / translucent materials (e.g. skin)

Anisotropic reflectance (e.g. hair, retro reflection of traffic signs)

Layered materials (e.g. clear coat, wet materials)

There are extended standards. Seems the one from Disney is most popular.

You had mentioned that my use of a buffer for rays is slow. Is there another way to do this? Like use an SSBO or something?

JoeJ said:

Btw, i never understood the actual difference between specular and diffuse reflection. Are those terms just made up for artistic reasons? Anyone knowing, enlighten me… ; )

Diffuse is purely randomized direction, versus specular which is purely reflected direction. Does that even make sense?

Anyway, it's how I get nephroid caustics – reflected energy.
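For reference, the two direction choices described above can be sketched like this in Python. The "normal plus random unit vector" trick for the diffuse case is one common way to get a cosine-weighted (Lambertian) direction. It is an assumption that the path tracer in this thread does something similar, and the function names are illustrative only.

```python
import math
import random


def reflect(d, n):
    """Mirror direction d about the unit normal n (pure specular)."""
    k = 2.0 * sum(a * b for a, b in zip(d, n))
    return tuple(a - k * b for a, b in zip(d, n))


def random_unit_vector(rng):
    """Uniformly distributed point on the unit sphere."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)


def diffuse_direction(n, rng):
    """Randomized direction in the hemisphere around the unit normal n."""
    while True:
        u = random_unit_vector(rng)
        v = tuple(a + b for a, b in zip(n, u))
        length = math.sqrt(sum(c * c for c in v))
        if length > 1e-8:  # reject the rare case where u is almost exactly -n
            return tuple(c / length for c in v)


rng = random.Random(0)
normal = (0.0, 1.0, 0.0)
mirror = reflect((1.0, -1.0, 0.0), normal)   # always the same direction
scattered = diffuse_direction(normal, rng)   # different on every call
```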

taby said:

You had mentioned that my use of a buffer for rays is slow. Is there another way to do this? Like use an SSBO or something?

You need to explain how much memory you need for what, so the overall idea of the algorithm. (idk bidirectional PT and how you diverge from its general implementation.)

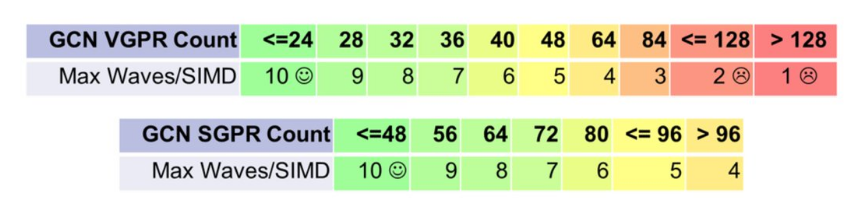

Some numbers i remember from AMD GCN, which probably still coarsely applies to newer GPUs of any vendor…

To achieve full occupancy, one thread can not use more than 24 registers (32 bit).

So if you use a 4x4 matrix which is unique data to each thread, you already use 16 registers for that.

If you use more, this image shows how occupancy (max waves/SIMD) decreases:

I talk about VGPRs, which means registers unique per thread (so a ‘V’ector of threads).

SGPRs are ‘scalar’ registers, which are the same for all threads. So one register can be shared for all, and we rarely run into limits here.
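The relation between VGPR count and occupancy can be approximated with a few lines of Python. The constants (256 VGPRs per lane per SIMD, allocation in steps of 4, at most 10 waves per SIMD) are assumptions based on commonly published GCN figures; other architectures use different numbers, so treat the chart as the reference and this only as a sketch.

```python
def max_waves_per_simd(vgprs_per_thread, vgpr_budget=256, granularity=4, wave_cap=10):
    """Estimate how many waves fit on one SIMD for a given VGPR usage.

    All default values are assumed GCN-like figures, not measured ones.
    """
    if vgprs_per_thread <= 0:
        raise ValueError("vgprs_per_thread must be positive")
    # Registers are handed out in blocks, so round up to the granularity.
    allocated = -(-vgprs_per_thread // granularity) * granularity
    if allocated > vgpr_budget:
        return 0  # does not fit at all; the compiler would have to spill
    return min(wave_cap, vgpr_budget // allocated)


for vgprs in (16, 24, 28, 32, 64, 128, 129):
    print(vgprs, "VGPRs ->", max_waves_per_simd(vgprs), "waves/SIMD")
```

Under these assumptions anything up to 24 VGPRs keeps the full 10 waves, and a 4x4 matrix per thread already eats 16 of those 24.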

I've never heard NV also uses vector/scalar registers. They may just use vector registers for everything.

Notice high occupancy is not always a big win. It means that a CU has other work available which it can do while waiting on something, usually some memory operation on VRAM. Memory access can take hundreds of cycles, so it's good to switch to some other work during the wait, similar to HyperThreading on CPU.

Tracing a ray likely has a much larger waiting time than a single memory access.

But this does not mean we could compensate the cost in any way at all. So i have no idea how much optimization potential there is for you.

It's likely more important to talk about what happens if you simply use too many registers (>128 from the chart), which i guess you might do.

In this case the compiler puts the stuff to VRAM ('Register Spilling').

Things get really slow then. We really make sure this never happens.

Or at least we should. I have seen profiler output from the Portal RTX game, and they spill registers like crazy.

In other words, the genius innovators and experts at NV can not develop a game properly, lol.

Or, they did it by intent, leaving it to their driver team to patch the shaders like for any AAA game.

This way AMD HW, which the profiling output i saw comes from, would get the bad code to run slow, lol.

But the question is: What should you do if you just need the memory for your stuff to work?

Should you manage the VRAM yourself? Or should you use register spilling, hoping compilers deal well with it, and eventually can do it better than you could?

But i don't know this, because i never had such a problem. If i have to guess, i assume NV deals better with register spilling than AMD. AMD leaves much more to the programmer than NV does. But if you do it well and respect HW limitations, AMD is much faster than NV, according to my now very dated experience.

Anyway, to manage VRAM yourself, you would use SSBO, yes.

But there also is on-chip RAM we could use, mostly called LDS. It's a bit of RAM directly on the CU, or on some GPUs it's the same RAM used for L0 cache. For GCN, AMD said that LDS is 14x faster than VRAM.

I would assume that spilled registers can live in L0 ideally, but there is no guarantee ofc. Still, that's probably a reason why you should not change anything.

To use and manage LDS RAM, compute shaders are currently the only option afaik. So you would need to switch to inline tracing and rewrite everything.

And the real problem is: Inline tracing can disable HW optimizations (ray reordering, binning, etc.), so i would try to avoid it. Currently, NV and Intel implement such optimizations.

AMD, implementing traversal in software, uses LDS memory for the ray traversal stack, afaik.

That said, there might be an option to do better. But it's a lot of work to try any option. Seems not worth it i would guess.

But if your algorithm could benefit from caching stuff in memory so work could be shared and thus reduced, it would be worth it pretty likely.

taby said:

Diffuse is randomized direction, versus specular which is reflected direction. Does that even make sense?

Yeah, that's what i think.

But then both are the same thing, and the terms would be kinda useless.

So maybe the real difference is:

Specular gets reflected immediately. The light just bounces off without change.

Diffuse scatters around on the surface, and when it exits, it may have changed its color.

Makes sense, but then it's wrong to say ‘metals only have specular reflections’, which i thought is true.

What a mess. But it's still better than working with meshes. ;D

Technically, subsurface scattering is not necessary for diffuse reflection. All you do is randomize the surface normal for diffuse reflection.

taby said:

Technically, subsurface scattering is not necessary for diffuse reflection. All you do is randomize the surface normal for diffuse reflection.

It's about scale. For diffuse reflection, we can assume the scattering radius is so small we can ignore it, while change in direction is large.

For subsurface scattering, the radius becomes large too, because the material is translucent.

taby said:

Speaking of subsurface scattering, how would one go about implementing that in the path tracer?

Probably calculating a random scatter offset for the reflected ray to bounce off, plus modeling the change of color and intensity along this offset thru the material.
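A very rough Python sketch of that idea: pick a random offset in the tangent plane of the hit point and attenuate the light along it. The exponential distance distribution and the Beer-Lambert style absorption are assumptions on my side, all names are hypothetical, and the sketch does not project the exit point back onto the real surface. It is not a validated subsurface model.

```python
import math
import random


def sample_scatter_distance(mean_free_path, rng):
    """Exponentially distributed travel distance inside the material."""
    return -mean_free_path * math.log(1.0 - rng.random())


def attenuate(color, absorption, distance):
    """Per-channel exponential falloff along the travelled distance."""
    return tuple(c * math.exp(-a * distance) for c, a in zip(color, absorption))


def subsurface_exit(hit_point, tangent, bitangent, mean_free_path, absorption, rng):
    """Return (exit_point, throughput) for one random scatter offset.

    tangent and bitangent are assumed to be unit vectors spanning the
    tangent plane at hit_point.
    """
    d = sample_scatter_distance(mean_free_path, rng)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    exit_point = tuple(
        p + d * (math.cos(phi) * t + math.sin(phi) * b)
        for p, t, b in zip(hit_point, tangent, bitangent)
    )
    throughput = attenuate((1.0, 1.0, 1.0), absorption, d)
    return exit_point, throughput


rng = random.Random(1)
# Skin-like guess: red is absorbed least, so it travels furthest.
point, weight = subsurface_exit(
    (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
    mean_free_path=1.0, absorption=(0.1, 1.0, 1.0), rng=rng,
)
```

The reflected ray would then start at `point` instead of the original hit, with its colour multiplied by `weight`.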

But not sure if this gives the expected blur or if i miss something. You need to check resources on how to model this anyway.

Pretty sure you also need a good model to make the effect noticeable, e.g. a human head for skin.

But do this only if you are really nerdy about rendering.

Imagine, you have a human head which looks like real, but then you animate it which lacks the same level of realism. You mostly amplify the Uncanny Valley effect for the very high cost of PT.

This becomes a noticeable problem already. E.g. i saw the trailer of the Avowed game. It uses UE5 and has very hq lighting, and also detailed rock models. Looks really awesome to me technically.

But the animation looks like a PS3 game. And this would not be so noticeable if the gfx were worse too.

They need to improve animation first. This is what makes games look bad the most currently, not bad lighting.

I guess many will use AI to solve this. But that's actually an application without ethical or legal concerns, imo.

(Being so paranoid, it's actually difficult to draw a line between good and bad applications of AI. The generative content theft drags the whole field into the dirt, somehow.)

However, what i really mean is: Any technical progress from the current state is rocket science. You see it with PT, where you have just scratched the surface but have no experience with what really matters to make it fast enough - denoising, ReSTIR, etc. And natural character animation isn't easier than graphics.

As a result, custom engines will soon vanish completely. And for those who will stay, realism is maybe not a good target.

We're dinosaurs, and the comet is already visible.

No need to say it - i'm the master of optimism, i know… ;D