In This Article

Siggraph Intro and Featured Speakers

2007 Autodesk User Group Meeting

Interview with Softimage

Allegorithmic Interview

Animation Theaters and Electronic Theater

Emerging Technologies

Other Events

Anecdote: The Tale of the Expired License

Siggraph Intro and Featured Speakers

On Tuesday, Joe Marks, the conference chair, welcomed everyone to the conference and spoke of some notable additions to this year's events, including the FJORG! event and the week's featured speakers.

He also spoke about the committees' intent to enrich the conference events by curating specific projects to increase the quality of the submissions, and offered "Curate to be great" as a mantra for the committee chairs.

Who will be the "Viking Animator?"

The FJORG! event is being called an "iron animator" competition, which pits teams of animators against each other to create and animate a scene in 32 hours. The animation must be based on a theme announced at the kick-off of the competition. At the conclusion, a jury will announce which team has earned the title of "Viking Animator" and award prizes from the sponsors: DreamWorks Animation, AMD and HP.

To drum up support for the competitors, teams of student volunteers run around the show halls shouting "FJORG" while wearing plastic Viking hats with horns sticking out of each side. Conference attendees can watch the animations as they are being created via video monitors that show both the animations and the animators' screens simultaneously.

Regarding the competition, Joe Marks commented that he received an emergency call at 4 AM reporting that the FJORG! teams had run out of coffee, a problem that was quickly remedied.

The Featured Speakers

Another big change for Siggraph 2007 was the replacement of a single keynote speaker with several featured speakers. Joe Marks mentioned that it was difficult to find a single speaker of interest to the entire Siggraph community since so many varied and distinct industries are represented.

Instead, three featured speakers would speak throughout the week, each presenting industry-specific coverage of more interest to one of the represented groups. This year's featured speakers were Glenn Entis, Senior VP for EA Games, for the commercial industries; graphic novelist Scott McCloud, for the art community; and Vilayanur S. Ramachandran, Director of the Center for Brain and Cognition, for the academic contingent. Ramachandran's presentation, however, had to be canceled due to a death in the family.

Video Game Challenges

The first of the two featured speakers at Siggraph 2007 was Glenn Entis, Senior Vice President for EA Games. Glenn is also one of the founders of Pacific Data Images (PDI), a pioneering 3D animation studio. He spoke of the thrills he has seen over his years of attending Siggraph. Every year, one or two key films, technologies or demonstrations have provided a unique thrill that dominated the week's conversations, such as raytracing and fractals, which amazed everyone when they were first introduced.

Creating these thrills is what drives us to do what we do, and they can come in three forms: 1. characters, 2. the worlds they inhabit, or 3. the tools used to create the characters and worlds.

There are three phases to the problems we face in computer graphics. The first phase is asking what and how, represented by a question mark (?); the second is determining what to do with it, represented by an exclamation point (!); and the third is making money on the thrill through commoditization, represented by a dollar sign ($). During this final phase, some of the thrill tends to fade as we begin doing the same thing over and over again.

As examples of these phases, he cited how PDI was paid thousands of dollars per second for broadcast graphics spots such as flying logos, which let the company build the studio one job at a time. With three major networks vying for attention, this phase was easy, but as cable introduced 500+ channels, the work was commoditized and prices dropped sharply, forcing PDI to move on to commercials and music videos to find the next thrill. One thrill found there was the morphing used in Michael Jackson's Black or White music video.

Morphing was an amazing technology when it was first introduced, but as everyone started to do it, it moved from amazing to interesting and finally to a commonplace, mass-production problem.

Glenn then showed how real-time graphics and games became the next place to find thrills. In the early days of Siggraph, real-time graphics was like a country cousin, lacking the visual fidelity of the other technologies. A few quick calculations show the gap. Games run at 60 fps, or 216,000 frames per hour, on a $400 console, while films are rendered at 24 fps, or 86,400 frames per hour, on a $3000 renderfarm. Games must therefore render 72,000 times faster than film, and once the price difference is factored in, the price/performance gap grows to 540,000 times. Games, in other words, are clearly a commodity problem: how do you get rendered pixels faster and cheaper?
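The quick calculations above can be checked with a few lines of Python. The frame rates, dollar figures and the 72,000 times speed gap are taken as quoted; the talk did not show how 72,000 was derived, so the 20-minutes-per-film-frame value below is a hypothetical assumption that merely happens to reproduce it.

```python
# Figures quoted in Glenn Entis's comparison of real-time and film rendering.
SECONDS_PER_HOUR = 3600

GAME_FPS = 60        # real-time game frame rate
FILM_FPS = 24        # film frame rate
CONSOLE_COST = 400   # dollars, as quoted
FARM_COST = 3000     # dollars, as quoted

# Quoted speed gap; the talk did not show how it was derived.
RENDER_SPEEDUP = 72_000

# Hypothetical assumption (not from the talk): a film frame taking
# 20 minutes (1,200 s) to render, versus 1/60 s for a game frame.
ASSUMED_FILM_SECONDS_PER_FRAME = 1200


def frames_per_hour(fps: int) -> int:
    """Frames needed to fill one hour of footage or gameplay."""
    return fps * SECONDS_PER_HOUR


def price_performance_gap(speedup: float, farm_cost: float, console_cost: float) -> float:
    """Scale the raw speed gap by how much cheaper the console is than the farm."""
    return speedup * (farm_cost / console_cost)


if __name__ == "__main__":
    print(frames_per_hour(GAME_FPS))                       # 216000
    print(frames_per_hour(FILM_FPS))                       # 86400
    print(GAME_FPS * ASSUMED_FILM_SECONDS_PER_FRAME)       # 72000
    print(price_performance_gap(RENDER_SPEEDUP, FARM_COST, CONSOLE_COST))  # 540000.0
```

The 540,000 figure is simply the 72,000 times speed gap multiplied by the 7.5 times cost ratio between the renderfarm and the console.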

He illustrated this with a slide of content from 1982 comparing a live-action Corvette shot, a CGI shot from Tron and a screenshot of Pac-Man. Clearly, video game visuals were significantly inferior to other media at the time.

All graphics benefit from Moore's Law, the observation that the number of transistors on a chip roughly doubles every two years, which drives rapid year-over-year gains in visual fidelity. Real-time graphics, however, gets a second boost because the consoles become more powerful as well. Glenn showed this with a video of the Need for Speed franchise from 1997 to 2006; the difference over so few years is incredible.

Real-time graphics will never be as powerful or as polished as non-real-time graphics, but the gap is closing, and as it closes, new challenges emerge. One is what Japanese roboticist Masahiro Mori called the "uncanny valley." If you plot the emotional response to a character against its realism, the curve rises roughly linearly, but as the character approaches realism, the emotional connection drops off dramatically. Another way to look at this is to plot motion fidelity against visual fidelity: any game whose characters fall below a certain line loses its appeal. This line is called the "Zombie Line," the point where characters lose their emotional connection to the audience.

For example, the ghosts in Pac-Man weren't realistic, but with their roving eyes, the game was engaging enough that we pumped thousands of quarters into the machine. Another example is Ernie, the Sesame Street puppet. Again, he is not visually realistic, but simply by opening his mouth as he speaks, he produces an array of expressions that create an emotional response. A third example is the characters in the movie Final Fantasy: The Spirits Within. Although they were very realistic, with incredible motion and visual fidelity, many viewers rejected them because their visual appearance didn't match their motion.

Adding more polygons to a character to improve its visual fidelity doesn't make it work. If you ignore motion fidelity, more polygons actually make the problem worse and keep the character below the "Zombie Line." The solution was to capture everything using 3-camera motion capture systems. By pairing high visual fidelity with near-perfect motion, the characters came alive, but a new problem appeared: the characters lacked personality and interactive life. If a character keeps boxing after an atomic bomb has exploded, the audience knows immediately that something is not right. Characters need to react to their environment, and it turns out that "intelligence is better than motion." Characters have to learn to respond and react, and the illusion of interactive life opens a whole new set of opportunities.

In addressing the problem of worlds, Glenn mentioned that in the early days of computer graphics, it was a fight to get visual effects shots included in films. Judging by recent trends, we can now happily declare that "we won." Visual effects are ubiquitous in film, but now it feels like drinking from a firehose.

A good example of a world that responds and reacts is the Crysis game engine developed by Crytek. The engine includes many new environmental controls aimed at "putting nature in your hands." Trees fall and collide with the world around them, ocean waves make nearby objects bob up and down, and bullets can penetrate any object in the scene.

The tools arena is unique because while characters and worlds are for millions of users, tools are for us, a specialized group. Several games make the tools themselves the game. A good example is The Sims, the world's best-selling game franchise. One reason for its success is that Sims players love to make stuff: the game lets them create content that they can then interact with, and these tools keep players engaged.

Another good tool example is the Virtual Me character creation tool, which lets players create, style, paint and output their character avatar. A final example is Spore, which includes powerful tools to morph and auto-rig characters that interact within an environment where everything can be controlled. Although there is a game, it is the tools that will keep users engaged and coming back.

To conclude, Glenn said that if people are not having fun creating games, players won't have fun playing them, and that people will want to create content themselves. Players really want characters that react and talk back; worlds that look beautiful and behave beautifully with real-time dynamics; and tools that are not only for professionals, but also for millions. This is a huge opportunity for new thrills.

2007 Autodesk User Group Meeting

The 2007 Autodesk User Group Meeting drew a sizable crowd for several product announcements and demos of new features in 3ds Max, Maya and Toxik. Marc Petit, Senior VP of the Media and Entertainment group, greeted the audience and then handed the stage to Shawn Hendricks, who acted as MC for the event.

The meeting started with the announcement that Autodesk had acquired Skymatter, the creator of Mudbox, a software package that simulates modeling with clay. The enthusiastic crowd cheered the news, and the Skymatter team was invited up to the stage to explain how Mudbox came to be.

The next guest to take the stage was Christine Mackenzie, Executive Director of Marketing for Chrysler. She spoke about how Chrysler had used the Autodesk tools to create a virtual fleet, saving much of the cost of producing, shipping and displaying prototype vehicles as a vehicle approaches its release date. Every attendee was also given a collectible toy car courtesy of Chrysler; I ended up with a green, sporty 2006 Dodge Challenger Concept muscle car. To end her talk, Christine revealed a brand new prototype of the Chrysler Demon vehicle, located to the right of the stage (Figure 1).

The next part of the program showed off the latest features to be included in 3ds Max 2008. These features were demoed by Vincent Briseboise and included the following:

- Self-illumination materials that work with mental ray and can light the scene using physical candela values.

- A new mental ray Photographic Exposure Control with settings common on cameras, including shutter speed, aperture (f-stop) and film speed (ISO).

- The mr Sky Portal control for focusing light from a daylight system shining through a window.

- The ability to view lights and shadows within the viewport in real-time.

- The new Scene Explorer interface, which provides a table of settings for all objects in the scene.

- The new Line of Sight tool to see what is obscured from a camera or light.

- A new X-Ray joints viewing method.

- A new Smoothing skin weight tool.

- The ability to rig models non-destructively.

- The new Move Joint tool.

- The ability to bind skin regardless of the current pose.

- The Copy Weight tool that appeared in Maya 8.5.
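Camera-style exposure settings like those in the new exposure control combine through the standard photographic exposure-value relation. The sketch below computes it; whether mental ray's control uses exactly this mapping internally is an assumption, not something the demo showed, and the function name is hypothetical.

```python
import math


def exposure_value(f_number: float, shutter_seconds: float, iso: float = 100.0) -> float:
    """Standard photographic exposure value, normalized to ISO 100.

    EV = log2(N^2 / t) describes the aperture/shutter pair. A higher ISO lets
    the same pair expose a darker scene, so EV_100 = log2(N^2 / t) - log2(ISO / 100).
    """
    return math.log2(f_number ** 2 / shutter_seconds) - math.log2(iso / 100.0)


if __name__ == "__main__":
    # f/8 at 1/125 s and ISO 100
    print(round(exposure_value(8, 1 / 125), 2))       # 12.97
    # Doubling the ISO lowers EV_100 by exactly one stop.
    print(round(exposure_value(8, 1 / 125, 200), 2))  # 11.97
```

Each doubling of the f-number adds two stops, and each doubling of shutter time or ISO removes one, which is why these three controls can trade off against each other for the same brightness.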

Shawn then introduced the new 3ds Max and Maya Masters who were each presented with a nice jacket and recognized by the user group for their achievements.

Then Vincent returned to show off some more new 3ds Max 2008 features including:

- The Preview Selection option, available when poly modeling.

- The new Normal constraint poly modeling option.

- The ability to divide a Chamfer into segments.

- The new Working Pivot that can be set as you work.

- The ability to apply UV mapping to several pieces at once.

Then Michel Besner, Senior Director of Product Management, gave attendees a look at some projects still under wraps, including Sextant, a new tool in development. Sextant is a visual design tool for creating storyboards. It includes a sketching mode, a timeline for sequencing, and review and annotation features. It can work with both 2D and 3D content, including imported 3D models and motions.

Another look at research at Autodesk was provided by Duncan Brinsmead who showed off a number of fun examples of Maya in use. Duncan's examples included using cloth to create a book with flipping pages, using hair to create a realistic, twisting phone cord, using a cloud field to simulate the look of ink being dropped and mixed in water, and a realistic slinky simulation.

In one of the best examples, Duncan showed an email from a user who asked how to make the letters on a page fall away and float to the ground. Duncan then displayed his response, in which the letters of the user's actual email fell away and floated to the ground. He concluded his presentation with a simulation of several paper airplanes created in Maya.

Following the user group meeting, attendees were treated to a private party called the Steel Beach Base aboard the USS Midway, docked in San Diego Bay, complete with food, drink and a fireworks show. The party was sponsored by Autodesk and CafeFX.

Interview with Softimage

I had a chance to speak with Kevin Clark of Softimage. Softimage had a busy show announcing the release of Softimage XSI 6.5 for Essentials and Advanced with over 30 new features. These developments are the results of the work over the last 8 months with their various partners including Valve, Lionhead Studios, EA and Animal Logic. Many of the new features were co-developed with their partners to address specific problems and are now included in the product.

Softimage also announced a new pricing structure for their XSI line-up. The entry-level Foundation package will remain at $495. The professional-level Essentials package is increasing in price to $2995, but it now includes the Joe Alter Hair and Fur, Syflex Cloth and Physics modules previously unavailable in Essentials. With these additions, the Essentials package includes everything a digital artist wants.

The price of the Softimage XSI Advanced has dropped to $4995, which now includes 5 more render licenses for a total of 6 render nodes. This offering includes everything you need to build a small production studio from one box. The Advanced package also includes a Fluid solver, a Behavior module for doing crowds, and the Elastic Reality morphing module. The goal is to make it easy for artists and technical directors to get all the tools they need.

Essentials and Advanced also include the new features released as part of 6.5, including HDRI support, an improved SDK, enhanced UV mapping, and updated audio support for precise audio syncing.

Kevin also mentioned the benefits of opening the rendering API in version 6. Several new third-party renderers are now available for XSI, including Maxwell Render, V-Ray and 3Delight, while mental ray is still included as the embedded renderer. There are also rumors of talks with Pixar about integrating RenderMan, but no announcement can be made at this time.

Softimage Face Robot 1.8 was also announced at the show. This release includes fast integration with Maya and other game pipelines. Many companies balk at the $100,000 price tag for Face Robot, but that figure is the top-end price, which includes a team of consultants and ownership in the product. Face Robot and its pricing structure are very scalable; it can even be rented for a 2-month project for a reasonable fee.

Face Robot makes it possible for every game character, not just the main characters, to have a believable, realistic emotional performance, using either keyframing or mocap. Kevin wanted the game industry to know that Face Robot is a unique production tool that brings a whole new level of realism to games.

New features in version 1.8 include running inline with the XSI 6 engine, and it also works with 3ds Max or Maya via Crosswalk. It has a new shape rig export system for blend shapes and an updated pipeline export that includes auto-enveloping. It also ships with 7 preset head models instead of just one, covering different races, genders and styles, so you can start out more accurately. A 23-part set of training videos is also available.

Overall, Softimage has just reported its best fiscal quarter in the past couple of years and feels the company has great momentum. Siggraph is always a great chance to see the various Softimage customers showing off their amazing works.

Interview with Allegorithmic

One problem facing the gaming world is the ongoing need for content creation tools, especially tools for creating and managing textures. Textures are expensive, both in the time required to create them and in their memory footprint within the game engine. One company that has emerged to address these issues is Allegorithmic.

Allegorithmic was formed to commercialize the procedural texture research by Sebastien Deguy. The company has already delivered two products--MapZone, a texture authoring tool; and ProFX, a middleware component that plugs into the game engine to produce procedural textures in real-time.

MapZone

MapZone lets you build complex 2D textures the same way you'd build 3D shaders, using a hierarchical tree structure. Unlike a layered Photoshop texture, this non-linear approach never requires flattening. By reusing sub-nodes that have already been created, MapZone lets textures be created up to 2 times faster than current methods. It also makes it easier to produce variations and experiment with different looks and effects.
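The node-tree idea can be sketched in a few lines of Python. This is not MapZone's file format or API; the node types (Checker, Stripes, Blend, Multiply) are hypothetical stand-ins chosen to show how a sub-node built once can be reused by several textures, and how the tree only becomes pixels when it is rendered.

```python
import math


class Node:
    """A texture node maps coordinates (u, v) in [0, 1) to a value in [0, 1]."""

    def sample(self, u: float, v: float) -> float:
        raise NotImplementedError


class Checker(Node):
    def __init__(self, tiles: int):
        self.tiles = tiles

    def sample(self, u, v):
        return float((int(u * self.tiles) + int(v * self.tiles)) % 2)


class Stripes(Node):
    def __init__(self, frequency: float):
        self.frequency = frequency

    def sample(self, u, v):
        return 0.5 + 0.5 * math.sin(2 * math.pi * self.frequency * u)


class Blend(Node):
    """Linear mix of two child nodes."""

    def __init__(self, a: Node, b: Node, weight: float):
        self.a, self.b, self.weight = a, b, weight

    def sample(self, u, v):
        return (1 - self.weight) * self.a.sample(u, v) + self.weight * self.b.sample(u, v)


class Multiply(Node):
    def __init__(self, a: Node, b: Node):
        self.a, self.b = a, b

    def sample(self, u, v):
        return self.a.sample(u, v) * self.b.sample(u, v)


def render(node: Node, size: int) -> list[list[float]]:
    """Evaluate the tree at pixel centers; nothing is flattened until this step."""
    return [[node.sample((x + 0.5) / size, (y + 0.5) / size) for x in range(size)]
            for y in range(size)]


# One Stripes sub-node, built once and shared by two different textures.
stripes = Stripes(frequency=4)
tiles = Blend(Checker(tiles=8), stripes, weight=0.5)
planks = Multiply(stripes, Checker(tiles=2))
```

Changing `stripes.frequency` updates both `tiles` and `planks` at once, which is the kind of reuse the non-linear approach allows and a flattened bitmap does not.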

MapZone 2.5 is available as a free download from the web site, and version 2.6 is currently in development. The company has noted 25,000 downloads of MapZone since March. A retail version, called MapZone Pro, is available and bundled with ProFX.

Sebastien commented that procedural textures shouldn't replace hand-drawn textures for all game assets, but that at least 70% of a typical game's textures can be replaced with procedural textures.

ProFX

ProFX is a middleware component that plugs into the game engine to deliver real-time procedural textures to the game. These procedural textures are between 500 and 1000 times smaller than standard textures. The current version supports Epic's Unreal Engine 3, Gamebryo, Virtools and Trinity, and an API is available to tie ProFX into other engines. It is currently available for PC and Xbox 360, with PS3 support coming later this year. The ProFX components can deliver textures to the game at a speed of 20 MB/sec.
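To put the quoted figures in perspective, the arithmetic below applies them to a hypothetical 1024 by 1024 RGBA texture. The texture size and the decimal definition of a megabyte are assumptions; the 500 to 1000 times size ratio and the 20 MB/sec rate are the figures Allegorithmic quoted.

```python
# Hypothetical example texture: 1024 x 1024 pixels, 4 bytes per pixel (RGBA8).
WIDTH = HEIGHT = 1024
BYTES_PER_PIXEL = 4

# Ratios and throughput as quoted for ProFX; 1 MB is assumed to be 10**6 bytes.
SIZE_RATIOS = (500, 1000)
THROUGHPUT_BYTES_PER_SEC = 20 * 10**6


def bitmap_bytes(width: int, height: int, bpp: int) -> int:
    """Uncompressed size of a stored bitmap texture."""
    return width * height * bpp


def procedural_bytes(bitmap_size: int, ratio: int) -> float:
    """Size of the procedural description if it is `ratio` times smaller."""
    return bitmap_size / ratio


def generation_seconds(bitmap_size: int, throughput: float) -> float:
    """Time to expand the description back into a full bitmap at `throughput`."""
    return bitmap_size / throughput


if __name__ == "__main__":
    size = bitmap_bytes(WIDTH, HEIGHT, BYTES_PER_PIXEL)  # 4,194,304 bytes
    for ratio in SIZE_RATIOS:
        print(ratio, round(procedural_bytes(size, ratio)))  # about 8.4 KB, then 4.2 KB
    print(round(generation_seconds(size, THROUGHPUT_BYTES_PER_SEC), 3))  # 0.21
```

Under these assumptions, a 4 MB texture shrinks to a few kilobytes on disk and takes roughly a fifth of a second to regenerate.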

Naked Sky's RoboBlitz, built on Unreal Engine 3 using PhysX and ProFX components, was delivered over Xbox Live within a 50 MB footprint. Another example is the Virtual Earth project, which specified an 8 MB footprint and is available as a download over the Web.

Animation Theaters and Electronic Theater

Computer Animation Festival

I found time on Sunday to attend the Animation Theater, which features the best animated pieces submitted over the last year. Many of these shorts are stunning, some are delightful and humorous, and several are just plain weird.

Improved resolution of 4K

As I walked into the theatre, a 12-minute film written and directed by Peter Jackson was playing in 4K format via a Sony SXRD projector. This simple short was shot to show off new 4096 by 2048 resolution camera technology, and it was amazing. The clarity and picture quality are several times better than HD, and the entire production was shot in just two days. The key was a unique compression drive that enabled a huge amount of film data to be captured with minimal loss.
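Pixel count gives one concrete measure of the jump. The short sketch below compares the quoted 4096 by 2048 frame with 1080p HD, which is assumed here as the HD baseline.

```python
def pixel_count(width: int, height: int) -> int:
    """Total pixels in one frame."""
    return width * height


FOUR_K = (4096, 2048)   # resolution quoted for the short
FULL_HD = (1920, 1080)  # assumed HD baseline (1080p)

if __name__ == "__main__":
    ratio = pixel_count(*FOUR_K) / pixel_count(*FULL_HD)
    print(pixel_count(*FOUR_K), pixel_count(*FULL_HD), round(ratio, 2))  # 8388608 2073600 4.05
```

By this measure, each 4K frame carries roughly four times the pixels of a 1080p frame.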

One segment of the festival showed other 4K examples including a rendered piece about flying to the center of the Milky Way galaxy. Now that we've all converted to HD, it is interesting to see what is next on the horizon.

Elsewhere at the conference, a huge flat-screen display showed slides in 4K, and you could get close enough to examine the details of the improved resolution. Cool stuff.

Game animations and awards

All of the accepted pieces were divided into several categories: 4K, Music, Creativity, Games and FX, Science, Storytelling, and Madness. Of these, I probably enjoyed the Games and FX category the best; many of the game and film studios continually blow away the competition with their cutting-edge work. The category included the birth of the Sandman from Spiderman 3, the intro to Marvel Ultimate Alliance, the opening sequence of Lost Odyssey, and animations from World of Warcraft: The Burning Crusade, Warhammer Online, Half-Life 2: Episode 2, Fight Night Round III and NBA Street.

Three shorts were singled out from a record-breaking 905 submissions. Dreammaker, submitted by Leszek Plichta of the Institute of Animation, Visual Effects and Digital Post Production at Filmakademie Baden-Württemberg, Germany, received Jury Honors; En Tus Brazos, submitted by François-Xavier Goby, Edouard Jouret and Matthieu Landour of Supinfocom Valenciennes, France, received the Award of Excellence; and Ark, submitted by Grzegorz Jonkajtys and Marcin Kobylecki of Poland, was named Best in Show.

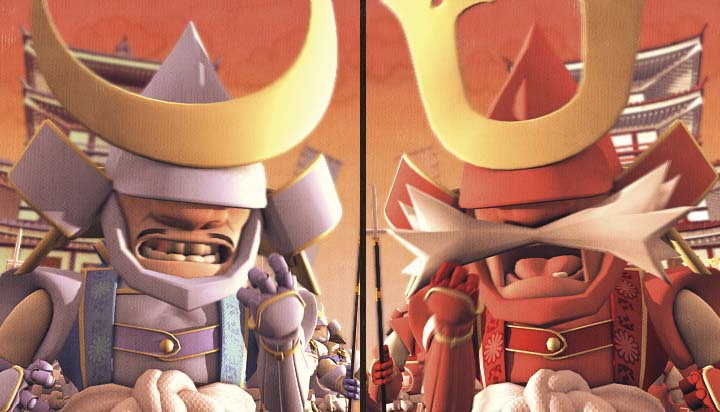

Of all the shorts, one of my favorites was Versus (Figure 1), which showed a duel between two comic Japanese warlords fighting foolishly over a small island between them.

Image Courtesy of Supinfocom/Premium films

Another one of my favorites was called Contrast, which featured Escher prints where the sketched objects are animated in 3D.

Animation Theater Chair

I had a chance to speak with Paul Debevec, the Animation Theater chair, about the pieces selected for this year's Animation Theaters. Paul mentioned that there were over 900 submitted pieces. One criterion that worked against a submission was if it seemed derivative; the committee was on the lookout for animations that were new, unique and original.

Paul also mentioned that many of the European pieces met this originality criterion and that in many ways, European schools are raising the bar for the quality of work shown at Siggraph.

Electronic Theatre

Of course the best animated pieces are saved for the Electronic Theater. This year's Electronic Theatre pre-show featured a laser typically used in planetarium light shows configured to re-create the early video game hits, Asteroids, Tempest and Star Wars. Players for each game were selected by tossing out soft objects into the audience.

For the players, it must have been quite a thrill to play these classic video games on a 40-foot screen with an auditorium of 3000 people cheering them on. The player selected for Asteroids in my session was confused by the controls and quickly died when the first rock hit him, while the whole audience booed his lack of success. He was mercifully given a second chance, where he did much better, even blowing up a couple of spaceships. The Tempest and Star Wars players also seemed to die off quickly.

The animation pieces presented in the Electronic Theatre were absolutely spellbinding. Each distinct from the others, the collection featured shots from Pan's Labyrinth, World Trade Center, Surf's Up, Spiderman 3, 300, Pirates of the Caribbean and the digital birth from Children of Men, among others. There were also humorous shorts from Pixar, about an alien trainee program, and from Blue Sky, the makers of Ice Age, featuring Scrat, the saber-toothed squirrel. These pieces clearly showed why these studios have been so successful.

Game clips in the Electronic Theatre included a humorous clip explaining Valve's upcoming game, Portal, along with a show reel of EA games showing Crytek, Gears of War and Resistance: Fall of Man.

Emerging Technology Venue

I had a chance to tour the Emerging Technologies floor with John Sibert and Kathy Ryall, the co-chairs for this venue. Kathy mentioned that many of the displays are at various levels of readiness with some being commercially available and others still in early stages of development. Regardless of their stage, these technologies represent not the cutting edge, but the "bleeding edge" of technology.

The Emerging Technology venue showed off several new technology developments, including several curated by the venue's co-chairs, such as Microsoft Surface, which was being shown for the first time on the West Coast. Many of the other curated developments focused on display technologies. The co-chairs mentioned that roughly a third of the 23 available exhibits were curated.

The first display showcased a system of motion detectors used to determine the movement of crowds of people through the conference floor. This technology could be used someday to create smart buildings that adapt their layouts based on the flow of people.

Other displays included a lens-less stereo microscope and several haptic interface devices that provide feedback to the user. These devices could make a big splash in the gaming world where feedback could help players make critical decisions.

Several display technologies showed backlit monitors that can be readily seen even in bright sunlight. Another monitor had embedded optical sensors making the screen able to detect touch and even track a laser pointer.

Another display, developed by a team at the University of Southern California, showed a 360-degree view produced by lights traced at 5000 rpm. Although the display is only black and white, it shows true 3D imagery that can be seen from any angle, with no sweet spot. Another exhibit placed an HDR display side-by-side with a normal display, where you could really see the difference that High Dynamic Range images make.

Another clever interface device was the Soap mouse. It works by wrapping the mousepad around an optical mouse, so the mouse can be used without being placed against a surface. One of the developers showed how his gameplay improved with the Soap mouse by storming through an Unreal Tournament level.

In the way-out-there arena was a device that sent specific commands to the game when two players touched or clasped hands. It worked by passing a small electric current through the game controller: when the two players touched hands, the circuit was completed and the touch was detected (Figure 1). This could be really interesting if two side-by-side players needed to give high-fives in order to launch a special attack.

Image Courtesy of the Kyushu University

Another way-out design was a wind interface that could detect wind created with a hand fan and reproduce the same wind at another computer using an electric fan, along with a video feed.

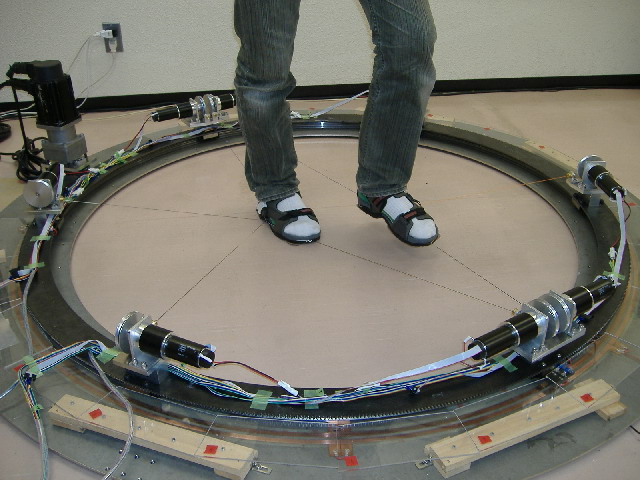

There was also a device called the String Walker (figure 2), presented by a team from the University of Tsukuba, that lets you walk endlessly using a VR system without moving by sliding the feet back and forth over a surface.

Image Courtesy of the University of Tsukuba

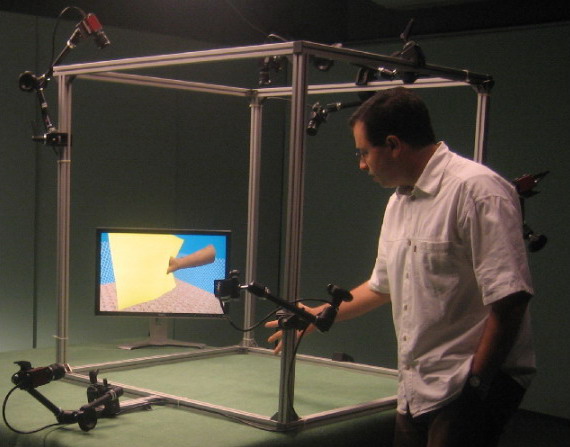

One of the coolest displays showed off a real-time 3D geometry capture system that used an optical camera to scan any object placed in the detection field, including a hand, capture its motion, and display it back on a monitor (figure 3). The captured object could then interact with virtual objects.

Image Courtesy of INRIA

Other Events

Exhibition Floor

The Exhibition Floor at Siggraph as usual was a frenetic display that included all the top vendors. There were a total of 235 exhibits this year, an increase of 12% from the previous year. Joe Marks, the conference chair, commented that technology seems to go through highs and lows and that this increase seems to mark the beginning of a dynamic growth period.

Most of the larger booths were filled with software tool companies, including Softimage, Autodesk, Pixologic, etc. Hardware vendors including AMD, Nvidia and Intel also had a strong presence. Other key booths included a number of schools, such as the Art Institutes, Savannah College of Art and Design and Ringling College, and a number of studios, including Pixar, Blue Sky, Rhythm and Hues, Digital Domain, Sony, Disney, etc.

The recruiting team for EA games invited all attendees that passed by their booth to their hosted room where they threw out games and t-shirts to a wild crowd before selecting a grand prize winner to receive a cool EA backpack filled with a game console and several accompanying games.

Also of note were several new motion capture companies that have designed systems around new inertial sensors, letting these normally expensive systems be built for a fraction of the cost, without the expensive gear.

Job Fair

The Job Fair was located in a separate room from the exhibition and was organized and run by CreativeHeads. The fair included several key studios and game recruiters including Vivendi, EA, Animal Logic, Blizzard, CafeFX, Insomniac, Microsoft, Intel, Radical, Red 5, Sega Studios, Sony, Disney and many others. Most were accepting resumes and demo reels and several were scheduling interviews throughout the week.

Guerilla Studio

The Guerilla Studio venue provides attendees with a chance to go hands-on. With donated software and hardware coupled with several experienced tutors, attendees can spend time creating art. The atmosphere is friendly and inviting and even included several 3D printers to try out.

Also new this year were 6 artist-in-residence tutors that were invited to live in the Guerilla Studio during the show. 3 of these artists were new to digital technology and the experiment was to see how their traditional skills translated to the new media.

Art Gallery

The theme for this year's Art Gallery display was "global eyes." The exhibit included submitted works as well as several curated works from well-known artists, and artists from many developing countries were represented.

Special Sessions

Several special sessions were held at Siggraph where some of the best visual effects for the year were explained by the experts. Special sessions were available for Happy Feet, Shrek 3, Transformers and Spiderman 3.

I was able to attend the Spiderman 3 special session presented by Spencer Cook, Ken Han and Peter Knoffs. Spiderman 3 included 900 visual effects shots, many of them far more advanced than those in the previous two Spiderman movies. The director, Sam Raimi, believed that you should shoot as much as possible and use it when you can, and the availability of high-speed winches made many shots possible that weren't possible before.

One trick used on this film was face replacement wherever possible. By scanning and texturing CG faces, the team could replace a filmed face with its CG duplicate if the expression or look of the character wasn't what the director wanted. The same technique was used to change costumes, such as the design of Spidey's black suit, which changed late in production, and to replace limbs to create smooth effects as the characters moved about.

The environments were another challenge tackled by the VFX team including the airborne battle between Spiderman and Harry, which took place in the "world's longest alley."

Anecdote: The Tale of the Expired License

Computer conferences can be tough. You spend a long week in an unfamiliar city. There are sessions to attend, meetings that can't be missed and strange city streets to navigate. I've found that planning out the conference beforehand makes things go much more smoothly, because something is always bound to put a wrinkle in your trip.

My wrinkle for this conference came in the form of a birthday present. My birthday was about a week before the conference started, and amid my busy preparations, I failed to notice that my driver's license had expired, a fact that didn't bother me until I arrived in California and tried to rent a car to drive to the conference. So there I was, stranded at the airport miles away from the conference I had so carefully planned. Luckily, with several taxis, a shuttle and the Amtrak train, I managed to find my way to the conference, but my carefully laid-out plan was already out of whack.

For the rest of the week, I learned the ins and outs of the San Diego mass transit system, which worked out just fine. It dropped me off fairly close to the conference center, and I didn't have to worry about parking. Everything seemed to be going along fine until I tried to book the final train ticket to the airport.

When trying to buy a train ticket to the airport, I was informed that I couldn't purchase a ticket without a current ID. Luckily, I had a school ID that the ticket agent accepted or I'd still be in San Diego trying to thumb my way home. Actually, I think the ticket agent took pity on me after a desperate plea to just let me go home. Well, I made it to the airport okay.

But then, at the airport, the ticket agent marked my boarding pass with a bright yellow highlighter, a mark that was immediately recognized at the security gate. In fact, it seemed to make everyone at the security check very uneasy and landed me in a special queue; I was flagged as someone who needed extra attention. I'm sure my full beard didn't help my appearance. I knew I should have shaved for the conference.

Finally, after an exhaustive search that included being patted down, I was allowed to board and return home. Having learned my lesson, I drove home cautiously and well within the speed limit and renewed my license the next day. Now I don't have to worry about this happening for another 7 years.