Before we get into it, a quick warning: I was forced to use Flickr for the pictures this time, as I haven't been able to upload to gamedev for 4 days and counting... hopefully it works OK.

The best advice I ever heard for tackling a large, complicated piece of work is: refactor until it is trivial. The idea is that, instead of fundamentally breaking your code with some wholesale change and then spending months trying to make it work, you make a series of small cleanups/improvements/extensions to the code that eventually make what you're trying to do both very safe and very simple.

In the spirit of that advice, the first step in adding hardware shaders doesn't involve hardware shaders at all! All we're going to do is take the current fixed-function rendering code (which supports material sorting, transparent object sorting, and stencil-buffer shadow volumes) and decompose it (i.e. refactor it) into a series of rendering building blocks which we can then use to build more complicated rendering loops. If we do our job right, the game should look exactly the same before and after ... but obviously under the hood, it should be a little cooler.

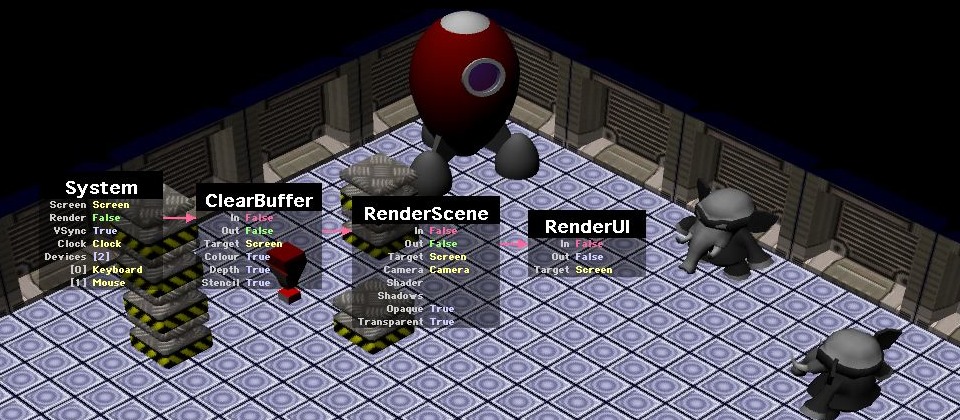

The first step was to turn the old "Renderer" object (which did all the work) into a virtual base class for any object that wants to render objects in the scene. This obviously means we're going to pay a virtual function call overhead that we didn't before (and traverse the scene multiple times for each render pass), but this is just the price of admission for a more flexible renderer. I then moved all the basic scene rendering code into a new RenderScene class, and put all the old UI rendering code into a RenderUI class. Finally, I pulled the buffer clearing out into another object, and gave all the objects In and Out properties that allowed you to assemble them into a sequence.
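A minimal C++ sketch of the structure described above, under stated assumptions: the `Render` method, the `RenderContext` type, the `Link` helper and the `RunRenderLoop` driver are hypothetical names (the post only mentions `Renderer`, `RenderScene`, `RenderUI` and the In/Out properties), and each block just logs its name instead of issuing draw calls. The post also doesn't say whether each block hands control to its `Out` or whether an outer loop walks the chain; this sketch assumes an outer loop.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical per-frame state handed to every block.
struct RenderContext {
    std::vector<std::string> log;  // records which blocks ran, in order
};

// Hypothetical virtual base class for anything that renders in the loop.
class Renderer {
public:
    virtual ~Renderer() = default;

    // One virtual call per block per frame: the "price of admission".
    virtual void Render(RenderContext& ctx) = 0;

    // In/Out wiring used to assemble blocks into a sequence.
    Renderer* In = nullptr;
    Renderer* Out = nullptr;
};

// Hypothetical helper: connects a -> b.
inline void Link(Renderer& a, Renderer& b) {
    assert(&a != &b && "a block cannot feed itself");
    a.Out = &b;
    b.In = &a;
}

class ClearBuffers : public Renderer {
public:
    void Render(RenderContext& ctx) override { ctx.log.push_back("clear"); }
};

class RenderScene : public Renderer {
public:
    void Render(RenderContext& ctx) override { ctx.log.push_back("scene"); }
};

class RenderUI : public Renderer {
public:
    void Render(RenderContext& ctx) override { ctx.log.push_back("ui"); }
};

// Assumed driver: walks the chain from its head, running each block once.
inline void RunRenderLoop(Renderer* head, RenderContext& ctx) {
    for (Renderer* r = head; r != nullptr; r = r->Out) {
        r->Render(ctx);
    }
}
```

Assembling the loop is then a matter of calls like `Link(clear, scene); Link(scene, ui);` followed by `RunRenderLoop(&clear, ctx)` once per frame.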

With the basic rendering cleaned up, I then ported the old shadow code onto the new Renderer interface (so it runs as "just another scene rendering pass") and hooked it into the render loop.
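To illustrate what "just another scene rendering pass" can buy you, here is a hypothetical sketch of splicing a shadow block into an existing chain without touching its neighbours. `RenderShadows`, `NamedPass` and `InsertAfter` are invented for illustration, and the stencil shadow-volume work is reduced to two log entries (one common variant: draw the volumes into the stencil buffer, then darken the pixels whose stencil value is non-zero).

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

struct RenderContext {
    std::vector<std::string> log;  // stand-in for real GPU work
};

// Same hypothetical base class shape: virtual Render plus In/Out links.
class Renderer {
public:
    virtual ~Renderer() = default;
    virtual void Render(RenderContext& ctx) = 0;
    Renderer* In = nullptr;
    Renderer* Out = nullptr;
};

// Hypothetical generic block that only records its name.
class NamedPass : public Renderer {
public:
    explicit NamedPass(std::string name) : name_(std::move(name)) {}
    void Render(RenderContext& ctx) override { ctx.log.push_back(name_); }
private:
    std::string name_;
};

// Hypothetical shadow block: the stencil steps are only logged here.
class RenderShadows : public Renderer {
public:
    void Render(RenderContext& ctx) override {
        ctx.log.push_back("shadow volumes -> stencil");
        ctx.log.push_back("darken stencilled pixels");
    }
};

// Splices an unlinked `pass` into the chain directly after `prev`.
inline void InsertAfter(Renderer& prev, Renderer& pass) {
    assert(pass.In == nullptr && pass.Out == nullptr && "pass already linked");
    pass.Out = prev.Out;
    pass.In = &prev;
    if (prev.Out != nullptr) prev.Out->In = &pass;
    prev.Out = &pass;
}

inline void RunRenderLoop(Renderer* head, RenderContext& ctx) {
    for (Renderer* r = head; r != nullptr; r = r->Out) r->Render(ctx);
}
```

The design point is that the existing scene and UI blocks need no changes at all; only the links around the insertion point move.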

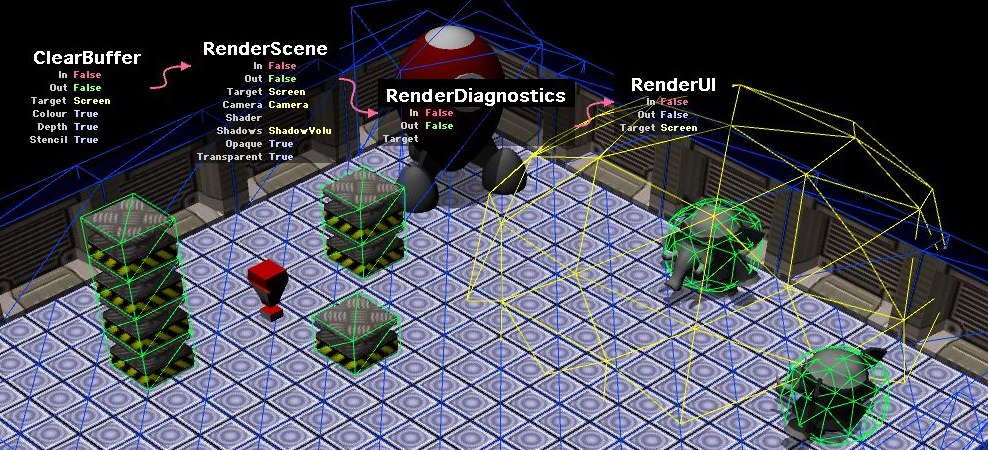

And then I made all the old physics diagnostics part of a new RenderDiagnostics pass. The cool thing here is that the blocks in the render loop could be any object in the game: you could run a script in the middle of the render loop, hide something, move things, change material properties ... anything you want really.
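A hypothetical sketch of the "any object can be a block" idea: a `RunCallback` block wraps arbitrary game logic (here, hiding an object) and sits between two scene passes, followed by a diagnostics pass. `RunCallback`, `SceneObject` and the string-based "draw" log are assumptions made for illustration, not the engine's actual types.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

struct SceneObject {
    std::string name;
    bool visible;
};

struct RenderContext {
    std::vector<SceneObject> objects;
    std::vector<std::string> drawn;  // record of what each pass "drew"
};

class Renderer {
public:
    virtual ~Renderer() = default;
    virtual void Render(RenderContext& ctx) = 0;
    Renderer* Out = nullptr;  // next block in the sequence
};

// Draws every visible object (stand-in for real draw calls).
class RenderScene : public Renderer {
public:
    void Render(RenderContext& ctx) override {
        for (const auto& obj : ctx.objects)
            if (obj.visible) ctx.drawn.push_back(obj.name);
    }
};

// Hypothetical diagnostics pass: would draw physics debug shapes.
class RenderDiagnostics : public Renderer {
public:
    void Render(RenderContext& ctx) override { ctx.drawn.push_back("[physics debug]"); }
};

// Hypothetical block wrapping arbitrary logic: a script, a material tweak, etc.
class RunCallback : public Renderer {
public:
    explicit RunCallback(std::function<void(RenderContext&)> fn) : fn_(std::move(fn)) {}
    void Render(RenderContext& ctx) override { fn_(ctx); }
private:
    std::function<void(RenderContext&)> fn_;
};

inline void RunRenderLoop(Renderer* head, RenderContext& ctx) {
    for (Renderer* r = head; r != nullptr; r = r->Out) r->Render(ctx);
}
```

Because the callback block runs mid-frame, the second `RenderScene` pass sees whatever state the callback left behind.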

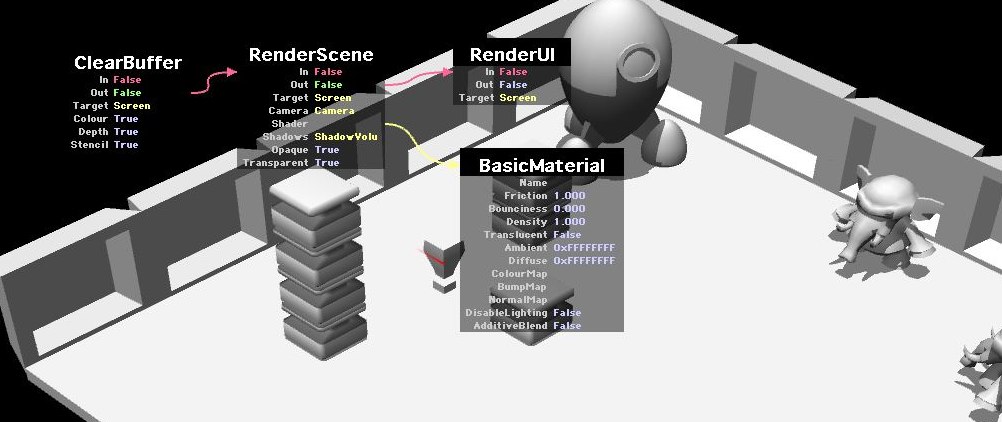

The final step in the fixed-function cleanup was to extend the RenderScene block to let you select the Camera you wanted to use, filter which passes you wanted to draw (opaque/transparent), and override the shader if you wanted. This last one is particularly powerful once we start looking at fullscreen effects (as it allows us to do things like depth passes, normal passes, etc.), but for now, a trivial example is rendering the whole scene using a white Lambert shader.
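A hypothetical sketch of a configurable scene block with the three options described above: a camera, an opaque/transparent pass filter, and an optional shader override. The field names (`camera`, `passes`, `overrideShader`), the `PassFilter` flags and the `DrawCall` record are assumptions; the base class, chaining and the original material/transparency sorting are left out to keep it short.

```cpp
#include <cassert>
#include <string>
#include <vector>

struct Camera { std::string name; };
struct Shader { std::string name; };

struct Mesh {
    std::string name;
    bool transparent;
    const Shader* material;  // the object's own shader
};

// Hypothetical bit flags selecting which passes a RenderScene block draws.
enum PassFilter : unsigned {
    kOpaque = 1u << 0,
    kTransparent = 1u << 1,
    kAll = kOpaque | kTransparent
};

// Stand-in for a real draw call: which camera, mesh and shader were used.
struct DrawCall { std::string camera, mesh, shader; };

class RenderScene {
public:
    const Camera* camera = nullptr;          // viewpoint to render from
    unsigned passes = kAll;                  // opaque and/or transparent
    const Shader* overrideShader = nullptr;  // if set, replaces every material

    void Render(const std::vector<Mesh>& meshes, std::vector<DrawCall>& out) const {
        assert(camera != nullptr && "RenderScene needs a camera");
        // Opaque objects first, then transparent ones (sorting omitted).
        if (passes & kOpaque) Draw(meshes, false, out);
        if (passes & kTransparent) Draw(meshes, true, out);
    }

private:
    void Draw(const std::vector<Mesh>& meshes, bool transparent,
              std::vector<DrawCall>& out) const {
        for (const auto& m : meshes) {
            if (m.transparent != transparent) continue;
            const Shader* s = overrideShader ? overrideShader : m.material;
            out.push_back({camera->name, m.name, s->name});
        }
    }
};
```

Setting `overrideShader` to a white Lambert shader makes every mesh use it regardless of its material, while `passes = kOpaque` would give, for example, a pass that skips transparent objects entirely.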

And that brings us to the end of the fixed-function cleanup. There are a couple of tricks we've skipped over for now (the render Target object being the main one), but on the whole, we've now turned our monolithic renderer into a set of rendering modules we can flexibly assemble into a render loop. And while we haven't got any real eye-candy working just yet, we've got the building blocks we need. All we need now are hardware shaders...

Cheers!

Do you literally create a linked chain of these render objects that pass control to the object pointed to by their output once they are finished, or is this more a conceptual way of thinking about it?

I can see that chaining objects together like this to perform a sequence of steps would facilitate generating this stuff from scripts or editors. It's a very appealing approach.