Now I realise this is stretching slightly outside the normal realms of gamedev, but while working on my texture tools I've turned my attention again to something that is often a problem for photographers: white balance.

While white balance is easy to correct when you have a RAW image file (before white balance has been applied), I've always found it considerably more difficult to correct when all you have to work with is a JPG or a video stream. Hence the general advice to photographers to get white balance right in the camera (using a grey card preset, etc).

Of course the world is not ideal and sometimes you end up with images that have a blue or yellow cast. I've found the colour balance correction tools built into things like Photoshop and GIMP to be pretty awful. Often when you pick a gray / white point you can very roughly correct things overall, but you get colour tinges in other areas of the photo, and it never looks as good as a correctly balanced photo.

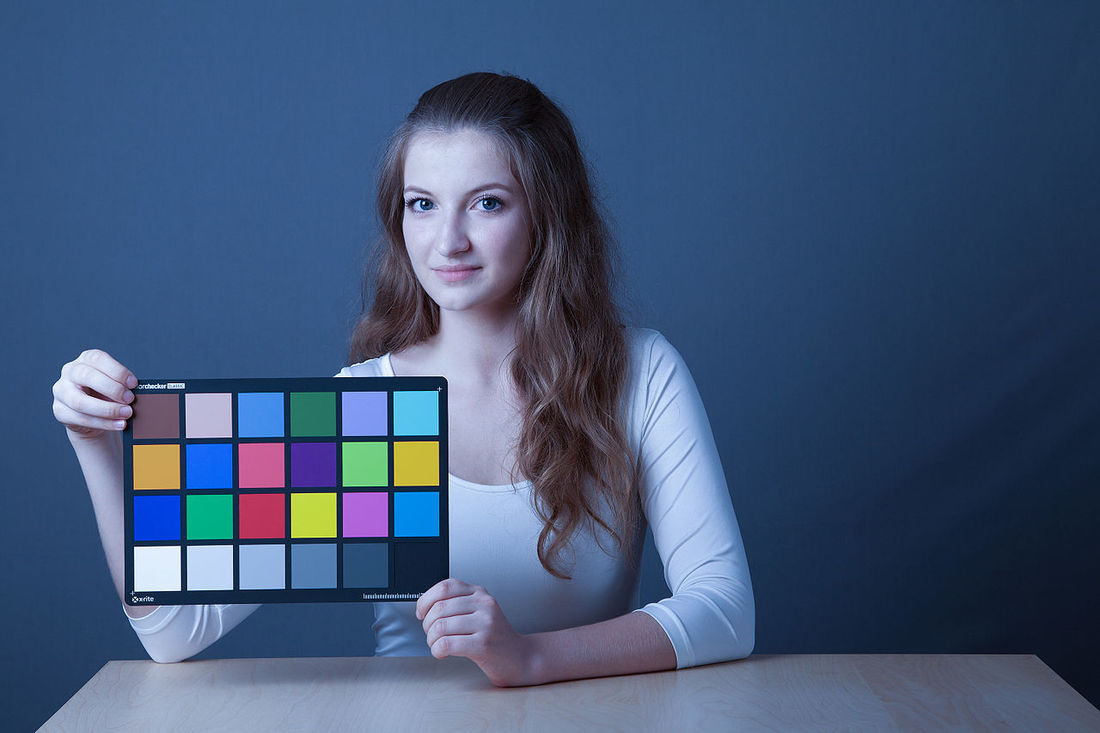

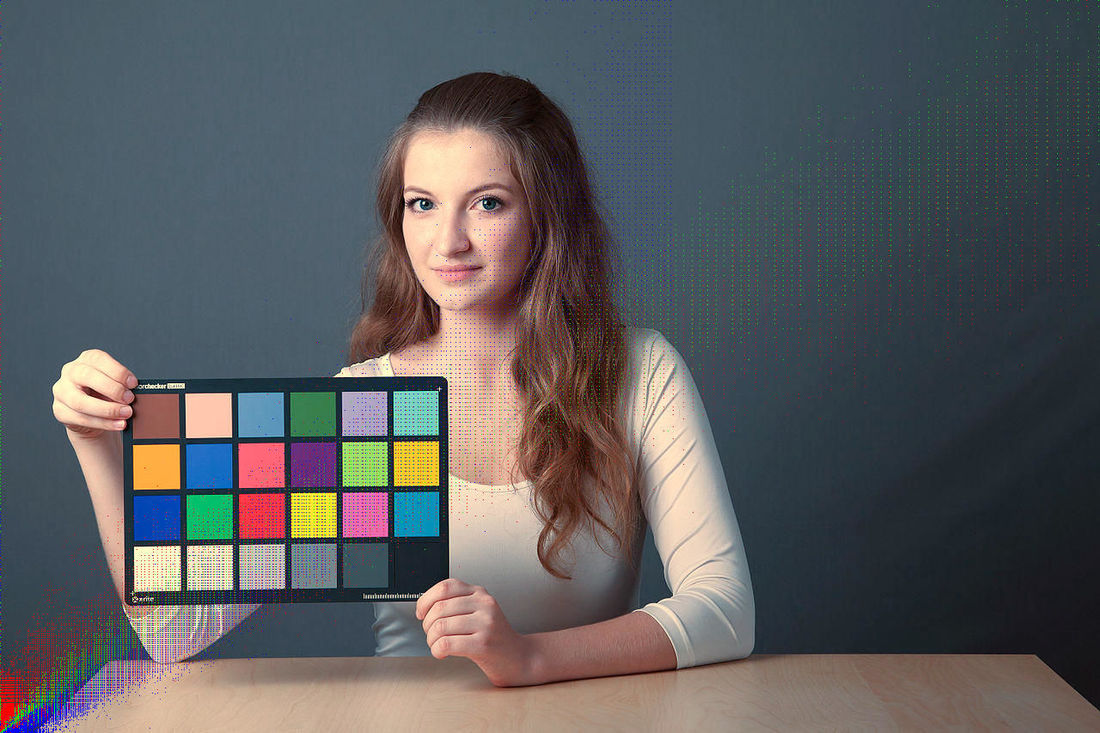

Tungsten (blue cast - needs correcting)

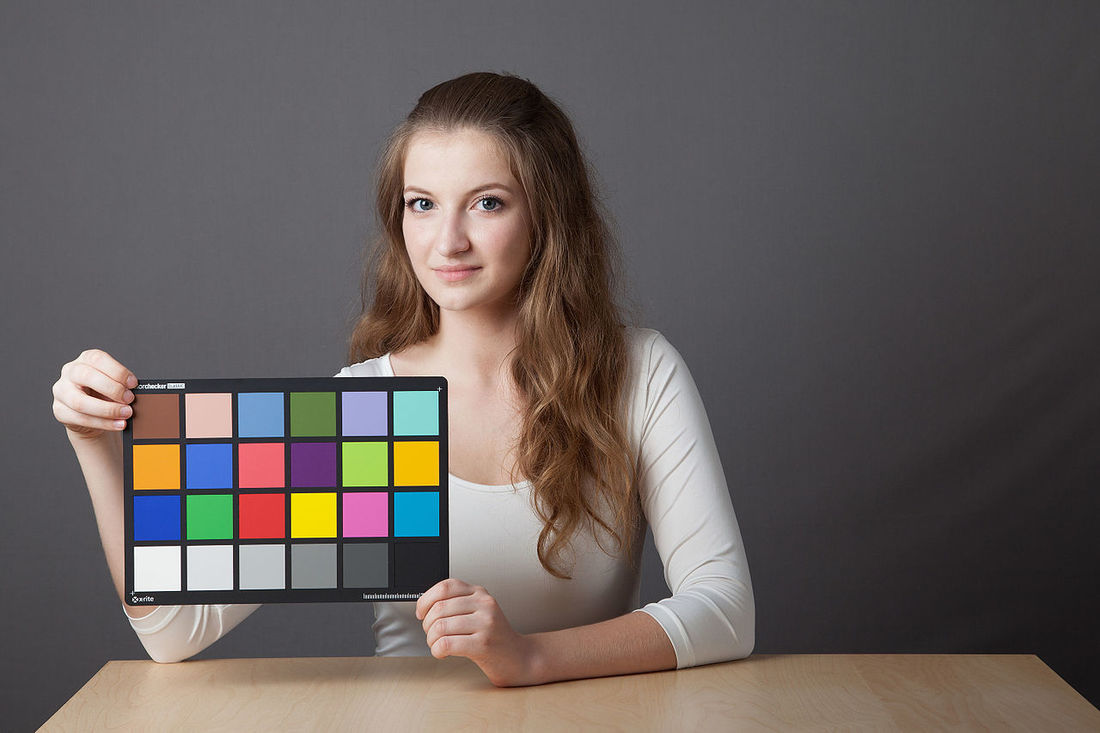

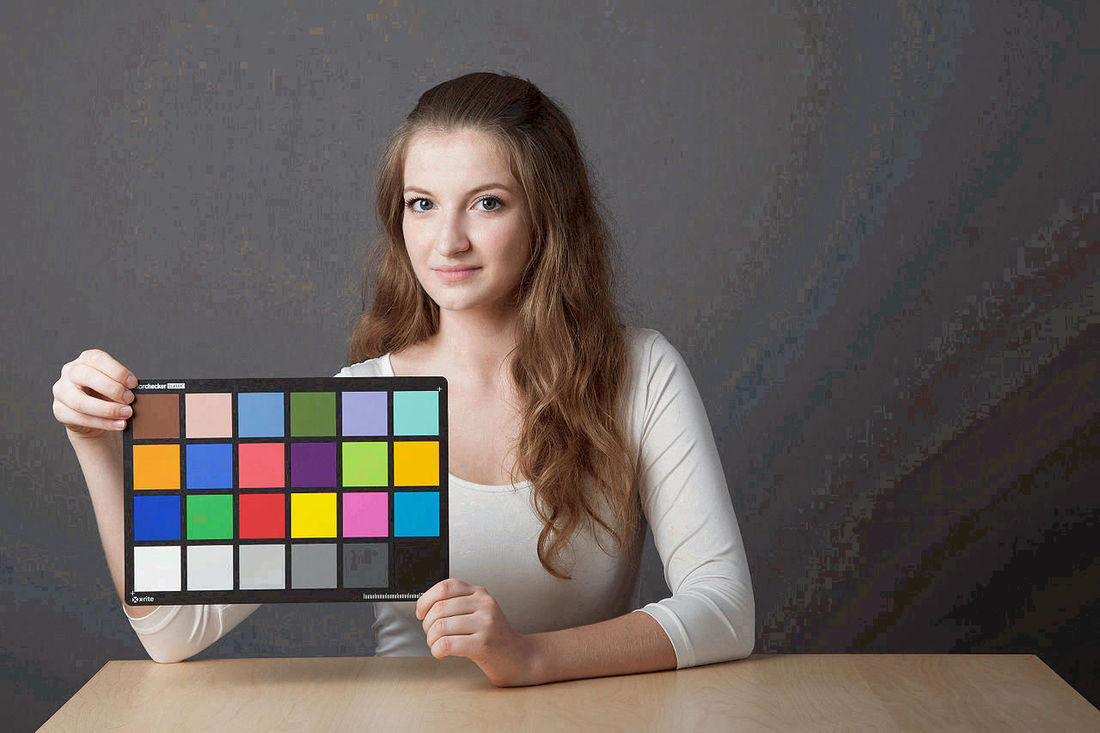

Neutral (correct, reference image)

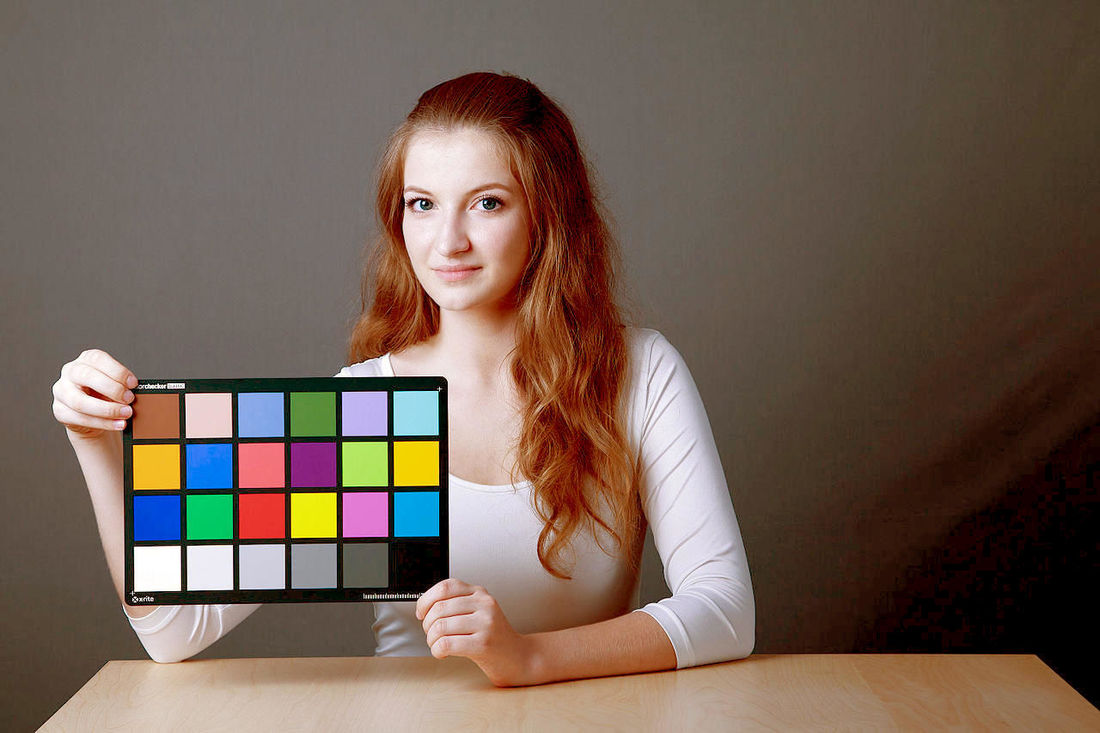

Attempt to colour correct in GIMP using white and gray points (overly saturated result)

I've worked through various naive approaches to balancing photos in the past. I started long ago with very basic multipliers on the 8-bit sRGB data. That was the first mistake, of course: this data is gamma compressed, and to do anything sensible with images you first have to convert them to linear at a higher bit depth. I now know better and use linear in nearly all my image manipulation.
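For anyone following along, here is a minimal sketch of that gamma step. It assumes the standard piecewise sRGB transfer function; the function names are made up for illustration and are not from my tools.

```python
def srgb_to_linear(c8):
    """Decode one 8-bit sRGB channel value (0..255) to linear light (0.0..1.0)."""
    c = c8 / 255.0
    if c <= 0.04045:
        return c / 12.92  # linear toe near black
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(lin):
    """Encode a linear value back to an 8-bit sRGB channel value, clamping to range."""
    lin = min(max(lin, 0.0), 1.0)
    if lin <= 0.0031308:
        c = lin * 12.92
    else:
        c = 1.055 * lin ** (1.0 / 2.4) - 0.055
    return round(c * 255.0)


# Mid-grey 128 is only about 22% of full linear intensity, which is why
# multiplying the 8-bit values directly gives the wrong answer.
print(srgb_to_linear(128))
```

Keeping the linear values as floats is what gives the higher bit depth: going back to 8 bits only happens once, at the very end.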

Colour Spaces

The next step is colour spaces. I must admit I have found this very difficult to wrap my head around. I have used various other colour spaces like LAB and HSL in the past, but just used the conversion functions without really knowing what they were doing.

This past week I have finally got my head around the CIE XYZ colour space (I think!), and have functions to convert from linear sRGB to XYZ and back.
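As a rough illustration of the linear sRGB to XYZ conversion, here is a sketch using the commonly published D65 matrices. I'm assuming those matrices and the helper names here; they are not necessarily identical to what my own functions use.

```python
# Linear sRGB (D65 white) to CIE XYZ, commonly published values.
RGB_TO_XYZ = [
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
]

# The inverse matrix, CIE XYZ back to linear sRGB.
XYZ_TO_RGB = [
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-component colour."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def linear_rgb_to_xyz(rgb):
    return mat_vec(RGB_TO_XYZ, rgb)


def xyz_to_linear_rgb(xyz):
    return mat_vec(XYZ_TO_RGB, xyz)


# Linear white lands on the D65 white point, roughly (0.9505, 1.0, 1.0888).
print(linear_rgb_to_xyz((1.0, 1.0, 1.0)))
```

Note that the input must already be linear: feeding gamma compressed values into the matrix is the same mistake as before, just in a different place.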

Next I learned that in order to do chromatic adaptation (the technical term for white balance!) it is common to transform to yet another colour space called LMS space, which is meant to represent the responses of the three types of cone cell in the eye more accurately.
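There are several XYZ to LMS matrices in circulation (Bradford, CAT02, Hunt-Pointer-Estevez and others). The sketch below assumes the Bradford matrix purely as an example; swap in whichever one you prefer. The inverse is computed rather than typed in, so the round trip stays consistent with whatever matrix is chosen. All names are illustrative.

```python
# Bradford cone response matrix (an assumed choice, one of several in use).
XYZ_TO_LMS = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-component colour."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def invert_3x3(m):
    """Invert a 3x3 matrix via the adjugate (fine for well-conditioned colour matrices)."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


LMS_TO_XYZ = invert_3x3(XYZ_TO_LMS)


def xyz_to_lms(xyz):
    return mat_vec(XYZ_TO_LMS, xyz)


def lms_to_xyz(lms):
    return mat_vec(LMS_TO_XYZ, lms)


# Cone responses for the D65 white point.
print(xyz_to_lms((0.95047, 1.0, 1.08883)))
```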

Luckily, testing all this is rather easy. All you need is a RAW photo exported as JPG with different white balance settings; you then attempt to transform the 'wrong' JPG into the 'right' one.

Usually I would do this myself but there are some rather convenient ready-made images here:

https://en.wikipedia.org/wiki/Color_balance

So I've been using these to test, trying to convert the tungsten (blue cast) to neutral.

I've had various other ideas, but first wanted to try something very simple: attempt to get the photo back into the initial colour space / gamma, alter the white balance, then convert it back again.

To do this I converted both the blue (tungsten) image and the reference (neutral) image to linear sRGB, then to XYZ, then to LMS. I found the average pixel colour in both images, then found the per-channel multiplier that would convert the average colour of the blue image to that of the neutral one. Then I applied this multiplier to each pixel of the blue image (in LMS space), and finally converted back to sRGB for viewing.
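The multiplier step itself is tiny. Here is a sketch of it, assuming the pixels have already been converted to the working space (LMS or linear RGB) and are held as plain lists of 3-tuples. The function names are hypothetical.

```python
def channel_means(pixels):
    """Average each of the three channels over a list of (a, b, c) pixels."""
    n = len(pixels)
    return tuple(sum(p[ch] for p in pixels) / n for ch in range(3))


def average_gains(source, reference):
    """Per-channel multipliers that map the source average onto the reference average.

    Assumes no channel of the source averages to exactly zero.
    """
    src = channel_means(source)
    ref = channel_means(reference)
    return tuple(r / s for r, s in zip(ref, src))


def apply_gains(pixels, gains):
    """Scale every pixel by the same three multipliers."""
    return [tuple(v * g for v, g in zip(p, gains)) for p in pixels]


# Two tiny stand-in 'images' in the working space.
blue = [(0.2, 0.4, 0.8), (0.4, 0.6, 1.0)]
neutral = [(0.4, 0.4, 0.4), (0.8, 0.6, 0.5)]
gains = average_gains(blue, neutral)
corrected = apply_gains(blue, gains)
```

Because the same three numbers are applied to every pixel, this can only ever fix a cast that is a uniform scaling in the working space. Anything the camera or RAW converter did on top of that is left uncorrected.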

Results

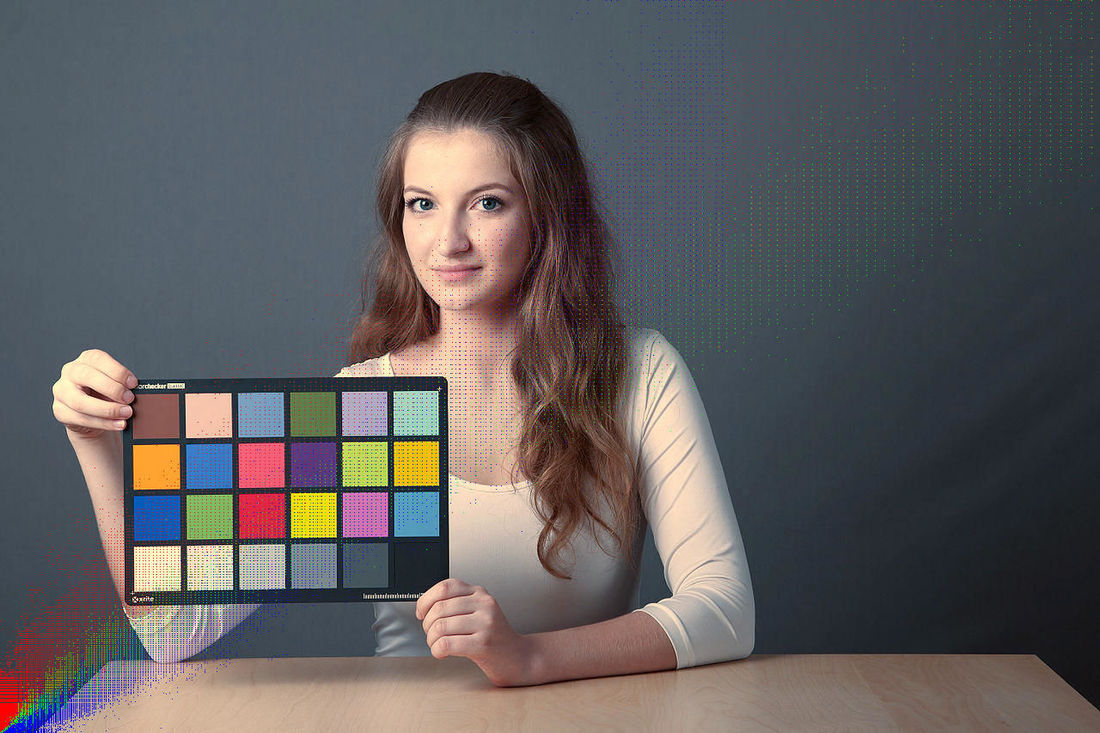

LMS colour space

Linear RGB colour space

Doing this process in LMS space gave a slightly better result than doing it in linear RGB space. The background turned a better gray, rather than still having a blue tinge as it did in RGB space. The result looks pleasing to the eye but you can still tell something is 'off', and the white point on the colour checker chart in the photo is visibly off.

As well as the photo, I also superimpose a plot of the RGB values with converted value against expected value, to show how accurate the process is. A perfect result would give a straight line plot. Clearly there is quite a bit of colour variation not corrected for in the process.

My current thinking is that there are 2 main things going on here:

- To do the conversion, the colour space / gamma should exactly match that used at the same stage in the RAW conversion. Maybe it doesn't. Is the RAW conversion done in linear camera space rather than any standard colour space? I don't know. I've attempted to dig into some open source RAW converters to get this information (DCRaw and RawTherapee) but have not had much luck.

- To get things to the state when the white balance was applied in the RAW converter, you not only have to reverse colour spaces, you have to reverse any other modifications that were made to the image (picture styles, possibly sharpening etc). This is very unlikely to be possible, so this technique may not be able to produce a perfect result.

Aside from the simple idea of applying multipliers, my other idea is essentially a 3D lookup table of colour conversions. If (and that is a big if) there is a 1:1 mapping of input colours from the blue image to reference colours in the neutral image, it should be possible to simply look up the conversion needed. You could do this by going through the blue image until you find a matching pixel, then finding the corresponding pixel in the neutral image and using that. In theory this should produce a perfect result in the test image by definition (if the 1:1 mapping holds).
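A sketch of that exact-match lookup, assuming both images are the same size and given as flat lists of 8-bit (r, g, b) tuples in the same pixel order. Where the 1:1 assumption fails and one blue colour maps to several neutral colours, this version averages them, which is my own fallback rather than part of the idea above. Names are hypothetical.

```python
def build_exact_lut(source_pixels, reference_pixels):
    """Map each colour seen in the source image to the colour at the same
    position in the reference image (averaged if it appears more than once)."""
    sums = {}
    for src, ref in zip(source_pixels, reference_pixels):
        total = sums.setdefault(src, [0, 0, 0, 0])  # r, g, b sums and a count
        for ch in range(3):
            total[ch] += ref[ch]
        total[3] += 1
    return {
        src: tuple(round(t[ch] / t[3]) for ch in range(3))
        for src, t in sums.items()
    }


def apply_exact_lut(pixels, lut):
    """Replace every pixel found in the table; return None for unseen colours."""
    return [lut.get(p) for p in pixels]


blue = [(10, 20, 200), (10, 20, 200), (90, 90, 150)]
neutral = [(100, 100, 100), (102, 100, 100), (120, 110, 90)]
lut = build_exact_lut(blue, neutral)
```

On the test image itself every pixel is found by construction. The `None` entries only show up when the table is applied to a different photo.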

I should say at this point the intended use is that if you can find a mapping for a specific camera to get from one white balance setting to another, you can then apply this to correct images that were taken BEFORE the mapping was found. So if you are attempting to convert a different photo it is likely that there will be pixels that do not match the reference images. So some kind of interpolation and perhaps lookup table would be needed, unless you were okay with a dithered result.
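One standard way to handle the in-between colours is a regular 3D grid with trilinear interpolation between the eight surrounding nodes. The sketch below shows only the sampling side, with colours as floats in 0..1. Filling the grid nodes from sparse image samples is the hard part and is not covered here. The function names and the nested-list layout are assumptions for illustration.

```python
def make_lut(size, fn):
    """Build a size^3 grid where node [r][g][b] stores fn applied to that grid colour."""
    step = 1.0 / (size - 1)
    return [
        [[fn((r * step, g * step, b * step)) for b in range(size)]
         for g in range(size)]
        for r in range(size)
    ]


def sample_lut(lut, rgb):
    """Trilinearly interpolate the LUT at an arbitrary colour (channels in 0..1)."""
    size = len(lut)  # must be at least 2
    idx, frac = [], []
    for v in rgb:
        pos = min(max(v, 0.0), 1.0) * (size - 1)
        i = min(int(pos), size - 2)  # lower corner of the enclosing cell
        idx.append(i)
        frac.append(pos - i)
    out = [0.0, 0.0, 0.0]
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                # Weight of this corner is the product of the per-axis weights.
                w = ((frac[0] if dr else 1.0 - frac[0])
                     * (frac[1] if dg else 1.0 - frac[1])
                     * (frac[2] if db else 1.0 - frac[2]))
                node = lut[idx[0] + dr][idx[1] + dg][idx[2] + db]
                for ch in range(3):
                    out[ch] += w * node[ch]
    return tuple(out)


# A LUT that simply bakes in three channel multipliers.
gain_lut = make_lut(9, lambda p: (p[0] * 2.0, p[1], p[2] * 0.5))
print(sample_lut(gain_lut, (0.3, 0.3, 0.3)))
```

Trilinear interpolation reproduces any correction that is linear per channel exactly, so a LUT built from plain multipliers gives the same answer as the multipliers. The interesting case is when the nodes hold a measured, non-linear correction.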

At this point I'm going to try the lookup table approach. I suspect it may give better results, but not perfect, because I do fear that picture styles / sharpening may have made a perfect correction impossible, much like the entropy reversing idea of putting a shattered teacup back together, from Stephen Hawking and our old friend Hannibal Lecter.

Ideas?

Anyway this blog post was originally meant to be a question for the forum, but grew too large. So my question is whether any of you guys have experience in this, and any advice or ideas to offer? Throughout my research into this whole colour thing I have felt like a real newbie. It is quite a big field with a number of people working on it, and I'm sure there must be some established ideas for this kind of thing.

I'm getting more and more the impression that 3D lookup tables (LUTs) are now the go-to solution for this problem, and may have replaced the eye dropper 'white point' approach. I'll try it out!

https://lutify.me/free-white-balance-correction-luts-for-everyone/