Hello! It's your boy clocking in again on this beautiful Saturday. It's time for yet another Weekly Update blog post.

First off, I want to inform you all that this will be the last Weekly Update of 2020. I'm taking a two-week break and will be back next January. I may be working a bit, but there won't b…

Hello there! It's Saturday again! Time for your favourite Weekly Update blog! This week I was lightheaded. A lot has been happening lately, and I simply didn't have much focus.

So this is going to be a short and sweet post.

Minor Updates

- Added better details to the 9th jungle c…

Hello hello! It's your boy again! It's time for this week's Weekly Update! I have to say that this week there isn't a whole lot of new content, so this update won't be very long. The focus was on optimizing and bug fixing. I was, however, able to add another new type of firearm.

So let's get started…

Hey hey! It's time for another Weekly Update! This time there's some new stuff, so let's get started right away!

New Weapons

First off, there are two new weapons for players to play with: the shotgun and the uzi.

Shotgun

This one is my personal favourite. Shotguns are mid-ranged firearm…

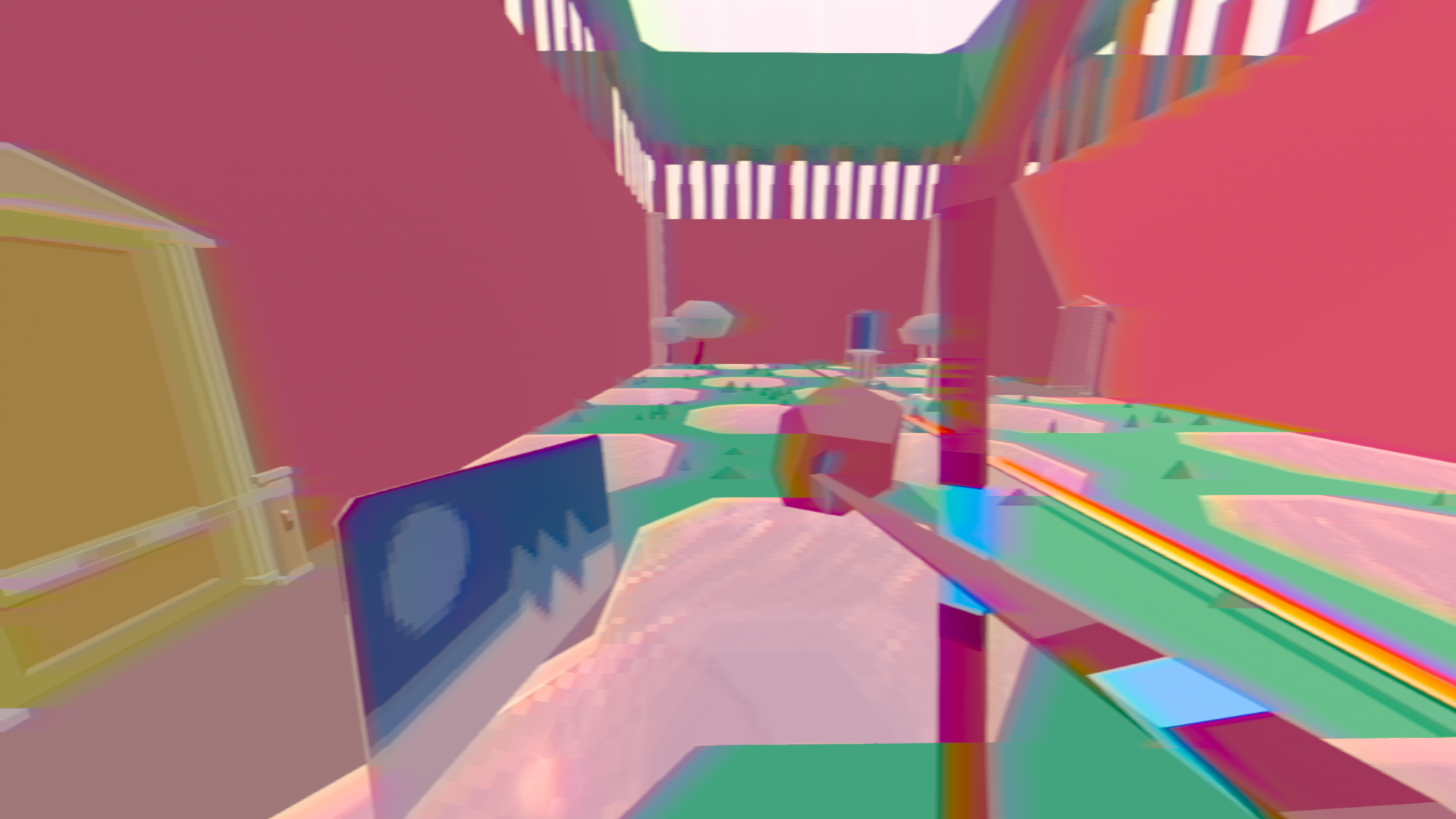

Hello there! It's your boy! Time for another Weekly Update! This week there are a couple of surprises along with a couple of small updates. It's a very visual one this week, so there are a lot of pictures for the eyes to see! Let's get started, shall we?

Small Mall Updates

First, malls got another small …

Hello there! It's yet again your favourite Weekly Update blog post coming to you live on this lovely Saturday! This week there are quite a few graphical and lighting upgrades along with a couple of new gameplay features. So let's cut to the chase and get right to it!

Gun Updates

First up, there's a new me…

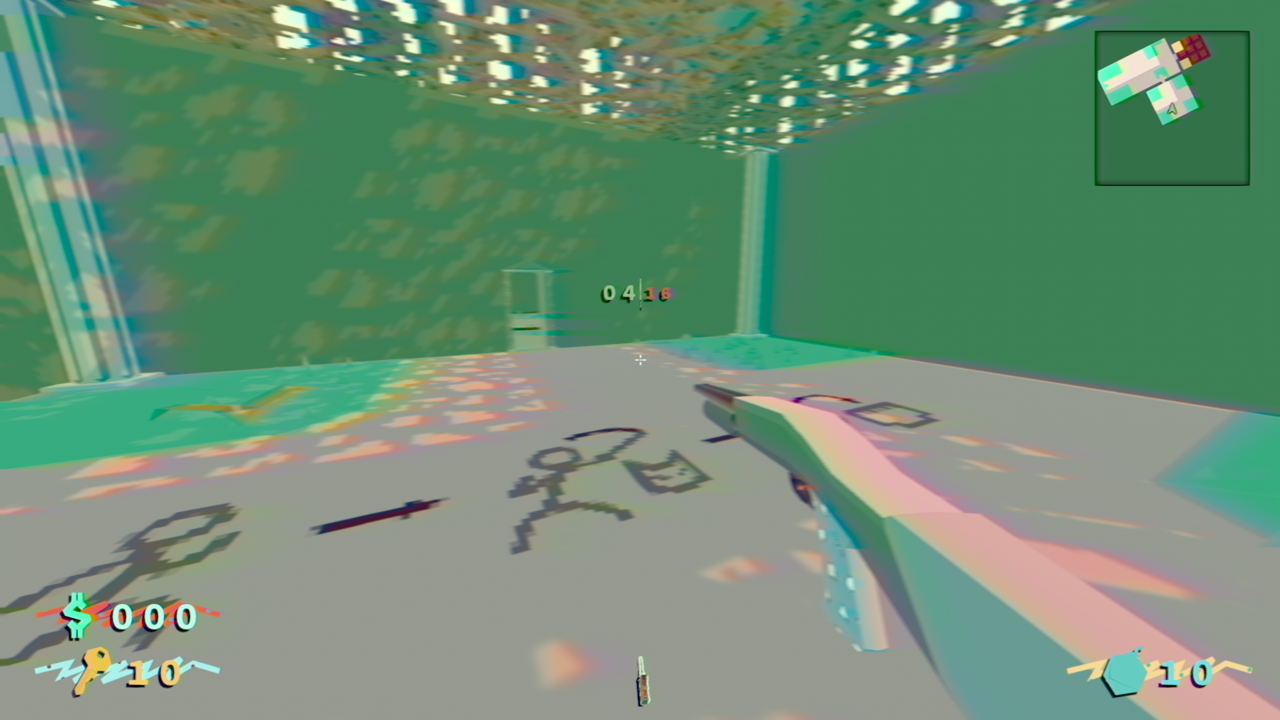

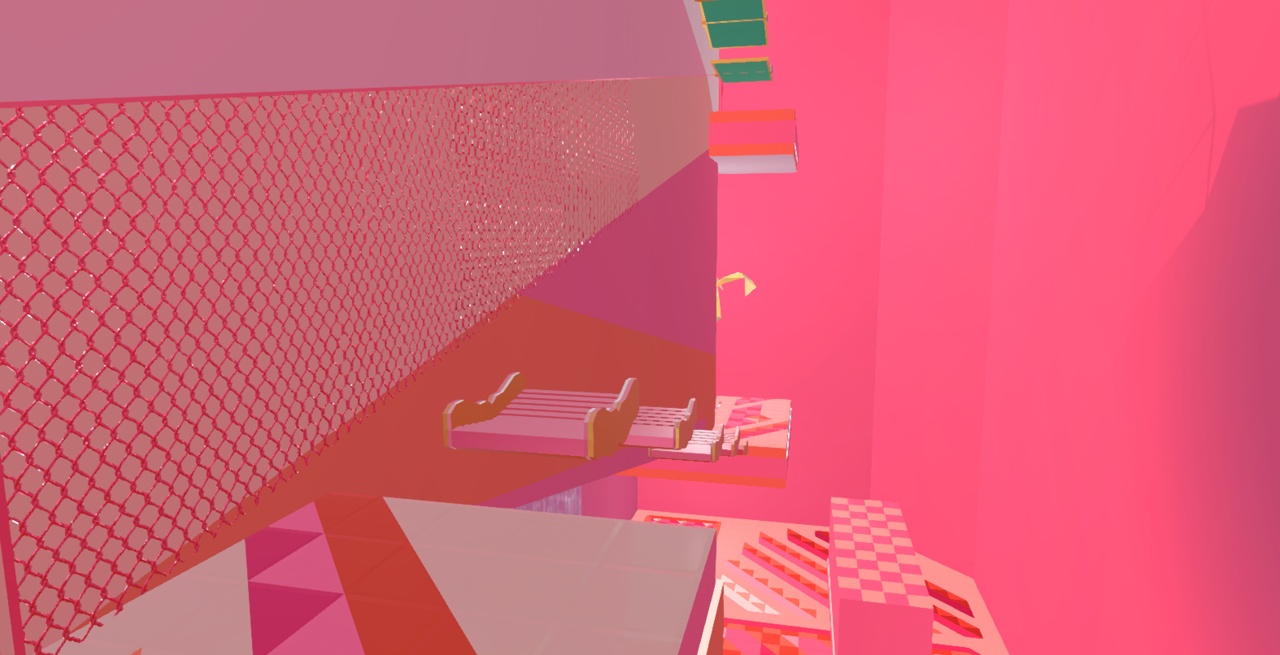

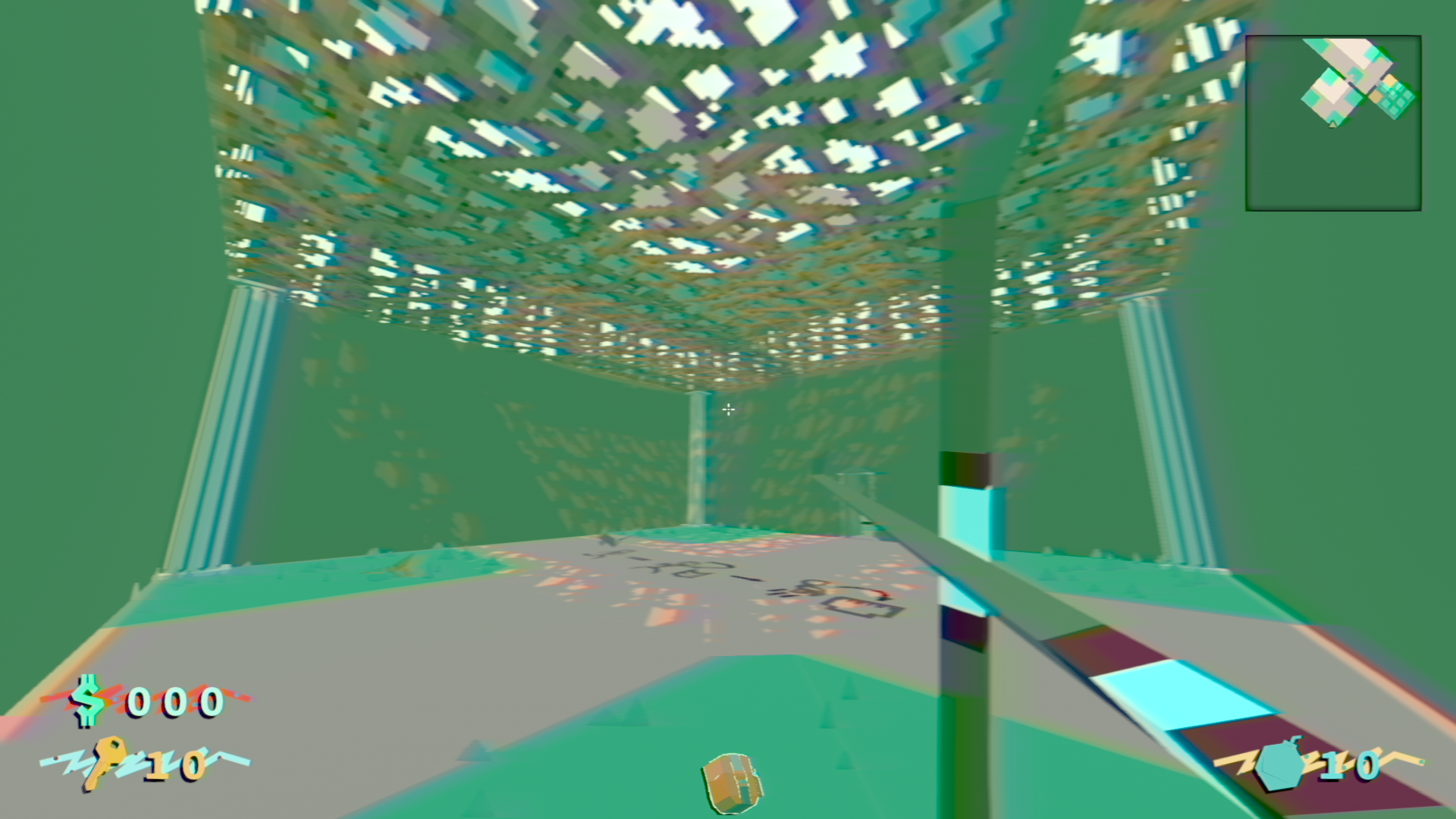

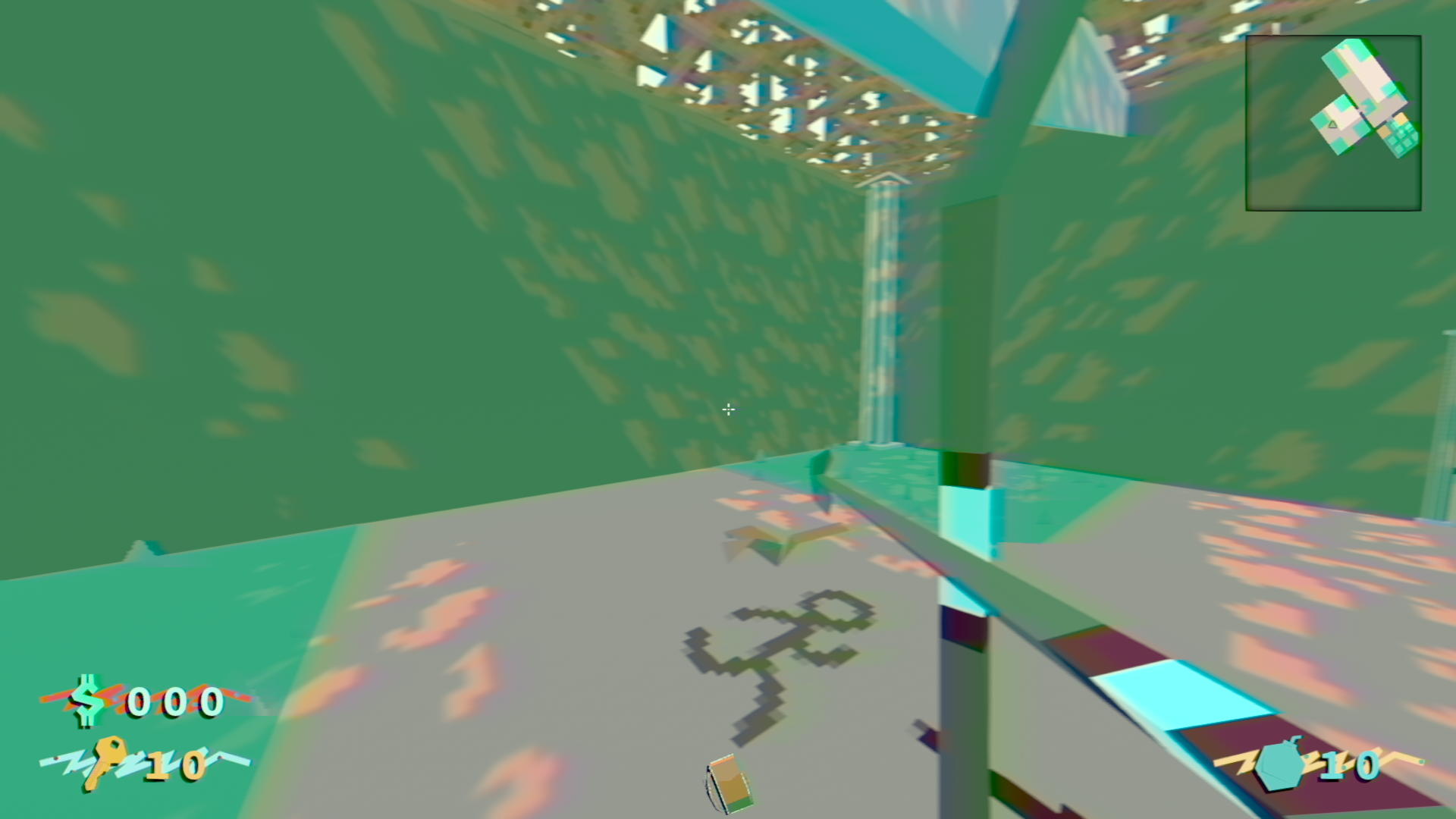

Hey hey! Guess what? It's your favourite Weekly Update post! This week there are a lot of substantial graphical updates, so let's get right to it!

And the lights were

So my main focus for this week was to check, update and polish most of my generic challenges.

The most striking differences are by …

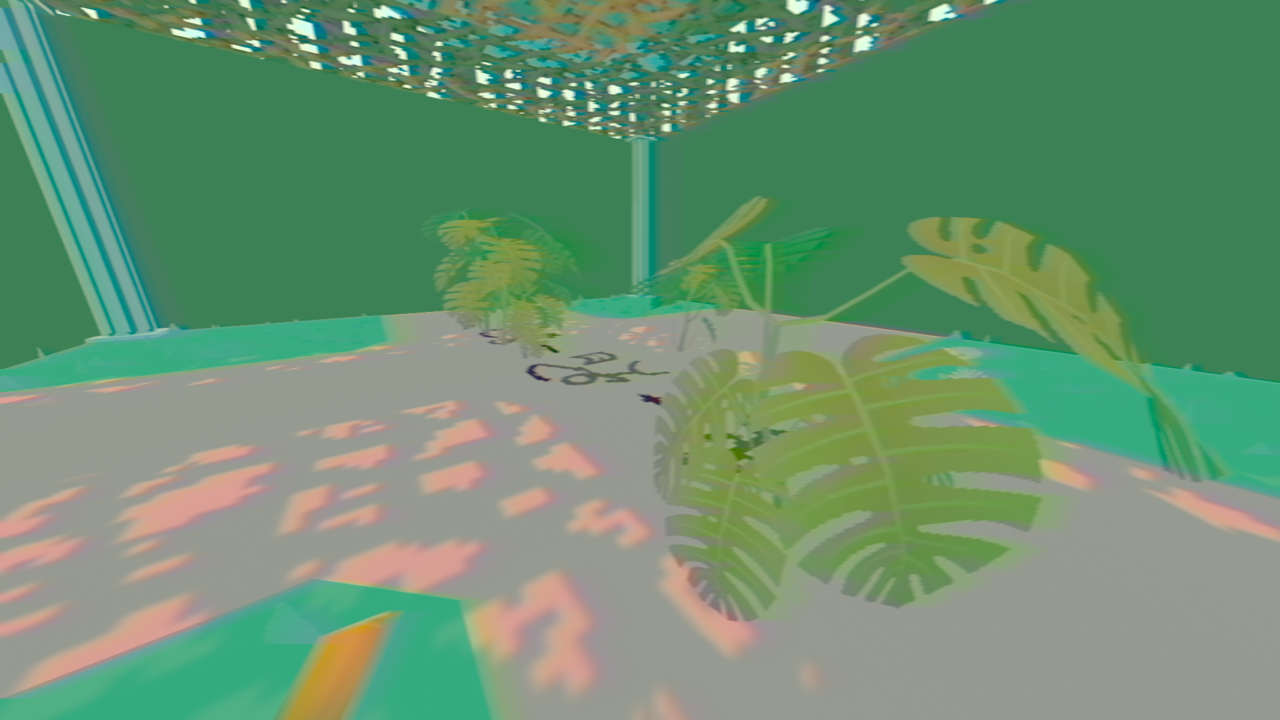

Hello there! Welcome to this week's Weekly Update post! This week was nicely packed! I was able to work on balancing and resolving bugs, among other things. In terms of new features, there isn't a whole lot, to be honest. But anyway, let's get this party started!

A New Plant

This week, there's only o…

Hey! Hello there! Welcome to your favourite Weekly Update post! This week was quite a creative one. Most of it is about text, props and feedback. So without further ado, let's get right to it!

Feedback received!

First up, let me say this real quick. My patron's feedback was all read and added t…

Hello! It's been a while, hasn't it? But that's behind us now. I'm back and that's what matters! First up, I want to clear up what happened to me over the last couple of weeks...

What happened

So first let me say that I'm fine, and the game is still going strong. Right now I'm more determined t…

Hi.

It's been a while, huh? I hope you guys are well!

First off, I want to apologize. It's been nearly a month since my last Weekly Update, and this is unacceptable. Life hasn't been kind to me lately and things are a bit rough at the moment.

The main reason for this is that my Linux rig still need…

Hey there! This week I'm only going to make a tiny update. Basically, most of my week went into building my new Linux testing rig. I didn't really touch the code much, instead giving all my time to setting up and building the new PC. In fact, I'm still setting it up as we speak.

But fear not! On…

Hello there! It's your favourite Weekly Update blog post again! This week was kinda rough. A lot was going on at home, which made it difficult to do any work at all. There's not a whole lot of new stuff this week either: no bug fixes or massive new mechanics. However, I've still managed to pull someth…

Hello, y'all! It's time yet again for your favourite Weekly Update blog post! This week was slightly busier than expected. I was finally able to lay down the foundation of the last jungle challenge. However, it's not quite done yet. I still have something to show off, so let's get this started.

AI up…

Hello there! It's time for your favourite Weekly Update post yet again! This week's been kinda slow, actually. There have been a lot of changes in my life lately, so there's not a whole lot of new stuff. So let's get right to it, shall we?

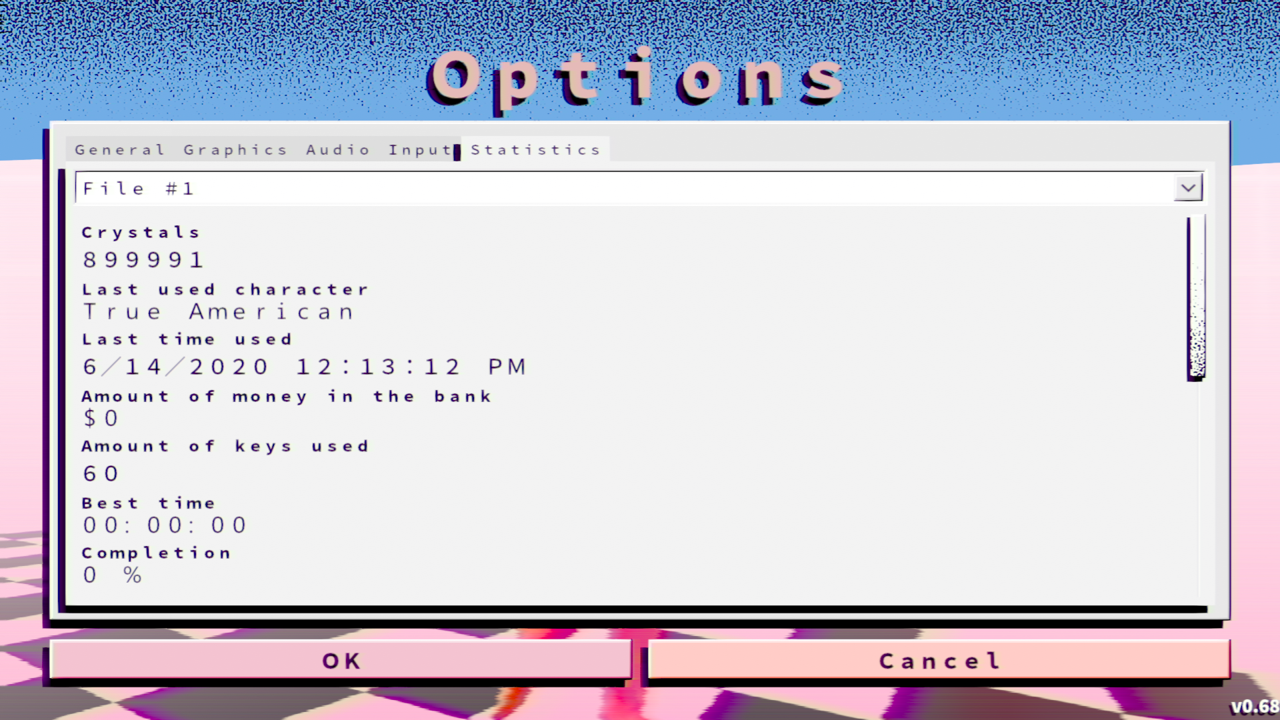

Balancing

This week's theme was mainly balancing. The fee…

Hi! It's Saturday again! Time for your favourite Weekly Update blog! This week there isn't a lot of new stuff. Instead, there was a lot of document writing, brainstorming and researching. I received my first feedback last week and have been writing all of it down. I'm sure that with a bit of simmering …

Ho! Hello there! Welcome to your favourite Weekly Update blog! The monthly build is on its way. After a few unexpected bugs and a lot of rigorous testing, the game is finally stable enough for an exclusive release. It should launch today, if not tomorrow. There are still some documents to write and …

Hello there! This is your Weekly Update post coming in a little late this week due to unexpected events. Last week was quite similar to the week before. The main goal here was to clean up the code and test the game out to get ready for the first monthly build.

I have to say that we are 99% don…

Hey hey hey! It's me again! Today's update is quite small indeed. While it was quite a busy week, there are no new mechanics at all. I'm trying to tidy up the game as much as possible. This means general optimizations, refactoring, bug fixing and other trivial programming tasks.

Becau…

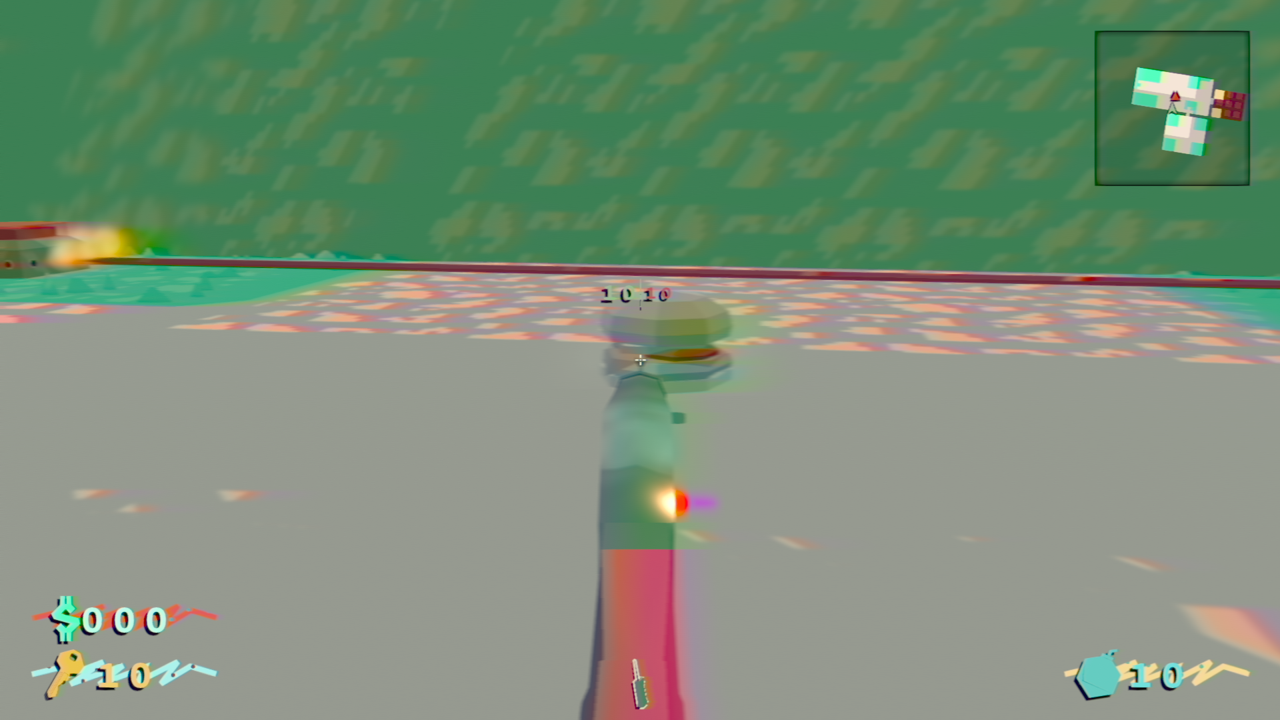

Hey hey! It's time for another Weekly Update post! Last week was a bit slow, to be honest. Nevertheless, I've managed to do a couple of things... So let's get this thing started, shall we?

Lights Update

First off, I'm proud to announce that, after changing the colour settings, most lights are now fi…

Hello there! This is me coming to you live for your favourite Weekly Update blog!

This week was kinda slow, but nevertheless I've managed to finish up what I started last week to an extent, so kick back and enjoy!

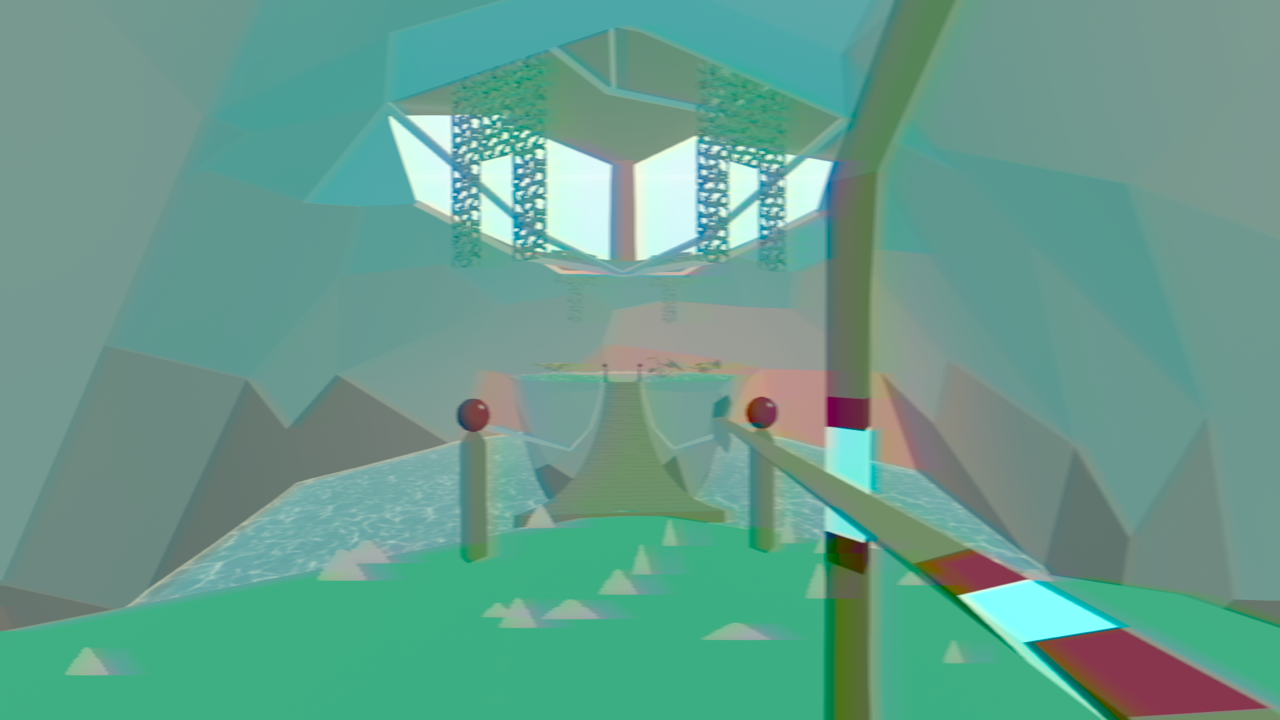

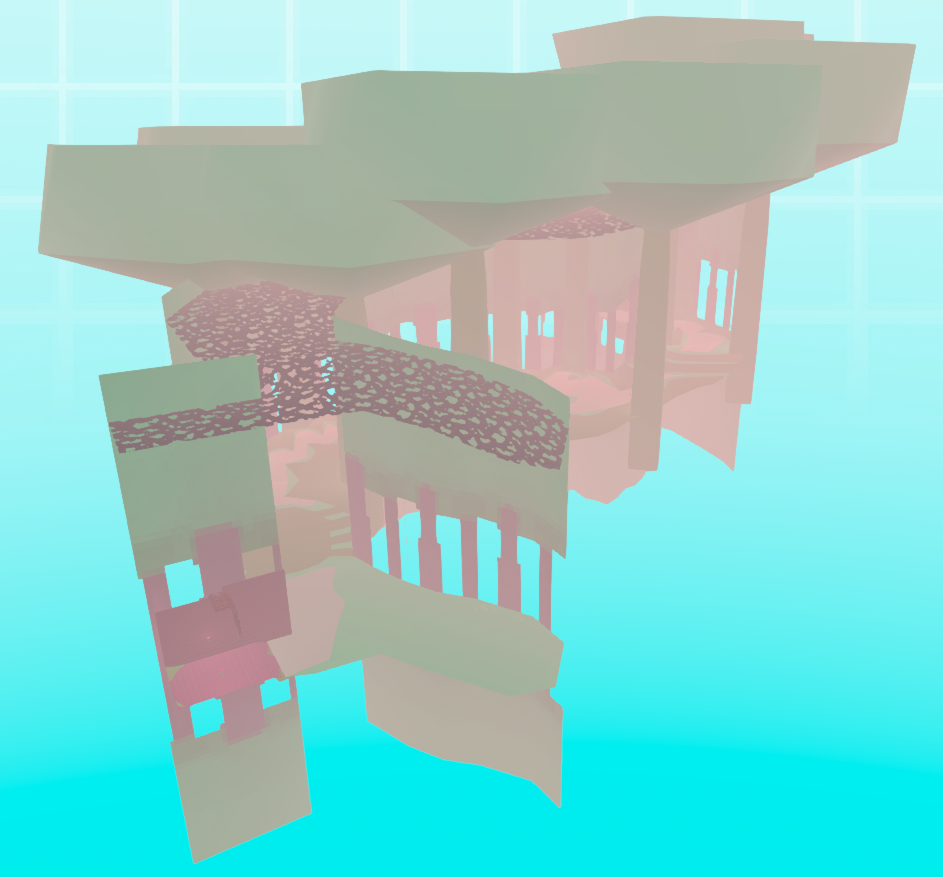

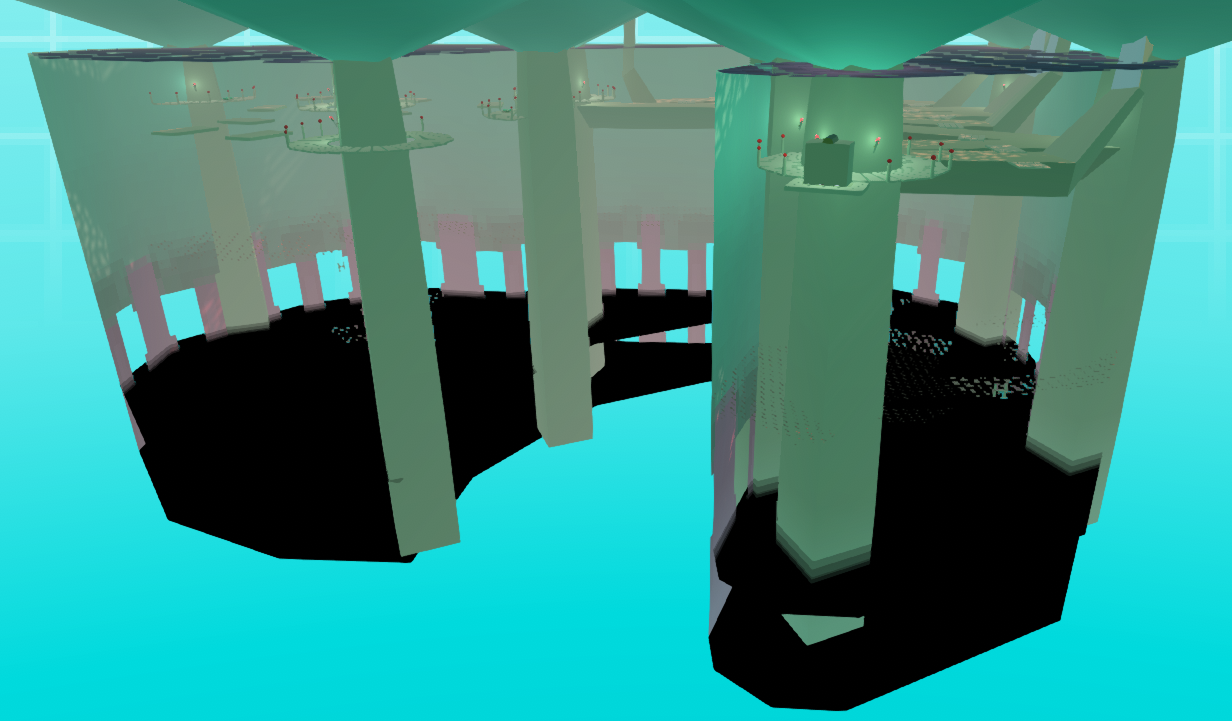

New Jungle Challenge

This new jungle challenge has been cooking for a while and I'm …

Hello there! I hope you're having a nice Saturday!

I'm gonna put it simply: this week it was kinda tough to work on stuff. There were a lot of internal Unity bugs, and most of the week was spent waiting on recompiling most scripts and shaders to fix them.

Nevertheless, I was still able to add three new pieces …

Hey.

It's been a while, huh?

I have quite a bit to talk about today, and not just about the game.

So let me first set the record straight about what's been happening.

What happened? Why did you go quiet for about three months?

So, in case you forgot, last time I talked about my sick cat. Unfortun…

Hey guys. First of all, happy new year! May this year be a merry and successful one. Be thankful for another year and make a resolution to not make any resolutions!

So aside from that, I have some not-so-good news... Recently, one of our pet cats has been losing a lot of weight, and knowing her 12 years …

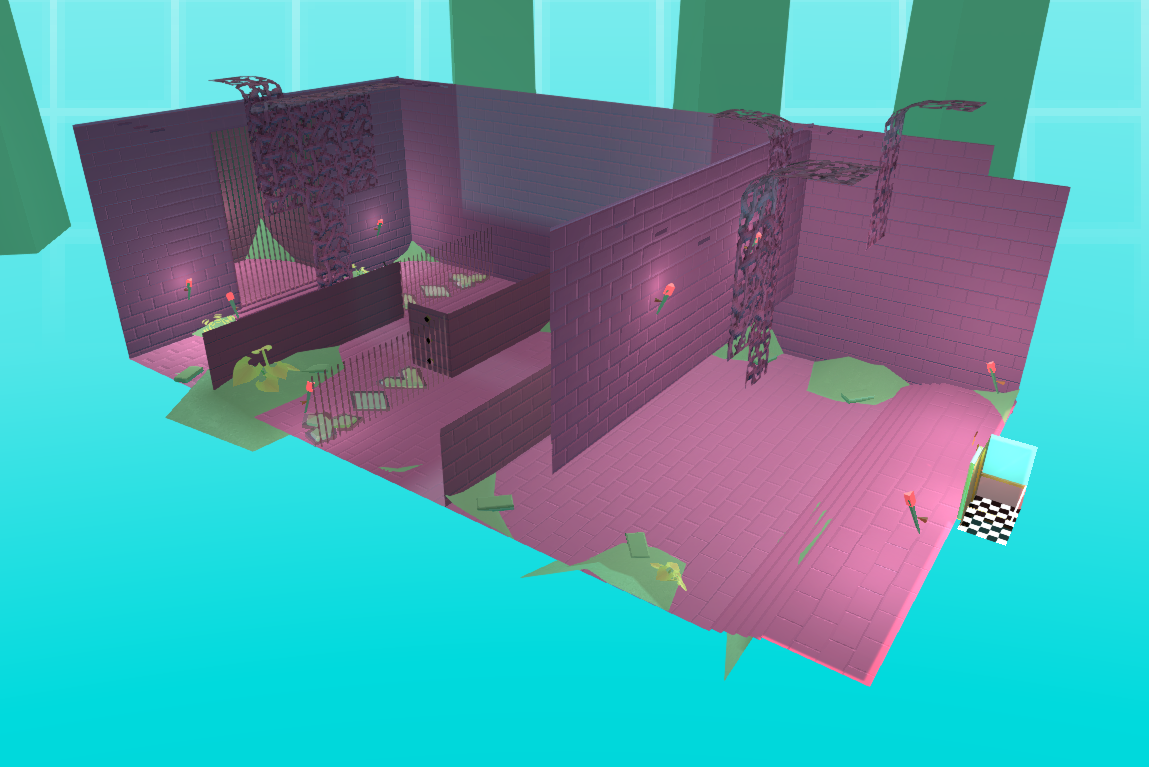

Well well, would you look at the time! It's time for another entry in your favourite Weekly Update blog! This week was quite busy, I have to say. There were a lot of things to develop, refactor and design. However, there's only one new challenge this time. Nevertheless, let's get started!

Challenge #…

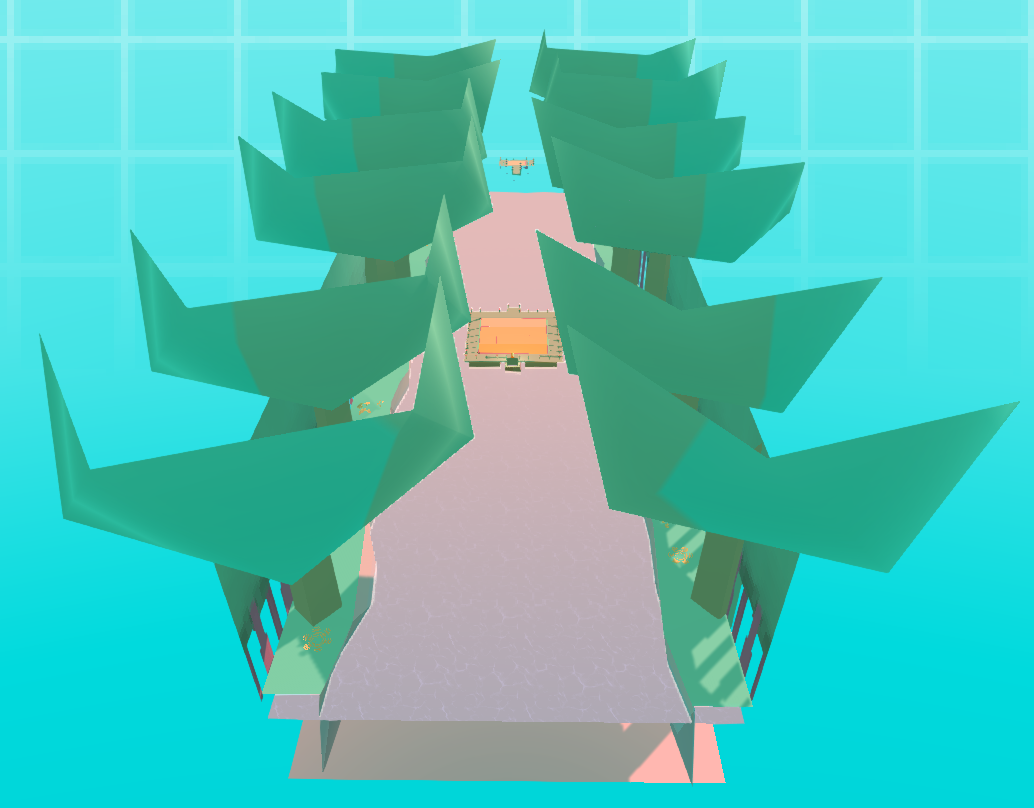

Hey hello there, it's you! Another Saturday is upon us, so here's this week's Weekly Update. This week was far more about marketing than anything else. Nevertheless, I was still able to come up with one new jungle challenge. So let's get started right away!

New Challenge

Let's start with the n…

Hi there! I don't usually post things mid-week, but this is worth blogging about!

First up, after a bit of brainstorming, I finally gave my game a title:

While it's a working title, it's still a big upgrade from the vague name it once had.

Second, coming with the brand new title is the new offici…


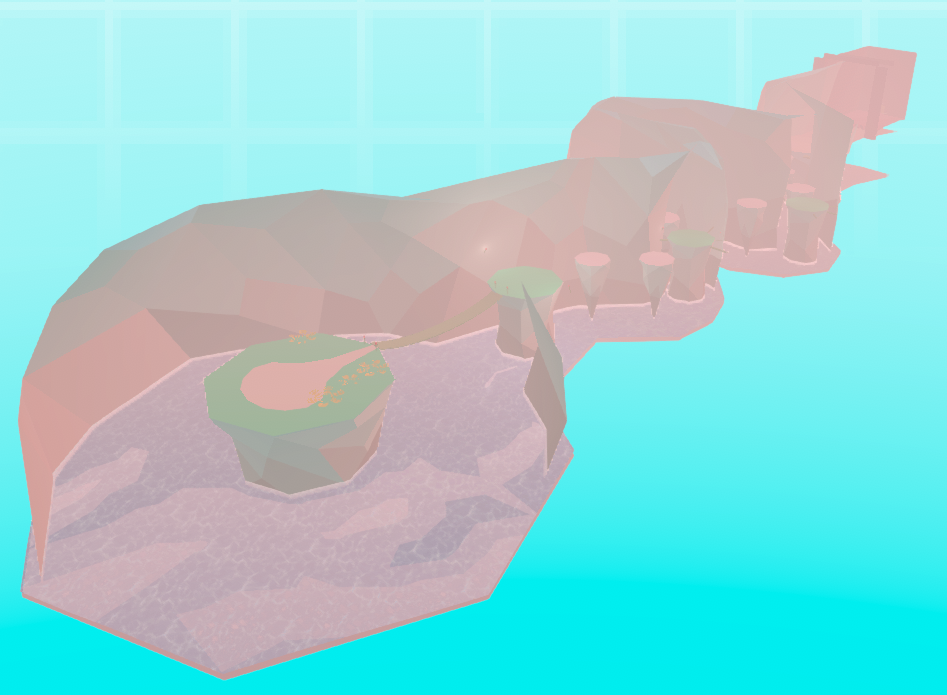

Hey, nice to see you again! Guess what? It's Saturday! It's time for this week's Weekly Update post! Last week was quite productive. There are two new jungle challenges. This means no minor updates yet again... But anyway, let's get it started!

New Challenges

So, last week I added two new platforming…