Developer Blogs

This game has been in the works for the past couple of years now, although I only joined the team back in August as part of my college capstone project. I was predominantly a gameplay programmer for the team, which was a great experience. I worked a lot on crystals in the game, which are you…

Continuing from my Tokkitron announcement last month, I made a new game jam project called Cult of Taal-zuz! I swear it’s just a coincidence that I’ve done two game jams in quick succession; I’m not planning to keep doing game jams forever. However, for a while I’v…

Hi everybody!

I post very rarely to this blog, but I am still alive! I just released a video on the topic of egocentric projection. It's not a topic closely related to game development; however, I think it is a really neat and rarely discussed technique in computer graphics. Egocentric projection…

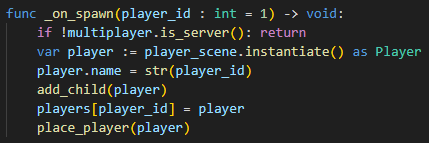

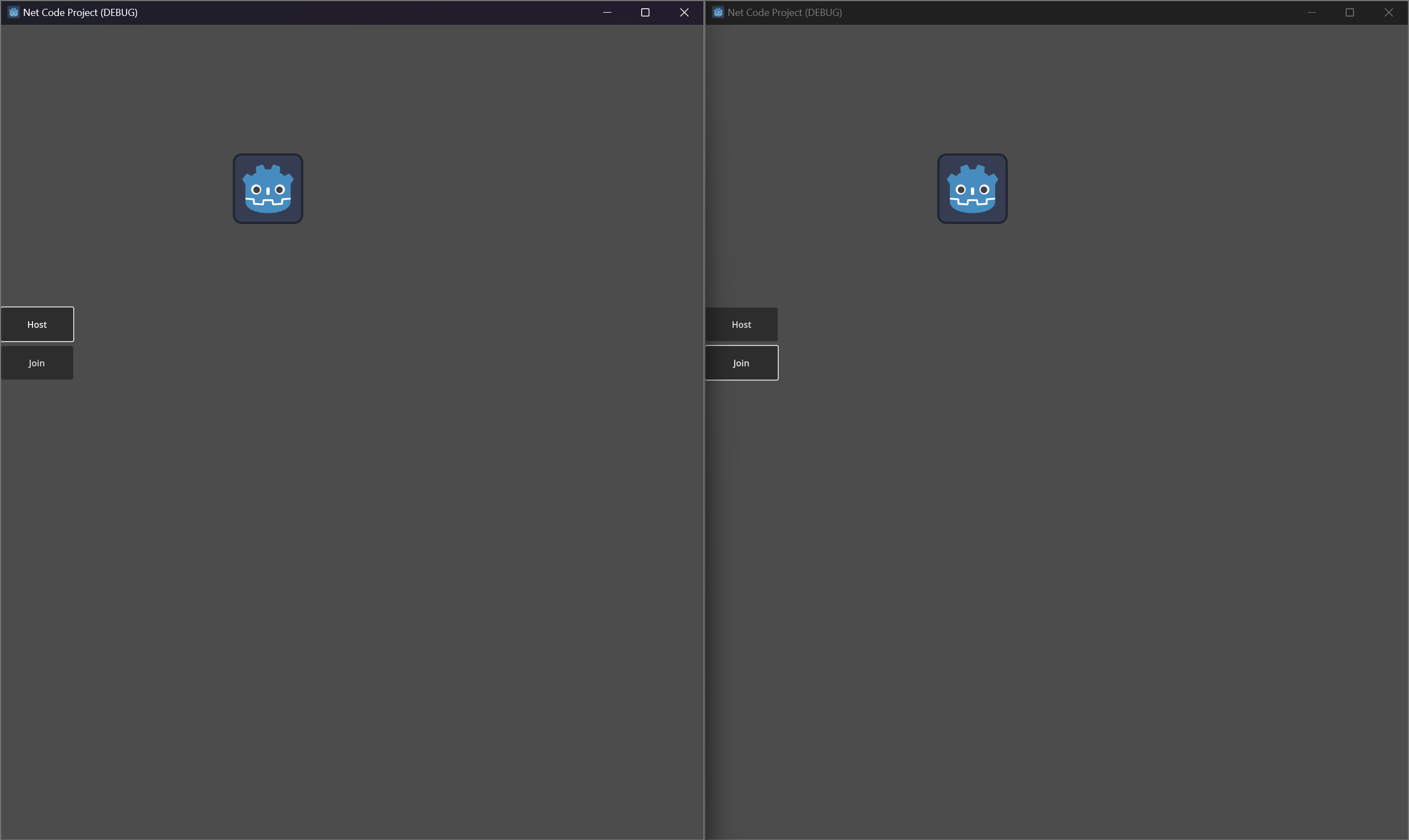

This week I continued my experiments with net code in Godot. This picks up from the last post, so read here for context on what follows.

When we left off, we had a simple net code project where players could host lobbies for others to join by entering an IP address. My firs…

“c1ic.mx” is a game project and the only site that uses a single button for everything.

You can watch movies, read books and play video games just by clicking on the screen.

History

I have been a game developer since 2000, and this project started around 2005 as a personal challenge to make one-button games …

Hey gamers and readers, I have a game called Espada de Sheris. Open sketbr.gamejolt.io/eds or https://kittycreampuff.itch.io/eds to play this amazing indie game.

Changes to the game

- New Level Design

The new level design has upgraded, beautiful graphics.

- Kibo Particles on the Ground

When this cat “is…

https://store.steampowered.com/app/2861610/The_Krilling_Scare_Feast/

The Krilling is a sandbox game based around being a ghost shrimp and getting into trouble. In it, you possess various objects around a bayou-themed hotel and evoke emotions in the guests to create a spooky recipe to return to yo…

This is part two of this project! For part 1, see here

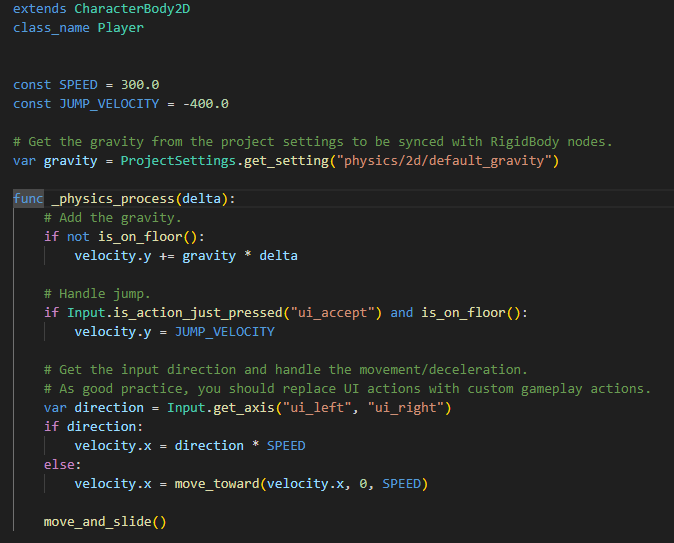

This week I will be taking my previous dive into Godot and multiplayer and bringing it up to a higher level! I think what I would like to do is to make some sort of platform racer. However, I would also like to see if I could set up the net code…

❗ Buy the game now for up to 20% off 💚

Buy it today: https://bit.ly/3ulWJmU

Get ready to fire a f*rt straight from the depths of your bowels! 😂

💥 In A Story About Farting

🟢 F*rt and burp like a madman

🟢 Collect achievements to show off to your friends

🟢 Meet new characters

🟢 Click, laugh and …

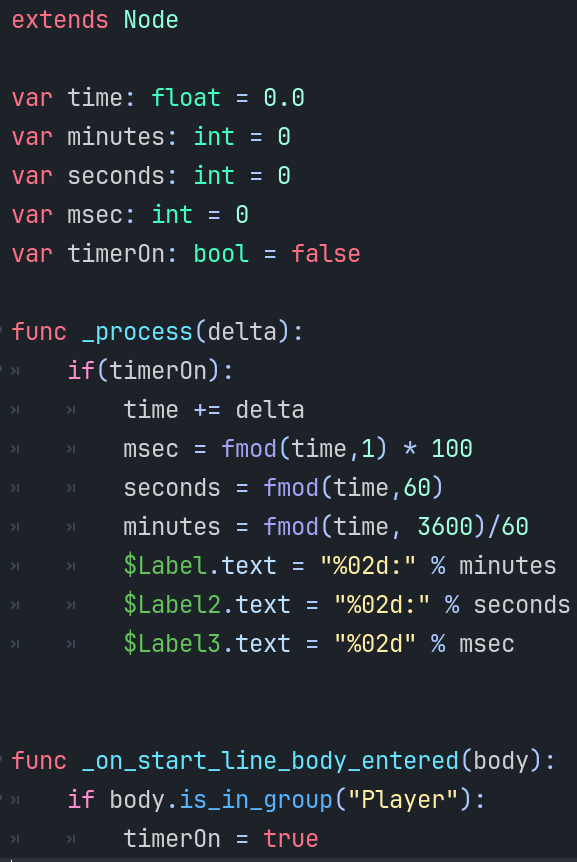

This week I am beginning my role analysis, and next week I plan to complete it! For this project, I am going to be using Godot for the first time, as I heard that it allows for a fairly simple implementation of net code. I will note that more time than expected was spent this week downloading Godot as th…

With The Krilling set for release, this week I had more time to work on some side projects. I started a small, short project that is mostly just an experiment in how multiplayer networking works in Godot.

At the start, I had one scene and just placed host and join buttons for the player …

The world can be linear or open. The environment can be hand-crafted or procedural. But more important are the requirements of the story structure. Is the story scripted or systemic? Most games use a mix of all of the above. But the needs of the story will usually result in limits on the available v…

This article was originally published on GameDevDigest.com

Here is another week of tips, including some on keeping your scope achievable. Enjoy!

Nathan Tolbert pushes the limitations of new games on old consoles - Nathan Tolbert is a lead research software engineer at the National Center for Supercom…

I have extended my Steam similarity app to show the popularity of tags compared to their popularity among all studied games.

I also added community detection between games. I used the previously estimated similarities to create a weighted graph of similarities, and then I ran a few community detection algorithms …
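One way to read "popularity compared to all studied games" is as a ratio: a tag's share among some selection of games (for example, games found similar to a query) divided by its share across the whole dataset. The sketch below assumes that interpretation; the function names `tag_share` and `tag_lift` and the list-of-tag-lists input format are hypothetical, not taken from the app.

```python
from collections import Counter


def tag_share(games):
    """Fraction of games in `games` that carry each tag (each game is a list of tags)."""
    counts = Counter(tag for tags in games for tag in set(tags))
    n = len(games)
    return {tag: c / n for tag, c in counts.items()}


def tag_lift(subset, all_games):
    """Ratio of each tag's share in `subset` to its share among `all_games`.

    Assumes every game in `subset` also appears in `all_games`.
    Values above 1.0 mean the tag is over-represented in the subset.
    """
    overall = tag_share(all_games)
    local = tag_share(subset)
    return {tag: local[tag] / overall[tag] for tag in local}


# Hypothetical example data: four games, two of which form the selection.
all_games = [["rpg", "indie"], ["rpg"], ["shooter"], ["indie", "shooter"]]
subset = [["rpg", "indie"], ["rpg"]]
lift = tag_lift(subset, all_games)
```

Here `rpg` ends up with a lift of 2.0 (every selected game has it, versus half of all games), while `indie` sits at 1.0, i.e. no more popular in the selection than overall.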
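The post does not say which community detection algorithms were used. As one illustration, here is weighted label propagation over a similarity graph built from `(game_a, game_b, score)` triples, in plain Python with no graph library. The triple format, the `threshold` cut-off and the function names are assumptions for this sketch.

```python
from collections import defaultdict


def build_graph(similarities, threshold=0.0):
    """Undirected weighted adjacency map from (a, b, score) triples.

    Pairs scoring at or below the (hypothetical) `threshold` are dropped.
    """
    graph = defaultdict(dict)
    for a, b, score in similarities:
        if score > threshold:
            graph[a][b] = score
            graph[b][a] = score
    return graph


def label_propagation(graph, max_iter=100):
    """Weighted label propagation: each node repeatedly adopts the label with
    the highest total edge weight among its neighbours until nothing changes."""
    labels = {node: node for node in graph}
    for _ in range(max_iter):
        changed = False
        for node in sorted(graph):
            weights = defaultdict(float)
            for neighbour, w in graph[node].items():
                weights[labels[neighbour]] += w
            # max() keeps the first maximum, so ties go to the smallest label.
            best = max(sorted(weights), key=lambda lab: weights[lab])
            if best != labels[node]:
                labels[node] = best
                changed = True
        if not changed:
            break
    communities = defaultdict(set)
    for node, label in labels.items():
        communities[label].add(node)
    return sorted(communities.values(), key=sorted)


# Hypothetical similarity scores: two tight groups joined by one weak link.
edges = [
    ("A", "B", 0.9), ("B", "C", 0.8), ("A", "C", 0.7),
    ("D", "E", 0.9), ("E", "F", 0.8), ("D", "F", 0.6),
    ("C", "D", 0.1),
]
communities = label_propagation(build_graph(edges))
```

In the example, the weak 0.1 edge between C and D is outweighed by the stronger ties inside each group, so two communities come out. Label propagation is fast, but its result can depend on update order, which is one reason to compare several algorithms.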

Get ready for an extraordinary gaming experience that will make you laugh until you cry and leave you smiling for hours! 🎮💨

Welcome to the world of A Story About Farting - a game that will transform your app…

First of all, I would like to start by saying a big thank you to the dozen or so people who joined this project on the many platforms it was shown on; you weren’t forgotten, so much so that this entry is mostly dedicated to those involved. That said, it might be useful for other devs as well.

It was mostly k…

Our Steam page is finally up! If you are interested, please go wishlist it here. Not much was done on my part this week, so once again I will be talking about my side project. For context, feel free to read my previous posts about it. You can find my last one here.

So today I will be talking m…

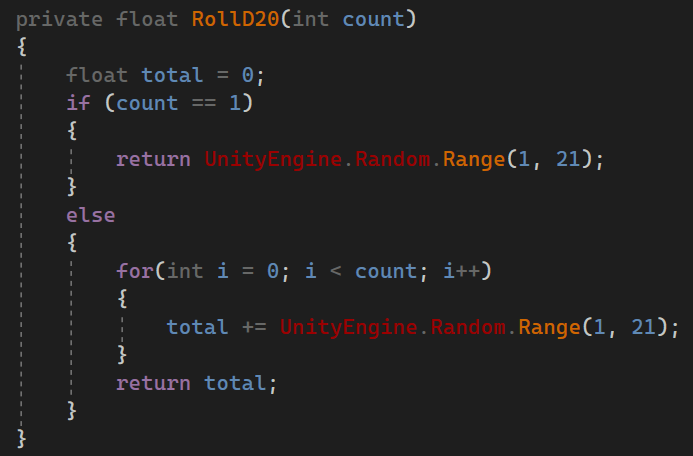

This week was a little light for me as other classes’ final projects have begun, but I have set the groundwork for what I hope will become a fairly robust project in the next few weeks!

This week, I created a custom dice roller for a 1st Edition Pathfinder game I am playing. So far it isn’t …
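The post does not show how the dice roller works. A minimal sketch of the core idea, assuming standard "NdS+K" dice notation (for example `1d20+5` for an attack roll), might look like the following; the `roll` function and the regex-based parser are hypothetical, not the author's implementation.

```python
import random
import re

# Hypothetical notation: optional die count, "d", side count, optional +/- modifier.
DICE_PATTERN = re.compile(r"^\s*(\d*)d(\d+)\s*([+-]\s*\d+)?\s*$")


def roll(expression, rng=random):
    """Roll dice such as "1d20+5" and return (total, individual_rolls)."""
    match = DICE_PATTERN.match(expression.lower())
    if not match:
        raise ValueError(f"unrecognised dice expression: {expression!r}")
    count = int(match.group(1) or 1)  # "d20" means one die
    sides = int(match.group(2))
    modifier = int(match.group(3).replace(" ", "")) if match.group(3) else 0
    if count < 1 or sides < 1:
        raise ValueError("need at least one die with at least one side")
    rolls = [rng.randint(1, sides) for _ in range(count)]
    return sum(rolls) + modifier, rolls


# Example: three six-sided dice plus two, with a seeded generator.
total, rolls = roll("3d6+2", rng=random.Random(42))
```

Passing a seeded `random.Random` as `rng` makes rolls reproducible for testing; the module-level `random` is used by default.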

![General structure and design of the Acclimate Engine [Part 3]](https://uploads.gamedev.net/profile/photo-thumb-170374.png)

Welcome back. Last time, we looked at the runtime extensibility of the Acclimate Engine (https://www.gamedev.net/blogs/entry/2277862-general-structure-and-design-of-the-acclimate-engine-part-2/). Now we are going to see how this all applies to scene management.

The basics

In general, scene management …

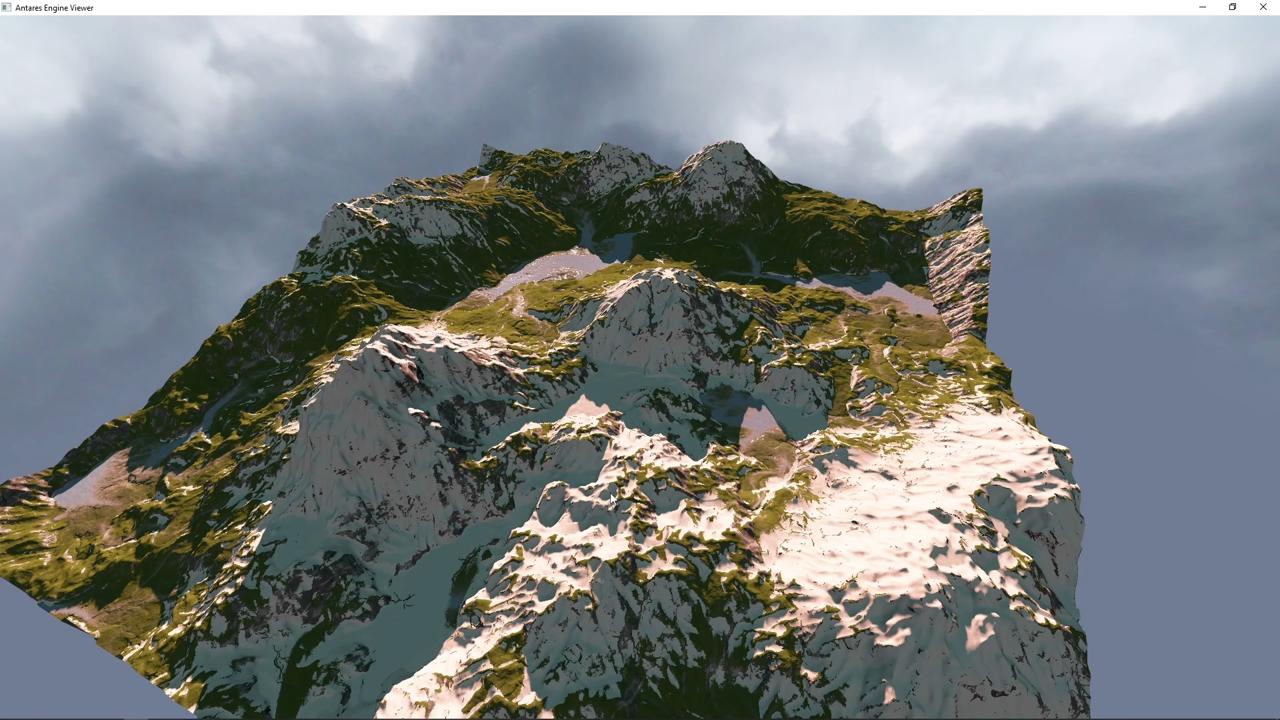

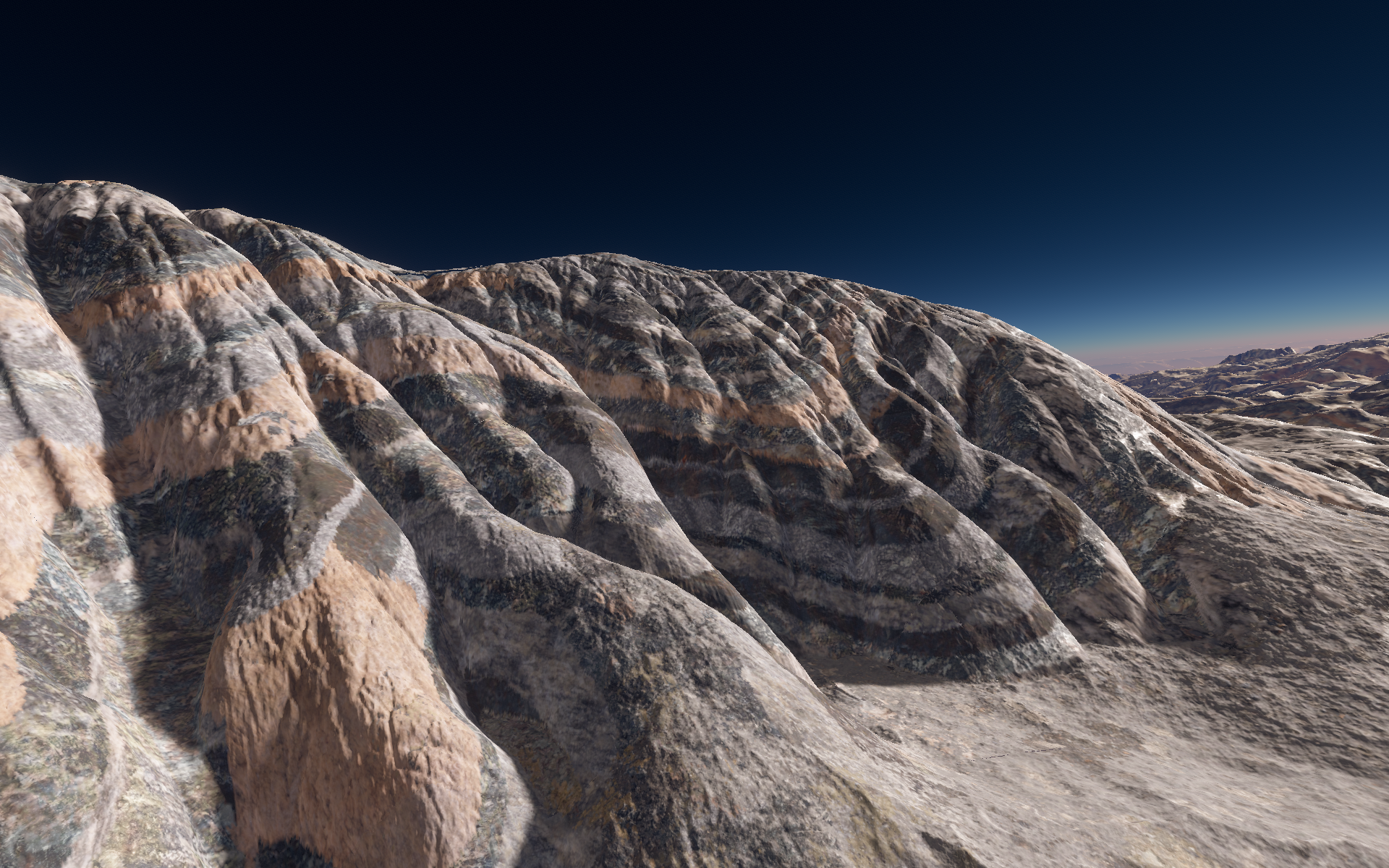

In this blog post I will describe how my planet terrain erosion simulator handles rock layers and their deformation. Here is a picture of the current results:

You can clearly see the multiple layers and their deformation. Each type of layer has its own erosion intensity, which influences how the…
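The excerpt only states that each layer type has its own erosion intensity. A minimal sketch of how a per-layer intensity could shape erosion in a single terrain column is below, assuming a top-down stack of layers and an erosion "budget" that harder rock consumes more slowly. The `Layer` fields, the budget model and `erode_column` are all hypothetical, and deformation of layers is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    rock_type: str
    thickness: float    # remaining thickness of this layer in the column
    erodibility: float  # hypothetical per-type erosion intensity, must be > 0


def erode_column(layers, erosion_budget):
    """Remove material from the top of a column (layers[0] is the surface).

    `erosion_budget` is how much material would be removed from rock with
    erodibility 1.0; harder layers (lower erodibility) lose proportionally less.
    Modifies `layers` in place and returns the total thickness removed.
    """
    removed_total = 0.0
    while layers and erosion_budget > 0:
        top = layers[0]
        potential = erosion_budget * top.erodibility
        if potential < top.thickness:
            # The budget runs out inside this layer.
            top.thickness -= potential
            removed_total += potential
            erosion_budget = 0.0
        else:
            # Layer is stripped away; carry the unused budget to the layer below.
            removed_total += top.thickness
            erosion_budget -= top.thickness / top.erodibility
            layers.pop(0)
    return removed_total


# Soft sandstone over hard granite: the sandstone goes, the granite barely erodes.
column = [Layer("sandstone", 1.0, 2.0), Layer("granite", 10.0, 0.25)]
removed = erode_column(column, erosion_budget=2.0)
```

Because erosion slows once it reaches a resistant layer, running this per cell over a heightmap would tend to leave hard layers standing out as ledges, which is the kind of effect visible in layered terrain.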

The concept of health points comes from old tabletop games such as Dungeons and Dragons. It's a simple abstraction of real-world complexities. With computers keeping track of the game, it should be possible to do something much more interesting.

Health bar as user interface

For a typical action g…

Update 5: How to write C++ variables for Unreal Blueprint; UPROPERTY Basics

What's wrong with just putting EditAnywhere on everything? Here I explain the different markup keywords (specifiers) for configuring UPROPERTY variables for the editor.

The reason you don't want to use EditAnywhere on everything, i…

This article was originally published on GameDevDigest.com

A look at the artistic side of things. Enjoy!

Level up: exploring the artistic side of video game design - Existing in parallel to a world of hyperrealistic CGI is a growing segment of gaming using illustration and painterly art to make mesme…