Tinting is harder to implement than I thought.

taby said:

Tinting is harder to implement than I thought.

Looking at your orange sphere, I can see it reflects the blue wall with a blue tint, which seems wrong and is probably the source of confusion.

If the orange material has RGB color (1, .5, 0) and you multiply it by incoming blue light (0, .1, 1), the result is (0, .05, 0): almost nothing, a bit of green, but no blue.

That's the case for a perfect Lambert Diffuse material.
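A minimal sketch of that component-wise multiplication in Python, with colors as plain RGB tuples (the helper name `modulate` is illustrative, not from any library):

```python
def modulate(albedo, light):
    """Component-wise (per-channel) product of a surface color and incoming light."""
    return tuple(a * l for a, l in zip(albedo, light))


orange = (1.0, 0.5, 0.0)      # albedo of the orange material
blue_light = (0.0, 0.1, 1.0)  # light arriving from the blue wall

reflected = modulate(orange, blue_light)
print(reflected)  # (0.0, 0.05, 0.0): a little green, no blue
```

Each channel can only keep what the surface color lets through, so a material with zero blue albedo cannot return blue light through diffuse reflection, whatever the light color is.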

For PBS it's more complicated: it assumes a small fraction of light reflects off the surface immediately, without scattering inside the material, so that light does not take on the material's color.

So the orange material can reflect a tiny bit of blue light, but usually not enough to be visible.

In this case the orange is the albedo of the material, and the direct reflectance color is usually a small constant that defines the F0 term, which is used later to calculate the specular term:

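```glsl
// Base reflectance at normal incidence (F0): about 4% for dielectrics such as plastic
vec3 F0 = vec3(0.04);
// Blend toward the albedo as the material becomes metallic, so metals tint their reflections
F0 = mix(F0, albedo, metallic);
```

Code from LearnOpenGL.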

If the material is pure metal rather than orange plastic, there is no such small white reflectance that would let it reflect a bit of any color.

Instead, the albedo color is used for everything, and thus tints everything, simply due to the component-wise color multiplication.
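To make the plastic vs. metal difference concrete, here is a minimal Python sketch that mirrors the `mix()` line above and feeds F0 into Schlick's Fresnel approximation, F = F0 + (1 - F0)(1 - cos θ)^5. It only looks at the Fresnel factor (no distribution or geometry terms), and the function names are illustrative assumptions, not LearnOpenGL's code:

```python
def mix(a, b, t):
    """GLSL-style mix(): per-channel linear interpolation a*(1-t) + b*t."""
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


def fresnel_schlick(cos_theta, f0):
    """Schlick's approximation per channel: F = F0 + (1 - F0) * (1 - cos_theta)^5."""
    k = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * k for f in f0)


def surface_reflection(albedo, metallic, light, cos_theta):
    """Light reflected right at the surface (Fresnel factor only)."""
    f0 = mix((0.04, 0.04, 0.04), albedo, metallic)
    return tuple(f * l for f, l in zip(fresnel_schlick(cos_theta, f0), light))


orange = (1.0, 0.5, 0.0)
blue_light = (0.0, 0.1, 1.0)

# Viewed head-on (cos_theta = 1) and at a grazing angle (cos_theta = 0)
plastic = surface_reflection(orange, metallic=0.0, light=blue_light, cos_theta=1.0)
metal = surface_reflection(orange, metallic=1.0, light=blue_light, cos_theta=1.0)
metal_grazing = surface_reflection(orange, metallic=1.0, light=blue_light, cos_theta=0.0)
print("plastic:", plastic)
print("metal:", metal)
print("metal, grazing:", metal_grazing)
```

Head-on, the orange plastic returns about 4% of the blue light untinted, while the orange metal returns no blue at all because its F0 is the albedo itself. Near grazing angles Schlick pushes every channel toward 1, so even the metal reflects some blue there.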

EDIT: It would be a good idea if those tutorial authors showed light sources of multiple colors, instead of using just 4 white lights! :D

You do some strange things with hue to model diffraction effects, iirc.

Maybe that complexity confuses you at this point, when it comes to doing simpler things.

Also, note that using a constant 0.04 for every non-metal is a compromise to reduce the required data. It would be a waste to store individual parameters in textures when those values tend to be close to constant in reality.
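Why a single constant is a reasonable compromise: for a dielectric with refractive index n in air, reflectance at normal incidence is F0 = ((n - 1) / (n + 1))^2. A small Python sketch; the index values are approximate, typical figures chosen for illustration:

```python
def f0_from_ior(n, n_outside=1.0):
    """Normal-incidence Fresnel reflectance of an interface between two dielectrics."""
    return ((n - n_outside) / (n + n_outside)) ** 2


# Approximate refractive indices of a few common dielectrics
for name, n in [("water", 1.33), ("glass/plastic", 1.5), ("dense glass", 1.7)]:
    print(f"{name:14s} n={n:.2f}  F0={f0_from_ior(n):.3f}")
```

Across this range F0 only moves between roughly 0.02 and 0.07, and n = 1.5 lands exactly on 0.04, which is why one shared value works well enough for most non-metals.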

This simple PBR model also uses hand-designed functions that fit measured real-world materials as well as possible with minimal instructions. This is not physics simulation, only inspired by physics, aiming for virtual materials that look realistic. It's good enough, but it's not laws of physics set in stone.
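Schlick's approximation is a good example of such a fitted function: it replaces the full Fresnel equations with a fifth-power polynomial anchored at F0. A minimal Python comparison, assuming unpolarized light going from air into a dielectric of index 1.5:

```python
import math


def fresnel_exact(cos_i, n):
    """Unpolarized Fresnel reflectance from air (index 1) into a dielectric of index n > 1."""
    sin_t = math.sqrt(max(0.0, 1.0 - cos_i * cos_i)) / n  # Snell's law
    cos_t = math.sqrt(1.0 - sin_t * sin_t)
    rs = (cos_i - n * cos_t) / (cos_i + n * cos_t)  # s-polarized amplitude
    rp = (n * cos_i - cos_t) / (n * cos_i + cos_t)  # p-polarized amplitude
    return 0.5 * (rs * rs + rp * rp)


def fresnel_schlick(cos_i, n):
    """Schlick's fit: F0 + (1 - F0) * (1 - cos_i)^5."""
    f0 = ((n - 1.0) / (n + 1.0)) ** 2
    return f0 + (1.0 - f0) * (1.0 - cos_i) ** 5


n = 1.5
cosines = [i / 100 for i in range(101)]
worst = max(abs(fresnel_exact(c, n) - fresnel_schlick(c, n)) for c in cosines)
print(f"max |exact - Schlick| for n={n}: {worst:.4f}")
```

Both agree exactly at normal and grazing incidence; in between, the fit is close but not identical, which is the "good enough, not physics" trade-off in a nutshell.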

Besides realism, another advantage is having a material standard, so we can exchange assets across multiple engines and they should still look the same everywhere, which eases content creation.

Vilem Otte said:

Whenever working with importance sampling and various BRDFs, first go back to the beginning, and that's naive path tracing. Let your integrator (which should be correct!) run until you get a smooth result and work from there; this is going to be your ground truth.

@taby Agreed. So if you have not yet reproduced the Cornell Box scene as specified, to compare and validate results, that's still something you should do, possibly first. Only then do you know your stuff is right.

(Unfortunately the specs give colors in a spectral representation, so doing this is not as easy as it sounds. Reproducing scenes in Blender etc. is another option.)

I'm not pedantic about correctness in general.

For example, the Fresnel term has ruined lots of visuals of the PS4 generation, and it's still a problem now.

Devs have a tendency to insist on correctness in a stubborn way, even if results look like shit, which they do.

It looks bad because they use things like static reflection probes, so while the fancy material model is right, the incoming light is still wrong.

As an artist, I might just decide to ditch the Fresnel term. Looking good is more important than looking correct.

But before we can make such an artistic decision, we want to see reference images to know what we're talking about.

And as a programmer, I want a correct reference too, to reduce doubt and to see the error of my fast approximations, which helps decide what's acceptable and what needs improvement.

So I think there is no way around correctness and proof, at least at some point along our path.

JoeJ said:

I'm not pedantic about correctness in general.

I know it's a bit of “generic senior advice”, but it depends. My view is: if you aim for photorealistic rendering or simulation, you NEED correctness.

For non-photorealistic (stylized) rendering, some laws can help (like energy conservation), but they are not necessary. Who cares whether your lighting is energy conserving when you're rendering an anime-like game?

Either is fine as an artistic direction (you can also mix them). Speaking of art, it is not necessary to follow any rules there; it is entirely up to the artist how to express themselves.

JoeJ said:

Reproducing scenes in Blender etc. is another option.

Reproducing the same scenario across different integrators and post-processing pipelines is a lot more challenging than one thinks.

I attempted this in my BSc. and MSc. theses back in the day, and it took a lot more time to end up with a proper setup. That's why I suggested building as simple an integrator as possible that will eventually converge to the ground truth (by throwing a large number of samples at it).

Vilem Otte said:

If you aim for photorealistic rendering or simulation, you NEED correctness.

What i mean is, you want correctness. But what if you can't have it?

Probably there is no general answer. Whatever you do, somebody will have a problem with the error. For me that's SSAO and faked reflections; for others it's TAA smearing, the blurry vaseline look, DOF or motion blur, low-res textures, etc. Everybody has a problem with something.

I can disable many things in the graphics options, so indeed I tend to turn SSAO off.

But nobody lets me turn off the Fresnel term.

This is not fair, sniff. In a time when every minority gets attention and support from virtual society, I deserve my Fresnel off button too <:¬|

You must know, I also hate the very similar effect of rim lighting used to highlight objects, or even characters /:O\

They do this so I can see I could possibly interact with something, what a surprise!

But I have to vomit when I see this! It causes me eye cancer!! It looks like spilled shit, eaten three times!!!

Please don't do it anymore, dear gamedevs!

I promise, I will find those interactive objects and collectibles without your clumsy attempts to help!

hehehehe :D

Vilem Otte said:

That's why I suggested building as simple an integrator as possible that will eventually converge to the ground truth (by throwing a large number of samples at it).

But how do you know your ground truth is correct? Doing it slow but simple only decreases the chance of bugs; it does not confirm anything.

What worked for me: using a model of the Cornell Box scene, using only a simple Lambert diffuse material for everything, and disabling any image processing (gamma, tone mapping, exposure, color management, etc.). Also make sure there is no constant ambient light turned on somewhere. (Blender, seriously, uses the background color from the 3D modeling viewport; after I turned it from grey to black, I got my proper reference render.)

This gave me a good reference, and the images looked the same as mine, so I was confident enough that my Lambert is correct.
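"Looks the same" can also be backed by a number, and the same number shows why image processing has to be off (or identical) on both sides. A minimal Python sketch, with images as flat lists of linear float values; the pixel values are hypothetical, and `rmse` and `linear_to_srgb` are illustrative helpers, not from any particular tool:

```python
import math


def linear_to_srgb(c):
    """sRGB encoding (IEC 61966-2-1) of a single linear channel value in [0, 1]."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def rmse(image_a, image_b):
    """Root-mean-square difference between two equally sized images (flat lists of floats)."""
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of values")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(image_a, image_b)) / len(image_a))


# Hypothetical linear pixel values: a reference render and your own render of the same scene
reference = [0.20, 0.45, 0.05, 0.80]
mine = [0.21, 0.44, 0.05, 0.79]

print("both linear:      ", rmse(reference, mine))
# Same render, but one side went through sRGB encoding: the encoding dominates the error
print("one side encoded: ", rmse(reference, [linear_to_srgb(c) for c in mine]))
```

A linear value of 0.5 encodes to roughly 0.735, so a forgotten gamma step on one side swamps any real difference between the renderers. Which threshold counts as "the same" is a judgment call; with a noisy Monte Carlo reference, expect a nonzero floor from noise alone.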

But I do not know how to do the same for PBR, because every DCC tool has its own slightly different approach here. I've heard some people use Mitsuba, but I did not try it since Lambert is actually all I need.

JoeJ said:

But how do you know your ground truth is correct? Doing it slow but simple only decreases the chance of bugs; it does not confirm anything.

This is tricky: how do you guarantee that Blender (or rather, your setup) is correct? That's actually much harder than what I suggested (for exactly the reasons I mentioned above).

Why?

It is necessary to understand what the algorithm is trying to solve: it is trying to solve some form of the rendering equation.
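One common hemispherical form of the rendering equation, written out for reference: the outgoing radiance $L_o$ at point $\mathbf{x}$ in direction $\omega_o$ is the emitted radiance $L_e$ plus the incoming radiance $L_i$, weighted by the BRDF $f_r$ and the cosine term, integrated over the hemisphere $\Omega$ around the surface normal $\mathbf{n}$:

```latex
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o)
  + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_i(\mathbf{x}, \omega_i)\, (\omega_i \cdot \mathbf{n})\, \mathrm{d}\omega_i
```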

Nothing more, nothing less. It is just looking for a solution of this “simple” integral equation for each pixel of the camera. Or rather, we are trying to approximate the solution of this equation with a numerical integrator. Solving it with a simple Monte Carlo random walk is straightforward and converges to the exact solution in the limit of infinitely many samples.

Of course we don't need infinitely many samples (the numerical precision of the value representation limits what more samples could add anyway), and we are okay with something that's visually indistinguishable from the actual solution.
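The "simple Monte Carlo converges to the exact solution" argument can be checked on a case with a known answer: a Lambertian surface (BRDF = albedo / π) under a uniform environment of radiance L reflects exactly albedo · L. A minimal Python sketch; the scene, function name and values are assumptions for illustration, not taken from the thread:

```python
import math
import random


def estimate_lambert_radiance(albedo, env_radiance, samples, rng):
    """Monte Carlo estimate of L_o for a Lambertian surface lit by a uniform environment.

    Directions are sampled uniformly over the hemisphere (pdf = 1 / (2*pi)); for that
    distribution cos(theta) is uniform in [0, 1], so it is drawn directly.
    """
    brdf = albedo / math.pi
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()
        total += brdf * env_radiance * cos_theta / pdf
    return total / samples


rng = random.Random(1234)
albedo, env = 0.7, 1.0
exact = albedo * env  # analytic answer: (albedo / pi) * env * pi
for n in (16, 256, 4096, 65536):
    est = estimate_lambert_radiance(albedo, env, n, rng)
    print(f"{n:6d} samples: estimate {est:.4f}, error {abs(est - exact):.4f}")
```

The error typically shrinks roughly like 1/sqrt(N), so each extra digit of accuracy costs about 100 times more samples. This is why "visually indistinguishable" is the practical stopping point.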

So, to answer your question: you can only guarantee that your ground truth is correct if you prove mathematically that your approximation is a solution of the rendering equation (in the form you use).

JoeJ said:

What i mean is, you want correctness. But what if you can't have it?

Then you clearly (as you said) have to cut corners. The problem is how far you go.

You can simplify the rendering equation to suit your needs (e.g. use only direct lighting, use some rough approximation for indirect lighting, etc.). What you describe is cutting corners in a way that impacts visual quality in one way or another.

I was never a fan of SSAO, nor of TAA with washed-out frames, nor of upscaling that results in terrible image quality. When making a game (which is different from rendering systems/simulators, where we aim for accuracy), performance and visually pleasing results are more important than accuracy.

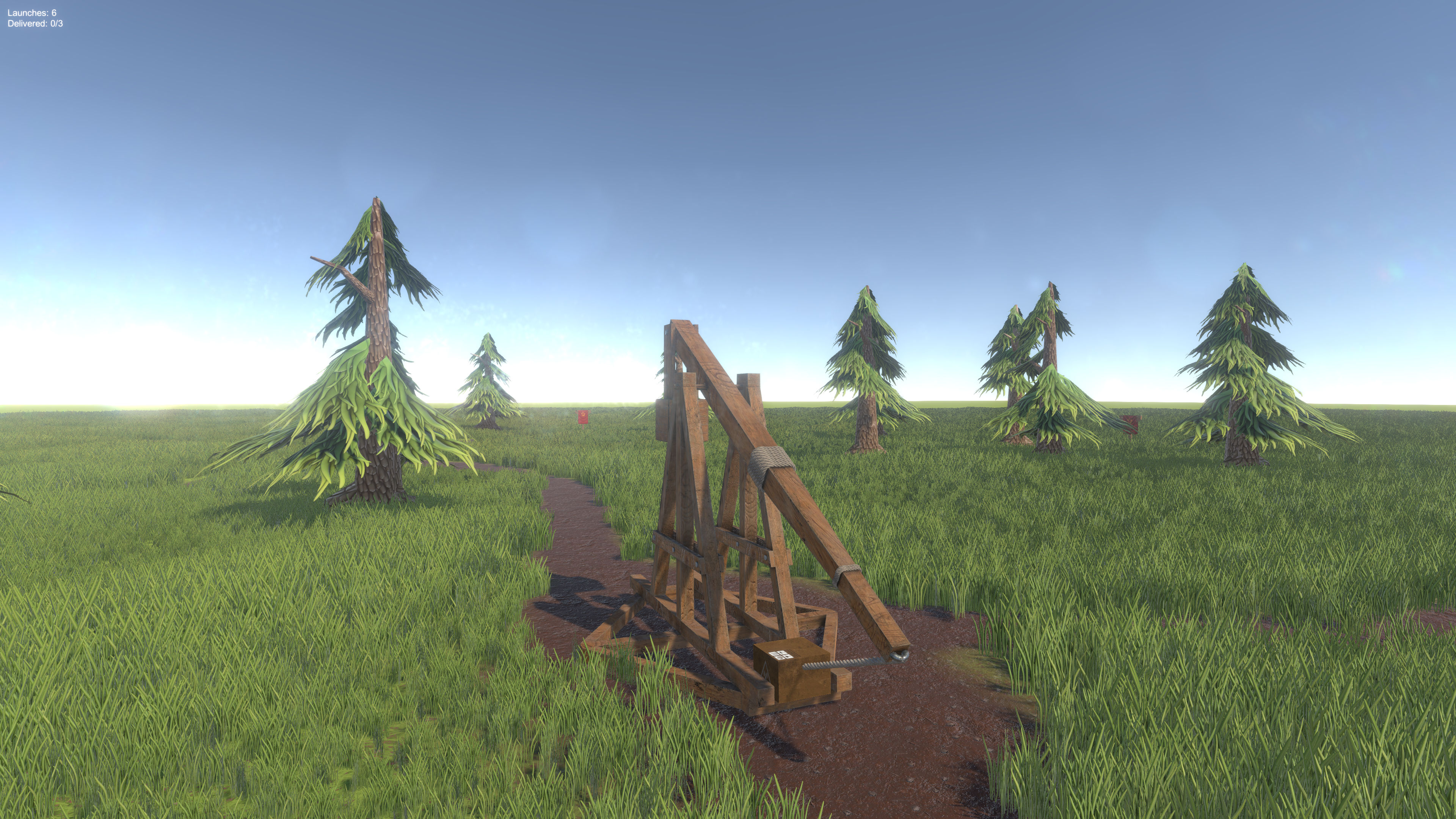

I could output here images from simulators which are accurate, but extremely boring. Or I could output here this:

It's just from a Ludum Dare game I made; I also made the art over that weekend (programmer's art level 9999). But it runs fast enough even on lower-end hardware. No SSAO, just some bloom, shadow maps, a bit of Blinn-Phong shading, and some basic ambient light with reasonable values.

Is it accurate? Hell no!

Does it look visually pleasing? In my subjective opinion, and regarding the time-frame I had - yes (although this is going to be subjective - 10 men, 10 different opinions).
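As an example of "not accurate, but pleasing", here is a minimal per-pixel sketch of classic Blinn-Phong with a constant ambient term, in Python with tuples instead of shader vectors. The function and parameter names are illustrative, not taken from the game's code:

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def blinn_phong(normal, to_light, to_eye, diffuse_color, specular_color, shininess, ambient):
    """Constant ambient + Lambert diffuse + half-vector specular, per RGB channel."""
    n = normalize(normal)
    l = normalize(to_light)
    v = normalize(to_eye)
    n_dot_l = max(dot(n, l), 0.0)
    half = tuple(a + b for a, b in zip(l, v))  # unnormalized half vector
    spec = 0.0
    if n_dot_l > 0.0 and dot(half, half) > 0.0:  # no highlight on the unlit side
        spec = max(dot(n, normalize(half)), 0.0) ** shininess
    return tuple(ambient * d + d * n_dot_l + s * spec
                 for d, s in zip(diffuse_color, specular_color))


color = blinn_phong(normal=(0.0, 0.0, 1.0), to_light=(0.0, 0.0, 1.0), to_eye=(0.0, 0.0, 1.0),
                    diffuse_color=(0.8, 0.3, 0.1), specular_color=(0.2, 0.2, 0.2),
                    shininess=32.0, ambient=0.1)
print(color)
```

Nothing here conserves energy or models Fresnel; the shininess exponent and the ambient constant are simply tuned until the result looks right, which is exactly the trade-off described above.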

Vilem Otte said:

So, to answer your question: you can only guarantee that your ground truth is correct if you prove mathematically that your approximation is a solution of the rendering equation (in the form you use).

I see.

But tbh, I trust the expertise of the Blender devs more than my ability to read the rendering eq. ; )